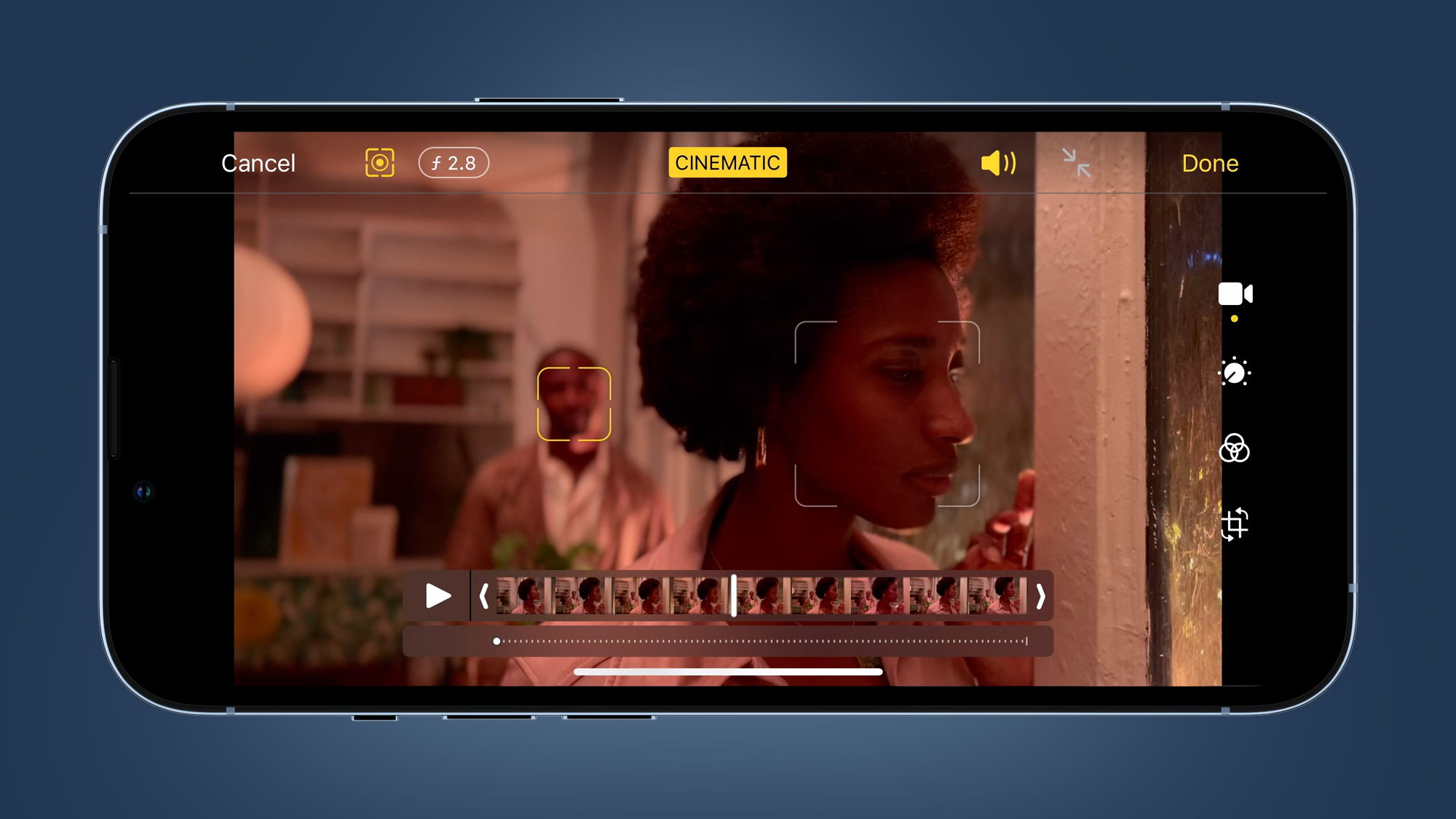

What is Cinematic mode? The iPhone 13’s new video focusing trick explained

How promising is Apple's new video mode?

The iPhone 13 series brings with it a number of hugely impressive camera upgrades. All four phones get larger photosites (the pixels on the sensor), topping out with the 1.9-micron pixels of the iPhone 13 Pro. All four also now have sensor-based mechanical stabilization for the primary camera, a feature only the iPhone 12 Pro Max had last year.

This is all great stuff, but Cinematic mode is perhaps the most eye-catching update.

Cinematic is a video mode that’s available to all four iPhone 13 phones, and it’s intended for people who want to take the framing and cinematography of their videos a bit more seriously.

Apple brought in Oscar-winning director Kathryn Bigelow and Emmy-winning cinematographer Greig Fraser to big the feature up at the phones’ launch.

This may seem like overkill, but the 2015 film Tangerine was shot on an iPhone 5S, which has a tiny 1/3-inch 8MP sensor and tops out at 1080p, 30fps video recording. The movie’s director, Sean Baker, went on to make The Florida Project – although not on an iPhone on that occasion.

Cinematic mode is designed to act like a virtual focus puller: the crew member who works alongside a camera operator, making sure the right parts of the picture are in focus and perfectly sharp.

Of course, many modern cameras have this to some extent. We now take features like face detection – where if someone walks into frame, the focus will be drawn to their features – for granted. Cinematic mode goes much further, though, in several areas.

Predictive focusing

The first area is to do with predicting where the focus needs to head to next. In its demo, Apple showed the camera focusing back and forth between people as one looks away from the camera. In another scene someone enters the frame from the left, and the camera quickly transitions focus to their face, almost seeming to start the transition before they were fully visible.

This suggests that Cinematic mode’s focus behavior may be influenced by the ultra-wide camera, which in this scenario is not actually used to capture footage.

The ultra-wide becomes a sort of director of photography, if one whose production notes are written a fraction of a second before they’re enacted. This should give your videos a better sense of deliberation, even if it is automated.

It’s not yet clear if the above is the case, and it’s possible that the primary camera does all the focus work. Either way, we’re looking at an autofocus system that uses algorithms informed by Apple’s study of cinematography. The choices it makes about when to change focus, and what to focus on, are deliberately filmic.

Focus pixels, the phase-detection pixels built into the sensor, are essential here too. For autofocus to look natural and deliberate, it has to avoid the ‘seeking’ effect seen in contrast-detection AF. This is where the autofocus motor overshoots the point of focus and pulls back, with the focus appearing to wobble before it hits its mark.
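To see why contrast detection seeks, it helps to remember that it can only judge sharpness at a lens position it has already reached. The Python sketch below is a deliberately simplified model, not how any iPhone works: the `sharpness` list is a made-up contrast score for each lens position, and the search only moves in one direction. The motor has to step past the sharpest position before it can tell it has gone too far, and then pull back.

```python
def contrast_detect_af(sharpness: list[float], start: int) -> list[int]:
    """Hill-climb through lens positions, returning every position visited.

    Simplified model: searches in one direction only, one step at a time.
    """
    pos = start
    visited = [pos]
    while pos + 1 < len(sharpness):
        trial = pos + 1          # the motor moves before it can measure the result
        visited.append(trial)
        if sharpness[trial] <= sharpness[pos]:
            visited.append(pos)  # contrast dropped, so we overshot: pull back
            break
        pos = trial
    return visited


# Hypothetical contrast scores, peaking at lens position 3.
print(contrast_detect_af([1, 3, 6, 9, 7, 4], start=0))
```

For this sample curve the search visits positions 0, 1, 2 and 3, overshoots to 4, then settles back on 3. That back-and-forth is the wobble. Phase detection avoids the extra step because a single reading tells it which way, and roughly how far, to move.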

All the iPhone 13 phones have dual-pixel AF, a phase-detection autofocus method that allows for seek-free focus even with limited lighting – although in Apple’s own demos some of the focus changes don’t land with the confidence of a pro focusing manually.

Depth of field

Shallow depth of field is the thing that will really make your Cinematic mode videos look truly different, though. This is like the iPhone’s Portrait photo mode, brought over to video.

The background can be blurred to a far more pronounced extent than the lens itself is capable of. While the iPhone 13 Pro has a wide-aperture f/1.5 lens, the tiny scale of these lenses means they can only really throw the background out of focus ‘naturally’ with very close-up subjects. Cinematic mode does it with software.

This isn’t the first time background blur has been added for video. The Huawei Mate 20 Pro had it in 2018, while Samsung’s S10 phones and Note 10 had Live Focus Video in 2019.

However, the results in these cases were pretty patchy, and the features were never developed into something useful enough for people actually interested in shooting short, film-like pieces on their phones.

Cinematic mode offers just that, and also lets you alter the extent of the blurring effect after you shoot, by selecting an aperture value, or f-stop. As with real-world lens depth of field, the smaller the f-stop number, the more blurred the background will be.
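Cinematic mode’s virtual f-stop imitates real optics. As a rough illustration of why a smaller f-number means more blur, here is the standard thin-lens formula for the size of the blur circle that an out-of-focus point makes on the sensor. Apple hasn’t said how its software maps the f-stop setting to blur, so treat this as the optical behavior being imitated, not Apple’s algorithm. The 5.7mm focal length and the distances are assumed, illustrative figures.

```python
def blur_circle_mm(focal_mm: float, f_number: float,
                   focus_mm: float, object_mm: float) -> float:
    """Thin-lens blur-circle diameter on the sensor (mm) for a point at
    object_mm when the lens is focused at focus_mm."""
    aperture_mm = focal_mm / f_number                  # entrance pupil diameter
    magnification = focal_mm / (focus_mm - focal_mm)   # at the focus distance
    return aperture_mm * magnification * abs(object_mm - focus_mm) / object_mm


# Hypothetical phone-sized lens focused on a subject 1m away,
# with the background 5m away.
for n in (1.5, 2.8, 4.0):
    print(f"f/{n}: {blur_circle_mm(5.7, n, 1000, 5000):.4f} mm")
```

The blur circle shrinks as the f-number rises, which is the relationship the f-stop control in Cinematic mode borrows. A point sitting exactly at the focus distance gets no blur at all.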

Apple says you can change the point of focus after shooting too. And here’s the first slightly crunchy part.

The iPhone 13’s Cinematic mode can make parts of the image blurrier, but it can’t make out-of-focus parts appear sharp. This is where the phones’ small lenses actually become a benefit: their naturally wide depth of field means that even if you switch to a subject that isn’t perfectly in focus, it should still look sharp in motion next to parts of the image that are deliberately thrown out of focus.
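The ‘naturally wide depth of field’ point can be put into numbers with the standard hyperfocal-distance approximation. This sketch compares an assumed phone-style lens (5.7mm at f/1.5, with a 0.0065mm circle of confusion for a small sensor) against an assumed 50mm f/1.5 lens on a full-frame camera (0.03mm circle of confusion), both focused 2m away. These are illustrative inputs, not Apple specifications.

```python
def depth_of_field_mm(focal_mm: float, f_number: float,
                      coc_mm: float, focus_mm: float) -> tuple[float, float]:
    """Near and far limits of acceptable sharpness (mm), thin-lens approximation."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm >= hyperfocal:
        far = float("inf")  # everything out to infinity is acceptably sharp
    else:
        far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return near, far


phone = depth_of_field_mm(5.7, 1.5, 0.0065, 2000)
full_frame = depth_of_field_mm(50, 1.5, 0.03, 2000)
print("phone:", [round(x) for x in phone])
print("full frame:", [round(x) for x in full_frame])
```

With these inputs the phone keeps roughly 1.25m to 5m acceptably sharp, while the full-frame lens holds only about 14cm around the subject. That deep zone of sharpness is what gives Cinematic mode room to pick a new subject after the fact.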

And it’s not as if plenty of films haven’t made it into cinemas with slightly out of focus moments anyway.

There was a camera that let you genuinely change the point of focus post-shoot: the Lytro Illum. It used a light field sensor, which records the direction of incoming light as well as its intensity, rather than just a ‘flat’ representation of a scene. However, it couldn’t shoot video, its image quality wasn’t great, and the company shut down after its follow-up, the Immerge VR camera, came out.

Apple could theoretically use the ultra-wide camera on your iPhone as a second point of focus, on hand at all times with different focus information should you wish to change subject post-shoot. However, this would likely result in more questionable-looking results, as you’d drop down to the cropped view of a camera with a narrower lens aperture, smaller sensor pixels and a slightly different view of a scene.

Cinematic mode will also offer manual controls over the point of focus, rather than letting the software do all the work, because you probably don’t want to automate too much of the creative process.

The iPhone 13 will let you lock focus to a subject, or manually select the focus point if Cinematic mode doesn’t do what you want it to.

Tapping the screen to do this may introduce visible jolts, even if you use a tripod, but all four iPhone 13s have sensor stabilization, which should all but eradicate such jolts from footage if you’re careful enough with your gestures.

How it works

Questions remain as to exactly how parts of Cinematic mode work. But here’s what we think, and hope, is going on.

The iPhone 13 and iPhone 13 mini must use parallax to determine the various depths and distances present in a scene. This is where the feeds from the wide and ultra-wide cameras are compared: because the two lenses sit slightly apart, near objects shift position between the two views more than distant ones do, and those discrepancies let the phone separate near objects from ones further away.
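Here is a minimal sketch of the parallax idea, assuming two side-by-side cameras whose images have already been rectified and scaled to match (the real wide and ultra-wide cameras have different fields of view, so that step is far from trivial). It finds how far a small patch has shifted between one row of each image using a sum-of-absolute-differences search, then converts that shift, the disparity, into distance with depth = focal length × baseline ÷ disparity. The focal length in pixels, the 10mm baseline and the pixel rows are hypothetical; Apple hasn’t published how its pipeline works.

```python
def find_disparity(left: list[int], right: list[int], x: int,
                   window: int = 2, max_disparity: int = 8) -> int:
    """Pixel shift that best matches the patch around left[x] within right."""
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if x - d - window < 0:
            break  # candidate patch would fall off the edge of the image
        cost = sum(abs(left[x + k] - right[x - d + k])
                   for k in range(-window, window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_mm(focal_px: float, baseline_mm: float, disparity_px: float) -> float:
    """Pinhole stereo: nearer objects produce larger disparities."""
    if disparity_px == 0:
        return float("inf")  # no shift at all: effectively at infinity
    return focal_px * baseline_mm / disparity_px


# One row from each camera; the right camera sees the feature 3 pixels further left.
left_row = [0, 0, 0, 0, 0, 0, 0, 0, 5, 9, 2, 7, 0, 0, 0, 0, 0, 0, 0, 0]
right_row = left_row[3:] + [0, 0, 0]
d = find_disparity(left_row, right_row, x=10)
print(d, depth_mm(focal_px=1500, baseline_mm=10, disparity_px=d))
```

Doubling the disparity halves the estimated distance, which is why parallax is most reliable for nearby subjects and grows less precise for the distant background.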

It may also analyze motion between frames. If you move the camera across a scene and assume that the objects themselves are static, you can judge their relative distance from how far each one moves frame-to-frame. However, it seems unlikely that Cinematic mode relies on this entirely, as it wouldn’t work when the iPhone 13 is mounted on a static tripod.
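The same arithmetic applies to motion between frames, with the camera’s own movement standing in for the gap between two lenses. A minimal sketch, assuming the phone slides sideways by a known amount between frames and the object stays still. A pure rotation about the lens produces no parallax at all, so the movement has to include some sideways travel, and on a locked-off tripod there is nothing to measure, which is the tripod problem described above. The numbers are hypothetical.

```python
from typing import Optional


def depth_from_motion_mm(focal_px: float, camera_move_mm: float,
                         image_shift_px: float) -> Optional[float]:
    """Estimate a static object's distance from how far it shifts between frames.

    Assumes a sideways camera translation; returns None when there is no
    camera movement, or no measurable shift, to work from.
    """
    if camera_move_mm == 0 or image_shift_px == 0:
        return None
    return focal_px * camera_move_mm / image_shift_px


# Hypothetical 20mm sideways drift between frames.
print(depth_from_motion_mm(1500, 20, 6))  # near object: large shift
print(depth_from_motion_mm(1500, 20, 2))  # far object: small shift
print(depth_from_motion_mm(1500, 0, 0))   # tripod: no movement, no estimate
```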

The iPhone 13 Pro and iPhone 13 Pro Max have another tool – LiDAR. This fires pulses of infrared light, outside the range of human vision, and measures how long the light takes to bounce off objects and return to the sensor, building a 3D map of a space.
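LiDAR’s ranging rests on one simple relationship: light travels at a known speed, so a pulse’s round-trip time gives the distance, halved because the light goes out and back. A minimal sketch; a real scanner does this for many points at once and has to cope with noise and ambient light.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def distance_m(round_trip_s: float) -> float:
    """Distance to a surface from a light pulse's round-trip time."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2


def round_trip_ns(distance: float) -> float:
    """Round-trip time in nanoseconds for a surface `distance` metres away."""
    return 2 * distance / SPEED_OF_LIGHT_M_PER_S * 1e9


print(f"{round_trip_ns(1.0):.2f} ns")  # round trip for a subject 1m away
```

The timescale shows how precise the timing has to be: an error of a single nanosecond shifts the distance estimate by about 15cm.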

We hope, and would expect, that this is used in the Pro phones’ version of Cinematic mode, as it seems perfect for the job. However, this would also mean that the effectiveness of the blurring effect, and its ability to deal with subjects that have a complicated outline, may vary between Pro and non-Pro iPhone 13s, and Apple hasn't mentioned anything about LiDAR being involved in the process.

Cinematic mode’s limitations

Cinematic mode records video in Dolby Vision HDR, and you may be able to use ProRes video later in the year, when support arrives in an iOS 15 update. We’ve got a separate feature on Apple ProRes to bring you up to speed on that format.

However, Cinematic mode’s resolution and frame rate are limited to 1080p, 30fps. ‘Normal’ HDR video with Dolby Vision can be recorded at an impressive 4K/60fps.

That’s going to disappoint those who already shoot everything in 4K. In a way, though, we find it reassuring: it shows that Cinematic mode is not a simple trick. Even with the Apple A15 Bionic’s 16-core Neural Engine, the CPU – or something else – acts as a bottleneck, bringing the capture resolution below that of even budget Android phones.

We’re slightly concerned, though, by the character of the focus shifts in Apple’s demos, which resemble a stills camera focusing as quickly as possible. To get the real ‘rack focus’ effect seen in films, you’d need the option to slow down the focus motor so that it strolls to its destination rather than leaping there – and as far as we can tell so far, there’s no control over that.
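The kind of control we’d like to see is easy to describe. This hypothetical sketch plans a slow rack focus: it works out a focus distance for every frame over a chosen duration, easing in and out with a smoothstep curve so the pull starts and finishes gently. It interpolates in diopters (1 ÷ distance in metres), a design choice on our part that roughly matches how lens focus travel behaves at distances much larger than the focal length. None of this is an Apple API.

```python
def rack_focus(start_m: float, end_m: float, duration_s: float,
               fps: int = 30) -> list[float]:
    """Focus distance (metres) for each frame of an eased rack focus."""
    frames = max(1, round(duration_s * fps))
    start_dpt, end_dpt = 1 / start_m, 1 / end_m  # diopters
    path = []
    for i in range(frames + 1):
        t = i / frames
        eased = t * t * (3 - 2 * t)  # smoothstep: slow start, slow finish
        path.append(1 / (start_dpt + (end_dpt - start_dpt) * eased))
    return path


# A two-second pull from a subject at 1m to one at 4m, at Cinematic mode's 30fps.
plan = rack_focus(1.0, 4.0, 2.0)
print(len(plan), round(plan[0], 2), round(plan[30], 2), round(plan[-1], 2))
```

Halfway through the pull the focus sits at 1.6m rather than 2.5m, because the move is spread evenly in diopters rather than in metres, so it doesn’t rush through the near range where focus changes are most visible.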

Apple hasn’t confirmed whether Cinematic mode is coming to any of the last-generation iPhone 12 phones either. It seems unlikely, though, given that Apple has plenty of reasons not to bring it to those handsets: they have last-generation CPUs, and their vertically aligned cameras may affect their ability to judge parallax on the fly. For now, we’re just looking forward to seeing what creative people make with the iPhone 13’s Cinematic mode when they get their hands on it.

Andrew is a freelance journalist and has been writing and editing for some of the UK's top tech and lifestyle publications including TrustedReviews, Stuff, T3, TechRadar, Lifehacker and others.