How it works: the complete guide to HD

Deinterlacing methods

Deinterlacing isn't a single, simple algorithm. Much research has gone into improving the quality of deinterlacing, but the processing remains time-consuming and complex: the display has to buffer incoming fields, process them into frames and then show them.

There are three key deinterlacing systems: field combination deinterlacing, field extension deinterlacing and motion compensation.

The first, field combination, combines two successive fields to form a frame. The simplest approach, weaving the odd and even scan lines together in order, produces combing effects on anything that moved between the two fields, so there are other techniques, such as blending (averaging adjacent lines) and selective blending (averaging adjacent lines only where there is motion, and weaving them where the picture doesn't change). The problem with the blending techniques is that the resulting video tends to look soft or blurred.
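To make the three field-combination techniques concrete, here is a minimal sketch on tiny greyscale pictures. It assumes a frame is a list of rows of pixel values, that the top field holds frame lines 0, 2, 4... and the bottom field lines 1, 3, 5..., and that motion is judged by comparing the bottom field with the previous frame's bottom field; the `threshold` value is a hypothetical sensitivity, not something taken from a real deinterlacer.

```python
# Field-combination deinterlacing on greyscale pictures (illustrative sketch).
# Assumed representation: a field or frame is a list of rows, each row a list
# of pixel values; the top field holds frame lines 0, 2, 4... and the bottom
# field holds lines 1, 3, 5...


def weave(top, bottom):
    """Interleave two fields line by line into one full-height frame."""
    frame = []
    for top_row, bottom_row in zip(top, bottom):
        frame.append(list(top_row))
        frame.append(list(bottom_row))
    return frame


def blend(top, bottom):
    """Weave, then average every line with the line below it.

    This hides combing on moving objects but softens the whole picture.
    """
    woven = weave(top, bottom)
    out = []
    for y, row in enumerate(woven):
        below = woven[min(y + 1, len(woven) - 1)]  # last line pairs with itself
        out.append([(p + q) / 2 for p, q in zip(row, below)])
    return out


def selective_blend(top, bottom, prev_bottom, threshold=10):
    """Blend only where the bottom field changed since the previous frame.

    `prev_bottom` is the previous frame's bottom field and `threshold` is a
    hypothetical motion sensitivity; static pixels are simply woven.
    """
    woven = weave(top, bottom)
    blended = blend(top, bottom)
    out = []
    for y, row in enumerate(woven):
        f = y // 2  # the field line that frame line y belongs to
        out.append([
            blended[y][x]
            if abs(bottom[f][x] - prev_bottom[f][x]) > threshold
            else row[x]
            for x in range(len(row))
        ])
    return out
```

Selective blending keeps the full sharpness of weaving wherever nothing moved, and only pays the softening cost of blending in the areas where combing would otherwise appear.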

The second system, field extension, either treats each field as a frame in its own right (a half-height one, essentially) so that other techniques such as edge detection or sharpening can come into play, or doubles each line in each field. The latter is known as the line-doubling technique, and its problem is that stationary objects in the video tend to bob up and down, while the vertical resolution is halved.
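The bobbing effect of line doubling is easy to reproduce. This sketch assumes the same list-of-rows representation as a plain greyscale picture, splits a static four-line picture into its two fields, and line-doubles each one; the picture itself is a made-up example.

```python
def split_fields(frame):
    """Return (top, bottom): the even-numbered and odd-numbered lines."""
    return frame[0::2], frame[1::2]


def line_double(field):
    """Repeat every line of a half-height field to fill a full-height frame."""
    frame = []
    for row in field:
        frame.append(list(row))
        frame.append(list(row))  # the copy stands in for the missing line
    return frame


# A static bright bar covering lines 1 and 2 of a four-line picture.
picture = [[0], [255], [255], [0]]
top, bottom = split_fields(picture)
print(line_double(top))     # [[0], [0], [255], [255]]  bar on lines 2-3
print(line_double(bottom))  # [[255], [255], [0], [0]]  bar on lines 0-1
```

Although nothing in the picture moves, the bar appears on lines 2 and 3 in one output frame and on lines 0 and 1 in the next, which is the up-and-down bob, and each output frame only carries two lines of real detail.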

The final system, motion compensation, blends output from all the others to produce the best possible picture quality. Its algorithms try to predict the direction and speed of motion of objects in the video, minimising both combing and softening.
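Before a motion-compensated deinterlacer can use the direction and speed of motion, it has to estimate them. One common way of doing that is block matching, sketched below under simplifying assumptions: whole greyscale pictures rather than fields, a small square block, and an exhaustive search scored by the sum of absolute differences (SAD). Real products use their own, usually proprietary, estimators; the block size and search range here are hypothetical.

```python
def sad(prev, cur, bx, by, mx, my, size):
    """Sum of absolute differences between the size x size block at (bx, by)
    in `cur` and the block at (bx - mx, by - my) in `prev`."""
    total = 0
    for y in range(size):
        for x in range(size):
            total += abs(cur[by + y][bx + x] - prev[by - my + y][bx - mx + x])
    return total


def estimate_motion(prev, cur, bx, by, size=2, search=2):
    """Exhaustive block matching: return the (mx, my) displacement, in pixels,
    that best explains how the block at (bx, by) moved since `prev`."""
    h, w = len(cur), len(cur[0])
    best = None
    for my in range(-search, search + 1):
        for mx in range(-search, search + 1):
            sx, sy = bx - mx, by - my  # where the block would have come from
            if sx < 0 or sy < 0 or sx + size > w or sy + size > h:
                continue  # candidate falls outside the previous picture
            cost = sad(prev, cur, bx, by, mx, my, size)
            if best is None or cost < best[0]:
                best = (cost, mx, my)
    return best[1], best[2]
```

The returned vector says where each block of the picture came from, so the deinterlacer can take the missing lines from the right place in the neighbouring field instead of from the same position, which is what avoids both combing and softening on moving objects.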

All modern TVs (LCDs and plasmas) are progressive scan systems, but there are still problems once we factor HDTVs into the picture. Consider the standard DVD: it contains video in what's known as 480p format. This is 480 pixels high (each line is stored as 720 pixels, which display as the equivalent of about 640 square pixels for the 4:3 aspect ratio), and uses progressive scanning (the 'p' stands for 'progressive').


Some DVDs are 480i, where 'i' stands for 'interlaced'. A normal DVD player can read either type of disc, decode and decompress the video, and output it using interlaced scan for CRT televisions or progressive scan for LCD and plasma sets.

If we connect the output from such a DVD player to a TV via the analogue inputs (composite or S-Video), we'll get an analogue, interlaced display of the video on the DVD. It won't be completely sharp: the signal is no longer digital, after all.

It's better to pipe the video from your DVD player into an HDTV digitally using the DVI or HDMI connection, and to avoid converting to analogue at all costs. The problem here is the difference in resolution. Suppose your HDTV is 720p. That means it has a resolution of at least 1,280 x 720 pixels for a widescreen display, and uses progressive scan.

Considering only the vertical pixel height, we have to convert 480 lines into 720, unless we want to view the DVD output at its native size in a small window in the middle of the HDTV screen. That means stretching the video vertically by a factor of 1.5 (720 / 480).

Video upscaling

Enter the video upscaler. This device sits somewhere on the path from the DVD player to the HDTV. It can be integrated into either device or can be a separate box altogether, although it's usually part of the DVD player (on the premise that upscaling should be done as close to the source as possible).

What the video upscaler does is analyse the pixel values and interpolate the values of extra pixels between them in order to stretch the 480 lines into 720. This is sometimes known as resampling.
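The first step of any resampler is working out where each output pixel falls in the source picture. The sketch below assumes the common convention of treating each pixel as a sample at its centre; the function names are illustrative, not taken from any particular upscaler.

```python
def scale_factor(src_lines, dst_lines):
    """How much the picture is stretched, e.g. 720 / 480 vertically."""
    return dst_lines / src_lines


def source_position(dst_index, src_size, dst_size):
    """Map an output pixel index to a fractional position in the source.

    Treats each pixel as a sample at its centre (a common convention, assumed
    here). Results just outside 0..src_size-1 are clamped by the interpolator.
    """
    return (dst_index + 0.5) * src_size / dst_size - 0.5


print(scale_factor(480, 720))  # 1.5
for y in range(6):
    print(y, round(source_position(y, 480, 720), 3))
```

For a 480-to-720 stretch, output line 1 lands exactly halfway between source lines 0 and 1, and the pattern repeats every three output lines (two source lines). The fractional part of each position is what the interpolation step has to fill in.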

One of the simplest scaling algorithms is bilinear interpolation. This is an extension of the linear interpolation you probably learned at school. With linear interpolation, you're trying to find the value of a function at some point, given two known values on either side of it.

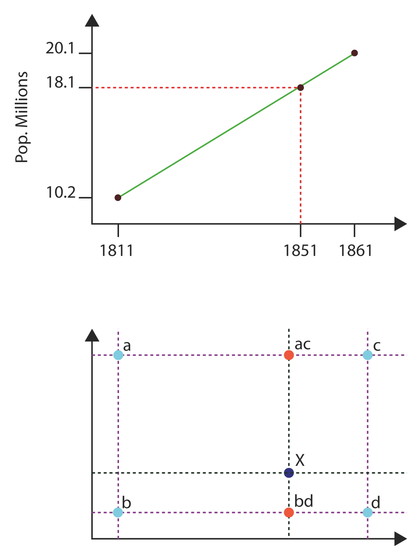

Essentially, you 'draw' a straight line between the two known values and read off the value at the point in question along that line. In the example above, we know the populations of England and Wales in 1811 and 1861, and estimate the population in 1851 using linear interpolation.
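Linear interpolation fits in a couple of lines of code. The numbers below are round placeholder values chosen for illustration, not the census figures plotted in the graph.

```python
def lerp(x0, y0, x1, y1, x):
    """Value at x on the straight line through (x0, y0) and (x1, y1)."""
    t = (x - x0) / (x1 - x0)  # 0 at x0, 1 at x1
    return y0 + t * (y1 - y0)


# Placeholder values, not the census figures from the graph:
# 10.0 (million) in 1811 and 20.0 in 1861.
estimate_1851 = lerp(1811, 10.0, 1861, 20.0, 1851)
print(estimate_1851)  # 18.0
```

Because 1851 is four-fifths of the way from 1811 to 1861, the estimate is four-fifths of the way from the first value to the second.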

Bilinear interpolation moves that algorithm into two dimensions: you know the values of the function (in our case, colour values) at the corners of a square and you want to find the value at some point within the square.

Look at the bottom graph above, where you're trying to find the value at x given the values at a, b, c and d. You perform a linear interpolation across the top of the square (between corners a and c, giving the point ac) and across the bottom (between corners b and d, giving bd), then linearly interpolate between those two new points (from ac to bd) to get the value at x.
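The three interpolations just described translate directly into code. This sketch assumes a is the top-left corner, c the top-right, b the bottom-left and d the bottom-right (the article names the edges but not the exact layout), with `fx` and `fy` measured from 0 at the left/top edge to 1 at the right/bottom edge. The `sample_bilinear` helper, a hypothetical name, applies the same idea to a greyscale image at a fractional position, clamping at the picture edges.

```python
import math


def lerp1(p, q, t):
    """Linear interpolation from p (t = 0) to q (t = 1)."""
    return p + t * (q - p)


def bilinear(a, b, c, d, fx, fy):
    """a = top-left, c = top-right, b = bottom-left, d = bottom-right."""
    ac = lerp1(a, c, fx)      # along the top edge
    bd = lerp1(b, d, fx)      # along the bottom edge
    return lerp1(ac, bd, fy)  # between the two edge points


def sample_bilinear(img, x, y):
    """Value of a greyscale image (list of rows) at fractional (x, y)."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)  # clamp to the picture
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = math.floor(x), math.floor(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    return bilinear(img[y0][x0], img[y1][x0], img[y0][x1], img[y1][x1],
                    x - x0, y - y0)
```

Each output pixel touches only its four nearest source pixels, which is why bilinear scaling is cheap.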

Bilinear interpolation is simple to code up, but when applied to images as we stretch them to fit a larger resolution, it causes aliasing artifacts and visual defects.

The process of bilinear interpolation relies on four pixel values to calculate another pixel value. This assumes that the values don't vary wildly across the image (we assume the colour function is fairly continuous with no major breaks), but as we know, images and video frames aren't like that. There are object edges where colour changes dramatically – 'breaks' in the smoothness of colour information.

In these cases, we should use more data points in our interpolation. This has the benefit of coping with edges in the original image, and providing a 'smoother' interpolation.

In practice this means moving to a higher-order (cubic) interpolation. For a video upscaler, one of the most popular non-adaptive algorithms is bicubic interpolation, which relies not on the four closest pixels but on the 16 closest (a 4 x 4 block). The pixels closest to the required point carry a higher weighting than those further away, but all 16 are involved in the calculation.

The algorithm is known as non-adaptive because the whole frame is treated equally; no effort is made to identify fast-changing parts of the scene and other video effects.
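Bicubic interpolation comes in several variants. The sketch below uses one common choice, the cubic convolution kernel with the parameter a = -0.5; whether any given upscaler uses this exact kernel is an assumption. It weights a 4 x 4 neighbourhood, clamping at the picture edges. In this variant the outermost weights are small and can be slightly negative, which is what sharpens edges, so real implementations also clamp the result to the valid pixel range.

```python
import math


def cubic_kernel(x, a=-0.5):
    """Cubic convolution weight for a sample at distance x (in pixels)."""
    x = abs(x)
    if x <= 1:
        return (a + 2) * x**3 - (a + 3) * x**2 + 1
    if x < 2:
        return a * x**3 - 5 * a * x**2 + 8 * a * x - 4 * a
    return 0.0


def sample_bicubic(img, x, y, a=-0.5):
    """Value of a greyscale image (list of rows) at fractional (x, y)."""
    h, w = len(img), len(img[0])
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0
    total = 0.0
    for j in range(-1, 3):                    # 4 rows...
        yy = min(max(y0 + j, 0), h - 1)       # clamp at the picture edge
        wy = cubic_kernel(fy - j, a)
        for i in range(-1, 3):                # ...times 4 columns = 16 pixels
            xx = min(max(x0 + i, 0), w - 1)
            total += img[yy][xx] * cubic_kernel(fx - i, a) * wy
    return total
```

Halfway between two pixels the four weights along each axis are -0.0625, 0.5625, 0.5625 and -0.0625: the two nearest pixels dominate, as the article describes, but all 16 pixels take part.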

Non-adaptive algorithms

Bicubic interpolation has the benefit of being fast to calculate (slower than bilinear, but fast enough for video), but it isn't the only non-adaptive algorithm. Others include nearest neighbour, spline, sinc and Lanczos.
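Most of these non-adaptive algorithms differ only in the weighting function (kernel) applied to nearby pixels. The sketch below assumes a one-dimensional row of samples and plugs two of the named kernels, nearest neighbour and three-lobe Lanczos, into the same hypothetical `resample_1d` helper; a 2D scaler would apply it along rows and then columns.

```python
import math


def nearest_kernel(x):
    """1 for the single sample within half a pixel of the target, else 0."""
    return 1.0 if -0.5 <= x < 0.5 else 0.0


def sinc(x):
    """Normalised sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    if x == 0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def lanczos_kernel(x, lobes=3):
    """Sinc windowed by a wider sinc; zero beyond `lobes` pixels."""
    if abs(x) >= lobes:
        return 0.0
    return sinc(x) * sinc(x / lobes)


def resample_1d(samples, pos, kernel, radius):
    """Kernel-weighted value at fractional `pos`, using 2 * radius taps.

    Weights are normalised to sum to 1; indices are clamped at the ends.
    """
    lo = math.floor(pos) - radius + 1
    total = weight_sum = 0.0
    for i in range(lo, lo + 2 * radius):
        idx = min(max(i, 0), len(samples) - 1)
        weight = kernel(pos - i)
        total += samples[idx] * weight
        weight_sum += weight
    return total / weight_sum
```

Nearest neighbour uses one tap and simply copies the closest pixel, which is fastest but blocky; Lanczos with three lobes uses six taps per axis, costing more than bicubic's four in exchange for a sharper result.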

To achieve better visual results, there are many patent-protected scaling and resampling algorithms that detect edges and treat them specially, to minimise visible interpolation defects in those areas.
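The commercial edge-adaptive algorithms are proprietary, so their details aren't reproduced here. Their first step, finding the edges, can be sketched with the standard Sobel operator, which estimates how sharply brightness changes around each pixel. The greyscale list-of-rows representation and the `threshold` value are assumptions for illustration.

```python
def sobel_magnitude(img, x, y):
    """Approximate gradient strength at an interior pixel (x, y)."""
    def p(dx, dy):
        return img[y + dy][x + dx]

    gx = (p(1, -1) + 2 * p(1, 0) + p(1, 1)) - (p(-1, -1) + 2 * p(-1, 0) + p(-1, 1))
    gy = (p(-1, 1) + 2 * p(0, 1) + p(1, 1)) - (p(-1, -1) + 2 * p(0, -1) + p(1, -1))
    return (gx * gx + gy * gy) ** 0.5


def edge_map(img, threshold=100):
    """True where an interior pixel sits on a strong edge; border is False."""
    h, w = len(img), len(img[0])
    return [
        [0 < x < w - 1 and 0 < y < h - 1 and sobel_magnitude(img, x, y) > threshold
         for x in range(w)]
        for y in range(h)
    ]
```

An edge-adaptive scaler could then, for example, interpolate along the direction of the edge at flagged pixels instead of across it, while using an ordinary kernel everywhere else.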

Other algorithms try to detect the motion of objects over several frames and apply processing to make that motion smoother. With video, these algorithms have to be written to spot scene changes rather than mistake them for fast motion.
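One widely used way of telling a scene cut from fast motion is to compare brightness histograms rather than individual pixels: an object moving across the frame shifts pixels around but barely changes how many pixels of each brightness there are, while a cut usually changes the histogram completely. The sketch assumes 8-bit greyscale frames, 16 histogram bins and a hypothetical threshold of 0.5.

```python
def mean_abs_diff(a, b):
    """Average per-pixel difference between two frames."""
    total = count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count


def histogram(frame, bins=16, max_value=256):
    """Count pixels falling into each of `bins` equal brightness ranges."""
    counts = [0] * bins
    for row in frame:
        for p in row:
            counts[p * bins // max_value] += 1
    return counts


def histogram_difference(prev, cur, bins=16):
    """Fraction (0 to 1) of pixels that would have to change bins."""
    hp, hc = histogram(prev, bins), histogram(cur, bins)
    return sum(abs(p - c) for p, c in zip(hp, hc)) / (2 * sum(hp))


def is_scene_cut(prev, cur, threshold=0.5):
    return histogram_difference(prev, cur) > threshold
```

A bright object moving from the left edge of the frame to the right produces a large `mean_abs_diff` but a histogram difference of zero, so it is treated as motion; switching to a completely different scene changes both.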

These algorithms have to be finely tuned for performance; they do, after all, have to scale video at 25 or 30fps.
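A quick back-of-the-envelope calculation shows why. This sketch assumes a 1,280 x 720 output, a single brightness channel and 16 weighted pixels per output pixel (bicubic); real scalers also handle colour and may use more taps.

```python
def pixel_budget(width, height, fps, taps):
    """Time per frame and work per second for a scaler at a given output size."""
    ms_per_frame = 1000 / fps
    pixels_per_second = width * height * fps
    weights_per_second = pixels_per_second * taps  # multiply-adds per second
    return ms_per_frame, pixels_per_second, weights_per_second


print(pixel_budget(1280, 720, 25, 16))  # (40.0, 23040000, 368640000)
```

At 25fps the scaler has 40ms per frame and must produce about 23 million output pixels a second, which with bicubic's 16 weights each comes to roughly 369 million multiply-adds a second for brightness alone.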

The upscaler can't create detail out of nothing, but it can do a good job of converting video without introducing obvious artifacts.

For better video, there's nothing for it but to use Blu-ray discs in a Blu-ray player. These are designed to provide 1080p video at 24 frames per second, and will play on a 1080p HDTV without upscaling.
