Google asked users for their best Nano Banana Pro prompts – these 3 tips stood out

Subtle adjustments for amazing results

Google's enhanced AI image maker Nano Banana Pro uses Gemini 3 to impressive effect for both image editing and graphics creation. There are many ways to push the tool to its limits, and it can produce marvels, but only when guided with care.

In fact, Google recently asked users to share their favorite techniques for writing text prompts for Nano Banana Pro. Amid the complaints about access, there were plenty of intriguing responses that all pointed to the value of a good prompt.

Google responded positively to several of them, including the strategies below, all of which reflect an increasingly sophisticated style of prompting that has emerged around Nano Banana Pro.

We tried the techniques on offer and these three really stood out:

1. Treat the prompt like code with defined variables

One of the more inventive strategies circulating among Nano Banana Pro power-users is to treat the prompt like pseudo-code. You define key elements as variables, and then refer back to those variables as you describe the scene.

Nano Banana Pro doesn’t understand variables as programming entities, but it does recognize that a defined label establishes conceptual boundaries. The model tends to treat such labels as stable containers, reducing the drift that sometimes occurs when prompts rely solely on repeated natural-language references.

This method works exceptionally well for complex arrangements, such as technical diagrams, multi-object still-life setups, product sequences, or anything requiring internal consistency. When you establish variables such as OBJECT_A, TEXTURE_B, or LIGHTING_C, Nano Banana Pro tends to keep each distinct without accidentally merging their attributes or resizing them illogically. Structure becomes clearer, and the model has a pathway to maintain proportional, spatial, and stylistic coherence throughout the generation.

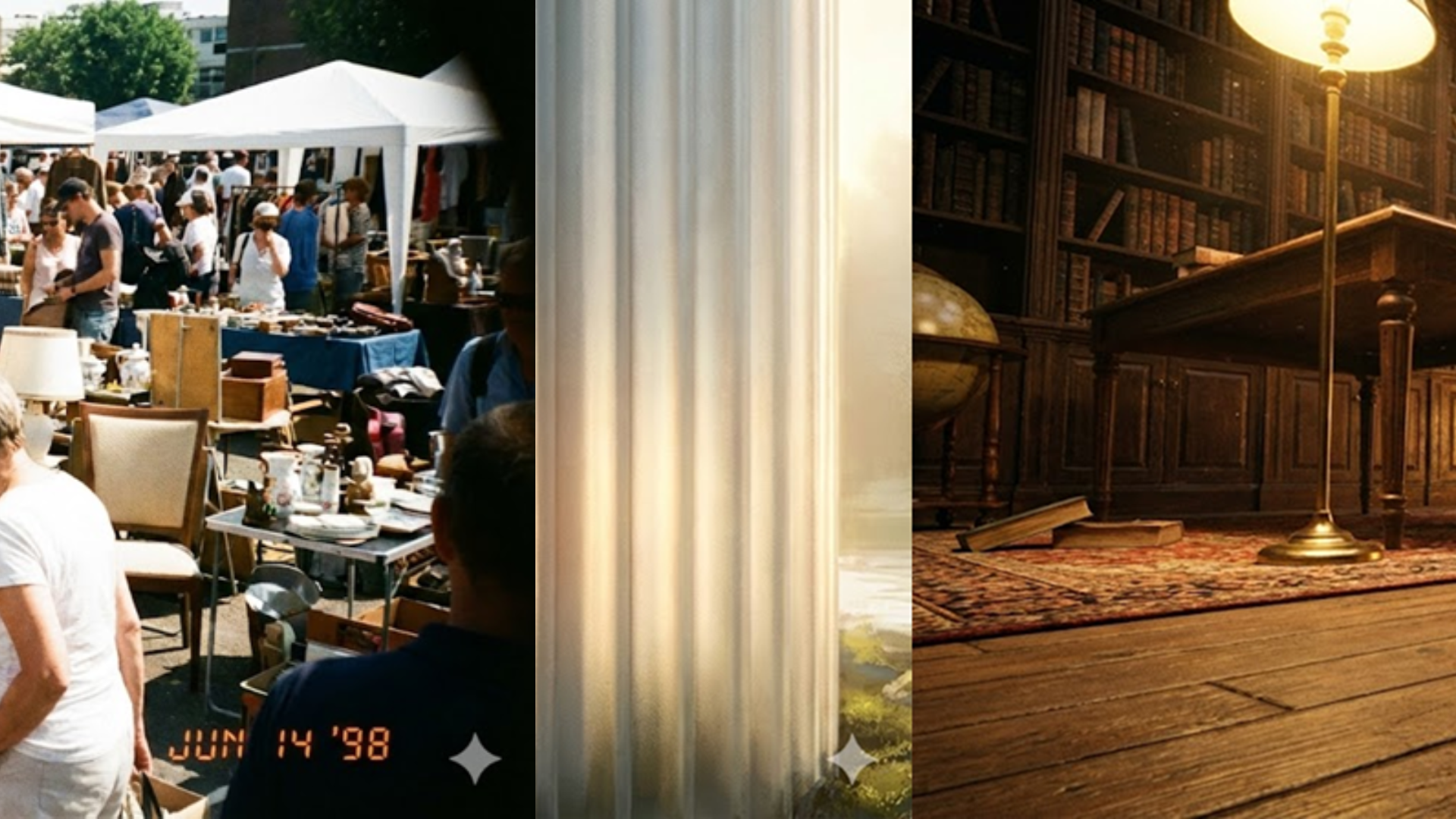

So, for a prompt, I wrote, "Define SHAPE_X as a tall cylindrical tower with ribbed sides. Define TEXTURE_Y as frosted glass. Create an illustration where SHAPE_X incorporates TEXTURE_Y across its full surface." You can see the result above.
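If you reuse this pattern often, the bookkeeping can be scripted. Here is a minimal sketch in Python, assuming you assemble the prompt text yourself and then paste it into Nano Banana Pro. The helper name `build_variable_prompt` is hypothetical and it calls no image API; it only builds the string and checks that every label you define is actually used.

```python
def build_variable_prompt(definitions: dict[str, str], instruction: str) -> str:
    """Prefix an instruction with one 'Define NAME as ...' sentence per label.

    Hypothetical helper for illustration: it only builds text.
    """
    # Simple substring check: flag labels that are defined but never referenced.
    unused = [name for name in definitions if name not in instruction]
    if unused:
        raise ValueError(f"defined but never referenced: {unused}")

    defines = " ".join(
        f"Define {name} as {description}."
        for name, description in definitions.items()
    )
    return f"{defines} {instruction}"


prompt = build_variable_prompt(
    {
        "SHAPE_X": "a tall cylindrical tower with ribbed sides",
        "TEXTURE_Y": "frosted glass",
    },
    "Create an illustration where SHAPE_X incorporates TEXTURE_Y "
    "across its full surface.",
)
print(prompt)
```

With these inputs the script reproduces the prompt quoted above word for word. The unused-label check is a design choice of this sketch, not something the model requires.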

2. Ask for imperfections

Nano Banana Pro excels at delivering clean, clinical, studio-perfect imagery. That’s ideal for mockups or technical illustrations, but when the goal is to mimic the texture of real photographic work, those flawless images can look strangely sterile. Strategically adding imperfections bridges that gap and gives the model permission to reproduce the quirks of human-made images.

Imperfections act as realism cues. A tiny smear on the lens, a subtle handshake blur, a faint light leak, or uneven exposure introduces the kind of incidental detail that physical cameras routinely create. These elements are texture. They convey history, motion, mood, and the sort of visual entropy that makes real photos feel grounded.

In documentary-style generations, asking for “slight handheld shake consistent with a hurried capture” produces dynamic motion that reads naturally. In atmospheric street scenes, requesting “minor dust particles on the lens catching stray light” adds that lived-in aesthetic characteristic of older glass.

Scientific illustrations can benefit too, especially when the goal is to mimic the imperfections of archival photography; slight grain or inconsistent chemical development patterns nudge the images closer to period authenticity.

Nano Banana Pro interprets these imperfections with surprising subtlety. The key is specificity: identify the kind of imperfection and how it should manifest rather than simply saying “make it imperfect.” This gives the model structure without diluting realism.

My prompt to demonstrate the technique, as seen above, was "a crowded flea market at midday, rendered with minor overexposure in bright areas and a subtle vignette as if taken with an aging compact camera."
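The "be specific" rule is easy to enforce if you build prompts programmatically. The sketch below is one way to do it in Python, assuming a plain text prompt as the end product. The function name `add_imperfections`, its parameters, and the small list of vague phrases are all hypothetical choices for illustration, and no image API is called.

```python
# Phrases too vague to guide the model (an illustrative, non-exhaustive list).
VAGUE_REQUESTS = {"imperfect", "make it imperfect", "add imperfections"}


def add_imperfections(scene: str, imperfections: list[str], source: str = "") -> str:
    """Append named imperfections, and optionally their cause, to a scene."""
    if not imperfections:
        raise ValueError("name at least one specific imperfection")
    for item in imperfections:
        if item.strip().lower() in VAGUE_REQUESTS:
            raise ValueError(f"too vague, describe how it should look: {item!r}")

    # Join as "a", "a and b", or "a, b and c".
    if len(imperfections) == 1:
        joined = imperfections[0]
    else:
        joined = ", ".join(imperfections[:-1]) + " and " + imperfections[-1]

    prompt = f"{scene}, rendered with {joined}"
    if source:
        prompt += f" as if taken with {source}"
    return prompt + "."


prompt = add_imperfections(
    "a crowded flea market at midday",
    ["minor overexposure in bright areas", "a subtle vignette"],
    source="an aging compact camera",
)
print(prompt)
```

Run as written, this prints the flea market prompt quoted above. Swapping in a different `source`, such as an old film camera, is a quick way to iterate on the look while keeping the scene fixed.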

3. Shift perspectives

Perspective blending, also referred to as “doubling the instructions,” is a method of asking Nano Banana Pro to integrate multiple viewpoints or interpretive angles into one cohesive image.

Rather than instructing the model to show two views side-by-side, you fold the secondary viewpoint into the scene's conceptual fabric. Nano Banana Pro responds by subtly reshaping composition, lighting, or spatial cues to incorporate both perspectives simultaneously.

The technique deepens the frame, enriches spatial storytelling, and reveals elements that would not emerge from a single vantage point alone.

It's useful for scenes requiring layered information. By embedding a second directive that reframes the scene, you essentially ask the model to harmonize two conceptual constraints rather than prioritizing one over the other.

You can see how it works in the image above from the prompt for an “interior of a classical library, warm lamplight, subtly recomposed with spatial cues suggesting how the same room would appear when viewed from the floor at mouse height.”
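The library prompt follows a reusable shape: a primary scene, followed by a clause that folds in a second viewpoint instead of asking for a separate panel. Here is a minimal Python sketch of that shape, assuming text-only prompt assembly. The name `blend_perspective` and its parameters are hypothetical, and the connecting phrase is simply lifted from the prompt above rather than being any official syntax.

```python
def blend_perspective(scene: str, viewpoint: str, subject: str = "scene") -> str:
    """Fold a secondary viewpoint into a single-image prompt.

    The wording deliberately avoids "side-by-side" or "split view" so the
    second perspective is blended into one frame rather than shown separately.
    """
    return (
        f"{scene}, subtly recomposed with spatial cues suggesting how the "
        f"same {subject} would appear when viewed {viewpoint}."
    )


prompt = blend_perspective(
    "interior of a classical library, warm lamplight",
    "from the floor at mouse height",
    subject="room",
)
print(prompt)
```

This reproduces the library prompt quoted above. Changing only the `viewpoint` argument lets you compare how different secondary angles reshape the same scene.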

These Nano Banana Pro strategies offer a toolkit for shaping the model’s considerable imagination with clarity and control. They are not hacks or shortcuts; they are ways of structuring intention so the model can respond with coherence and nuance.

The more thoughtfully users apply these techniques, the more Nano Banana Pro becomes a medium through which ideas can be explored, iterated, and refined with surprising fidelity.

Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.
