How your operating system works

Time management

The operating system also manages how much CPU time each running application gets, so that every one of them can get work done. Most modern PCs have multiple CPU cores, but let's imagine we only have one.

Only one application or process can use that CPU at any one time. To create the illusion of many applications running simultaneously, the OS will switch rapidly back and forth between the current set of programs, giving each one a small timeslice in which to get some work done.

Once the current application has run for a certain amount of time (the timeslice), or has been suspended while waiting for a resource or some form of user input, the OS saves its current state (register values, memory, current execution point), loads the saved state for the next application in line, and starts executing it.

Once that application has completed its timeslice or been suspended, the next process gets its turn on the CPU. This round-robin scheduling continues for as long as the OS is running.
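The round-robin idea can be sketched as a small simulation. This is only an illustrative model, not how any real scheduler is written: the process names, the amounts of work and the `round_robin` function are all made up for the example, and "saving state" is reduced to remembering how much work a process has left.

```python
from collections import deque

def round_robin(work, timeslice):
    """Simulate round-robin scheduling on a single CPU.

    work: dict mapping a (hypothetical) process name to the CPU time units it needs.
    timeslice: maximum units a process may run before it is switched out.
    Returns the list of (name, units_run) turns in the order they happened.
    """
    queue = deque(work.items())              # processes waiting for the CPU
    timeline = []
    while queue:
        name, remaining = queue.popleft()    # "load" the next process in line
        ran = min(timeslice, remaining)      # run until the slice ends or the work is done
        timeline.append((name, ran))
        remaining -= ran
        if remaining > 0:
            queue.append((name, remaining))  # "save" its state and send it to the back
    return timeline

schedule = round_robin({"browser": 5, "editor": 2, "player": 4}, timeslice=2)
for name, ran in schedule:
    print(f"{name} ran for {ran} unit(s)")
```

With a timeslice of 2, the three programs take turns until each has finished, which is what creates the impression that they are all running at once.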

Sometimes an interrupt will occur that needs immediate attention. In that case the current process is paused, its state is saved, and the interrupt is serviced before normal scheduling resumes.

Memory management

Modern 32-bit and 64-bit operating systems provide services that manage memory for user applications, including the virtualisation of memory. In essence, every user application sees exactly the same memory layout.

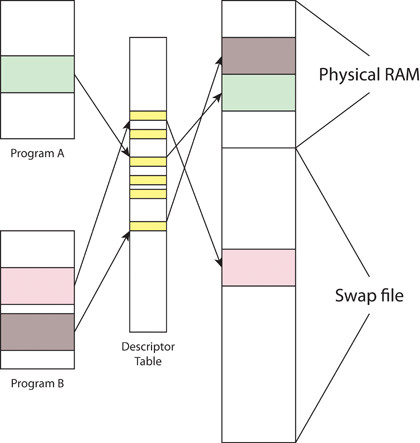

To ensure that different programs don't interfere with each other's memory structures, the OS doesn't provide access to physical memory directly. Instead, it maps user-mode memory through mapping tables, called descriptor tables here (page tables on most current hardware), to either real memory or the swap file on disk.

This gives the operating system a great deal of flexibility: it can move memory blocks around to accommodate other programs' memory requirements without the original program knowing; it can swap memory blocks out to disk if the program isn't being used; and it can defer assigning (or committing) memory until a program actually writes to it.
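Deferred commitment is perhaps the least obvious of these tricks, so here is a toy model of it. Everything in it is an assumption made for illustration: the `LazyMemory` class, its method names and the frame numbers are hypothetical, and a real OS does this work in its page-fault handler rather than in an explicit `write` call.

```python
class LazyMemory:
    """Toy model: memory is reserved on request but only backed on first write."""

    def __init__(self, free_frames):
        self.free_frames = list(free_frames)  # physical frames not yet handed out
        self.reserved = set()                 # pages the program has asked for
        self.committed = {}                   # page -> physical frame actually assigned

    def reserve(self, page):
        # The request succeeds immediately, yet no physical memory is used.
        self.reserved.add(page)

    def write(self, page):
        if page not in self.reserved:
            raise LookupError(f"page {page} was never reserved")
        if page not in self.committed:
            # First write to this page: only now is a real frame assigned.
            self.committed[page] = self.free_frames.pop(0)
        return self.committed[page]

mem = LazyMemory(free_frames=[7, 8])
mem.reserve(0)
mem.reserve(1)
before = dict(mem.committed)   # nothing committed yet
frame = mem.write(1)           # the first write triggers the commit
print(before, frame, mem.committed)
```

The program believes it owns two pages from the moment it reserves them, but only the page it actually writes to costs any physical memory.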

This mapping of memory through descriptor tables also means the OS gives every user application the convenient fiction that it's the only application running on the system. No applications will clash by trying to use the same memory, and an application can't cause another to crash by writing to its memory space.

FIGURE 2: Mapping virtual memory to physical memory and the swap file with a descriptor table

Figure 2 shows two programs, A and B, both with the same view of their memory space. Program A has one block of memory allocated at a particular position in its memory space; in reality it's found somewhere else in physical memory via the descriptor table. Program B has two blocks allocated, one of which is found in the swap file for the system.
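The situation in Figure 2 can be approximated in a few lines. This is a simplified sketch: the 4,096-byte page size, the table contents and the `translate` function are assumptions chosen for the example, and real hardware performs the lookup itself rather than calling a function.

```python
PAGE_SIZE = 4096  # assumed page size in bytes

# Hypothetical mapping tables: virtual page number -> (backing store, index).
# Both programs use virtual page 5, but it lands somewhere different for each.
table_a = {5: ("ram", 12)}
table_b = {5: ("ram", 3), 9: ("swap", 0)}

def translate(table, virtual_address):
    """Turn a program's virtual address into (backing store, real address)."""
    page, offset = divmod(virtual_address, PAGE_SIZE)
    if page not in table:
        raise LookupError(f"virtual page {page} is not mapped")
    where, index = table[page]
    return where, index * PAGE_SIZE + offset

address = 5 * PAGE_SIZE + 16          # the same virtual address in both programs
print(translate(table_a, address))    # resolved through program A's table
print(translate(table_b, address))    # resolved through program B's table
print(translate(table_b, 9 * PAGE_SIZE))  # B's second block lives in the swap file
```

Because each program is resolved through its own table, identical virtual addresses never collide, and neither program can name a location that belongs to the other.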

Files and folders

Another important virtualised service provided by the OS is the filesystem. Disk hardware works on logical block addresses (LBAs), which are essentially sector numbers counted from the beginning of the drive volume.

The disk controller hardware converts the LBA into physical parameters (such as head, track and platter) to find the actual sector. For SSDs, the controller simply converts the LBA into a flash memory address (although SSD controllers will move data blocks around to even out wear on the flash memory, unbeknownst to the OS).
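As a rough illustration of that conversion, the classic cylinder/head/sector (CHS) scheme turns an LBA into physical parameters with simple division. The geometry values below (16 heads, 63 sectors per track) are assumptions for the example; modern drives hide their real geometry, so treat this as the textbook formula rather than what any particular controller does.

```python
def lba_to_chs(lba, heads_per_cylinder, sectors_per_track):
    """Convert a logical block address into (cylinder, head, sector)."""
    cylinder, rest = divmod(lba, heads_per_cylinder * sectors_per_track)
    head, sector = divmod(rest, sectors_per_track)
    return cylinder, head, sector + 1  # sectors are conventionally numbered from 1

# Assumed geometry: 16 heads per cylinder, 63 sectors per track.
for lba in (0, 63, 1008, 1071):
    print(lba, lba_to_chs(lba, heads_per_cylinder=16, sectors_per_track=63))
```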

The operating system hides these raw LBAs from user programs by imposing a hierarchical filesystem over the disk. The filesystem organises the physical disk sectors into files and directories. Each sector is usually 512 bytes in size, although the market is starting to move towards 4KB sectors, since most filesystems already use 4KB as the minimum allocation unit for a file.

The filesystem is responsible for maintaining the mapping between files and blocks, which blocks appear in which files (and in which order they appear), which directory a file is found in, and other similar services.
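A toy version of that bookkeeping might look like the following. The in-memory `disk`, the `file_table` layout and the path are all hypothetical; real filesystems store this metadata on the disk itself in far more elaborate structures.

```python
SECTOR_SIZE = 512  # bytes per sector, matching the common size mentioned above

def make_sector(data):
    """Pad data with zero bytes so it fills exactly one sector."""
    return data.ljust(SECTOR_SIZE, b"\0")

# A toy "disk": LBA -> sector contents. The file's blocks are scattered about.
disk = {
    42: make_sector(b"A" * SECTOR_SIZE),
    7: make_sector(b"B" * SECTOR_SIZE),
    19: make_sector(b"CCC"),
}

# Hypothetical filesystem metadata: which blocks make up a file, and in what order.
file_table = {
    "/docs/notes.txt": {"blocks": [42, 7, 19], "size": 2 * SECTOR_SIZE + 3},
}

def read_file(path):
    """Reassemble a file by reading its blocks in the recorded order."""
    entry = file_table[path]
    data = b"".join(disk[lba] for lba in entry["blocks"])
    return data[: entry["size"]]  # drop the padding in the final sector

content = read_file("/docs/notes.txt")
print(len(content), content[-5:])
```

The caller asks for a path and gets back one continuous run of bytes; the fact that the blocks sit at LBAs 42, 7 and 19 never leaks out.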

The filesystem virtualisation also means that user programs only need to worry about high-level operations with files and folders: creating new ones, deleting existing ones, adding data to the end of a file, reading and writing to files and enumerating the folder contents.
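From a program's point of view, those high-level operations look something like this sketch, which uses Python's standard `pathlib` and `tempfile` modules. The folder and file names are made up, and everything happens inside a temporary directory that is removed afterwards.

```python
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp) / "reports"
    folder.mkdir()                                     # create a new folder

    report = folder / "summary.txt"
    report.write_text("first line\n")                  # create a file and write to it
    with report.open("a") as handle:
        handle.write("second line\n")                  # add data to the end of the file

    contents = report.read_text()                      # read the file back
    listing = sorted(p.name for p in folder.iterdir())  # enumerate the folder contents

    report.unlink()                                    # delete the file
    remaining = list(folder.iterdir())

print(contents, listing, remaining)
```

At no point does the program mention a sector, a block or an LBA; the OS and its filesystem take care of all of that.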

All the mapping between user-friendly names and LBAs is done by the operating system under the hood. To the user program, a file is just a contiguous set of bytes somewhere on the disk; it doesn't have to work out that the file consists of a block over here, followed by that one over there.

APIs

This filesystem abstraction points to another set of services provided by the operating system: the application programming interfaces (also known as APIs).

These are plug-in points that let user programs such as browsers and word processors take advantage of various services exposed by the operating system. These include APIs for memory management, file and folder management, network management, user input (keyboard and mouse), the windowing user interface, multimedia (video and audio) and so on.

In all cases, the API provides a standardised way for user applications to obtain and use resources from the PC, no matter what hardware is actually present. So, for example, a user program doesn't have to know anything about which video adaptor or screen the PC is using in order to display something on it. It merely makes calls to the standard API ('draw a window here of this size') and the adaptor and screen drivers translate those standard requests into calls to the hardware that produce the required result.

That is perhaps the last part of the operating system story. The OS isn't a monolithic program, written to work with every single piece of hardware out there. It is instead a framework into which hardware-specific drivers are plugged.

These drivers know how to access their particular hardware, can translate between standard function calls and the requirements of the device, and are written to use the operating system's APIs.
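The relationship between a standard API call and interchangeable drivers can be sketched as follows. All the names here (`DisplayDriver`, `VendorADriver`, `VendorBDriver`, `ToyOS`) and the "hardware commands" they return are invented for illustration; no real operating system or driver model is being quoted.

```python
from abc import ABC, abstractmethod

class DisplayDriver(ABC):
    """The standard interface every (hypothetical) display driver must implement."""

    @abstractmethod
    def draw_window(self, x, y, width, height):
        """Return the device-specific command that draws the window."""

class VendorADriver(DisplayDriver):
    def draw_window(self, x, y, width, height):
        return f"VENDOR_A RECT {x},{y} {width}x{height}"

class VendorBDriver(DisplayDriver):
    def draw_window(self, x, y, width, height):
        return f"vb:win({x};{y};{width};{height})"

class ToyOS:
    """A framework that forwards standard API calls to whichever driver is plugged in."""

    def __init__(self, display_driver):
        self.display_driver = display_driver

    def draw_window(self, x, y, width, height):
        return self.display_driver.draw_window(x, y, width, height)

def application(os_api):
    # The application only knows the standard call, never the hardware behind it.
    return os_api.draw_window(10, 20, 640, 480)

result_a = application(ToyOS(VendorADriver()))
result_b = application(ToyOS(VendorBDriver()))
print(result_a)
print(result_b)
```

The `application` function is identical in both runs; only the driver plugged into the framework changes, and with it the commands sent to the hardware.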