Twitter flags tweets with manipulated media in India - This is how it works

BJP leader gets the dubious distinction


Twitter has started to label tweets in India that it thinks contain manipulated media, in a bid to battle misinformation. As it happened, the IT cell head of India's ruling party, the BJP, has bagged the dubious distinction of being the first Indian to get flagged for such an indiscretion.

Twitter put the warning on a video that the BJP’s Amit Malviya shared on his Twitter timeline about the protests by farmers against the recent agriculture laws.

Malviya, while responding to India's opposition party leader Rahul Gandhi’s tweet about alleged brutality against the protesting farmers, had put out a video that showed a cop swinging a baton but the farmer escaping the blow. Malviya had captioned the video "propaganda vs. reality".

Self-appointed fact-checking handles on Twitter apparently got in touch with the farmer Sukhdev Singh in Kapurthala district of Punjab. He reportedly said that he had suffered injuries to his arms and legs.

After these details emerged, Twitter flagged Malviya's video as "manipulated media".

In Twitter terms, "manipulated media" is used to refer to an audio-visual piece of content which has been “significantly and deceptively altered or fabricated.”


First case of manipulated media in India

Rahul Gandhi must be the most discredited opposition leader India has seen in a long long time. https://t.co/9wQeNE5xAP pic.twitter.com/b4HjXTHPSx November 28, 2020

This high-profile case is believed to be the first instance of Twitter implementing its labelling policy in India. The social media platform has been following the practice in the US and adding fact-checking notices to tweets by US President Donald Trump.


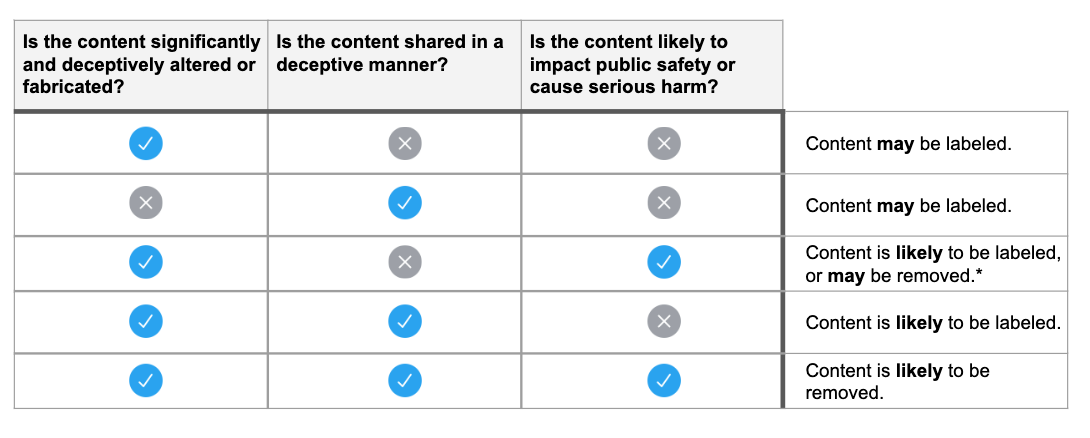

In February 2020, Twitter announced its policy on labelling tweets containing synthetic and manipulated media, including videos, audio and images. It said that such content would be removed if it is “deceptively shared” and poses “serious harm”.

In order to determine the degree of manipulation, Twitter said it would use its own technology or receive reports through partnerships with third parties.

Twitter's rules on manipulated material

Any picture or clip that Twitter tags as "manipulated media" carries a label to that effect at the bottom. If you click on the label, it explains the reasons for flagging the content.

In February, Twitter had clearly laid out how it decides whether a picture or video is manipulated.

To start off, it checks whether the content has been substantially edited in a manner that fundamentally alters its composition, sequence, timing, or framing.

Secondly, Twitter figures out if any visual or auditory information (such as new video frames, overdubbed audio, or modified subtitles) has been added or removed.

And finally, it finds out whether media depicting a real person have been fabricated or simulated.

In deciding these things, Twitter also considers the context in which media are shared. According to Twitter, it looks at whether that context could result in confusion or misunderstanding, or suggests a deliberate intent to deceive people about the nature or origin of the content.

Most such tweets are removed from the timeline. Twitter said "material, synthetic or manipulated, shared in a deceptive manner, and is likely to cause harm, may not be shared on Twitter and are subject to removal."
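For readers who think in code, the checks described above can be read as a simple decision procedure. The sketch below is only an illustration of that reading, not Twitter's actual system: the class `MediaAssessment`, the function `decide` and the three outcome strings are hypothetical names, and each check is assumed to have already been reduced to a yes/no answer.

```python
from dataclasses import dataclass


@dataclass
class MediaAssessment:
    """Hypothetical yes/no answers to the questions described in the article."""
    substantially_edited: bool   # composition, sequence, timing or framing altered
    info_added_or_removed: bool  # e.g. new frames, overdubbed audio, modified subtitles
    person_fabricated: bool      # media depicting a real person fabricated or simulated
    shared_deceptively: bool     # context suggests confusion or an intent to deceive
    likely_to_cause_harm: bool


def decide(a: MediaAssessment) -> str:
    """Return 'none', 'label' or 'remove' (illustrative outcomes, not official terms)."""
    # Assumption: any one of the three checks is enough to count as manipulated.
    manipulated = (
        a.substantially_edited
        or a.info_added_or_removed
        or a.person_fabricated
    )
    if not manipulated:
        return "none"
    # Removal applies when manipulated media is shared deceptively and is likely to harm.
    if a.shared_deceptively and a.likely_to_cause_harm:
        return "remove"
    # Otherwise the sketch assumes the media is only labelled.
    return "label"


if __name__ == "__main__":
    edited_clip = MediaAssessment(True, False, False, False, False)
    print(decide(edited_clip))
```

How Twitter weighs these factors in practice, and what it does in borderline cases, is not spelled out in the article, so the clean three-way split here is a simplification.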

Twitter has also put other tools into place in an attempt to help users discern what information on its platform is inaccurate.

Over three decades as a journalist covering current affairs, politics, sports and now technology. Former Editor of News Today, writer of humour columns across publications and a hardcore cricket and cinema enthusiast. He writes about technology trends and suggests movies and shows to watch on OTT platforms.