YouTube declares war on deepfakes with new tool that lets creators flag AI-generated video clones

A welcome move in the era of online impersonation

- YouTube has launched a deepfake detection tool to help creators identify AI-generated videos using their likeness without consent

- The tool works like Content ID, allowing verified creators to review flagged videos and request takedowns

- Initially limited to YouTube Partner Program members, the feature may expand more broadly in the future

YouTube is starting to take illicit deepfakes more seriously, rolling out a new deepfake detection tool designed to help creators find and remove videos that use AI-generated versions of their likeness without their permission.

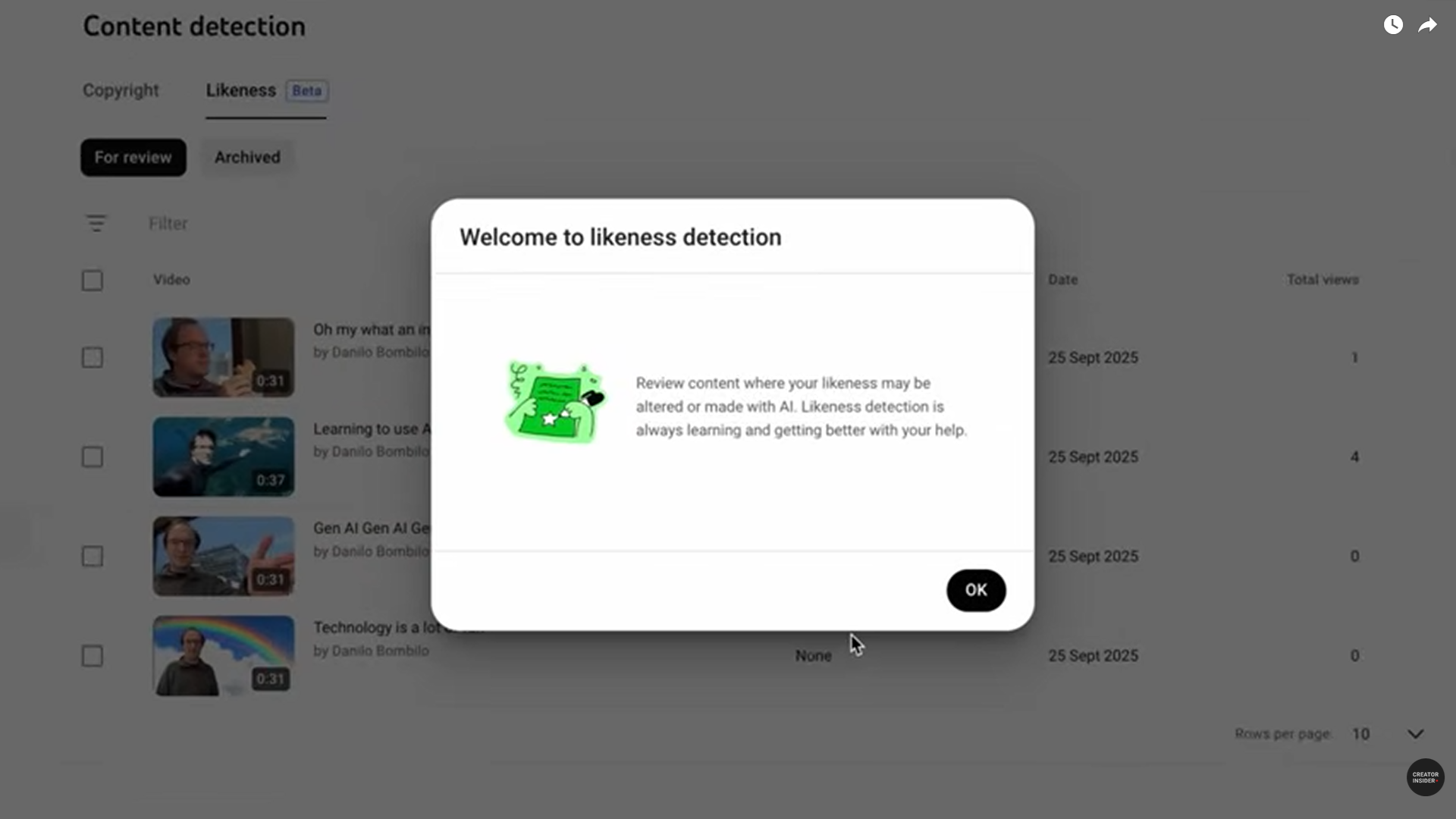

YouTube has begun emailing details to select creators, offering them the chance to scan uploaded videos for potential matches to their face or voice. Once a match is flagged, the creator can review it via a new Content Detection tab in YouTube Studio and decide whether to take action. They can simply report it, submit a takedown request under privacy rules, or file a full copyright claim.

For now, the tool is available only to a limited group of creators in YouTube's Partner Program, though it will likely expand to every monetized creator on the platform eventually.

The rollout mirrors how YouTube worked with Creative Artists Agency (CAA) in 2023, giving high-profile celebrity clients early access to prototype AI detection tools in exchange for feedback from some of the people most likely to be impersonated by AI.

Creators must opt in by submitting a government-issued photo ID and a short video clip of themselves. This biometric proof helps train the detection system to recognize when it's really them. Once enrolled, they’ll begin receiving alerts when potential matches are spotted. YouTube warns that not all deepfakes will be caught, though, particularly if they’re heavily manipulated or uploaded in low resolution.

The new system is much like the current Content ID tool. But while Content ID scans for reused audio and video clips to protect copyright holders, this new tool focuses on biometric mimicry. YouTube understandably believes creators will value having control over their digital selves in a world where AI can stitch your face and voice onto someone else’s words in seconds.

Face control

For creators worried about their reputations, it's a start. And for YouTube, it marks a significant turn in its approach to AI-generated content. Last year, the platform revised its privacy policies to allow ordinary users to request takedowns of content that mimics their voice or face.


It also introduced specific mechanisms for musicians and vocal performers to protect their unique voices from being cloned or repurposed by AI. This new tool brings those protections directly into the hands of creators with verified channels – and hints at a larger ecosystem shift to come.

For viewers, the change might be less visible, but no less meaningful. AI tools have made impersonation, misinformation, and deceptive edits easier than ever to produce. While detection tools won't eliminate all synthetic content, they do increase accountability: a creator who spots a fake version of themselves circulating can now respond, which will hopefully mean fewer viewers fall for the fraud.

That matters in an environment where trust is already frayed. From AI-generated Joe Rogan podcast clips to fraudulent celebrity endorsements hawking crypto, deepfakes have been growing steadily more convincing and harder to trace. For the average person, it can be almost impossible to tell whether a clip is real.

YouTube isn't alone in trying to address the problem. Meta has said it will label synthetic images across Facebook and Instagram, and TikTok has introduced a tool that allows creators to voluntarily tag synthetic content. But YouTube's approach targets the malicious misuse of people's likenesses more directly.

The detection system is not without limitations. It relies heavily on pattern matching, which means highly altered or stylized content might not be flagged. It also requires creators to place a certain level of trust in YouTube, both to handle their biometric data responsibly and to act quickly when takedown requests are made.

Nonetheless, it's better than doing nothing. By modeling the feature on its well-regarded Content ID system for rights protection, YouTube is treating people's likenesses much like any other form of intellectual property, recognizing that a face and a voice are assets in a digital world and must stay authentic to keep their value.


Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.
