From Kinect to InnerEye – How Microsoft is supercharging gaming tech with AI smarts to help diagnose cancer

From tracking limbs to saving lives

No single technology exists in a vacuum. Microsoft’s Kinect may have suffered an ignoble slide into obscurity following its troubled pairing with the Xbox One, but its underlying principles, its technology and the research that went into developing it now have a far more important role than measuring your drunken “Just Dance” performance.

It’s saving lives.

Speaking at the AI Summit in London, Microsoft’s Professor Christopher Bishop (Laboratory Director of Microsoft Research) and Antonio Criminisi, principal researcher for InnerEye Assistive AI for Cancer, discussed how the technology at the root of Kinect, combined with machine learning, is now being used to help diagnose cancers.

Turning hours into seconds

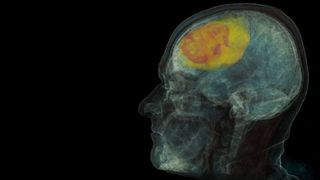

InnerEye Assistive AI for Cancer is a Microsoft-enabled research project that uses image analysis tools to dramatically reduce the time it takes to identify and diagnose cancerous cells in a patient.

One of the key applications of the technology is examining the data produced by a Computed Tomography (CT) scan. CT scans look right the way through body tissue and bone, producing images made up of many layers, or slices, through a patient’s body. Together, these slices build a whole picture of the patient’s internal workings, but identifying each organ through each minutely differing layer, and spotting anomalies within that data, can take even the most skilled oncologist many hours. Oncologists use 3D modelling tools to highlight and identify healthy organs and separate them from any aberrations, but they must build that picture pixel by pixel, or voxel by voxel, before deciding on a treatment plan.
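To make “voxel by voxel” concrete: a CT volume can be held as a stack of 2D slices, each a grid of voxel intensities measured in Hounsfield units (HU). The minimal Python sketch below labels every voxel with a crude intensity threshold and then measures the labelled volume. The cut-off values, the helper names and the voxel size are illustrative assumptions; real organ contouring, whether by hand or with InnerEye, relies on anatomy and shape rather than intensity alone.

```python
from typing import List

# A CT volume as a stack of 2D slices: volume[z][y][x] is one voxel's
# intensity in Hounsfield units (HU); air is about -1000 HU, water 0 HU.
Volume = List[List[List[int]]]
Labels = List[List[List[str]]]

# Illustrative cut-offs (assumptions, not clinical values).
BONE_MIN_HU = 300
SOFT_TISSUE_MIN_HU = -50


def label_voxel(hu: int) -> str:
    """Give one voxel a coarse tissue label based on intensity alone."""
    if hu >= BONE_MIN_HU:
        return "bone"
    if hu >= SOFT_TISSUE_MIN_HU:
        return "soft_tissue"
    return "low_density"  # air, lung, fat


def segment(volume: Volume) -> Labels:
    """Label every voxel, slice by slice and row by row."""
    return [[[label_voxel(hu) for hu in row] for row in slice_] for slice_ in volume]


def labelled_volume_ml(labels: Labels, target: str, voxel_mm3: float) -> float:
    """Count voxels labelled `target` and convert to millilitres (1 ml = 1000 mm^3)."""
    count = sum(lab == target for slice_ in labels for row in slice_ for lab in row)
    return count * voxel_mm3 / 1000.0


# A tiny volume: 2 slices of 2 x 3 voxels.
ct = [
    [[-1000, 40, 700], [35, 50, -80]],
    [[-990, 45, 650], [30, 400, -60]],
]
labels = segment(ct)
print(labels[1][1])  # ['soft_tissue', 'bone', 'low_density']
print(labelled_volume_ml(labels, "bone", voxel_mm3=1.0))
```

A real scan has hundreds of slices of roughly 512 x 512 voxels each, which is why doing this by eye takes hours.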

But what takes an oncologist hours to do by hand, InnerEye can achieve (with a little human help) in around 30 seconds. That frees up much more time for each patient’s treatment plan, backed by solid data (in the case of radiation therapy) on which healthy areas of the body to avoid hitting.

“To train the software we have hundreds of images of CT scans from patients, all anonymised and consented”, explains Criminisi.


“They come from different regions of the globe, not just the UK, which is important to capture the variability in ethnicity, body shape and the disease state. Each image is confirmed by an oncologist to have, for example, signs of prostate cancer, and we refine it down through segmentation.”

InnerEye does not work without input from a doctor, however. In fact, it’s improved by it. While InnerEye is highly capable of identifying different body zones, it is not error-free. An oncologist can fine-tune the resulting report, and the data fed back to the cloud can be used to refine InnerEye’s algorithms wherever they are in use, in any practice around the globe.

“It’s not ‘reinforcement learning’, it’s a different type of machine learning called ‘supervised learning’,” said Criminisi. It’s a more traditional type of learning, in which the algorithm learns from examples that an expert, in this case a doctor, has already labelled.
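Supervised learning, in a nutshell, means fitting a model to examples an expert has already labelled, then using it on new cases. The toy Python sketch below uses a nearest-centroid classifier, a deliberately simple stand-in rather than anything InnerEye uses, trained on hypothetical two-number feature vectors (say, mean intensity and a texture score) that a doctor has tagged as `healthy` or `suspicious`. The features, labels and function names are all invented for illustration.

```python
from collections import defaultdict
from math import dist
from typing import Dict, List, Sequence, Tuple

Example = Tuple[Sequence[float], str]  # (feature vector, expert-assigned label)


def train(examples: List[Example]) -> Dict[str, List[float]]:
    """Learn one centroid (the mean feature vector) per expert label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(model: Dict[str, List[float]], features: Sequence[float]) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(model, key=lambda label: dist(model[label], features))


# Hypothetical doctor-labelled training data.
labelled = [
    ([40.0, 0.1], "healthy"),
    ([45.0, 0.2], "healthy"),
    ([70.0, 0.8], "suspicious"),
    ([75.0, 0.9], "suspicious"),
]
model = train(labelled)
print(predict(model, [72.0, 0.7]))  # suspicious
```

The “supervision” is entirely in the labels: change what the doctor marks as suspicious and the learned centroids, and therefore the predictions, change with it.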

“We’re never going to replace doctors,” says Criminisi. “But this is a tool to help them do their jobs more efficiently and effectively.”

From gaming to medicine

Patients with an interest in gaming may be surprised to hear where the software now being used on their journey to recovery has its roots.

“It’s using the same technology that was first developed for game playing with Xbox,” explains Criminisi.

“What we’re doing uses Random Forest, a very special sort of machine learning algorithm, which interestingly came out of Microsoft’s Cambridge lab many years ago and led to the invention of Kinect.

“That technology was looking at a user, not a patient, from the outside in, and was capable of recognising movements. So we thought, let’s turn it on its head, and use it on images where you look at a patient from the inside out. It’s the same technology evolving over time.”
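As a general technique, a random forest trains many decision trees, each on a random resample (with replacement) of the labelled data and a random subset of the features, and then lets the trees vote. The toy Python sketch below keeps things short by using depth-one trees (decision stumps) and invented two-feature data; the forests behind Kinect and InnerEye work on image data with far deeper trees, and none of the names here come from Microsoft’s code.

```python
import random
from collections import Counter
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]


class Stump:
    """A depth-one decision tree: one feature, one threshold, two leaf labels."""

    def __init__(self, feature: int, threshold: float, left: str, right: str):
        self.feature, self.threshold = feature, threshold
        self.left, self.right = left, right

    def predict(self, x: Sequence[float]) -> str:
        return self.left if x[self.feature] <= self.threshold else self.right


def majority(labels: List[str], fallback: str) -> str:
    return Counter(labels).most_common(1)[0][0] if labels else fallback


def fit_stump(data: List[Sample], features: List[int]) -> Stump:
    """Try every (feature, threshold) split and keep the one with fewest errors."""
    overall = majority([y for _, y in data], data[0][1])
    best, best_errors = None, len(data) + 1
    for f in features:
        for x, _ in data:
            t = x[f]
            left = [y for xx, y in data if xx[f] <= t]
            right = [y for xx, y in data if xx[f] > t]
            stump = Stump(f, t, majority(left, overall), majority(right, overall))
            errors = sum(stump.predict(xx) != y for xx, y in data)
            if errors < best_errors:
                best, best_errors = stump, errors
    return best


def fit_forest(data: List[Sample], n_trees: int = 25,
               n_features: int = 1, seed: int = 0) -> List[Stump]:
    rng = random.Random(seed)
    dims = len(data[0][0])
    forest = []
    for _ in range(n_trees):
        bootstrap = [rng.choice(data) for _ in data]  # resample with replacement
        feats = rng.sample(range(dims), n_features)   # random feature subset
        forest.append(fit_stump(bootstrap, feats))
    return forest


def predict(forest: List[Stump], x: Sequence[float]) -> str:
    """Majority vote across all trees."""
    return Counter(tree.predict(x) for tree in forest).most_common(1)[0][0]


training = [
    ([1.0, 1.0], "background"), ([1.5, 2.0], "background"), ([2.0, 1.5], "background"),
    ([8.0, 9.0], "hand"), ([9.0, 8.5], "hand"), ([8.5, 8.0], "hand"),
]
forest = fit_forest(training, n_trees=25, n_features=1, seed=42)
print(predict(forest, [9.0, 9.0]))  # hand
```

Each individual tree sees a slightly different slice of the data, so individual mistakes tend to be outvoted; that robustness, plus the fact that every tree can be evaluated independently and quickly, is part of what made forests attractive for real-time use.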

It’s not just the image analysis tech’s roots in Kinect that link Microsoft’s AI ambitions back to gaming, but also the underlying chip hardware that carries out the computational tasks. Its Field-Programmable Gate Array (FPGA) silicon architecture is founded on the work done on gaming GPUs.

“A CPU is a rather generic processor,” explains Bishop.

“It has the advantage of being very flexible and programmable in software, but the actual architecture is fixed. Then there is more specialised architecture, such as graphics processing units, or GPUs. They’re designed for fast graphics, primarily for the games industry. But it turns out they are very well suited for a very specific type of machine learning called a ‘deep neural network’, which really is the thing that’s underpinned the whole excitement around AI.

“GPUs are a very specific fixed architecture, really very good at doing a lot of the same thing in parallel. But they lack flexibility. An FPGA is a little bit different – you can think of it like a LEGO set of gates that can be configured in software to be a very specific architecture. It won’t be quite as efficient as a purpose-designed chip on a specific algorithm, but you can very quickly configure it to work on any one of a number of different algorithms, or if a new algorithm comes along.

“This is what’s fuelling the AI revolution, along with an enormous amount of research around new machine learning techniques. The flexibility means that, when a new and improved algorithm comes along, we can just reconfigure that FPGA supercomputer to deal with it. We no longer need to develop a new bespoke chip.”
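Bishop’s “LEGO set of gates” analogy maps onto how FPGAs are commonly built: from small look-up tables (LUTs) whose truth-table contents are loaded at configuration time and wired together by programmable routing. The toy Python model below is a hypothetical illustration, not a hardware description or any vendor’s API: the same `Lut2` block becomes an AND gate or an XOR gate purely by loading different configuration bits, and two of them wired together form a half adder.

```python
from typing import Tuple


class Lut2:
    """Toy 2-input look-up table: 4 configuration bits define any Boolean function."""

    def __init__(self, config: Tuple[int, int, int, int]):
        # config[i] is the output for inputs (a, b), where i = 2*a + b.
        self.config = config

    def __call__(self, a: int, b: int) -> int:
        return self.config[2 * a + b]


# "Reconfiguring" the same kind of block is just loading different bits.
AND = Lut2((0, 0, 0, 1))
XOR = Lut2((0, 1, 1, 0))


def half_adder(a: int, b: int) -> Tuple[int, int]:
    """Wire two LUTs together; returns (sum_bit, carry_bit)."""
    return XOR(a, b), AND(a, b)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, half_adder(a, b))
```

On real silicon, a new algorithm means a new configuration bitstream for thousands of such blocks rather than a new chip, which is the flexibility Bishop describes.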

Progress vs privacy

As an example, Bishop claims that FPGA chips could be used to translate the entirety of the epic novel ‘War and Peace’ from Russian into English in the time it’d take you to pick it off your bookshelf.

“Imagine a future where we deploy this tool in hospitals, as we are already doing,” says Criminisi.

“As an oncologist fixes some mistakes, or makes stylistic choices, that data goes back into the Microsoft Cloud, Azure, and we use it to retrain the algorithm. There’s continuous learning. The next day, when the doctor goes back into his surgery, the performance has been improved. That’s where we want to go next.”
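The retraining loop Criminisi describes can be sketched in a few lines. This is a hypothetical illustration, not InnerEye’s pipeline: the “model” is a single intensity threshold, `review` stands in for the oncologist correcting a contour, and `nightly_retrain` stands in for the overnight retraining in Azure. Storage, validation and governance checks are all omitted.

```python
from statistics import mean
from typing import List, Tuple

Labelled = Tuple[float, str]  # (voxel intensity, label)


def fit_threshold(data: List[Labelled]) -> float:
    """Toy model: a threshold halfway between the two class means."""
    organ = mean(x for x, y in data if y == "organ")
    other = mean(x for x, y in data if y == "other")
    return (organ + other) / 2


def predict(threshold: float, x: float) -> str:
    # Assumes organ voxels are brighter than everything else.
    return "organ" if x >= threshold else "other"


class FeedbackLoop:
    """Hypothetical continuous-learning loop: predict, collect corrections, retrain."""

    def __init__(self, data: List[Labelled]):
        self.data = list(data)
        self.threshold = fit_threshold(self.data)
        self.corrections: List[Labelled] = []

    def review(self, x: float, clinician_label: str) -> None:
        """Record the clinician's label whenever it disagrees with the model."""
        if predict(self.threshold, x) != clinician_label:
            self.corrections.append((x, clinician_label))

    def nightly_retrain(self) -> None:
        """Fold the day's corrections into the training set and refit."""
        self.data.extend(self.corrections)
        self.corrections.clear()
        self.threshold = fit_threshold(self.data)


loop = FeedbackLoop([(10, "other"), (20, "other"), (80, "organ"), (90, "organ")])
print(loop.threshold)         # 50.0
loop.review(45, "organ")      # model said "other": correction recorded
loop.review(40, "organ")      # correction recorded
loop.review(85, "organ")      # model agreed: nothing recorded
loop.nightly_retrain()
print(loop.threshold)         # 39.375
print(predict(loop.threshold, 45))  # organ
```

The “next day” improvement is simply the refit on a larger, doctor-corrected dataset; in practice each correction is also patient data, which is where the legal and privacy questions come in.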

But reaching this exciting next stage in everyday diagnosis will present challenges that have nothing to do with silicon, admits Criminisi.

“One thing is to talk about the technology, the other is to talk about the legal hurdles and the privacy hurdles. Enabling a mechanism where you can do continuous learning will be, from a governance point of view, a lot more complicated than even what we’re doing right now.”

It may not quite be a resurrection for the Kinect, but in helping to prevent untimely deaths among patients, perhaps the technology has, through this evolution, finally found its true calling.

Gerald is Editor-in-Chief of iMore.com. Previously he was the Executive Editor for TechRadar, taking care of the site's home cinema, gaming, smart home, entertainment and audio output. He loves gaming, but don't expect him to play with you unless your console is hooked up to a 4K HDR screen and a 7.1 surround system. Before TechRadar, Gerald was Editor of Gizmodo UK. He is also the author of 'Get Technology: Upgrade Your Future', published by Aurum Press.