3 things I love about the Nvidia RTX 4070, and one I (irrationally) hate

I never claimed to be rational, alright?

Well, the RTX 4070 is finally here – and what a great card it is. I’ve long been one of Nvidia’s biggest critics (don’t worry though, Team Green – I can be equally mean to AMD), but it’s impossible for me to deny that the 4070 is just excellent.

It’s my favorite graphics card in Nvidia’s current Lovelace lineup, because it’s the GPU gamers actually need. Sure, the RTX 4090 is incredibly powerful, even allowing us to play Cyberpunk 2077 in glorious 8K resolution, but it’s far too expensive for the average consumer.

In fact, this is a big problem with high-end GPUs right now: they’re not accessible. AMD’s competing flagship Radeon RX 7900 XTX costs a thousand dollars in the US, and more in many regions outside America. We’re also seeing worrying reports that powerful GPUs will be hoovered up for AI development – something Nvidia certainly seems to support.

That’s not a bad thing, to be clear. I have my doubts about ChatGPT, for sure, but AI technology has huge potential in the gaming space: Nvidia’s nifty Deep Learning Super Sampling (DLSS) tech is powered by AI, after all. But high-end GPUs remain inaccessible – so the RTX 4070 is the one to watch right now.

With that in mind, I’d like to share three things I simply adore about Nvidia’s latest GPU – and one that I really, really don’t like. I’ll be honest, it’s kind of an irrational hatred. Call it a pet peeve… you’ll see. But first, let’s take a look at the good stuff.

DLSS 3 is absolutely bonkers

I’ve waxed lyrical about how amazing Nvidia’s DLSS upscaling tech is in the past, but DLSS 3 takes that awesome performance boost and makes it even better – more than earning the RTX 4070 a place among the best graphics cards.

With the new ‘Frame Generation’ feature, DLSS 3 uses machine learning not just to upscale your games to a higher resolution with minimal loss of framerate, but to generate entire frames of gameplay and insert them on the fly to boost your fps.

This is the key factor that separates the RTX 4070 from its previous-gen counterparts; it naturally dumpsters the already-pretty-great RTX 3070, but with DLSS 3, it can even beat the more expensive RTX 3080 at 4K Ultra in some games.

It’s a (relatively) compact card

Thank the lord! I’m not a fan of GPUs that are so big and heavy that they require support struts inside your PC case, and steal all of your rear I/O slots with their massive, chunky coolers. I’m looking at you, RTX 4090.

No, the 4070 is a lithe little thing in comparison, using the standard two-slot layout that has been commonplace for generations (of GPUs, to be clear). Some third-party cards don’t even use the popular three-fan configuration, opting instead for a conventional two-fan design.

The length is a factor here too; the RTX 4070 Founders Edition requires less horizontal clearance inside a PC case than the previous-gen RTX 3080, meaning that you won’t have to buy a chunky ol’ desktop case in order to fit this GPU inside. Smaller is better, I always say. Don’t quote me on that, actually.

It’s not too power-hungry...

With a rated TGP of 200W (about 10% less than its predecessor, the RTX 3070), the RTX 4070 offers some serious performance-per-watt – and doesn’t guzzle down the insane 450W+ used by the flagship RTX 4090.

In a time of rising energy bills and shrinking bank accounts, I personally don’t want a GPU that will cost me a lot of electricity to run. A 200W TGP is excellent, as far as I’m concerned – and you probably won’t need to upgrade your PSU to use this card either.

...but it uses that blasted 16-pin power connector

And here we come to the part I don’t like. The RTX 4070 (the Founders Edition version from Nvidia, anyway) uses the rather controversial 16-pin ‘12VHPWR’ connector to draw power from your PSU. Why is this bad? Well, there are a few reasons.

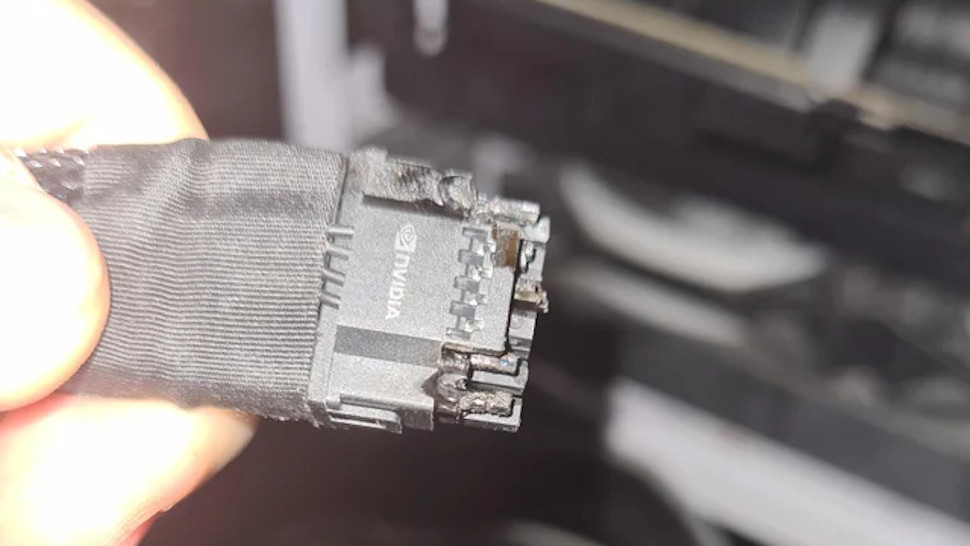

First, it was this power connector that sparked (ho ho) the ‘cablegate’ crisis back in October 2022, where some RTX 4090 cards using that 16-pin connector overheated and melted, damaging users’ GPUs and sometimes their entire PC. Although Nvidia took its sweet time addressing the issue, it did eventually confirm, after internal research, that the failure rate was only around 0.04%, and agreed to RMA any affected cards at no cost to the consumer.

I had my concerns around Nvidia’s use of the 12VHPWR connector, which was reportedly known to have potential ‘thermal variance’ issues when Team Green put it into production, but it’s at least not a fire risk here – the potentially incendiary result was caused by the high power draw of the 4090, so the 200W 4070 won’t have the same issues.

Still, it’s a contentious choice, with AMD notably not using the new power connection standard on its next-gen GPUs, opting simply for a pair of traditional 8-pin PCIe power connectors on its flagship Radeon RX 7900 XTX.

That’s great! The 8-pin connector is useable with basically any ATX power supply; the same type most of us will have in our PCs. If you’ve recently purchased a newly-made PSU, you might have an ATX 3.0 model – these directly support the 12VHPWR connector. If not, you’ll need an adaptor to hook up any of Nvidia’s new RTX 4000 cards that use the 16-pin connector.

Nvidia provides the adaptor in the box with the GPU so there’s no additional cost, which is good, but the introduction of a mandatory adaptor just means one extra element of your PC build that can potentially fail. Sometimes, simpler is better.

Here’s the most annoying thing, though: Nvidia didn’t even need to use the 16-pin connector here. Some third-party RTX 4070 models (like PNY’s) just use the conventional 8-pin connector instead! Our own John Loeffler tested the PNY model against the FE version, and found the performance variance was virtually non-existent – less than 2%, in fact. The 4070 is great, but make it make sense, Nvidia!

Christian is TechRadar’s UK-based Computing Editor. He came to us from Maximum PC magazine, where he fell in love with computer hardware and building PCs. He was a regular fixture amongst our freelance review team before making the jump to TechRadar, and can usually be found drooling over the latest high-end graphics card or gaming laptop before looking at his bank account balance and crying.

Christian is a keen campaigner for LGBTQ+ rights and the owner of a charming rescue dog named Lucy, having adopted her after he beat cancer in 2021. She keeps him fit and healthy through a combination of face-licking and long walks, and only occasionally barks at him to demand treats when he’s trying to work from home.