Google adds eyes to AI Mode with new visual search features

Like a conversational Pinterest with some online shopping thrown in


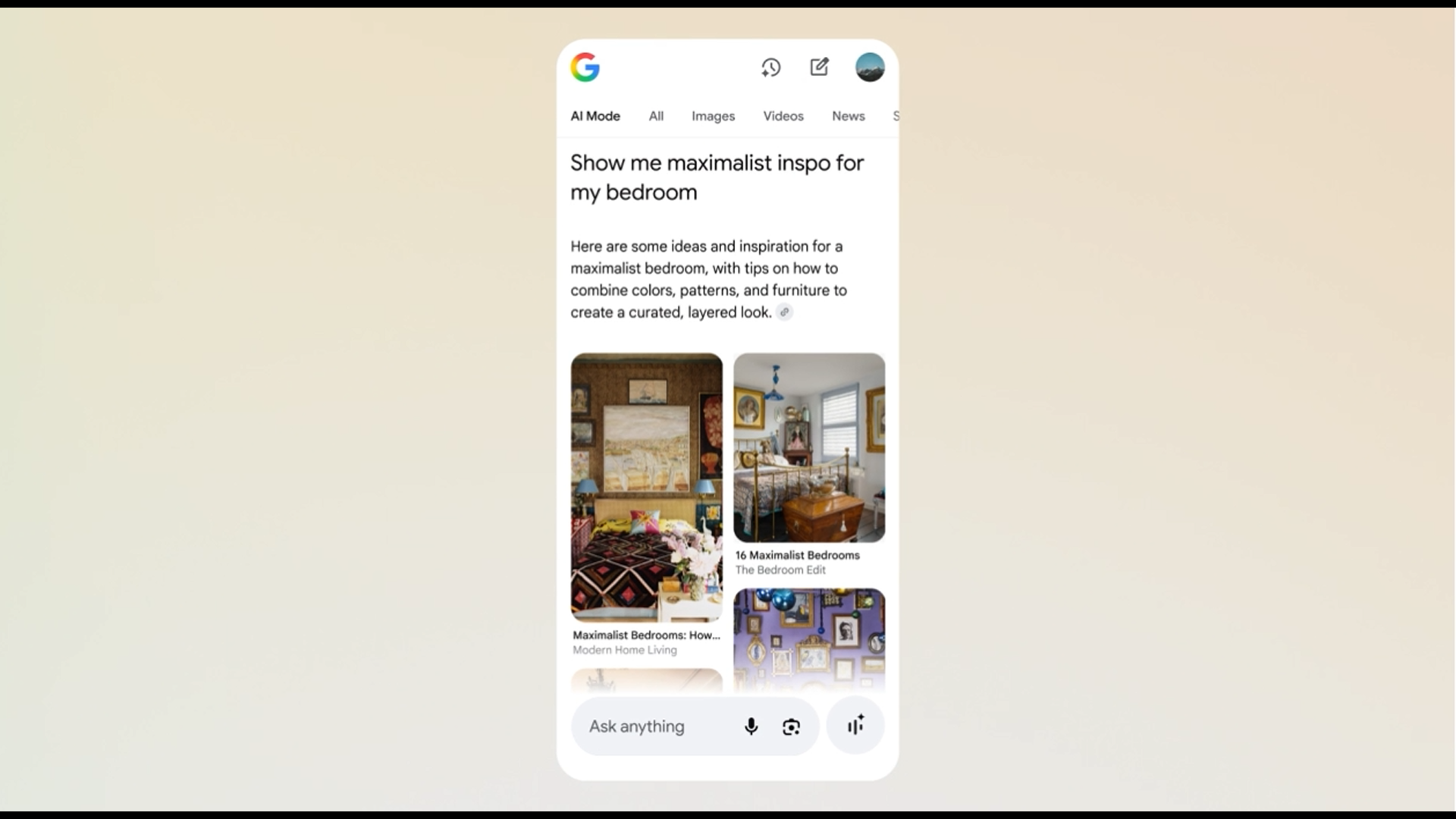

Google has given AI Mode a whole new dimension by adding images to the text and links the conversational search platform already provides. AI Mode now combines elements of Image Search and Google Lens with the Gemini AI engine, letting you ask a question about a photo you upload or see images related to your queries.

For instance, you might see someone on the street with an aesthetic you like, snap a picture, and ask AI Mode to “show me this style in lighter shades.” Or you could ask for “retro 50s living room designs” and see what people were sitting on and around 75 years ago. Google pitches the features as a way to replace filters and awkward keywords with natural conversation.

The visual side of AI Mode layers a “visual search fan‑out” approach on top of the query fan-out technique it already uses to answer questions. When you upload or point at an image, AI Mode breaks it down into elements like objects, background, color, and texture, and sends multiple internal queries in parallel. It then recombines the results that best match your intent, so the images it returns are relevant without simply repeating what you've already shared.
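To make the fan-out idea concrete, here is a minimal Python sketch of the general pattern described above: split a request into facets, run one sub-query per facet in parallel, then merge the hits into a single ranking. This is not Google's implementation. The toy index, the facet names, the scores, and the functions `decompose`, `search`, and `fan_out` are all hypothetical and exist only for illustration.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy index: each facet query maps to image ids with made-up scores.
TOY_INDEX = {
    "object:jacket": {"img1": 0.9, "img2": 0.7, "img3": 0.4},
    "color:light": {"img2": 0.8, "img3": 0.6},
    "texture:denim": {"img1": 0.5, "img2": 0.6},
}


def decompose(image_facets, prompt_facets):
    """Merge facets read from the image with facets from the text prompt.

    Prompt facets win on conflict, so "in lighter shades" replaces the
    color detected in the photo.
    """
    merged = {**image_facets, **prompt_facets}
    return [f"{name}:{value}" for name, value in merged.items()]


def search(sub_query):
    """Look up one facet query in the toy index (empty result if unknown)."""
    return TOY_INDEX.get(sub_query, {})


def fan_out(sub_queries):
    """Run every sub-query in parallel, then sum scores per image and rank."""
    with ThreadPoolExecutor() as pool:
        per_query_hits = list(pool.map(search, sub_queries))

    combined = defaultdict(float)
    for hits in per_query_hits:
        for image_id, score in hits.items():
            combined[image_id] += score

    # Highest combined score first.
    return sorted(combined, key=lambda image_id: combined[image_id], reverse=True)


if __name__ == "__main__":
    # A photo of a dark denim jacket plus the prompt "in lighter shades".
    photo = {"object": "jacket", "color": "dark", "texture": "denim"}
    prompt = {"color": "light"}
    print(fan_out(decompose(photo, prompt)))
```

In this toy setup, an image that matches several facets at once outranks one that matches only the object in the photo, which is one simple way results can stay relevant without just echoing the original picture. A real system would presumably use learned image understanding and far richer ranking than summed scores.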

Of course, that also means Google's search engine must decide which of the AI-retrieved results to highlight and when to suppress noise. It may misread your intent, elevate sponsored products, or favor big brands whose images are better optimized for AI. As search becomes more image-centered, sites that lack clean visuals or visual metadata may vanish from the results, making the experience less useful.

AI window shopping

On the shopping side, all of this taps into Google’s Shopping Graph, which indexes over 50 billion products and refreshes every hour. So a picture of a pair of jeans might net you details on current prices, reviews, and local availability all at once.

AI Mode turning your vague prompts and visuals into real options for shopping, learning, or even discovering art is a big deal, at least if it performs well. Google’s size allows it to meld search, image processing, and e-commerce into one flow.

There will be plenty of concerned rivals watching closely, even if they were ahead of Google with similar products. For instance, Pinterest Lens already lets you find similar looks from pictures. Microsoft’s Copilot and Bing visual search let you start from images in some fashion.

But few combine a global product database and live price data with conversational searching through images. Specialized apps or niche areas of focus could get ahead, but Google's size and ubiquity mean it will have a massive head start with broader attempts to search for information with images.

For years, we've typed queries and parsed results. Now, online search is moving toward sensing, pointing, and describing, allowing AI and search engines to interpret not just our words, but also what we see and feel. As more searches turn on aesthetics and design, systems that natively understand visuals are becoming increasingly critical. AI Mode’s evolution suggests the baseline may soon be that search tools should see as well as read.

Should missteps creep in, though, all bets are off. If AI Mode's visual results misinterpret intent, mislead users, or show major bias, users may revert to brute-force filtering or more specialized options. The success of this visual AI leap hinges on whether it feels helpful rather than unreliable.

Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.
