Gemini Live could soon get this useful Google Maps upgrade – and it looks perfect for smart glasses

More info on the places you're seeing

- A new Google Maps feature has been spotted for Gemini Live

- It would pull up place information from Google Maps

- We're not sure when the feature might go live for everyone

Google continues to build new features on top of its Gemini Live experience, where you can chat naturally with the AI and show it the world around you through your phone's camera, and it seems a useful Google Maps upgrade is on the way.

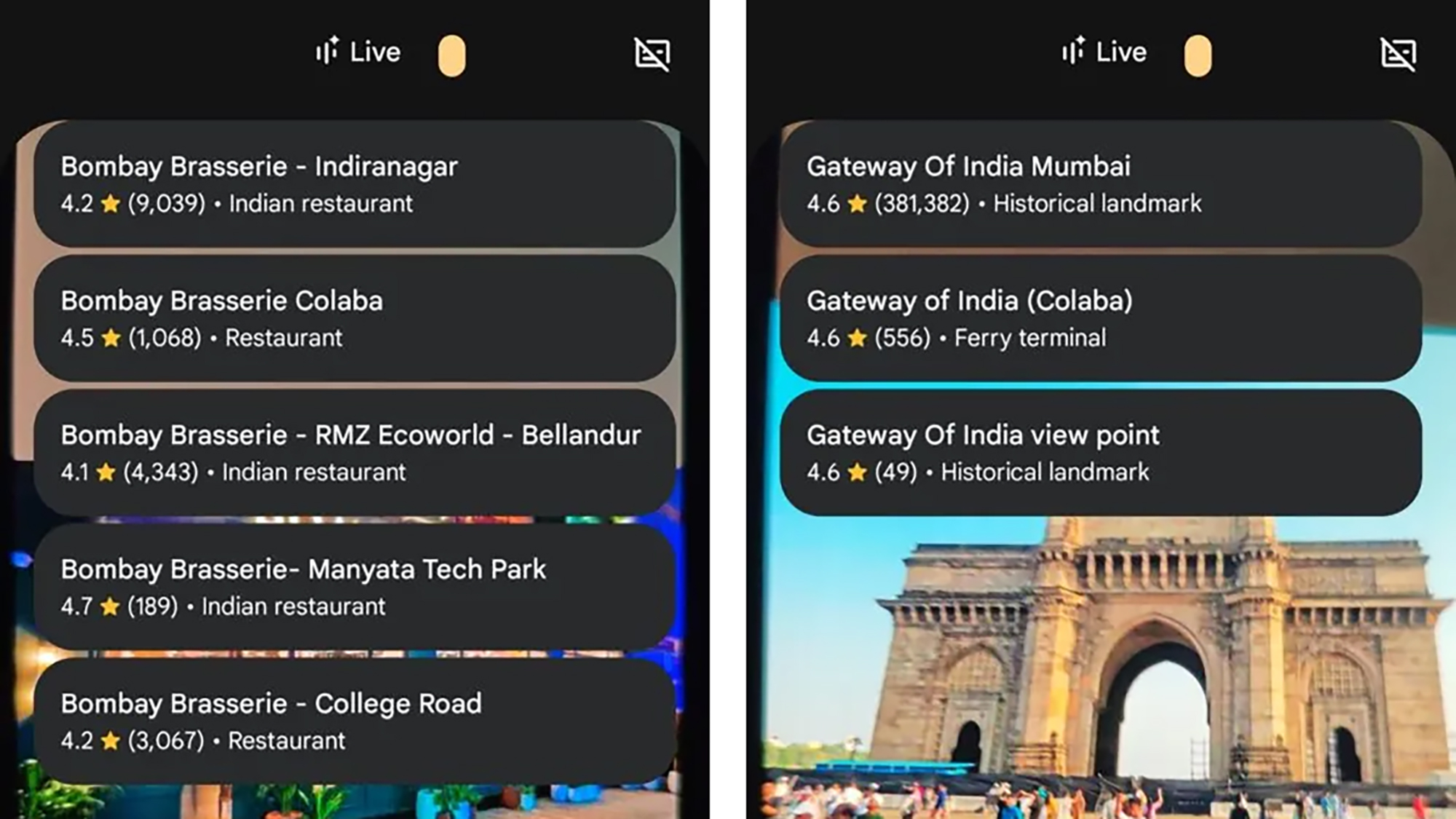

The team at Android Authority has spotted code hidden in the latest version of the Google app for Android that lets you overlay information from Google Maps on top of the camera viewfinder, so you get more details about what you're looking at.

For example, you might see the name of a restaurant and its Google Maps rating pop up if your phone's camera is pointed down a street. It sounds similar to the Google Lens augmented reality (AR) feature that's already available inside the Google Maps app.

At the moment, you can use Gemini Live to point your camera at places in front of you, whether they're landmarks or businesses, and ask questions about them. Once this new feature arrives, Google Maps data will be added in as well.

Smarter device

The Android Authority team has managed to get the feature up and running, and it looks pretty basic at the moment. You can also bring up the Google Maps information in audio mode, but you need to be specific about the places you want details on.

This kind of AR-enabled overlay would be useful for smart glasses too, of course: imagine being able to bring up a Google Maps info card right in your line of sight. We may see a new Samsung-made pair of smart specs arriving before the end of the month.

It also builds on top of the new visual guidance feature for Gemini Live that Google demoed at the Pixel 10 launch event, which highlights specific objects in the camera viewfinder – like buttons, parking spots, tools, or anything else that's useful.


There's no indication of when this feature might roll out to the masses, but considering the necessary code is already in place – and that Google has been keen to push out AI features as quickly as possible – it shouldn't be too long before we're all seeing it.


Dave is a freelance tech journalist who has been writing about gadgets, apps and the web for more than two decades. Based out of Stockport, England, on TechRadar you'll find him covering news, features and reviews, particularly for phones, tablets and wearables. Working to ensure our breaking news coverage is the best in the business over weekends, David also has bylines at Gizmodo, T3, PopSci and a few other places besides, and spent many years editing the likes of PC Explorer and The Hardware Handbook.
