When will our smart speakers become conversation starters?

On-device AI and push notifications could put voice assistants at the 'intelligent edge'

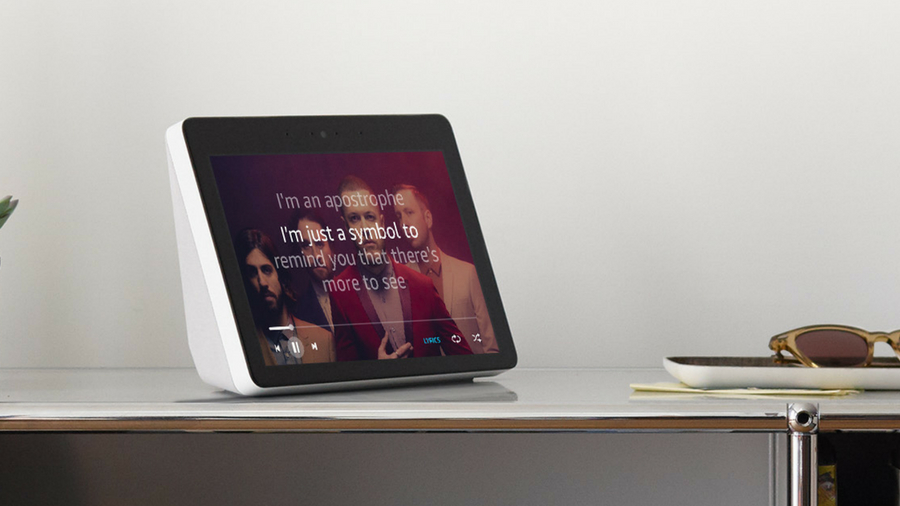

Amazon's new Echo Plus has on-device AI built-in. Credit: Amazon

Alexa is dumb. You probably saw Amazon’s recent device refresh, which includes a new Echo Dot and Echo Show, some multi-room audio gear, and even a microwave. Are they the future of the smart home? No, but some of the new devices do contain a germ of something that is: on-device AI.

By talking less to the cloud and doing more offline, some Echo gadgets are entering a brave new world that could see voice assistants like Alexa abandon the online world altogether to live at the 'intelligent edge'.

Alexa goes local

In September, Amazon announced Local Voice Control, a new feature for the Hub-enabled Echo-family devices: the current and next-gen Echo Plus and next-gen Echo Show. “This enables them to conduct a few smart home functions without an active connection to the Alexa Voice Service (AVS),” explains Emerson Sklar, Senior Solutions Consultant at crowd testing company Applause.

Technically speaking, this move by Amazon takes Alexa's Automatic Speech Recognition (ASR) and Natural Language Understanding (NLU) from the cloud and puts them on the devices. “The embedded ASR ensures the device can locally translate some of your speech to text, while the embedded NLU locally enables mapping of the translated speech to select intents and slots,” says Sklar.

Essentially, it means that the Echo Plus and Echo Show allow Alexa to do some of her work without going online.
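
To make the 'intents and slots' idea Sklar mentions more concrete, here is a minimal sketch in Python of what the NLU step might look like once speech has already been turned into text. Everything in it is an illustrative assumption: the intent names, the `PATTERNS` table and the `parse_locally` function are hypothetical and are not Amazon's grammar or API. It only shows the general shape of the technique, which is matching a short command against a small on-device rule set and pulling out the device name as a slot.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Intent:
    """A recognized command: what to do (name) and to what (slots)."""
    name: str
    slots: dict = field(default_factory=dict)


# Hypothetical on-device rule set. A real assistant would use trained
# models; these regexes are an assumption made for illustration only.
PATTERNS = [
    ("TurnOnIntent", re.compile(r"^turn on (?:the )?(?P<device>.+)$")),
    ("TurnOffIntent", re.compile(r"^turn off (?:the )?(?P<device>.+)$")),
]


def parse_locally(text):
    """Map transcribed text to an Intent, or None if no local rule fits."""
    normalized = text.lower().strip().rstrip(".!?")
    for name, pattern in PATTERNS:
        match = pattern.match(normalized)
        if match:
            return Intent(name, match.groupdict())
    # Nothing matched: in this sketch, the request would need the cloud.
    return None


if __name__ == "__main__":
    print(parse_locally("Turn on the kitchen light"))
    print(parse_locally("What's the weather like?"))
```

In this sketch a command such as 'Turn on the kitchen light' is handled entirely on the device, while anything outside the small rule set returns `None` and would have to be passed to an online service.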

What else does on-device AI do?

In short, this is about on-device identity detection. Alexa can now recognize a voice automatically, which means you don't have to set up a profile on the Alexa app. So what?

“You don’t have to repeat phrases for Alexa to learn who’s talking,” says Mark Lippett, CEO at embedded voice and audio company XMOS, “and now, when you speak to Alexa, she’ll address you by name – which gives a far more familiar touch to the Alexa experience”.

Ask Alexa to add milk to your shopping list and it'll know it’s you that asked. “Alexa recognizes the characteristics of your voice and responds in context,” says Lippett. “It offers a more personalized experience – for example, 'Alexa play music' would play your music, not the last person that asked.”

However, for now, there's a limit to how far on-device AI goes. “Upon release, the Echo Plus and the new Echo Show will only support local voice control for switches, plugs, and lights,” says Sklar.

How important is on-device AI?

That Alexa can identify you without checking with the cloud has advantages. “Cloud connectivity is no longer a pre-requisite for AI, data processing and decision making,” says Dr Chris Mitchell, CEO and Founder of Audio Analytic. “There are obvious benefits like a rapid response, offline capabilities, reduced server costs, and increased privacy … by running on the edge you reduce the burden on the battery and eliminate the need for a constant internet connection.”

However, on-device AI by itself is not the game-changer. “All it really does is tailor existing services to you as a person, as opposed to just having those services available generally,” says Lippett. “It isn’t a game-changer in the way that something like push notifications will be.”

Ah yes… push notifications.

What are push notifications?

Push notifications are the point at which Alexa and other voice assistants will become truly impressive, transforming from dumb speakers that answer dumb questions into true personal assistants capable of playing active roles in people’s lives. For now, when you ask Alexa to do something – such as choose a film – she looks at your history and gives you a customized response. Push notifications are very different.

“The real opportunity for this technology is when these devices can detect when you’re present and push relevant, trusted ‘context aware’ voice notifications to you,” says Lippett. Think personalized, customized reminders and advice, such as ‘Jamie, don't you think it's time you went to the gym? It's been two weeks since your last visit and your resting heart-rate is increasing’.

So voice assistants could one day become active, rather than passive. “At its most powerful, voice can deliver a user experience like a ‘digital twin’, delivering ‘just in time’, personalized, relevant content,” says Lippett.

The drawback could be that Alexa starts trying to sell you stuff – expect ‘voice spam’ to become a thing.

What about privacy?

Everyone thinks voice assistants are constantly listening to them, but if they're not connected to the cloud, who cares? On-device AI is not, for now, going to be used by Amazon to address privacy, but it really could – and perhaps should – help.

The public's lack of trust in voice assistants is a real concern for Amazon, Google, Apple, and Microsoft. A recent Accenture survey of 1,000 users of voice control devices found that most people using voice assistants have trust issues with their devices. In fact, 48% believe the likes of Alexa are always listening, even when they've not been given a command, and more than one in five admit that they don't use their voice assistant more because they don't trust it.

“AI, and the voice assistants that use it, are examples of technology that should serve humanity,” says Emma Kendrew, Artificial Intelligence Lead for Accenture. “So as the developers of these services, we need to bridge that trust gap.”

How on-device AI could help improve privacy

On-device AI could bring some significant privacy-boosting features. “Audio and contextual processing will have to be moved away from the cloud and onto the device itself,” says Lippett. “By embedding this intelligence closer to the user environment, users will enjoy a greater degree of privacy, along with increased awareness and context from the device.”

It could even become a selling point. “Companies could offer an option for a totally cloud-disconnected experience, which may alleviate some users' concerns over the privacy of their data,” says Sklar.

What is sound recognition?

For now, on-device voice AI has no context awareness. “A smart speaker of any size is passive, and doesn’t do anything unless woken up or instructed,” says Mitchell. “If there is a fire and your smoke alarm is going off, the smart speaker will melt just like the rest of your possessions.”

Mitchell's company, Audio Analytic, has developed on-device sound recognition tech that uses a database of real-world sounds that can be used for machine learning. It gives devices the ability to ‘hear’ environmental sounds like breaking windows, smoke or carbon monoxide alarms, and dogs barking. “By giving machines a sense of hearing we can make products more intelligent and helpful,” adds Mitchell, whose company's tech is used in the Hive Hub 360.

Another example is Wondrwall, an intelligent home system built into light switches that, as well as being controlled using Alexa, uses sound recognition to listen out for smoke detectors going off and windows being smashed. In total it has 13 sensors, including detectors for motion and humidity.

Where is all of this going?

For now, on-device AI is merely about devices responding in a more familiar way, but the technology will move on immeasurably in the next few years. “A truly active experience requires identity and context awareness, as well as sophisticated privacy and intelligence at the edge,” says Lippett. “That’s ultimately where this technology is heading.”

A lot will depend on business models. “I think Amazon will ultimately provide AVS-enabled device manufacturers with the ability to have certain parts of Alexa embedded in the device,” says Sklar, suggesting that Echo Auto doesn't need the same local voice control capabilities as Echo Plus. “There's also necessarily a limit as to how much 'Alexa' Amazon can embed into a device.”

TechRadar's Next Up series is brought to you in association with Honor

Jamie is a freelance tech, travel and space journalist based in the UK. He’s been writing regularly for TechRadar since it was launched in 2008 and also writes regularly for Forbes, The Telegraph, the South China Morning Post, Sky & Telescope and the Sky At Night magazine as well as other Future titles T3, Digital Camera World, All About Space and Space.com. He also edits two of his own websites, TravGear.com and WhenIsTheNextEclipse.com, which reflect his obsession with travel gear and solar eclipse travel. He is the author of A Stargazing Program For Beginners (Springer, 2015),