How to protect your website from hackers

Previously, we saw how hackers spend a lot of time surveying websites they want to attack, building up a detailed picture of their targets using information found in DNS records, as well as on the web and from the site itself.

This information helps hackers learn the hardware and software structure of the site, its capabilities, back-end systems and, ultimately, its vulnerabilities. It can be eye-opening to discover the detail a hacker can see about your website and its systems.

The way the internet works means that nothing can be entirely invisible if it's also to be publicly accessible, and nothing that's publicly accessible can be truly secure without serious investment. Even so, there's still plenty you can do.

Now we're going to examine some of the steps you can take to ensure that any hacker worth their salt will realise early on that your web presence isn't the soft target they assumed it was, and to get them to move on.

Robot removal

Many developers unintentionally leave clues to the structure of their websites on the server itself. These clues tell a hacker a lot about the developer's proficiency in web programming, and will pique the hacker's curiosity.

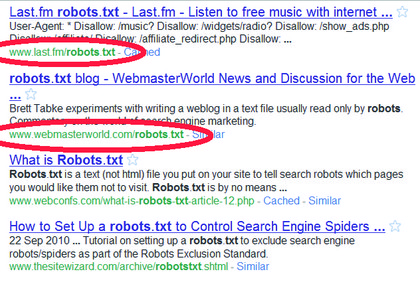

Many people dump files into their web server's public directory structure and simply add the files and directories they want to keep hidden to the site's 'robots.txt' file.


This file tells the indexing software associated with search engines which files and directories to ignore, and thereby leave out of their databases. However, by its nature this file must be globally readable, and that includes by hackers.

Not all search engines obey the 'robots.txt' file, either. If they can see a file, they index it, regardless of the owner's wishes.

GIVEN UP BY GOOGLE: 'Robots.txt' files are remarkably easy to find using a Google query

To prevent information about private files falling into the wrong hands, follow a simple rule: if there's no good reason for a file or directory to be on the server, it shouldn't be there in the first place.

Remove it from the server and from the 'robots.txt' file. Never have anything on your server that you're not happy to leave open to public scrutiny.
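To see what your own 'robots.txt' gives away, it helps to list every path it names. The following is a minimal Python sketch, not a full parser: the function name `disallowed_paths` and the sample file contents are illustrative assumptions, and it only looks at simple `Disallow:` lines.

```python
from typing import List


def disallowed_paths(robots_txt: str) -> List[str]:
    """Return every path named in a Disallow rule, in file order."""
    paths = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        if ":" not in line:
            continue  # blank or malformed line
        field, value = line.split(":", 1)
        # An empty Disallow value means "nothing is disallowed", so skip it.
        if field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


# Hypothetical file contents, for illustration only.
sample = """\
User-agent: *
Disallow: /backup/
Disallow: /admin/old-site/  # left over from a migration
Disallow:
"""

print(disallowed_paths(sample))
```

Anything this prints is something a hacker can read just as easily, so each entry is worth checking against the rule above: does it really need to be on the server at all?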

Leave false clues

However, 'robots.txt' can also give hackers pause for thought if you use it to apparently expose a few fake directories that hint at security systems that don't exist.

Adding an entry that suggests an intrusion detection system, such as 'snort_data', will tell a false story about your site's security capabilities. Other directory names will send hackers on a wild goose chase looking for software that isn't installed.
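As a rough illustration of the idea, the sketch below builds a 'robots.txt' body that mixes genuine entries with decoys. The function name `build_robots_txt` and every path except 'snort_data' are assumptions made up for the example; the decoy directories are not meant to exist on the server.

```python
def build_robots_txt(real_paths, decoy_paths):
    """Return robots.txt text that disallows real and decoy paths alike."""
    lines = ["User-agent: *"]
    # Decoys are written exactly like real entries so they are indistinguishable.
    for path in list(real_paths) + list(decoy_paths):
        lines.append(f"Disallow: {path}")
    return "\n".join(lines) + "\n"


# '/cgi-bin/' and '/ids_logs/' are hypothetical; '/snort_data/' is the decoy
# suggested in the text.
print(build_robots_txt(["/cgi-bin/"], ["/snort_data/", "/ids_logs/"]))
```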

If your website requires users to log into accounts, ensure that they confirm their registrations by replying to an email sent to a nominated email account.

The most effective way of preventing a brute force attack against these accounts is to enforce a policy of 'three strikes and you're out' when logging in. If a user enters an incorrect password three times, the account is locked and they must request a password reset, which will be sent to the email account they used to confirm their membership.
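The three strikes rule can be sketched in a few lines of Python. This is a simplified in-memory illustration under stated assumptions, not a production login system: the class `LoginGuard` and its method names are hypothetical, password checking and the reset email are left to the rest of your application, and a real site would keep the counters in its database.

```python
MAX_FAILURES = 3  # the "three strikes" from the text


class LoginGuard:
    def __init__(self):
        self.failures = {}   # username -> consecutive failed attempts
        self.locked = set()  # usernames that must reset their password

    def record_attempt(self, username, password_ok):
        """Record one login attempt; return True if the login is allowed."""
        if username in self.locked:
            return False  # locked accounts are refused even with the right password
        if password_ok:
            self.failures.pop(username, None)  # success clears the count
            return True
        self.failures[username] = self.failures.get(username, 0) + 1
        if self.failures[username] >= MAX_FAILURES:
            self.locked.add(username)
        return False

    def reset_password(self, username):
        """Call once the user has completed the emailed reset."""
        self.locked.discard(username)
        self.failures.pop(username, None)


guard = LoginGuard()
for _ in range(3):
    guard.record_attempt("alice", password_ok=False)
print(guard.record_attempt("alice", password_ok=True))  # refused: account is locked
guard.reset_password("alice")
print(guard.record_attempt("alice", password_ok=True))  # allowed again
```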

If a three strikes policy is too draconian for your tastes, or you feel that it may invite denial of service attacks against individual users (someone deliberately locking them out by entering three bad passwords), then it's a good idea to slow things down instead by not sending the user immediately back to the login page.

After a certain number of failed attempts, you could record the time and refuse any further login attempt until a set number of minutes has passed. This will make a brute force attack very slow, if not practically impossible to mount.
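One way to sketch this time-based delay in Python is shown below. The class `LoginThrottle`, its method names, and the defaults of three failures and a five-minute wait are all assumptions chosen for illustration; the clock is passed in so the behaviour can be checked without actually waiting.

```python
import time


class LoginThrottle:
    def __init__(self, max_failures=3, cooldown_seconds=300, clock=time.monotonic):
        self.max_failures = max_failures
        self.cooldown_seconds = cooldown_seconds
        self.clock = clock
        self.failures = {}       # username -> failed attempts since last success
        self.blocked_until = {}  # username -> clock reading when attempts resume

    def may_attempt(self, username):
        """Return True if this user is currently allowed to try logging in."""
        until = self.blocked_until.get(username)
        return until is None or self.clock() >= until

    def record_failure(self, username):
        count = self.failures.get(username, 0) + 1
        self.failures[username] = count
        # Once the limit is reached, every further failure starts a new wait.
        if count >= self.max_failures:
            self.blocked_until[username] = self.clock() + self.cooldown_seconds

    def record_success(self, username):
        self.failures.pop(username, None)
        self.blocked_until.pop(username, None)


# Usage with a fake clock so the example runs instantly.
now = [0.0]
throttle = LoginThrottle(clock=lambda: now[0])
for _ in range(3):
    throttle.record_failure("alice")
print(throttle.may_attempt("alice"))  # blocked straight after the third failure
now[0] = 300.0
print(throttle.may_attempt("alice"))  # allowed once five minutes have passed
```

Unlike a hard lockout, the account recovers on its own, so an attacker deliberately entering bad passwords can only delay the real user rather than force a password reset.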