DLSS and FSR are the future of PC games, whether you like it or not

Opinion: games are getting harder to run, so upscaling is key

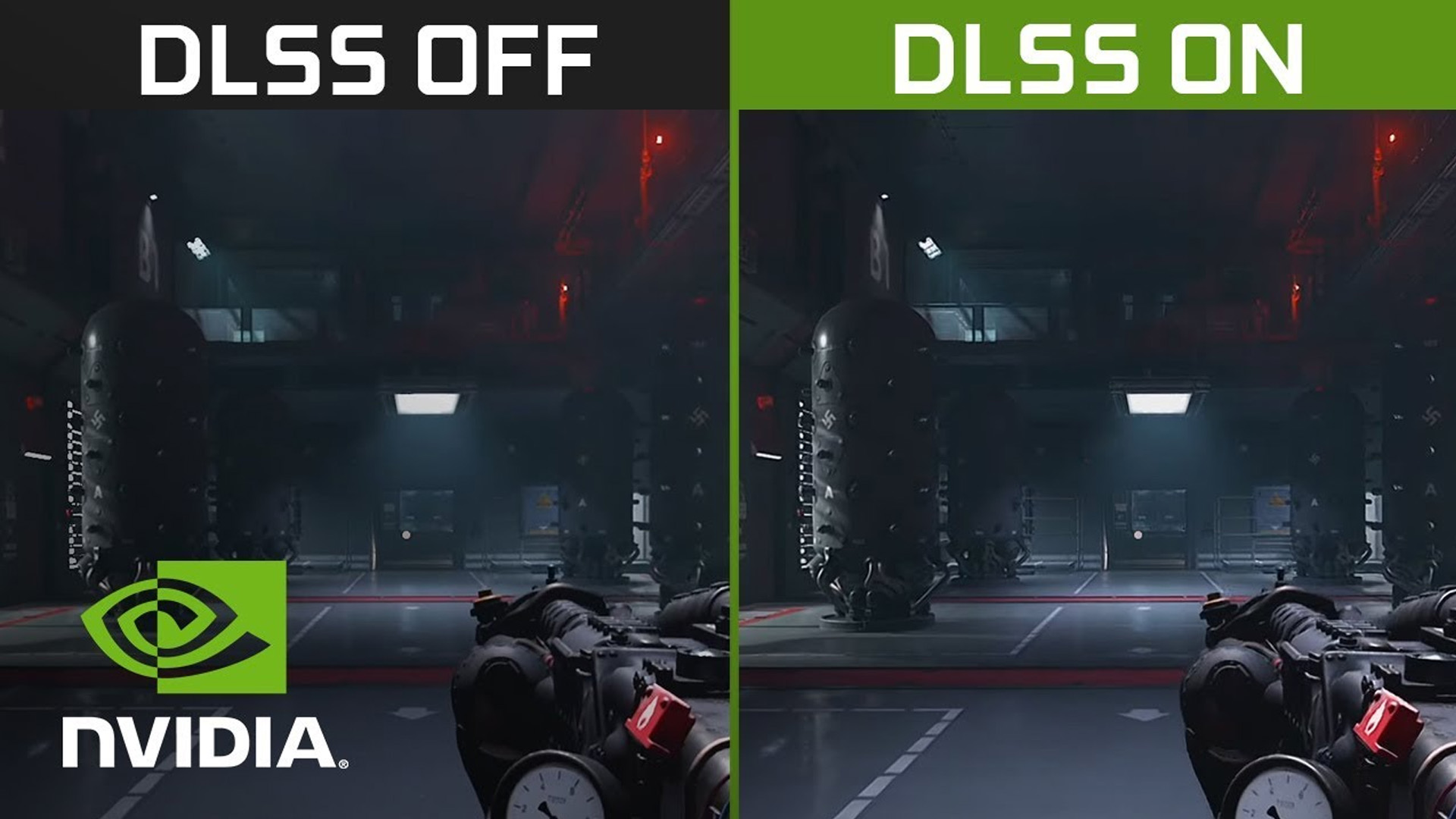

Back when Nvidia first revealed the GeForce RTX 2080 and showed the world what DLSS was and what it could do, it seemed like a way to let more budget-oriented systems use the newfangled ray tracing tech that debuted alongside it. And because the first iteration of Nvidia's AI upscaling tech wasn't exactly phenomenal, it seemed to play second fiddle to a lot of the other tech the company was pushing.

However, the console generation ushered in by the PS5 and Xbox Series X has brought greater demand for visually rich games, loaded with ray tracing and other complex visuals. There's nothing I like more than a gorgeous video game, but games have become way harder to run in just the last couple of years.

Even the RTX 2080 Ti, a graphics card that was an unstoppable 4K behemoth a few years ago, has become a 1080p GPU in most modern games that support ray tracing. And as the best PC games continue to get more complicated, it's becoming more essential for them to include either DLSS or AMD's alternative, FidelityFX Super Resolution (FSR).

Remember the 8K graphics card?

Every single time a high-profile AAA game like the recently released Dying Light 2 comes out, I can't help but think back to the initial sales pitch for the Nvidia GeForce RTX 3090, and how Nvidia swore that it was an 8K graphics card.

And while that's still technically true, you have to drop every setting to low and set DLSS to Performance mode to hit 60 fps at 8K. That's no way to live your life when you're spending thousands of dollars on a GPU, especially at today's graphics card prices.

The same holds in other games. Cyberpunk 2077, for instance, can't be maxed out at 4K even on the RTX 3090 without relying on DLSS to get a playable frame rate. It's a blessing, then, that Nvidia has improved DLSS so much in the meantime that I usually just turn it on by default in any game that offers it these days.

Even the mightiest graphics card on the market, then, needs DLSS to hit a solid frame rate at the resolution it's marketed for in the most demanding games.


Upscaling for everyone

I've been primarily a PC gamer for most of my life, and I still remember when Sony announced the PS4 Pro and showed off the checkerboard upscaling it used to hit 4K. Generally, it worked quite well, but like any other PC gamer with access to a 4K-capable PC, I scoffed. These consoles were advertising 4K gaming, but weren't actually playing games at native 4K.

But guess what? Now that's true of most games, even on PC. Upscaling has become so good that the base resolution a game renders at barely matters, because most of the time you won't notice, especially if you're running FSR or DLSS at a "balanced" or "quality" preset.
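To put rough numbers on what "base resolution" means, here is a minimal Python sketch that works out the internal render resolution for each DLSS 2 preset. The per-axis scale factors are the commonly cited defaults (Quality about 67%, Balanced 58%, Performance 50%, Ultra Performance 33%); treat them as an assumption, since individual games can use different values. `render_resolution` is a hypothetical helper for illustration only.

```python
# Per-axis scale factors commonly cited for DLSS 2 presets.
# Assumption: individual games may use slightly different values.
DLSS_SCALE = {
    "quality": 1 / 1.5,
    "balanced": 0.58,
    "performance": 0.5,
    "ultra_performance": 1 / 3,
}


def render_resolution(output_w: int, output_h: int, preset: str) -> tuple[int, int]:
    """Hypothetical helper: internal resolution rendered before upscaling."""
    scale = DLSS_SCALE[preset]
    return round(output_w * scale), round(output_h * scale)


# Show how much of a 4K frame is actually rendered at each preset.
for preset in DLSS_SCALE:
    w, h = render_resolution(3840, 2160, preset)
    share = (w * h) / (3840 * 2160)
    print(f"{preset:>17}: {w}x{h} ({share:.0%} of native pixels)")
```

At 4K output, Quality mode renders at roughly 2560x1440, under half the native pixel count. The same arithmetic explains the RTX 3090's 8K pitch: Performance mode at 7680x4320 renders internally at 3840x2160.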

This really struck me when I first started playing Dying Light 2. I tried running it at 4K max settings with ray tracing effects enabled on the RTX 3090 and an Intel Core i9-12900K, and it only got 40 fps. That's technically "playable," I guess, but I've been playing games at 60 fps for too long to settle for 40 fps in anything.

It's actually wild when I sit down and think about it, because the rock-solid 60 fps standard that we PC gamers have all subscribed to hasn't always been there, and only recently have I started to expect every game to hit it.

A link to the past

Going back 10 years or so, when we were trying to get our PCs to play Crysis or Metro 2033 or The Witcher 2, there were so many times that I just settled for 40 fps, and that was at 1080p. Playing at a rocky frame rate was just something you accepted, because even getting close to 60 fps at high settings meant experimenting with multi-GPU setups, or lowering the resolution and living with a fuzzy image.

Even when you had the resources for a sick CrossFire or SLI setup and were able to hit a solid 60 fps, you were at the mercy of jittery frame times, because getting two graphics cards to deliver evenly paced frames took a ton of work from developers and the graphics card manufacturers themselves.

Back when a lot of games were coming out as PC exclusives and could really reach for the skies in terms of graphics without worrying about console compatibility, settling for sub-par performance was just a fact of life. So was pushing as close to 60 fps as you could, then bragging to your friends about how well the new graphics card you just bought ran a game.

Given how hard games are to run right now, we could be entering another era just like that. With 4K gaming marketed as heavily as it is (even though many people haven't moved beyond 1080p), there are plenty of games out today that no one would be able to max out until the next generation of graphics cards arrives.

But now that upscaling has blown up in such a huge way, no one has to suffer through the low frame rates and weird, jittery frame times that we had to deal with in the early 2000s and 2010s. It's made PC gaming a lot easier in general. It's just a shame that this generation's increased accessibility has been met with inflated hardware prices.

Will it continue?

The GeForce RTX 3000 and Radeon RX 6000 series are the first graphics card generations to come out in this "next generation" of games. It's only natural for these cards to start struggling as games are designed around more advanced hardware, and the next generation of PC hardware will likely be able to hit high frame rates at high resolutions without necessarily needing upscaling to do it.

That's likely why Nvidia has started pushing tech like DLDSR alongside DLSS. DLDSR, or Deep Learning Dynamic Super Resolution, is a Tensor Core-powered version of DSR, a feature already in the Nvidia Control Panel that renders a game at a higher resolution and then scales it down to your native resolution. This makes games look prettier and smoother, but it will absolutely decimate performance.
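DSR factors such as 2.25x describe the total pixel count, so each axis grows by the square root of the factor. The minimal Python sketch below works out what the two DLDSR factors (1.78x and 2.25x) render at on a 1440p monitor. `dsr_resolution` is a hypothetical helper, treating 1.78x as exactly 16/9 is an assumption, and using pixel count as a stand-in for performance cost is a simplification that ignores fixed per-frame overheads.

```python
import math

# DSR/DLDSR factors multiply the total pixel count, not each axis.
# Assumption: the 1.78x label corresponds to exactly 16/9 (4/3 per axis).
DLDSR_FACTORS = {"1.78x": 16 / 9, "2.25x": 2.25}


def dsr_resolution(native_w: int, native_h: int, factor: float) -> tuple[int, int]:
    """Hypothetical helper: resolution rendered before downscaling to native."""
    per_axis = math.sqrt(factor)  # e.g. 2.25x total pixels -> 1.5x per axis
    return round(native_w * per_axis), round(native_h * per_axis)


native_w, native_h = 2560, 1440
for label, factor in DLDSR_FACTORS.items():
    w, h = dsr_resolution(native_w, native_h, factor)
    # Rough cost proxy: shaded pixels relative to native resolution.
    ratio = (w * h) / (native_w * native_h)
    print(f"{label}: renders {w}x{h}, about {ratio:.2f}x the pixels of native")
```

At 2.25x, a 1440p display is effectively rendering a full 3840x2160 frame before downscaling, which is why the brute-force approach hits performance so hard.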

The deep learning version is more efficient than brute-forcing it through your regular shaders, but it still impacts performance. So while it doesn't make much sense now, tech that makes games prettier but harder to run might start to make a lot of sense in a few years, once, say, the RTX 4080 or RTX 5080 comes out.

That's the scenario I'm hoping for. The last thing I want is for game developers or the GPU manufacturers themselves to rely on upscaling tech as a crutch to push expensive and decadent graphics effects at all costs. That's the feeling I've been getting recently, but we're still early in this gaming generation, so there's still time to prove me wrong.

Jackie Thomas is the Hardware and Buying Guides Editor at IGN. Previously, she was TechRadar's US computing editor. She is fat, queer and extremely online. Computers are the devil, but she just happens to be a satanist. If you need to know anything about computing components, PC gaming or the best laptop on the market, don't be afraid to drop her a line on Twitter or through email.