For as long as most of us care to remember, the battle for the mainstream processor market has been fought between two main protagonists, Intel and AMD, while semiconductor manufacturers like Sun and IBM traditionally concentrated on the more specialist Unix server and workstation markets.

Unnoticed by many, another company has risen to a point of dominance, with sales of chips based on its technology far surpassing those of Intel and AMD combined.

That pioneering company is ARM Holdings, and while it's not a name that's on everyone's lips in the same way that the 'big two' are, indications suggest that this company will continue to go from strength to strength.

Here we put ARM in the spotlight, investigating its past and heritage but, more importantly, also looking at the future for this unsung hero of the micro-electronics revolution.

While most of the semiconductor industry is, and always has been, based in California's Silicon Valley, it makes a refreshing change that ARM's headquarters are here in the UK in the so-called Silicon Fen area around Cambridge.

Despite ARM not being a household name, the company is no Johnny-come-lately. Indeed, if we trace ARM back to its roots we find a company that was a major force in the personal computing boom of the early '80s.

Back in 1980, the IBM PC was still in development and those personal computers that did exist were hugely expensive, costing the equivalent of several thousand pounds in today's terms. The UK had just made its mark on personal computing with the launch of the Sinclair ZX80. This was the first computer to sell for less than £100 - something that helped the UK to lead the world in home computer ownership throughout the 1980s.

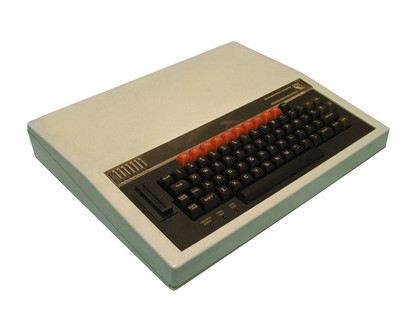

One of the most influential companies to follow in Sinclair's footsteps was Acorn Computers. Just a year later, Acorn brought out the BBC Micro, which found its way into just about every school in the UK and went on to sell about one and a half million units.

Acorn's successor to the BBC Micro, the Archimedes, wasn't nearly as successful as a computer, but was far more influential because of Acorn's choice of processor. While the BBC Micro used an off-the-shelf 8-bit 6502 from MOS Technology, for the Archimedes Acorn decided to design its own high performance 32-bit RISC (reduced instruction set computer) chip, which it called an Acorn RISC Machine or ARM processor.

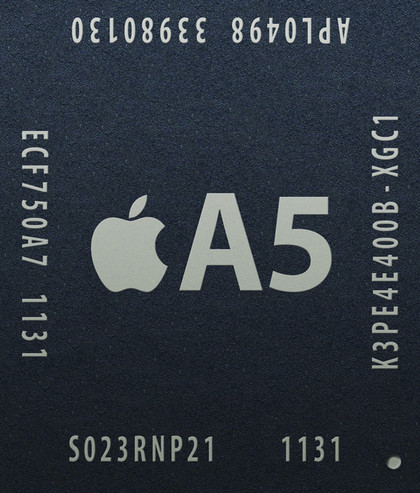

In 1990, in a joint venture with Apple and VLSI Technology (a company that designed and manufactured custom and semi-custom chips), Acorn spun off its research division as a separate company called Advanced RISC Machines. In due course, this offshoot would evolve into the ARM Holdings we know today.

The RISC philosophy

Having used the term RISC to describe the ARM chip that powered the Archimedes, and because the same tag can be applied to today's ARM technology, it makes sense to start by investigating this approach to the design of a microprocessor. To do that, we need to begin with a brief history lesson.

Early 8-bit microprocessors like the Intel 8080 or the Motorola 6800 had only a few simple instructions. They didn't even have an instruction to multiply two integer numbers, for example, so this had to be done using long software routines involving multiple shifts and additions.
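To give a flavour of what such a software routine involves, here is a minimal Python sketch of shift-and-add multiplication. The function name shift_add_multiply is our own, and the sketch assumes non-negative integers; a real 8-bit routine would be written in assembly language and would also have to cope with limited register width.

```python
def shift_add_multiply(a: int, b: int) -> int:
    """Multiply two non-negative integers using only shifts and additions."""
    if a < 0 or b < 0:
        raise ValueError("this sketch handles non-negative integers only")
    result = 0
    while b:
        if b & 1:        # is the lowest bit of the multiplier set?
            result += a  # if so, add the current shifted multiplicand
        a <<= 1          # shift the multiplicand one place left
        b >>= 1          # shift the multiplier one place right
    return result


print(shift_add_multiply(13, 11))  # 143
```

The loop runs once for every bit in the multiplier, which is why a single multiplication could cost dozens of instructions on a processor without a multiply instruction.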

Working on the belief that hardware was fast but software was slow, subsequent microprocessor development involved providing processors with more instructions to carry out ever more complicated functions. Called the CISC (complex instruction set computer) approach, this was the philosophy that Intel adopted and that, more or less, is still followed by today's latest Core i7 processors.

This move to increasingly complicated instructions came at a cost. Although the first microprocessors could execute most of their instructions in just a handful of clock cycles, as processors became more complicated, significantly more clock cycles were required.

In the early 1980s a radically different philosophy called RISC (reduced instruction set computer) was conceived. According to this model of computing, processors would have only a few simple instructions but, as a result of this simplicity, those instructions would be super-fast, most of them executing in a single clock cycle. So while much more of the work would have to be done in the software, an overall gain in performance would be achievable.

Many RISC-based processor families adopted this approach and exhibited impressive performance in their niche application of Unix-based servers and engineering workstations. Some of these families are now long gone but the fact that several - including IBM's POWER, Sun's SPARC and, of course, ARM - are giving the x86 architecture a run for its money rather suggests that less can indeed be more.

We really are talking about a minimalist approach here. In a classic RISC design, all arithmetic and logic operations are carried out on data stored in the processor's internal registers. The only instructions that access memory are a load instruction, which writes a value from memory into a processor register, and the store instruction that does the opposite.

A simple example will illustrate how this results in more instructions having to be executed. If you've ever tried your hand at programming using a high-level language like BASIC, you'll surely have written an instruction like C = A + B that adds together the values in variables (memory locations) A and B, and writes the result to another variable called C.

With a CISC processor, this one instruction would become three as shown in the following example, which is for a generic accumulator-based processor:

LOAD A

ADD B

STORE C

In this example, the LOAD instruction writes the value from memory location A into the processor's accumulator (a special register used for arithmetic and logic operations), the ADD instruction adds the value from memory location B to the value in the accumulator, and the STORE instruction writes the value from the accumulator into memory location C.

On a RISC processor, four instructions would be needed, because both values have to be loaded into registers before they can be added. It's important to note that a RISC processor has several registers, not just the accumulator, so these have to be specifically referred to (as R1 and R2 for example) in the instructions.

LOAD A, R1

LOAD B, R2

ADD R1, R2

STORE R2, C
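To make the comparison concrete, here is a minimal Python sketch that runs both listings. The two interpreters, run_accumulator and run_load_store, are hypothetical toy models invented for illustration rather than real instruction sets, and the values 2 and 3 placed in A and B are arbitrary. We assume that ADD R1, R2 leaves its result in R2, which is what the final STORE R2, C implies.

```python
def run_accumulator(program, memory):
    """Run a one-register (accumulator) program; return instructions executed."""
    acc = 0
    for op, addr in program:
        if op == "LOAD":
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]  # the operand comes straight from memory
        elif op == "STORE":
            memory[addr] = acc
        else:
            raise ValueError(f"unknown instruction: {op}")
    return len(program)


def run_load_store(program, memory):
    """Run a load/store program; only LOAD and STORE touch memory."""
    regs = {}
    for op, src, dst in program:
        if op == "LOAD":
            regs[dst] = memory[src]
        elif op == "ADD":
            regs[dst] = regs[src] + regs[dst]  # register-to-register only
        elif op == "STORE":
            memory[dst] = regs[src]
        else:
            raise ValueError(f"unknown instruction: {op}")
    return len(program)


cisc_program = [("LOAD", "A"), ("ADD", "B"), ("STORE", "C")]
risc_program = [
    ("LOAD", "A", "R1"),
    ("LOAD", "B", "R2"),
    ("ADD", "R1", "R2"),
    ("STORE", "R2", "C"),
]

cisc_memory = {"A": 2, "B": 3, "C": 0}
risc_memory = {"A": 2, "B": 3, "C": 0}
cisc_count = run_accumulator(cisc_program, cisc_memory)
risc_count = run_load_store(risc_program, risc_memory)

print(cisc_count, cisc_memory["C"])  # 3 5
print(risc_count, risc_memory["C"])  # 4 5
```

Both programs leave the same answer in C, but the load/store version takes four instructions instead of three. The RISC bet is that each of those four is simple enough to execute faster than the three more complicated ones.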

Architecture development

Because today's ARM processors and cores (we'll differentiate between these two terms later) are direct descendants of the original ARM chip that was designed for Acorn's Archimedes back in 1987, they are referred to as ARM architecture devices.

This is similar to today's Intel and AMD chips, which grew out of the Intel 8086 and its successors, and are therefore described as adhering to the x86 architecture. Here we'll look at what was unique about the ARM architecture when it appeared 25 years ago, how it has evolved over the years, why that evolution hasn't paralleled that of the x86 architecture, and what remains distinctive about it today.

Those who have followed the development of the PC over the years will no doubt be familiar with the concept of processor generations within the x86 architecture. In recent years the dividing lines have become somewhat less distinct, but in the early days we saw the first generation 8086 (or 8088) give way to the 80286 and subsequently to the 80386, 80486, the Pentium and so on.

The same is true of the ARM architecture, but with one important difference. In the realm of x86, new generations have, on a couple of occasions, been associated with the introduction of a new headline figure for the width of the data pathways - something that has a significant impact on performance.

So the x86 architecture launched with the 16-bit 8086, and subsequent developments have brought us 32 bits and eventually the 64-bit architecture we enjoy today. In total contrast to this, the ARM architecture made its debut at 32 bits and has yet to make the transition to 64 bits.

However, that shouldn't be interpreted as a lack of innovation, as a few facts and figures will reveal.

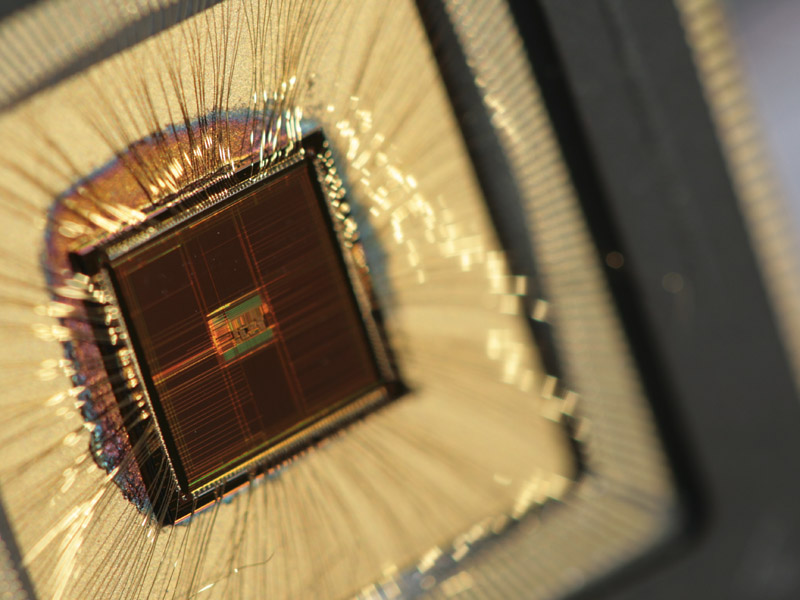

The first ARM processor saw the light of day in 1985, and while it was never used commercially, the ARM 2 that followed it, and which powered the first Archimedes PC, wasn't too dissimilar. Although it was based on a 32-bit architecture, it had a 26-bit address bus, which meant that it could address 64MB of memory. Although not a lot by today's standards, this was a huge amount in the mid-'80s.
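The 64MB figure follows directly from the width of the address bus, as this short Python check shows. It assumes that each address refers to a single byte and that a megabyte is 1,024 x 1,024 bytes; the variable names are our own.

```python
ADDRESS_BITS = 26  # width of the address bus quoted above

addressable_bytes = 2 ** ADDRESS_BITS              # one byte per address (assumed)
addressable_mb = addressable_bytes // (1024 * 1024)

print(addressable_bytes, addressable_mb)  # 67108864 64
```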

The clock frequency of 8MHz also sounds rather pedestrian although, as a result of the RISC design, it was able to provide a speed of 4 MIPS (million instructions per second). To put this into context, the Intel 80386, which appeared on the scene a year later, was just a touch faster at 5 MIPS, but to achieve this it had to be clocked at 16MHz.
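A quick way to see the RISC advantage in those figures is to divide each chip's MIPS rating by its clock frequency. The sketch below does just that using the numbers quoted above; mips_per_mhz is our own helper, and MIPS ratings are only a rough yardstick because they depend on the benchmark used.

```python
def mips_per_mhz(mips: float, clock_mhz: float) -> float:
    """Instructions completed per clock cycle, on average."""
    return mips / clock_mhz


arm2 = mips_per_mhz(4, 8)    # ARM 2: 4 MIPS at 8MHz
i386 = mips_per_mhz(5, 16)   # Intel 80386: 5 MIPS at 16MHz

print(arm2, i386, arm2 / i386)  # 0.5 0.3125 1.6
```

On these figures the ARM 2 did 60 per cent more work per clock cycle than the 80386.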

However, to see the advances the ARM architecture has enjoyed in a quarter century of development, we really need to draw some comparisons with today's offerings.

In terms of raw performance, today's top-end core is the Cortex-A15, which is based on the seventh generation architecture, known as ARMv7. Although the clock speed depends on the manufacturer (ARM Holdings itself doesn't manufacture any silicon), a figure of 2.5GHz is considered a likely ceiling, and at this speed it would clock up a performance of around 35,000 MIPS.

While this comes nowhere near the latest Intel Core i7, we're not comparing like with like. When expressed in terms of MIPS per core per MHz, although it still doesn't overtake the Core i7, the Cortex-A15 comes much closer - but even this is missing the point.

Indications are that the Cortex-A15 will consume less than a watt per core compared to tens of watts per core for the Core i7. In this respect it comes closer to the Intel Atom, but with much greater performance.
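As a rough illustration of how far the architecture has come, the following Python sketch splits the overall performance gain between the ARM 2 and the Cortex-A15 into the part due to higher clock speed and the part due to more work per clock cycle. It uses only the figures quoted above. Note that the 35,000 MIPS figure is taken as given for the whole chip, since the number of cores behind it isn't stated, so the per-clock gain may include a contribution from multiple cores.

```python
arm2_mips, arm2_mhz = 4, 8          # ARM 2 figures quoted earlier
a15_mips, a15_mhz = 35_000, 2_500   # Cortex-A15 at the likely 2.5GHz ceiling

raw_gain = a15_mips / arm2_mips     # overall speed-up
clock_gain = a15_mhz / arm2_mhz     # speed-up from clock frequency alone
per_clock_gain = (a15_mips / a15_mhz) / (arm2_mips / arm2_mhz)

print(raw_gain, clock_gain, per_clock_gain)  # 8750.0 312.5 28.0
```

The two factors multiply together to give the overall figure (312.5 x 28 = 8,750), so while most of the improvement comes from clock speed, a substantial part comes from doing more in every cycle.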