I compared GPT-5.1 to GPT-5 on ChatGPT, and now I don’t want to go back

The latest upgrade is what GPT-5 should have been


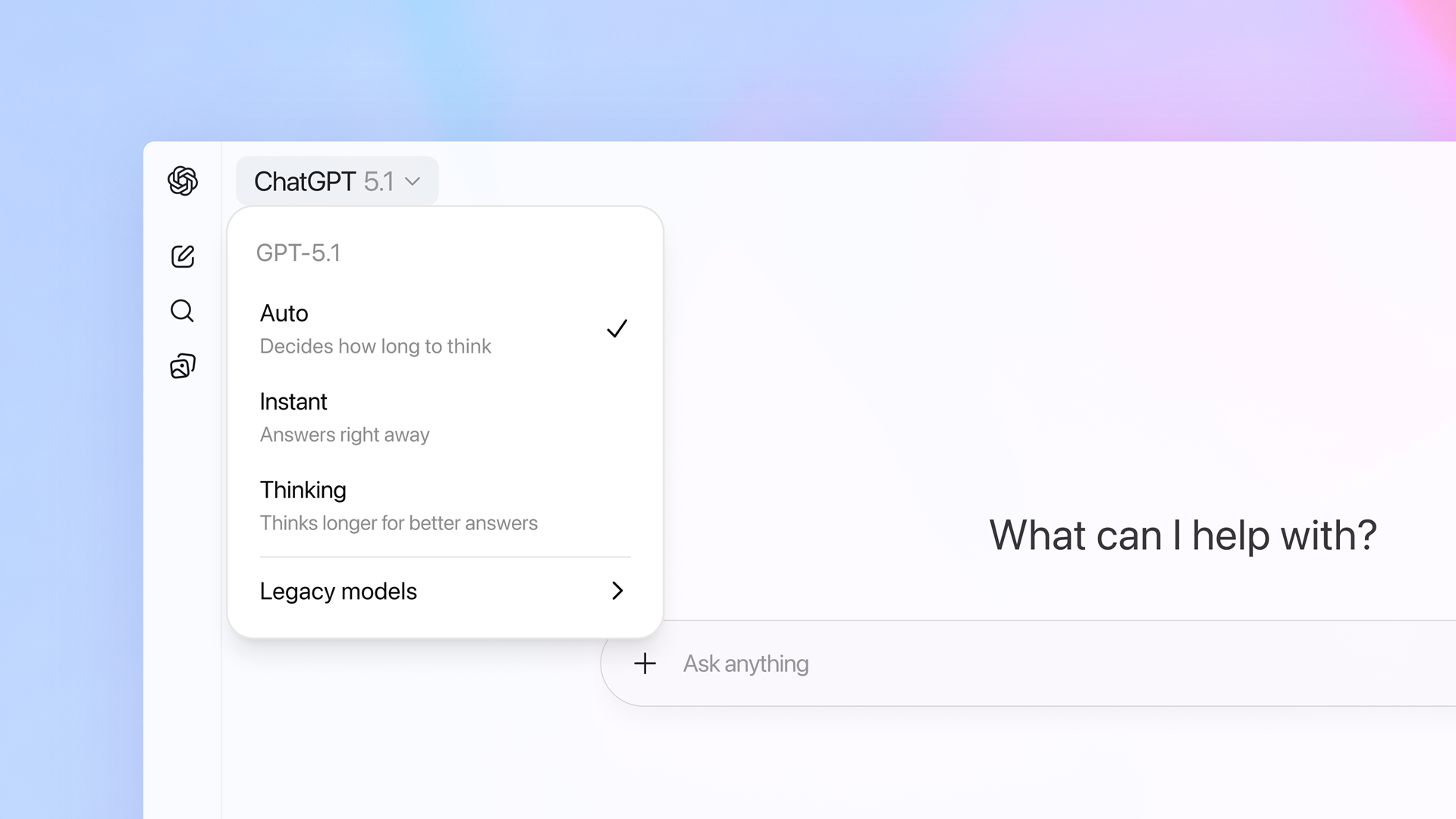

Some ChatGPT users greeted the introduction of GPT-5.1 as the default model for the chatbot with skepticism – after all, more than a few users complained loudly only a few months ago when GPT-5 debuted. But there's been no comparable furor this time around, probably because GPT-5.1 seems calculated to address those earlier complaints, which might never have arisen had OpenAI anticipated them with GPT-5.

GPT-5.1 isn't claimed to be a quantum leap in AI as GPT-5 was, but it does upgrade some key elements of the AI model, and ultimately is more responsive and better at imitating a pleasant personality. That much was clear from some impromptu use. I then decided to do a slightly more formal test of the areas where OpenAI claimed GPT-5.1 outperformed GPT-5.

I ran prompts crafted to test those upgraded capabilities through both GPT-5.1 and GPT-5. Here's how they did…

1. Instruction precision

GPT-5.1 is supposed to be excellent at following instructions, even more so than GPT-5. So I came up with some arbitrary rules to go with a request for a summary of the plot of The Lion King. It had to be exactly four sentences, and clear enough for a seven-year-old but not indulging in baby talk. And none of the sentences could begin with 'Simba' or 'The.'

GPT-5 almost got it right, but broke the rule against starting a sentence with 'The' in its second sentence when it wrote: "A young lion prince runs away from home after his father dies and he believes it’s his fault. The prince's evil uncle takes over the pride, treats the land and the other animals badly, and lets everything fall apart. With help from new friends, the prince grows up, learns he was tricked, and finds the courage to return. He faces his uncle, tells the truth, wins the fight, and becomes the new king who takes care of his family and their home."

GPT-5.1 nailed it, however. Not only did it follow the rules, but it managed to get some character names in, and more details without being more complex or longer.

It came up with: "In the Pride Lands of Africa, a young lion prince is learning from his father Mufasa how to be a good and brave king. After Mufasa is killed in a trap set by his jealous brother Scar, the cub is tricked into thinking it is his fault and runs away. With help from new friends like Timon and Pumbaa, he grows up, finds courage, and learns he cannot hide from his past forever. At last he returns home to face Scar, save his family, and take his place as the rightful king of the Pride Lands."
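For anyone who wants to repeat this kind of test without counting sentences by hand, here is a minimal sketch of a rule checker. The function names and the naive sentence splitter are my own assumptions for illustration, not anything OpenAI or ChatGPT provides, and the splitter would stumble on abbreviations like "Mr." that these summaries happen not to contain.

```python
import re

BANNED_OPENERS = ("Simba", "The")  # sentence openers the prompt ruled out


def split_sentences(text):
    """Naive split: a sentence ends at ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def check_summary(text, expected_sentences=4, banned=BANNED_OPENERS):
    """Return a list of rule violations; an empty list means every rule passed."""
    sentences = split_sentences(text)
    problems = []
    if len(sentences) != expected_sentences:
        problems.append(
            f"expected {expected_sentences} sentences, found {len(sentences)}"
        )
    for number, sentence in enumerate(sentences, start=1):
        # Compare only the first word, stripped of surrounding punctuation.
        first_word = sentence.split()[0].strip("\"'.,;:!?")
        if first_word in banned:
            problems.append(f"sentence {number} starts with '{first_word}'")
    return problems


# GPT-5's summary as quoted in this article.
gpt5_summary = (
    "A young lion prince runs away from home after his father dies and he "
    "believes it’s his fault. The prince's evil uncle takes over the pride, "
    "treats the land and the other animals badly, and lets everything fall "
    "apart. With help from new friends, the prince grows up, learns he was "
    "tricked, and finds the courage to return. He faces his uncle, tells the "
    "truth, wins the fight, and becomes the new king who takes care of his "
    "family and their home."
)

print(check_summary(gpt5_summary))  # ["sentence 2 starts with 'The'"]
```

The reading-level rule (clear for a seven-year-old, no baby talk) is a judgment call that a script like this can't settle, so that part still needs a human reader.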


2. Warmth and clarity

There is a whole range of new personality options for ChatGPT with GPT-5.1, but even without that option, the new model is supposed to reflect a warmer, more human communication style than its predecessor. It was one of the more common complaints about GPT-5, in fact. So, for the next test, I asked ChatGPT to: “Explain why people get motion sickness in a way that feels like a normal conversation, not a science textbook. Keep it under 150 words and avoid talking down to me.”

The idea was to find out if GPT-5.1 would understand the informal but informative response I was asking for.

GPT-5 didn't go over the word limit, but while accurate, its response over-explained a lot of the medical details of motion sickness, and did feel a lot like a textbook. GPT-5.1 went for a much more conversational answer, describing how your eyes and inner ears detecting conflicting things leads to nausea, even though it is "just your brain trying to make sense of conflicting data and not loving the experience."

3. Show your work

Despite being more human in its answers, GPT-5.1 is also supposed to be clearer and better than GPT-5 at explaining the logic underlying its answers. I came up with a variation on a classic math logic problem to see how they would perform. I asked both models to calculate how many gallons of gas I would use on a 142-mile trip in a car that gets 27 miles per gallon, along with the approximate cost at $3.79 per gallon.

GPT-5 computed correctly but took longer to do so than I'd asked for, and its very formal style implied the question was more than very basic arithmetic.

GPT-5.1 solved the task with sharper discipline. It not only correctly worked out the math, it placed it in the context of the real world where rounding numbers and close approximations are how most people approach small amounts of money or questions about filling up a gas tank, saying, "You can figure out the fuel by dividing the miles by the mileage: 142 ÷ 27 comes out to a little over 5.2 gallons. Multiply that by the price per gallon and you get about $19.70 in gas. Round it a bit for real-world wiggle room and you’re looking at roughly 5¼ gallons and around twenty dollars total."
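The arithmetic is easy to check yourself. This is a small sketch using only the numbers from my prompt; the function name is made up for illustration. Note that the quoted figure of about $19.70 matches multiplying the rounded-down 5.2 gallons by the price, while carrying the unrounded division through gives a touch more, so "around twenty dollars" holds either way.

```python
def trip_fuel_cost(miles, miles_per_gallon, price_per_gallon):
    """Return (gallons used, total cost) for a trip, without rounding."""
    gallons = miles / miles_per_gallon
    return gallons, gallons * price_per_gallon


gallons, cost = trip_fuel_cost(142, 27, 3.79)
print(round(gallons, 2))     # 5.26
print(round(cost, 2))        # 19.93

# Rounding the gallons down to 5.2 before multiplying, as in the quoted answer:
print(round(5.2 * 3.79, 2))  # 19.71
```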

4. Facial consistency

I looked to the image side of things for GPT-5.1 next, addressing how well ChatGPT would adhere to a prompt for an image-related request. I wanted the AI to produce alternate versions of a photo while keeping the person’s face absolutely identical. I asked the models to produce two edits of the photo of me on the left. I asked for "a different hairstyle" and to be put in "a full ringmaster costume," but to keep my face and everything else exactly the same.

GPT-5.1's production is on the left, and GPT-5 is on the right. You can see how, while both models went for a kind of mohawk, GPT-5 didn't keep too close to my own face. It's essentially someone else in a suit similar but not identical to mine, and a bow tie of another color entirely.

GPT-5.1 was much closer and managed to keep the clothes and body the same, as well as the face. The mohawk's realism is more debatable, but the AI did seem to follow the face request.

GPT-5 did better with my face for the ringmaster costume, but made some odd choices, like keeping my shirt the same and adding a slightly cartoony jacket. GPT-5.1 kept my face mostly the same, and at least did a better job replacing my clothes with a full costume.

5. Fashion sense

GPT-5.1 is not only supposed to be better at producing images that match requests, but at understanding pictures. So, I used the same photo and asked both models to classify the outfit as casual, business-casual, or dressy, and to explain their reasoning using only details visible in the image.

GPT-5 approached the task with caution. It correctly noted the blazer, the dress shoes, and the coordinated shirt-and-bow-tie combination, and it leaned toward calling the outfit business-casual. The model hesitated internally, though, and its description suggested uncertainty as it tried to decide where the bow tie landed on the spectrum. It produced a defensible answer, but one that felt like it was second-guessing itself.

GPT-5.1, by contrast, delivered a clearer and more confident interpretation. It identified the structured jacket, the formal footwear, the tailored fit, and the polished nature of the bow tie. From the image alone, it classified the outfit as dressy, emphasizing the formal signals present throughout the clothing. It respected the rule about not assuming anything invisible and stayed firmly within the boundaries of what the photograph revealed. The explanation was detailed but concise, and GPT-5.1 showed a more focused visual reasoning style that made its conclusion feel grounded.

The most striking improvement from GPT-5 to GPT-5.1 was its consistency. It adhered to word counts, honored sentence limits, respected image-based constraints, and navigated tone with understated but noticeable finesse. GPT-5 did well, but GPT-5.1 did better, and it did so in ways that accumulated across tasks.

Still, it's mostly what might be termed incremental improvement. These are meaningful steps, but not a leap into the uncanny or the surreal. It raises questions about what comes next. If GPT-5.1 is the model that tightens screws and calibrates nuance, GPT-6 might be a whole new engine. In that sense, GPT-5.1 is a reassuring signpost: OpenAI is getting ready for something larger.

Even so, GPT-5.1 stands as the better choice, and fewer people, myself included, are likely to abandon it for its earlier iteration, as many did when GPT-5 came out. It doesn’t reinvent the wheel; it simply rolls the wagon along more smoothly. And sometimes that’s the upgrade that matters most.

These differences don’t mean GPT-5 is obsolete. It is still a remarkably capable model that delivers strong performance across a variety of tasks. But GPT-5.1 builds on that foundation with refinements that make it feel like a better choice for real-world uses.


Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.
