I get why some people are suddenly freaking out about AI agents in Windows 11 – I'm worried, too, but let's not panic just yet

Yet more AI controversy is on the boil...

Windows 11 is in the firing line once again, and this time some recent updates to documentation around AI agents have provoked fresh concerns about how these entities will work in the OS – and what threats they might pose.

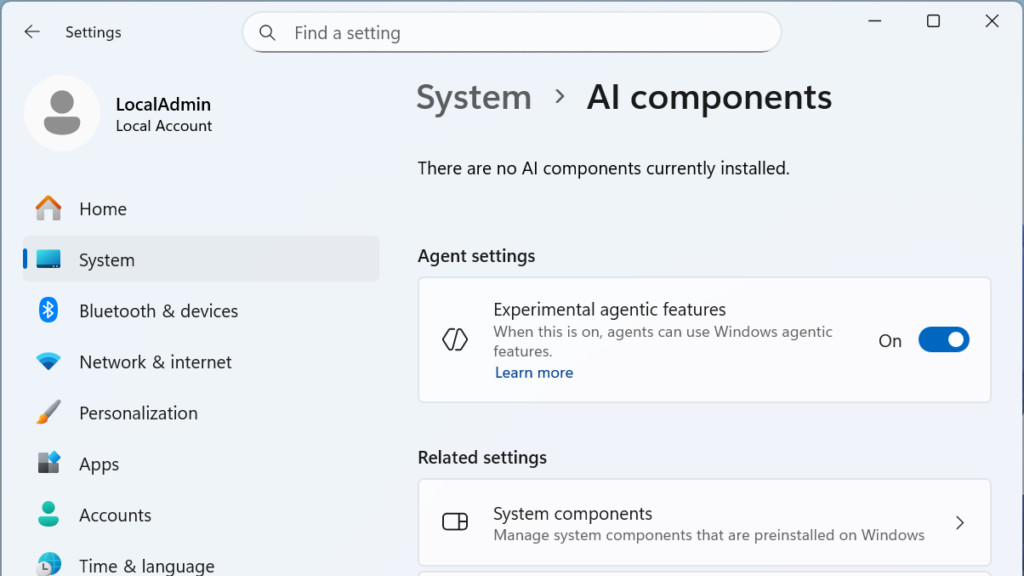

This latest controversy actually stems from an old support document about 'Experimental Agentic Features' (well, not that old – it was published in October 2025), which Microsoft updated a couple of weeks ago.

As Windows Latest highlighted (via PC Gamer), the documentation was refreshed when Microsoft rolled out the switch to turn on experimental agentic features in Windows 11 test builds (which happened in preview version 26220.7262 in the Dev and Beta channels). That also kicked off testing of the first agent, Copilot Actions, which can work with files on your PC to, say, organize a collection of photos and delete duplicates.

The reality of Copilot Actions officially going live seems to have got people looking more closely at the fine print, some parts of which are vaguely ominous, while others are more alarming.

Notably highlighted in the media coverage are warnings that AI models "occasionally may hallucinate and produce unexpected outputs", meaning they can get things wrong, or indeed talk absolute garbage. We knew that already, though; that's simply the nature of AI, and specifically of an LLM (large language model), which is a clearly fallible entity.

Also flagged is the warning about AI agents potentially introducing "novel security risks, such as cross-prompt injection", and this is where it gets a lot more worrying.

Microsoft has, of course, been banging on about these possible new attack vectors that might be exploited via such AI systems since last year. In other words, the systems it has been creating for Windows 11 have very much been built with these threats in mind, so hopefully its defenses will be tight enough to deflect any such attempted intrusions.

As Windows Latest points out, the way AI agents are boxed away in Windows 11 seems pretty watertight. Each agent lives in an 'agent workspace', effectively as a separate local user with a distinct account that is completely walled off from the user's account, and its file access is limited to the permissions granted (aside from a handful of default folders).

This should keep these agents contained, and even if compromised, they should theoretically have only limited means of exploiting the system. Still, the proof of the pudding will be in how this system fares in real-world use, and if we look at the collapsed cake that was Recall (or at least this AI feature's initial design), that doesn't give us much confidence.

Surely Microsoft has learnt from that episode, right? Well, I certainly hope so, and the security planning behind agent workspaces does seem suitably thorough, expansive and much more convincing overall.

Read it and weep?

However, as PC Gamer notes, the biggest issue concerns those novel security risks (cross-prompt injections) and the potential nastiness they could enable, such as data exfiltration (stealing your files). In its recent revision of this document, Microsoft has added a new caution on this front: "We recommend you read through this information and understand the security implications of enabling an agent on your computer."

That's the most sinister sentence in the document's security content. What is it really saying? Is this some sort of get-out clause for Microsoft, leaving you to weigh up the risks on your own by poring through documents?

Now, you may think that's reading too much into it, and that's fair enough, but it has certainly set alarm bells ringing in the articles (and online comments) that are now popping up around this.

It doesn't feel very comforting to read that, but then again, this is early testing for AI agents. In fact, Copilot Actions is purely experimental right now, so another way of looking at this would be: what do you expect? Opt in now and there probably are some very real risks involved. Just imagine you were using an 'experimental' operating system and it went down in flames, taking your files with it in the ensuing fireball. You'd only have yourself to blame, wouldn't you?

So, the message is to proceed at your own risk, which at this experimental stage is fair enough really. My actual worry, though, is whether, once these AI agents are fully implemented in the finished version of Windows 11, we can trust that Microsoft will have done so in a watertight way.

What if there's a hole in this system somewhere? Given that Microsoft seems to break even basic things in Windows 11 with some regularity, I can see why folks might be concerned here. I'm nervous myself, after all, and if something does go wrong, it could be disastrous for the users running AI agents, and for Microsoft's reputation, too.

The software giant can't afford an episode where AI goes rogue in some wild way, because it would be difficult to win back trust in Windows 11's agents if something like that happened.

Darren is a freelancer writing news and features for TechRadar (and occasionally T3) across a broad range of computing topics including CPUs, GPUs, various other hardware, VPNs, antivirus and more. He has written about tech for the best part of three decades, and writes books in his spare time (his debut novel - 'I Know What You Did Last Supper' - was published by Hachette UK in 2013).