This robot knows the gentle caress of a human touch

Well, 60 percent of it...

A soft stroke of the upper arm. A nudge under the table. A punch in the face. There are many ways that humans communicate complex emotions with a simple touch, but robots have always been mostly oblivious to these social cues.

Now, however, engineers at the University of Twente in the Netherlands are developing a system designed to recognise different types of social touch, and figure out what they might mean. They hope that one day it could help robots perform better in social situations.

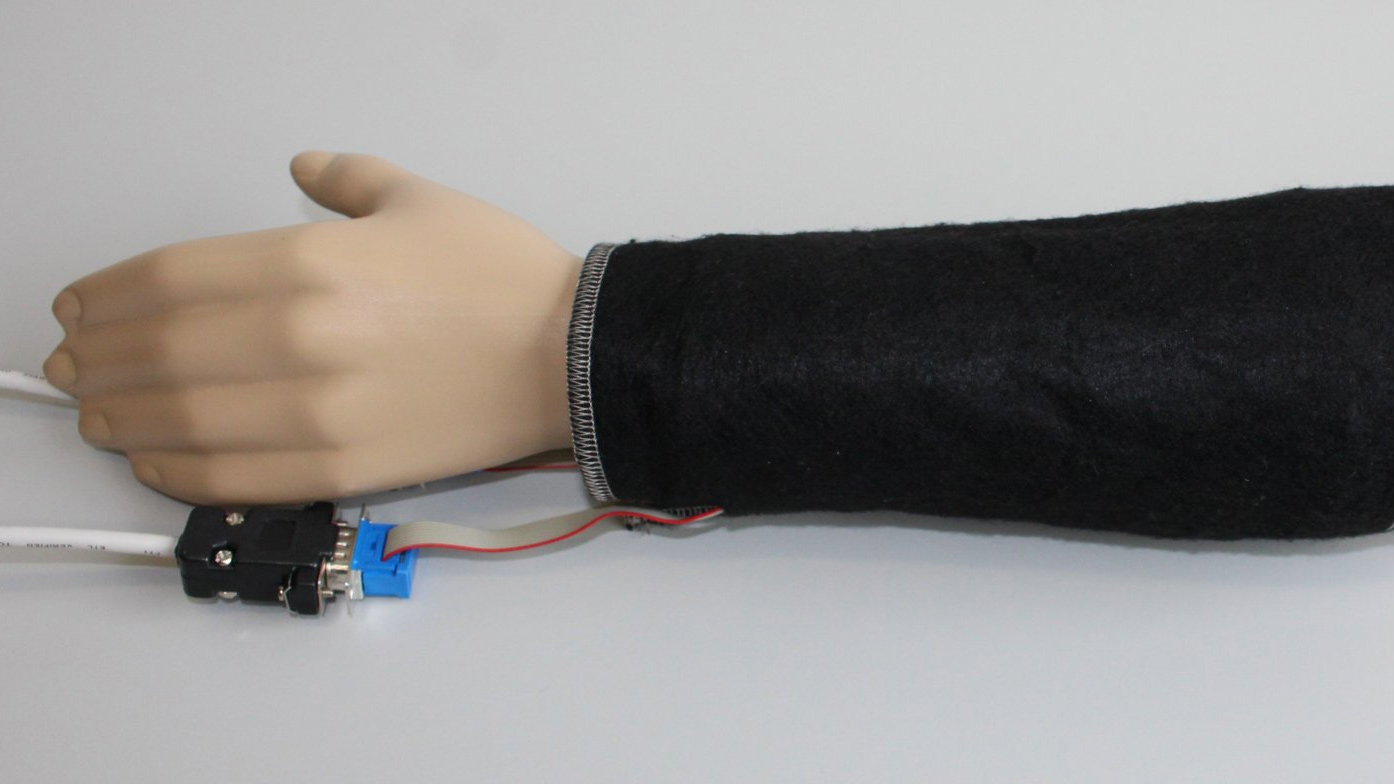

Using a prototype that combines a mannequin's arm with 64 pressure sensors, Merel Jung and her team have identified four stages a robot needs to go through to handle a touch properly. It must perceive the touch, recognise it, interpret it, then respond in the correct way.

Rubbing gently

So far, most of the work has been on the first two of those stages – perceiving and recognising. In testing, the disembodied arm was able to recognise 60 percent of almost 8000 different touches. Those touches covered 14 different kinds of touch, at three different levels of intensity.

"Sixty percent does not seem very high on the face of it, but it is a good figure if you bear in mind that there was absolutely no social context, and that various touches are very similar to each other," wrote a spokesperson from the University of Twente.

"Possible examples include the difference between grabbing and squeezing, or stroking roughly and rubbing gently."

A slap in the face

Another hurdle for the robot was that the touchers weren't given any instructions on how to 'perform' their touches, and the robot wasn't able to learn how they operated. "In similar circumstances, people too would not be able to correctly recognize every single touch," added the spokesperson.

Jung has now moved on to the next stage of the project – figuring out how the robot should interpret touches in a social context. Eventually, it's hoped that by looking at the context of the situation, robots will be able to figure out the difference between a stroke of the cheek and a slap in the face.

The full details of the research can be found in a paper in the Journal on Multimodal User Interfaces.