The future of 3D internet and computer interfaces

When we talk about a 3D internet, we don't mean HTML web pages designed in 3D - designers are doing that already.

Examples like White Void's portfolio add an eye-catching third dimension to an ordinary menu, the Dasai Creative Engineering website features core navigation options mapped onto a rotatable sphere, and the Swell 3D website is rendered in anaglyphic 3D and requires a set of red/cyan glasses to view properly.

Whether this approach is effective is up for debate. The effects on the White Void and Dasai sites need a hefty dose of Flash to function. A 2D website wouldn't be as pretty, but it would load quicker and be much simpler to navigate.

Augmented reality

Perhaps the future of a 3D internet is augmented reality. AR is currently a novelty. It describes applications that use a device's built-in camera, together with sensors such as GPS and a compass, to work out where you are and what you're looking at, then augment the view with relevant web data. In other words, it's a glimpse into an internet that overflows into the real world.

AR applications deliver real place data in real time, tapping into existing databases and assets on the web. Wikitude and Cyclopedia, for example, let you see London Bridge through a camera and read the relevant Wikipedia entry onscreen. Star Walk annotates the night sky for you, while Quest Visual's Word Lens visually translates written languages as you watch, in a way that feels suspiciously like magic.

"AR is nothing more than a user interface," says Otavio Good, founder of Quest Visual. "In the case of Word Lens, everything that's being done could be done with a dictionary if you had time, but Word Lens uses AR to make looking up words effortless and fun."

Point a phone running the Acrossair browser at a high street, and it will show you the nearest restaurants and highlight those with the best reviews. It doesn't take much imagination to see where this technology is going. In the future, you might be able to see whether a shop has the product you want, or which pub your friends are in.
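To give a feel for what a location-based AR browser has to work out, here is a minimal Python sketch. It is not how Acrossair or any other real app is implemented. It simply assumes the phone can supply a GPS position and a compass heading, and that each restaurant comes with coordinates and a review score. The `Place` class, the `visible_places` function, the 60-degree field of view, the 500m range and the 4.0 rating threshold are all hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


@dataclass
class Place:
    """Hypothetical point of interest; rating is an assumed 0-5 review score."""
    name: str
    lat: float
    lon: float
    rating: float


def distance_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres, using the haversine formula."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial compass bearing from point 1 to point 2 (0 = north, 90 = east)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dl = math.radians(lon2 - lon1)
    y = math.sin(dl) * math.cos(p2)
    x = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dl)
    return math.degrees(math.atan2(y, x)) % 360


def visible_places(places, lat, lon, heading, fov=60.0, max_m=500.0, top_rating=4.0):
    """Return (place, distance, offset, highlighted) tuples, nearest first.

    Only places within max_m metres and inside the camera's assumed
    horizontal field of view are kept. 'offset' is the signed angle from
    the centre of the view, which an app could map to a screen position.
    """
    results = []
    for place in places:
        d = distance_m(lat, lon, place.lat, place.lon)
        if d > max_m:
            continue
        # Signed angle between the camera heading and the place, in [-180, 180).
        offset = (bearing_deg(lat, lon, place.lat, place.lon) - heading + 180) % 360 - 180
        if abs(offset) <= fov / 2:
            results.append((place, d, offset, place.rating >= top_rating))
    return sorted(results, key=lambda r: r[1])
```

Calling `visible_places(places, lat, lon, heading)` with the phone's current position and compass heading returns only the places in front of the camera, sorted by distance, each flagged if its rating meets the threshold. A real browser would also need to cope with sensor noise and draw labels over the live camera image, which this sketch leaves out.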

Google Goggles

It's no surprise that Google has thrown its weight behind AR with its Goggles and Shopper apps. Snap a photo of a product or object, and Google Goggles will attempt to identify it and return relevant search results. Do the same in Google Shopper for a fast price comparison.

The technology can be hit-and-miss. In fact, the process can often take longer than typing a query into a search box. Google Goggles is certainly handy for products, shops and some places, but it's unlikely to be useful if you want to find information about concepts or ideas. For example, how would you use augmented reality to search for information on augmented reality?

While the technology is often used for visual searches, it's not a replacement for search. Instead, it can change the way we interact with real-world objects and places.

"Augmented reality is a very natural user interface for some tasks," adds Otavio Good. "The next steps for AR will be to more seamlessly integrate the real world with information people care about."

Autonomy's Aurasma project promises more integration of the real and digital worlds. If its YouTube promo is any indication, it will let you point your phone's camera at an advert or still image and see it come to life with an animation or a video.

"The technology needed to make AR apps useful has arrived in the last year, in the form of capable smartphones," explains Otavio Good. "This year, phones will get dual-core CPUs, more powerful GPUs, and more capable sensors. AR apps can use these to make more creative apps and to improve the quality of existing ones."

3D controls

The way we interact with computers hasn't changed for almost 30 years. That's not to say that inventors haven't tried to revolutionise PC interaction. We've seen data gloves, VR helmets, 3D mice, trackballs, squeezable balls and even brainwave-powered headsets. Few devices have genuinely threatened the keyboard and mouse though, both of which are perfectly suited to today's 2D interfaces.

But things are changing. The introduction of the touchscreen on mobile devices has given rise to new UIs that are almost invisible to users. "On a traditional desktop PC you move the mouse to move the pointer and highlight the photo you want to see," explains Gabriel White of specialist device user experience consultancy Small Surfaces. "With a natural user interface (like a touchscreen), the user simply reaches out and touches the photo they want."

We're now seeing laptops and desktop PCs with touch-sensitive displays, and Windows 7 supports touch as standard. Microsoft has expanded the multi-touch idea with Surface, one of the world's most expensive coffee tables.

Beyond touch, we need to look to games consoles to see the ideal controllers for future 3D interfaces. Thanks to the PlayStation EyeToy and Move, Nintendo Wii and Microsoft Kinect, millions of people have been introduced to the concept of spatial or gesture-based control - and they like it.

The advantage of the Wii's system is that it needs little explanation. You just swing an invisible tennis racket or chop with an imaginary sword. There are no complicated combos to learn, and the interface is practically invisible. We say 'practically' because current systems are still fiddly when using menus or entering data.

Remember the slick, gesture-based interface in the film Minority Report? What Tom Cruise and a fat special effects budget faked, MIT scientists actually built with an Xbox 360 and a hacked Kinect camera.

Gesture control seems the ideal replacement for the ageing mouse. As for the keyboard, whether the future is a physical peripheral or a virtual projection, there's life in QWERTY yet.

3D computer interfaces

Two-dimensional interfaces have proved their worth from the earliest punched cards through to the DOS prompt and the Windows desktop. 2D works - it's fast and effective. But it hasn't stopped the development of 3D UIs like MeeGo, SPB Shell 3D for Android and BumpTop, even though many are simply 2D systems with a 3D sheen.

Is the next phase of UI development purely cosmetic? UI specialist Gabriel White doesn't think so. "As 3D interfaces evolve, there will be new paradigms for interaction," he says. "Once depth is represented in a UI, it's possible to do fascinating things: organising UI elements spatially (rather than in categories and lists), and tangible manipulation of objects allow us to continue to make user interface more natural (pushing and pulling objects, not just swiping and zooming)."

Beyond the desktop

While original filesystems used a tree-like organisational structure, we're now locked into the idea of a desktop with files organised visually on top of it. This setup feels familiar; it's a structured environment that we can identify with, because it resembles a real-world desk.

But like any desk, a virtual desktop can get cluttered, which is why BumpTop introduced the idea of stacking files on top of one another to create a 3D desktop. Google bought the company in 2010, and has plans to incorporate parts of the 3D UI into Android 3.0.

Again, do we actually need a 3D interface? If so, what do we need it for? There's an argument that a 3D UI would enable us to do things that aren't possible in 2D environments.

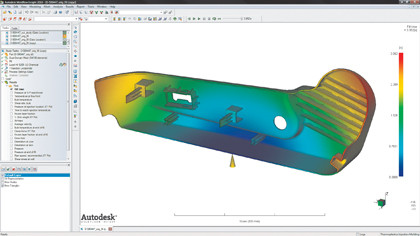

James McRae, an Autodesk researcher at the University of Toronto, points to large-scale data visualisation. "Across many disciplines, you have datasets emerging whose geometry is 3D by nature," he says. "Examples might be the solar system, or the human body."

Windows 8

With increased computer power, rendering a 3D display is easy, but interacting with it is hard. Rumours suggest that Windows 8 could have a 3D element - an optional graphical interface called Wind. It could include Kinect support for gesture tracking, or to enable logins via facial recognition. It might even feature colour-coded info-bubbles, which are scaled according to their importance.

Microsoft's Chief Research and Strategy Officer Craig Mundie showed off such a 3D concept UI earlier this year.

"There have been many failed attempts to bring the third dimension to UI experiences," says Gabriel White. "Think of the 1990s, when VRML was all the rage. What we learned from all this is that making 3D interfaces isn't about simulating the real world inside a PC. Rather it's about leveraging our real-world cognitive abilities to create compelling, natural and direct interactions that rely less on explicit reasoning and more on intuition and spatial memory."

-------------------------------------------------------------------------------------------------------

First published in PC Plus Issue 310. Read PC Plus on PC, Mac and iPad
