iPhone 14 camera explained: Photonic Engine, quad-pixel sensors and more

Explaining this generation's big camera upgrades

What’s new in the iPhone 14 family for photography fans? The top line is that the iPhone 14 and iPhone 14 Pro phones both get updated primary sensors. Plus, there’s a new processing pipeline called the Photonic Engine that promises better low-light results.

Front cameras have been changed and there’s an ultra-stabilized Action video mode for particularly tricky shoots too.

We’re taking a closer look at all the new features across the iPhone 14 family's cameras, so let’s start with the iPhone 14 Pro’s new primary snapper.

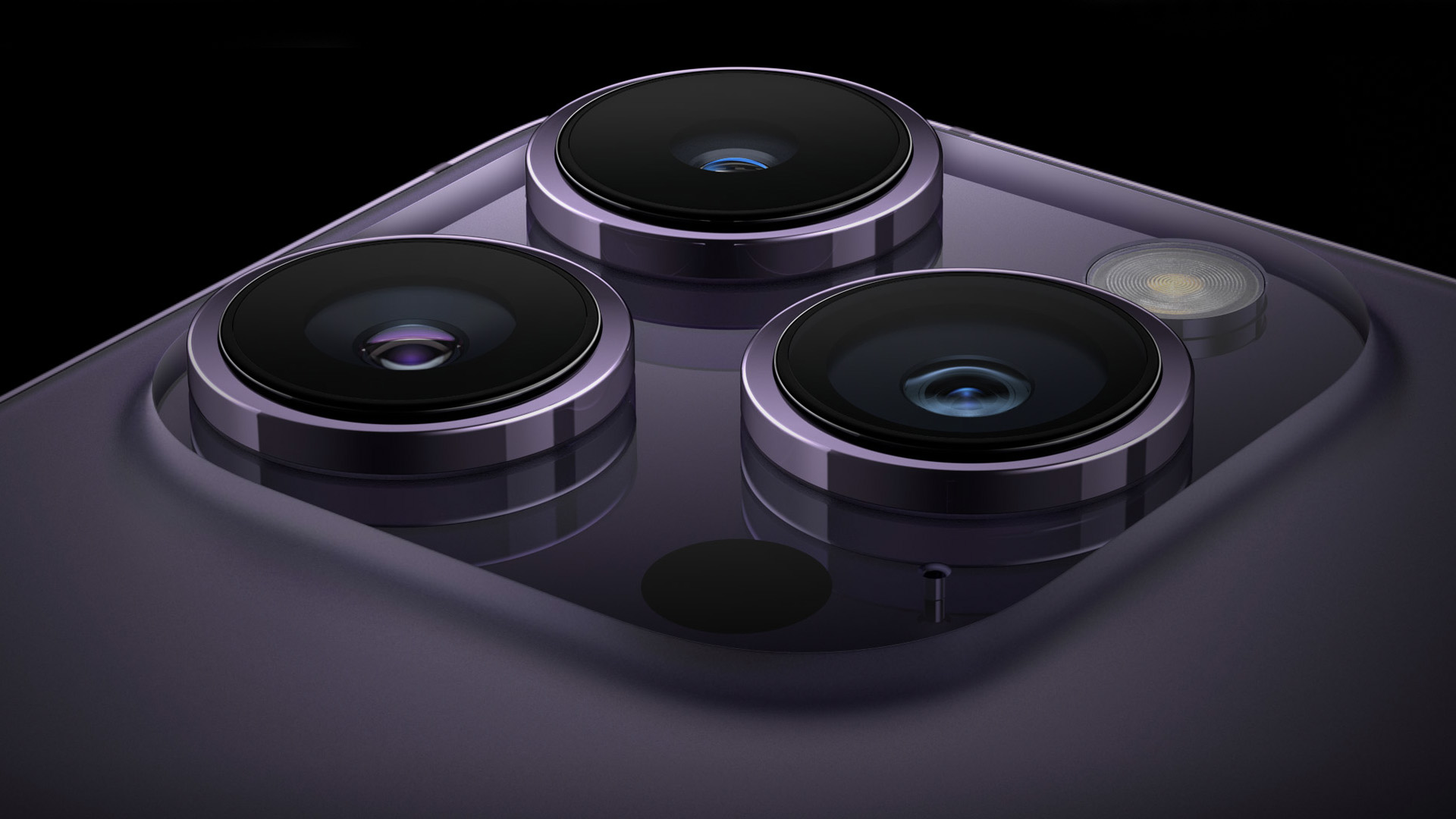

More pixels for the 14 Pros

The iPhone 14 Pro and Pro Max feature a 48-megapixel sensor with an f/1.78 lens. This is the first time an iPhone has used a pixel-binning sensor, meaning as standard it will shoot 12MP images, just like other iPhones.

Combine four pixels and they effectively act as a larger 2.44 micron pixel. It works this way because the color filter above the sensor groups four pixels in red, green and blue clusters.
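To make the binning idea concrete, here is a minimal Python sketch. It assumes a simplified model in which each 2x2 group of same-color pixels is simply averaged into one value. Apple has not published how its sensor or pipeline actually combines them, so the function names and the 1.22 micron native pixel size (half of the 2.44 micron figure above) are illustrative assumptions.

```python
def bin_2x2(raw):
    """Average each 2x2 block of a 2D grid into one value.

    A simplified stand-in for quad-pixel binning, not Apple's method.
    """
    rows, cols = len(raw), len(raw[0])
    if rows % 2 or cols % 2:
        raise ValueError("grid dimensions must be even")
    binned = []
    for r in range(0, rows, 2):
        out_row = []
        for c in range(0, cols, 2):
            total = (raw[r][c] + raw[r][c + 1]
                     + raw[r + 1][c] + raw[r + 1][c + 1])
            out_row.append(total / 4)
        binned.append(out_row)
    return binned


def binned_pixel_size(native_size_um):
    """A 2x2 group spans twice the width and height of a single pixel."""
    return native_size_um * 2


def binned_megapixels(native_mp):
    """Four native pixels collapse into one output pixel."""
    return native_mp / 4


# Assumed native pixel size of 1.22 microns, derived from 2.44 / 2.
print(binned_pixel_size(1.22), "micron effective pixel")
print(binned_megapixels(48), "MP output")
```

The point of the sketch is the trade: resolution drops by a factor of four, while each output pixel draws on four times the light-collecting area.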

Pixel binning sensors have been around in Android phones for years. The first we used was not an Android, though. It was the Nokia 808 PureView, from 2012.

The quad pixel arrangement of the iPhone 14 Pro means this is not a “true” 48MP sensor in one sense, but you can use it as such. Apple’s ProRAW mode can capture 48MP images, using machine learning to reconstruct an image and compensate for the fact we’re still dealing with 2x2 blocks of green, blue and red pixels.


A similar method is used for the iPhone 14 Pro’s 2x zoom mode, for “lossless” 12MP images. This complements the separate 3x optical zoom sensor, which shares its hardware with last year’s models.
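The arithmetic behind that 12MP figure can be sketched in a few lines of Python. A 2x zoom taken as a central crop keeps half the frame's width and half its height, so a quarter of the pixels. The 8064 x 6048 frame size below is an assumed example of a 48MP 4:3 image, and the function name is hypothetical.

```python
def center_crop_box(width, height, zoom_factor):
    """Return (left, top, crop_width, crop_height) for a central crop.

    A zoom factor of 2 keeps half the width and half the height.
    """
    crop_w = int(width / zoom_factor)
    crop_h = int(height / zoom_factor)
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, crop_w, crop_h


# Assumed 48MP 4:3 frame size, for illustration only.
left, top, crop_w, crop_h = center_crop_box(8064, 6048, 2)
print(crop_w, "x", crop_h, "=", round(crop_w * crop_h / 1e6, 1), "MP")
```

This only covers the pixel count. The reconstruction step that turns the cropped quad-pixel data into a full-color image is not modelled here.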

A primer on the iPhone 14's camera

The standard iPhone 14 series is starting to look like one of the best value high-end smartphones around. It starts at $799 but gets you some of the most important camera traits of the pricier iPhone 13 Pro Max from last year, plus some new stuff besides.

The iPhone 14 and iPhone 14 Plus – the new larger model – have two rear cameras. As in the iPhone 13, they are both 12MP sensors.

While Apple has not confirmed this, it looks like the iPhone 14 gains the primary camera used in the iPhone 13 Pro Max. It has large 1.9 micron sensor pixels, a super-fast f/1.5 lens and sensor-based stabilization.

Some earlier digging revealed this sensor to be the Sony IMX703, a 1/1.65-inch sensor. It’s a straight upgrade over the iPhone 13’s IMX603, and this upgrade path follows a familiar pattern. The iPhone 12 Pro Max used the IMX603 a year before the more affordable iPhone 13 got it.

The second camera is a 12MP ultra-wide with an f/2.4 lens. This is, unfortunately, the same spec as the iPhone 13’s secondary camera.

Photonic Engine

However, this does not mean the iPhone 14 ultra-wide will take the same kind of images as the iPhone 13's. Apple is relying on a different way to upgrade image quality. It’s called the Photonic Engine.

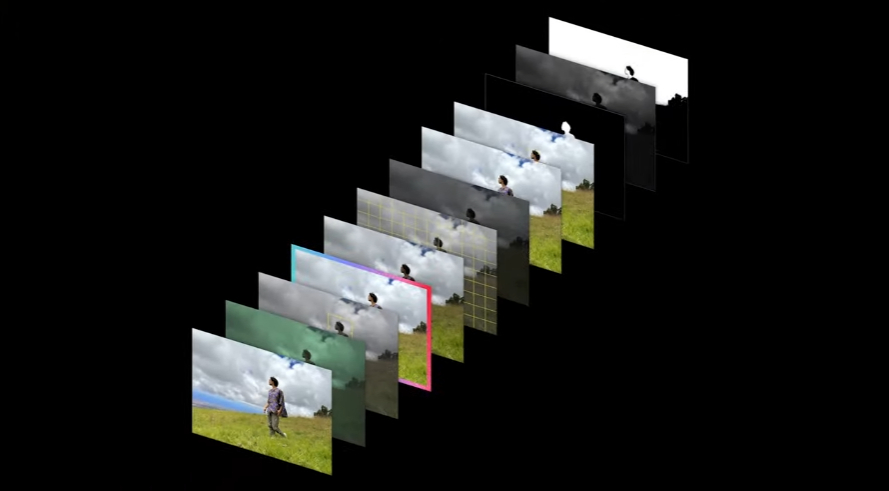

This is Apple’s name for an evolution of the Deep Fusion process it introduced in 2019. To understand the changes made, we have to pay close attention to the words Apple uses to describe it.

'We’re applying Deep Fusion much earlier in the process, on uncompressed images,' says Kaiann Drance, VP of iPhone Product Marketing.

This suggests the original version of Deep Fusion deals in compressed exposures, to allow the process to work more quickly, and reduce strain on the processing pipeline. In this case we’re not talking about Deep Fusion working with RAW files versus JPGs, but more likely the bit depth of the images.

That thesis is supported by another thing Drance says, that it “enables rendering of more colors.” If Deep Fusion images are 10-bit, their palette consists of 1.07 billion colors. At 12-bit, this extends to 68 billion colors. Of course, the final perception of an image’s actual color is going to rely far more on tuning than such a palette extension, as impressive as it sounds on paper.
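Those palette figures come from simple arithmetic: with three color channels, the number of possible colors is 2 raised to the power of three times the bit depth. A quick Python check, with a hypothetical function name:

```python
def palette_size(bits_per_channel, channels=3):
    """Number of distinct colors for a given bit depth per channel."""
    return 2 ** (bits_per_channel * channels)


print(palette_size(10))  # 1073741824, about 1.07 billion
print(palette_size(12))  # 68719476736, about 68.7 billion
```

The 10-bit and 12-bit depths are the article's working guess, not figures Apple has confirmed.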

Drance also says the Photonic Engine has a massive impact on low-light photo quality, claiming a “2x” improvement for the ultra-wide and front cameras and a “2.5x” boost for the primary camera.

Of course, we don’t know if that last figure also includes the improvements caused by a faster lens and larger sensor, or what metric is used for this comparison. It promises to be an improvement regardless.

Apple’s visual representation of the Photonic Engine pipeline shows 12 stages, only four of which are the Deep Fusion part (although 2019 reporting on Deep Fusion suggested it actually involves 9 exposures).

What are the rest? Apple did not go into that much detail, but the graphic clearly shows stages where the subject is identified and isolated. This allows for separate treatment of, say, a person and the background behind them.

Second-generation sensor-shift OIS

The iPhone 13 already had sensor stabilization. This is an excellent piece of phone camera technology. Earlier iPhones already featured OIS (optical image stabilization), where a motor counters camera movements by tilting the lens slightly to compensate.

Sensor-shift stabilization moves, you guessed it, the entire sensor, rather than just the lens. And tests show it’s particularly good at eliminating the kind of motion you get when, for example, shooting inside a moving car.

Common sense suggests the iPhone 14 relies on the same sensor-shift stabilization hardware as the iPhone 13 Pro Max. It appears to have the same camera, after all. However, it does have a new feature called Action mode, also found in the Pro models.

This is intended for activities you might otherwise use a GoPro or gimbal for. Apple showed off some guy running during its launch to demonstrate and, as expected, the footage looked reassuringly smooth.

Action mode appears to use a combination of sensor-shift stabilization and a very significant crop. This crop is the fuel for software stabilization. When the camera “sees” more than is in the final video, the phone can move that cropped section around the full sensor view to negate actual motion in the final clip. This is why GoPro footage looks so smooth — a super-wide lens with plenty of spare image information to work with.
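The crop-and-shift idea can be sketched in Python. This is a generic illustration of crop-based electronic stabilization, not Apple's Action mode algorithm, which has not been published. The function name, frame sizes and shift values are all hypothetical.

```python
def stabilized_crop_origin(frame_size, crop_size, scene_shift):
    """Return the top-left corner of the crop window for one frame.

    scene_shift is how far (in pixels) the scene has moved within the
    full frame because of camera shake. The crop window follows the
    scene by the same amount, limited by the spare margin around it.
    """
    frame_w, frame_h = frame_size
    crop_w, crop_h = crop_size
    margin_x = (frame_w - crop_w) // 2
    margin_y = (frame_h - crop_h) // 2
    dx, dy = scene_shift
    # Clamp the correction so the window never leaves the full frame.
    shift_x = max(-margin_x, min(margin_x, dx))
    shift_y = max(-margin_y, min(margin_y, dy))
    return margin_x + shift_x, margin_y + shift_y


# Hypothetical numbers: a 4000x3000 readout with a 3000x2250 output crop.
print(stabilized_crop_origin((4000, 3000), (3000, 2250), (120, -40)))
print(stabilized_crop_origin((4000, 3000), (3000, 2250), (900, 0)))
```

The second call shows the limit of the technique: once the shake exceeds the spare margin, the correction is clamped. That is why a heavier crop leaves more room to smooth out bigger movements.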

Autofocus selfies & that new flash

All four iPhone 14 models also get an upgraded front camera. It’s a 12MP sensor with an f/1.9 lens, one that's faster than the f/2.2 lenses of the iPhone 13 family.
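How much faster is f/1.9 than f/2.2? The light reaching the sensor scales with the inverse square of the f-number, so a rough comparison takes only a few lines of Python. This sketch ignores lens transmission losses and any sensor differences, and the function names are hypothetical.

```python
import math


def light_gain(old_f_number, new_f_number):
    """Ratio of light gathered by the new aperture versus the old one."""
    return (old_f_number / new_f_number) ** 2


def gain_in_stops(old_f_number, new_f_number):
    """The same ratio expressed in photographic stops (doublings of light)."""
    return math.log2(light_gain(old_f_number, new_f_number))


print(round(light_gain(2.2, 1.9), 2), "times the light")
print(round(gain_in_stops(2.2, 1.9), 2), "stops")
```

By this measure the new lens gathers roughly a third more light, a bit under half a stop.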

The big change is in focusing, though. Most selfie cameras use a fixed focus lens, designed to appear sharp at arm’s length. The iPhone 14 selfie snapper now has autofocus, allowing for much closer selfies and more consistent sharpness at more distances.

Apple describes it as a hybrid focus system, meaning it uses either phase detection focus pixels or classic contrast detection, based on the situation.

The flash on the back has had a redesign too. Apple made a lot of noise when it introduced its True Tone flash in 2013. The idea was that the color of the flash’s light could be changed to suit the scene.

This new version has nine LEDs, allowing for more gradations in the flash behaviour and intensity to suit subjects that are nearer or further away. It sounds smart and powerful, but it won’t avoid those harsh, unflattering shadows you get with a small LED flash.

You can pre-order the iPhone 14 and iPhone 14 Pro phones today.

Andrew is a freelance journalist and has been writing and editing for some of the UK's top tech and lifestyle publications including TrustedReviews, Stuff, T3, TechRadar, Lifehacker and others.