Is multi-core the new MHz myth?

How many cores do we really need?

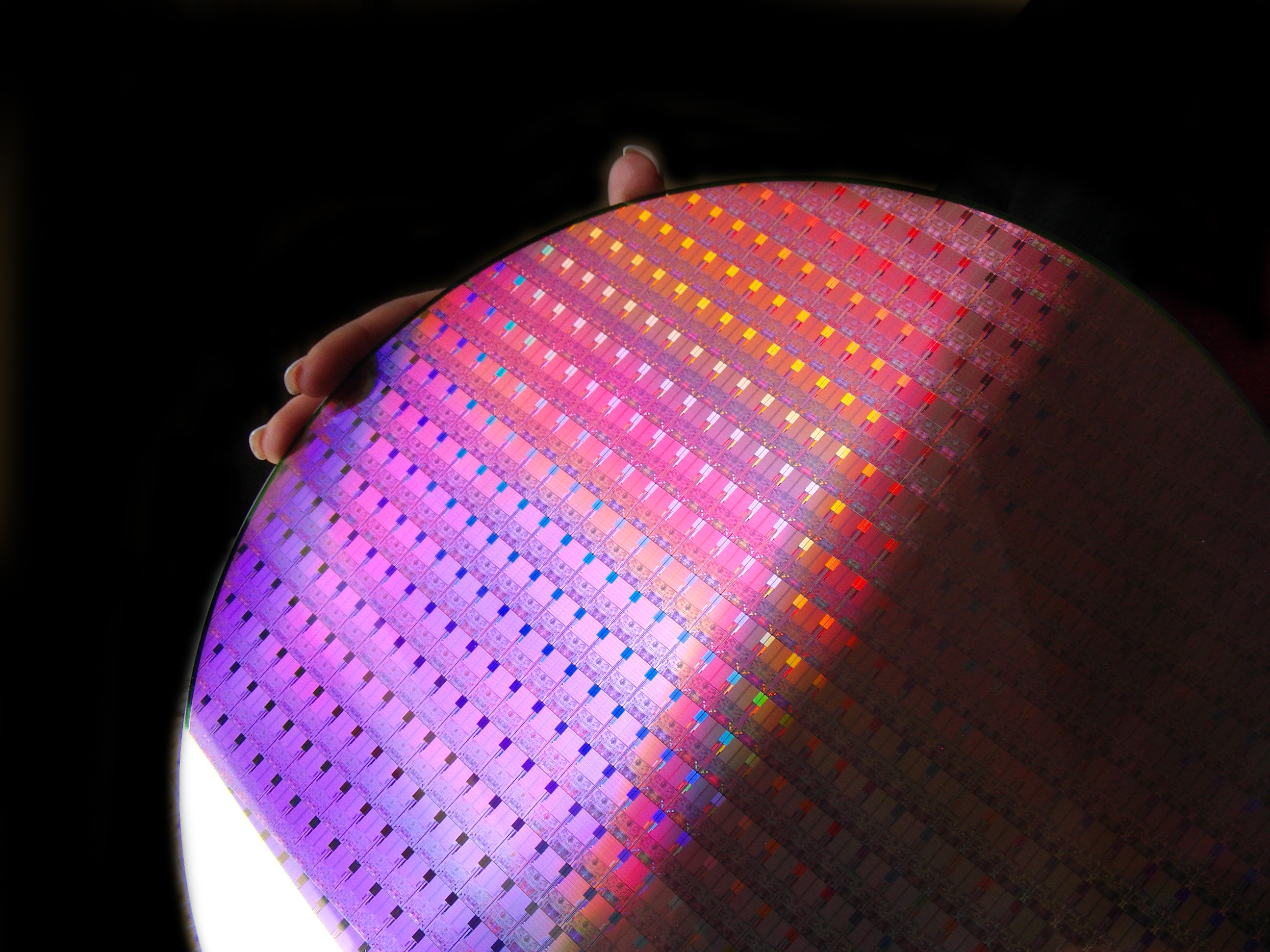

The PC industry is betting big time on a future built on multi-core PC processors. AMD recently launched its own affordable quad-core chip, Phenom.

Intel is already cranking out second-generation 45nm quad-core CPUs. But do multi-core CPUs deliver real-world performance benefits? Or are they merely the latest marketing ruse, a respin of the age-old MHz myth that crashed and burned with Intel's Pentium 4 processor?

These are important questions, and not just because quad-core processors have become much more affordable. The momentum towards massively multi-core computing also now looks unstoppable.

It was as recently as April 2005 that Intel wheeled out the world's first dual-core desktop processor, the Pentium D. AMD swiftly followed with its own dual-core Athlon 64 X2 chip. Then Intel raised the bar again in November 2006 with the release of the Kentsfield version of its Core 2 CPU, the first quad-core PC processor.

By early 2009, and perhaps sooner, Intel is expected to roll out eight-core CPUs based on its upcoming Nehalem architecture. What's more, each of those cores will be able to process two threads, for a grand total of 16 logical processors. Whatever the truth about the performance benefits, there's no question the industry is betting its very future on multi-core processor technology.

With all this in mind, what are the pros and cons of the move to multi-core? In this first part of the article, we discuss the upsides to multi-core technology.

1. Instant multi-tasking performance boost

Without doubt, multi-core chips are killer for multi-tasking. It's virtually impossible to run out of CPU resources with the latest quad-core processors. A quad-core chip won't even break stride if an application like Firefox completely hangs and soaks up 100 per cent of the CPU time of an entire processor core. In other words, the remaining three cores will have plenty of headroom to keep that HD video decode running smoothly. And with the PC's role as a general-purpose digital workhorse only set to expand, that headroom will matter even more in future.

2. Multi-threaded software is already quite common

With one or two notable exceptions, including gaming, many of the applications that require the most computational grunt are already coded to run in parallel on more than one processor core. Popular HD video codecs like VC-1 and H.264 are highly parallel, for instance. Likewise, several popular productivity applications, including Adobe Photoshop, offer multi-threading support.
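The basic pattern behind that kind of parallel code is easy to sketch: cut a big job into independent pieces and hand one piece to each core. The Python below is only an illustration under our own assumptions, not how VC-1, H.264 or Photoshop actually divide their work. Generic zlib compression stands in for a real codec, the helper names are hypothetical, and the worker count defaults to the number of logical processors reported by `os.cpu_count()`. Plain threads are enough here because CPython's zlib module releases the interpreter lock while it compresses, so the chunks can genuinely run on separate cores.

```python
import os
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import List

# Hypothetical helpers: zlib compression stands in for a real media codec.


def split_into_chunks(data: bytes, n_chunks: int) -> List[bytes]:
    """Cut data into at most n_chunks roughly equal, independent pieces."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def compress_in_parallel(data: bytes, workers: int = 0) -> List[bytes]:
    """Compress each chunk on its own worker thread.

    workers=0 means "one worker per logical processor".
    """
    workers = workers or os.cpu_count() or 1
    chunks = split_into_chunks(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order, so results line up with the chunks
        return list(pool.map(zlib.compress, chunks))


def decompress_all(parts: List[bytes]) -> bytes:
    """Undo compress_in_parallel by decompressing and re-joining in order."""
    return b"".join(zlib.decompress(p) for p in parts)


if __name__ == "__main__":
    payload = os.urandom(1 << 20) + b"frame data " * 100_000
    parts = compress_in_parallel(payload)
    assert decompress_all(parts) == payload
    print(f"{len(parts)} chunks, up to {os.cpu_count()} logical processors")
```

Because each chunk is compressed without seeing its neighbours, this approach usually gives up a little compression ratio in exchange for spreading the work across cores. That kind of trade-off is typical of parallel media code.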

3. Future applications will be increasingly parallel

If you don't believe us, take it from one of Intel's leading research engineers, Jerry Bautista. As he recently explained to Tech.co.uk, the future of desktop computing will be all about compute-intensive parallel workloads. "Natural language queries, gesture-based interfaces, media mining, health monitoring and diagnostics - there are a huge range of upcoming applications that all lend themselves to parallel processing," Bautista says. And for that, Bautista confidently asserts, you're going to want a grunty multi-core processor.

4. New tools will ease multi-threaded software development

Today's computer science students are being trained to think and code in a more parallel fashion. For serial-minded dinosaurs, however, tools are being developed to help automate the process of producing threaded software. Alongside more conventional compiler tools, Intel is researching a new technology known as speculative multi-threading, which could allow existing single-threaded code to be split across many cores.
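Intel's implementation details aren't covered here, so the sketch below is only a toy software model of the general idea behind speculative (thread-level) multi-threading, built on our own assumptions. Every iteration of an ordinary loop is run at once against a snapshot of memory, each one logs what it reads and buffers what it writes, and the results are then committed in the original order. If an iteration read something an earlier iteration wrote, it saw a stale value, so it is thrown away and re-run. All class and function names are hypothetical, and since CPython threads don't speed up pure Python code, this demonstrates the bookkeeping rather than the speed.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Set, Tuple

# Toy model of thread-level speculation; not Intel's design. Names are hypothetical.
Memory = Dict[str, int]
LoopBody = Callable[..., None]  # body(i, mem) reads and writes mem[key]


class SpeculativeView:
    """Memory as seen by one speculative iteration: logs reads, buffers writes."""

    def __init__(self, base: Memory) -> None:
        self.base = base
        self.reads: Set[str] = set()
        self.writes: Memory = {}

    def __getitem__(self, key: str) -> int:
        if key in self.writes:  # reading our own earlier write is not a dependency
            return self.writes[key]
        self.reads.add(key)
        return self.base[key]

    def __setitem__(self, key: str, value: int) -> None:
        self.writes[key] = value  # held back until commit


def run_sequentially(body: LoopBody, n_iters: int, memory: Memory) -> Memory:
    """Reference semantics: the original single-threaded loop."""
    mem = dict(memory)
    for i in range(n_iters):
        body(i, mem)
    return mem


def run_speculatively(body: LoopBody, n_iters: int, memory: Memory) -> Tuple[Memory, int]:
    """Run all iterations against a snapshot, then commit them in loop order.

    Returns the final memory and how many iterations had to be re-executed.
    """
    snapshot = dict(memory)
    views = [SpeculativeView(snapshot) for _ in range(n_iters)]
    with ThreadPoolExecutor() as pool:
        # Speculative phase: iterations only read the shared, unchanging snapshot
        list(pool.map(lambda i: body(i, views[i]), range(n_iters)))

    committed = dict(memory)
    dirty: Set[str] = set()  # locations written by already-committed iterations
    reexecuted = 0
    for i, view in enumerate(views):
        if view.reads & dirty:  # dependence violation: the iteration read stale data
            view = SpeculativeView(committed)
            body(i, view)
            reexecuted += 1
        committed.update(view.writes)
        dirty.update(view.writes)
    return committed, reexecuted


def double(i: int, mem) -> None:
    """Independent iterations: speculation always succeeds."""
    mem[f"out{i}"] = mem[f"in{i}"] * 2


def accumulate(i: int, mem) -> None:
    """Each iteration depends on the previous one via "acc": speculation fails."""
    mem["acc"] = mem["acc"] + i


if __name__ == "__main__":
    start = {**{f"in{i}": i for i in range(8)}, "acc": 0}
    for body in (double, accumulate):
        result, redo = run_speculatively(body, 8, start)
        assert result == run_sequentially(body, 8, start)
        print(f"{body.__name__}: {redo} of 8 iterations re-executed")
```

Both loops end up with the same result as the single-threaded version. The independent `double` loop needs no re-execution, though, while every iteration of `accumulate` after the first has to be redone. That is why a speculative scheme only pays off on code where such conflicts are rare.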

5. Multi-core is the only long-term option for increasing performance

Improved silicon process technology has allowed AMD and Intel to keep packing more and more transistors into CPU dies. However, chip frequencies levelled out below 4GHz over two years ago. Adding cores is therefore the only realistic way to keep PC performance progressing and to exploit the increasing transistor densities made available through process shrinkage.

Technology and cars. Increasingly the twain shall meet. Which is handy, because Jeremy is addicted to both. Long-time tech journalist, former editor of iCar magazine and incumbent car guru for T3 magazine, Jeremy reckons in-car technology is about to go thermonuclear. No, not exploding cars. That would be silly. And dangerous. But rather an explosive period of unprecedented innovation. Enjoy the ride.