Google's AI editing tricks are making Photoshop irrelevant for most people

Opinion: Google's robot retouching is bad news for Photoshop

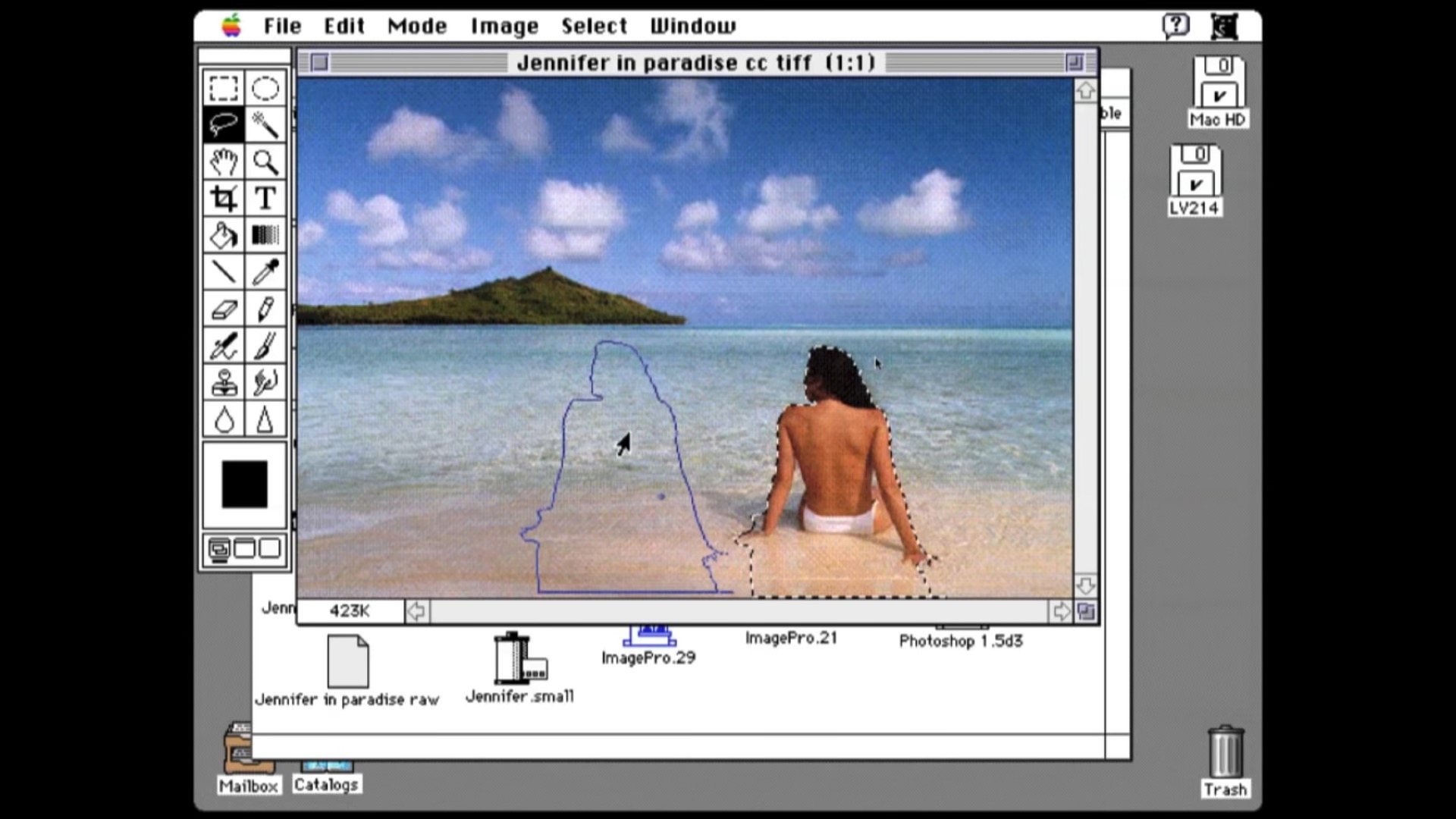

Back in 1987, one of the most significant photos of the last century was shot on a Tahiti beach. It was taken by John Knoll, who'd later co-create Adobe Photoshop – and his 'Jennifer in Paradise' snap (below) would be used to demo the amazing tools that would soon democratize photo retouching.

Thirty-five years on, we're seeing the next stage of that photo editing revolution unfold, only this time it's Google, rather than Adobe, that's waving a powerful new Magic Wand. This week we saw the arrival of its new Photo Unblur feature, the latest in an increasingly impressive line of AI editing tools.

Just as Photoshop did in the 1990s, Google's tricks are opening up image manipulation to a wider audience, while challenging our definition of what a photo actually is.

As its name suggests, Photo Unblur (currently exclusive to the Pixel 7 and Pixel 7 Pro) can automatically turn your old, blurry snaps into crisp, shareable memories. And it's the latest example of Google's desire to build a robot Photoshop into its phones (and ultimately, Google Photos).

This time last year, we saw the arrival of Magic Eraser, which lets you remove unwanted people or objects from your photos with a flick of your finger. Parents and pet owners with a Pixel 6 or Pixel 6 Pro were also treated to a new 'Face Unblur' mode, which works differently from Photo Unblur.

Collectively, these modes aren't yet as revolutionary as the original Photoshop. Adobe also has enough of its own machine-learning wizardry to ensure its apps will remain essential among professionals for many years to come. But the big change is that Google's tools are both automatic and live in the places where non-photographers shoot and store their photos.

For the average user of Google's phones or its cloud photo apps, that'll likely mean never having to dabble with a program like Photoshop again.

Robot retouchers

Photo Unblur and Magic Eraser may look simple, but they're built on some powerful machine learning. Another related feature, Face Unblur, is so demanding that it can only run on Google's custom Tensor processor.

Both unblur modes do way more than crank up a sharpening slider. The complex process of building noise maps and applying 'polyblur' techniques was explained recently by Isaac Reynolds, Google's Product Manager for the Pixel camera, in a chat on the company's new 'Made by Google' podcast.
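For the technically curious, the general idea of polynomial reblurring can be shown with a toy example. This is our own simplified sketch, not Google's code, and it rests on loudly stated assumptions: a 1D signal instead of a photo, a blur that is already known (a Gaussian with hypothetical `sigma` and `radius` values) and no noise at all. Rather than computing an exact inverse of a mild blur K, you blur the blurry result again and combine the pieces as (3I - 3K + K²)y, which approximately undoes K. The helper names `gaussian_kernel`, `blur` and `poly_deblur` are hypothetical.

```python
import numpy as np

# Toy sketch of polynomial reblurring (assumptions: 1D signal, known Gaussian
# blur, no noise). Helper names are hypothetical, not from any Google API.

def gaussian_kernel(sigma: float, radius: int) -> np.ndarray:
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    offsets = np.arange(-radius, radius + 1)
    weights = np.exp(-offsets**2 / (2 * sigma**2))
    return weights / weights.sum()

def blur(signal: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Apply the blur operator K as a circular convolution (kernel is symmetric)."""
    radius = len(kernel) // 2
    out = np.zeros_like(signal, dtype=float)
    for offset, weight in zip(range(-radius, radius + 1), kernel):
        out += weight * np.roll(signal, offset)
    return out

def poly_deblur(blurry: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Approximate K^-1 with the polynomial 3I - 3K + K^2 by re-blurring twice."""
    once = blur(blurry, kernel)
    twice = blur(once, kernel)
    return 3 * blurry - 3 * once + twice

# Usage: blur a sharp edge profile, then try to recover it.
sharp = np.zeros(64)
sharp[20:40] = 1.0
kernel = gaussian_kernel(sigma=1.0, radius=3)
blurry = blur(sharp, kernel)
restored = poly_deblur(blurry, kernel)
print(np.linalg.norm(blurry - sharp), np.linalg.norm(restored - sharp))
```

In this simplified form the appeal is cost: the correction only re-applies the blur, with no heavy inversion step. The limitation is that the approximation only holds for mild blur, and a real pipeline would also need to estimate the blur and stop noise from being amplified.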

When asked about Photo Unblur and Face Unblur, Reynolds said: "I think you've probably seen in the news all these websites now where you can type in a sentence and it produces an image, right?" If you haven't, those sites include the likes of Dall-E and Midjourney, and beware, they're a hugely dangerous weekend time-sink.

"There's a category of machine learning models in imaging that are known as generative networks," Reynolds explained. "A generative network produces something from nothing. Or produces something from something else that is lesser than, or less precise than, what you're trying to produce. And the idea is that the model has to fill in the details."

Like those text-to-image AI wizards, Google's new photo-rescuing tools fill in those details by crunching together a ton of connected data: in Google's case, an understanding of what human faces look like, how faces look when they're blurred, and an analysis of the type of blur in your photo. "It takes all those things and it says 'here's what I think your face would look like after it's been unblurred'," said Reynolds.

If that sounds like a recipe for warped faces and uncanny valleys, Google is keen to stress that it doesn't go overboard on attacking your mug.

"It never changes what you look like, it never changes who you are. It never strays out of the realm of authenticity," claims Reynolds. "But it can do a whole lot of good to deblur photos when your hands were shaking, if the person you're photographing is just slightly out of focus, or things like that," he adds.

We haven't yet been able to fully test Photo Unblur, but the early demos (like the one above) are impressive. And alongside Google's growing batch of tricks, it's all starting to make Photoshop look unnecessarily complex for everyday photo edits. Why bother with layer masks, pen tools and adjustment layers when Google can do it all for you?

Restoring order

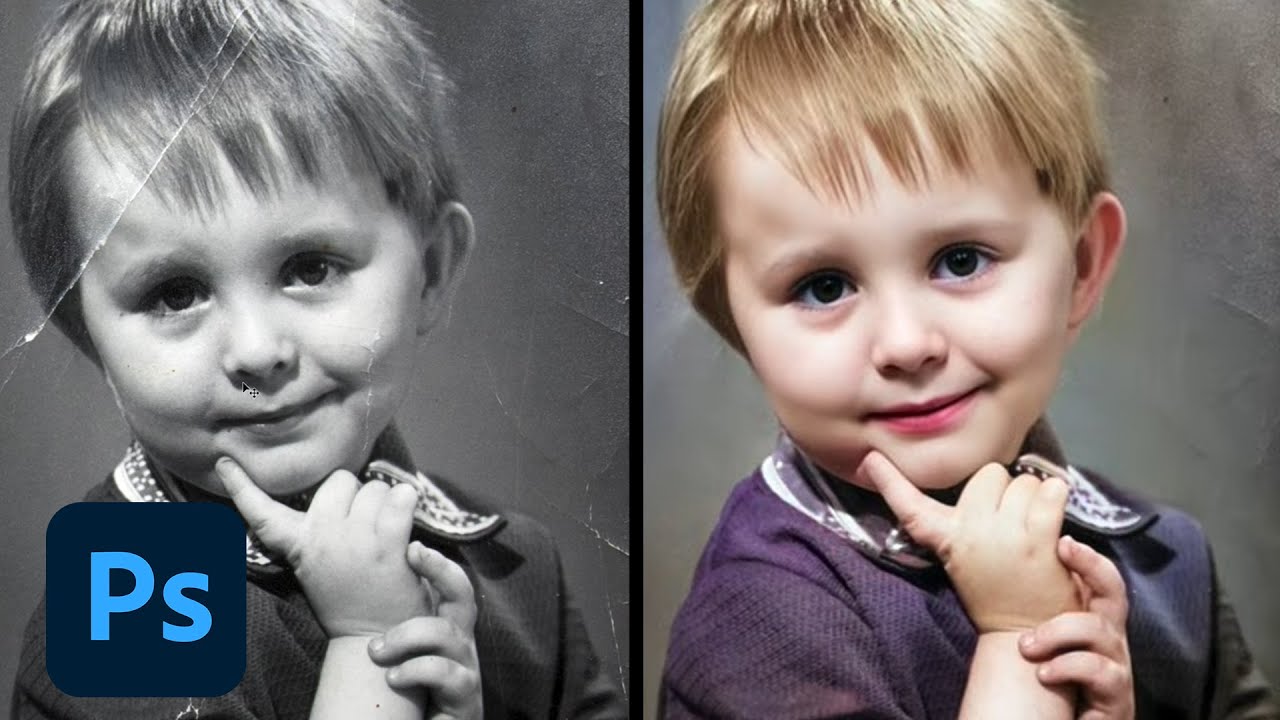

Of course, Google doesn't have a monopoly on machine learning, and Adobe has plenty of its own powerful tools. Adobe Sensei is the machine-learning platform that powers Photoshop's 'Neural Filters', like Photo Restoration, which automatically restores your old family photos.

Because the likes of Google and Samsung (which has its own 'Object eraser' tool) are now building these features into their phones, Adobe's machine learning is being pushed further towards professional creators. Google's PhotoScan app already retouches your old photos while it digitizes them, so an automatic restoration tool for Google Photos is surely around the corner. Let's just hope it steers clear of slightly creepy 're-animation' effects like Deep Nostalgia.

This increasingly wide distinction between Photoshop and new Google tools like Photo Unblur was neatly summed up earlier this year by Adobe VP and Fellow Marc Levoy, who was previously the driving force behind the cameras in Google's Pixel phones.

In an interview on the Adobe Life blog, Levoy said that while his role at Google was to "democratize good photography", his mission at Adobe is instead to "democratize creative photography". And that involves "marrying pro controls to computational photography image processing pipelines".

In other words, Google and others have now taken the baton of "democratizing good photography", while Adobe's programs use machine-learning power to improve the workflows of amateurs and pros who need the finer craft of manual photo edits. The death of Photoshop is a long way off, then, even if many of its mass-market tools are being automated by phones.

AI openers

These are still early days in the AI photo editing revolution, and Google's new tools are far from the only ones out there. Desktop editors like Luminar Neo and ImagenAI, along with Topaz Labs' apps, are all impressive AI assistants that can enhance (or rescue) your imperfect photos.

But Google's Photo Unblur and Magic Eraser go a step further by living in our Pixel phones – and they'll surely migrate to our Google Photos libraries, too. This step would really take them to a mass audience, who'll be able to turn their holiday snaps or family photos into polished masterpieces without needing to know what an adjustment layer is.

As for Adobe and Photoshop, we'll likely see some new Sensei announcements at Adobe's MAX 2022 conference, which starts on October 18. And while its recently updated Photoshop Elements app is starting to look a little dated, the full-fat Photoshop should continue to be at the forefront of photo trickery.

That's an impressive run considering it's now 35 years since Photoshop co-creator John Knoll first started dabbling with his 'Jennifer in Paradise' snap. But given the rapid development of text-to-image generators like Dall-E, and Google's photo-fixing tools, it looks like we're on the cusp of another revolutionary leap for the humble photo edit.

Mark is TechRadar's Senior news editor. Having worked in tech journalism for a ludicrous 17 years, Mark is now attempting to break the world record for the number of camera bags hoarded by one person. He was previously Cameras Editor at both TechRadar and Trusted Reviews, Acting editor on Stuff.tv, as well as Features editor and Reviews editor on Stuff magazine. As a freelancer, he's contributed to titles including The Sunday Times, FourFourTwo and Arena. And in a former life, he also won The Daily Telegraph's Young Sportswriter of the Year. But that was before he discovered the strange joys of getting up at 4am for a photo shoot in London's Square Mile.