YouTube could be getting a real-time translator in the future

Google is testing a real-time dubbing service

Seamlessly integrating AI capabilities from PaLM 2 across the Google ecosystem, including Bard, has been a major theme at the Google I/O 2023 event. However, Google believes some features shouldn't be released right away.

During the Google I/O keynote, the company's senior vice president of technology and society, James Manyika, raised concerns that some AI capabilities, namely the technology behind deepfakes, could be used to spread misinformation.

What he's referring to are the AI models that deepfakes use to dub voices in videos. You know the ones: a famous actor's monologue from one of the best TV shows or best films is suddenly swapped for a synthetic voice saying something the original script never did.

Because Google sees the potential for bad actors to misuse this technology, it is setting up what it calls "guardrails". To prevent misuse of some of these new features, the company is embedding markers such as watermarks and metadata into photos and videos.

One new tool that could be hugely useful but also easily misused is a prototype called "universal translator", which Google is rolling out to a limited number of partners.

Google's universal translator is an experimental AI video dubbing service that translates speech in real time, allowing you to watch a video and instantly understand what someone is saying in another language.

The prototype was showcased during the event through videos from a test run as part of an online college course created in partnership with Arizona State University. Google says early results have been promising, with students in the study showing higher course completion rates.

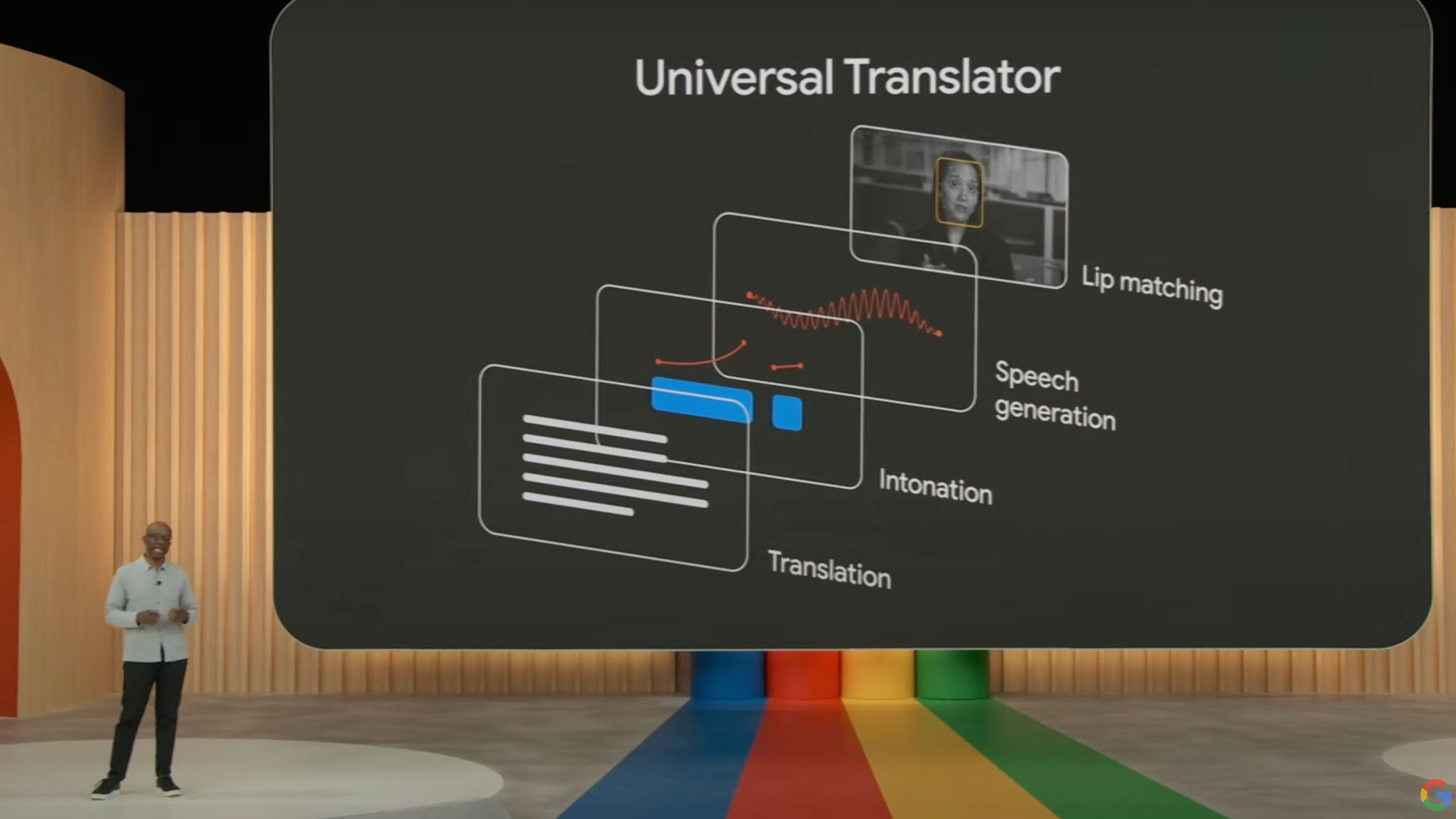

The model works in four stages. In the first stage, it matches lip movements in a video to words it recognises. In the second, an algorithm generates speech instantly. In the third, the model uses intonation, the rise and fall in the pitch of someone's voice as they speak, to aid the translation. Finally, once it has replicated the speaker's style and matched the tone of their lip movements, it brings everything together to generate the translation.

Where will the universal translator feature?

While the universal translator isn't yet available outside a small testing group, once Google has tested enough safeguards it might roll the feature out to services such as YouTube and its video conferencing service, Google Meet.

After all, being able to translate live videos into multiple languages in real time could be an incredibly useful tool. Not only could a universal translator expand a YouTube channel's global viewership, but it could also enable more collaborative projects across countries.

We'll certainly be watching and waiting to hear more about this feature and where it could be used in the Google ecosystem.

Looking for more about the biggest news from Google I/O? Check our Google I/O 2023 live blog for a play-by-play rundown of what was announced at the event.

Amelia became the Senior Editor for Home Entertainment at TechRadar in the UK in April 2023. With a background of more than eight years in tech and finance publishing, she's now leading our coverage to bring you a fresh perspective on everything to do with TV and audio. When she's not tinkering with the latest gadgets and gizmos in the ever-evolving world of home entertainment, you’ll find her watching movies, taking pictures and travelling.