Meta is building the world’s fastest AI supercomputer - Here's why

All for its metaverse vision

Meta says it has built a research AI supercomputer that is among the fastest in the world.

"Today, Meta is announcing that we’ve designed and built the AI Research SuperCluster (RSC) — which we believe is among the fastest AI supercomputers running today and will be the fastest AI supercomputer in the world when it’s fully built out in mid-2022," the company announced.

The supercomputer will be used to train large natural language processing (NLP) and computer vision models for research, with the eventual aim of training models that have trillions of parameters.

The number of parameters in neural network models has been growing rapidly. For example, OpenAI's GPT-3 language model has 175 billion parameters.

What will Meta use this supercomputer for?

Meta’s supercomputer will process huge amounts of data and will be used for applications that align with the company's plans for the metaverse.

As it noted: "Ultimately, the work done with RSC will pave the way toward building technologies for the next major computing platform — the metaverse."

Meta CEO Mark Zuckerberg also said: “The experiences we're building for the metaverse require enormous compute power (quintillions of operations / second!) and RSC will enable new AI models that can learn from trillions of examples, understand hundreds of languages, and more.”

RSC is expected to help build cutting-edge AI models "that can learn from trillions of examples; work across hundreds of different languages; seamlessly analyze text, images, and video together; develop new augmented reality tools; and much more."

Meta is working towards creating new AI systems that can, say, power real-time voice translations to large groups of people, each speaking a different language, so they can seamlessly collaborate. That could advance fields such as natural-language processing for jobs like identifying harmful content in real time.

Largest customer installation of Nvidia DGX A100 systems

Supercomputers, which feature many interconnected processors grouped into units known as nodes, are widely used for AI research.

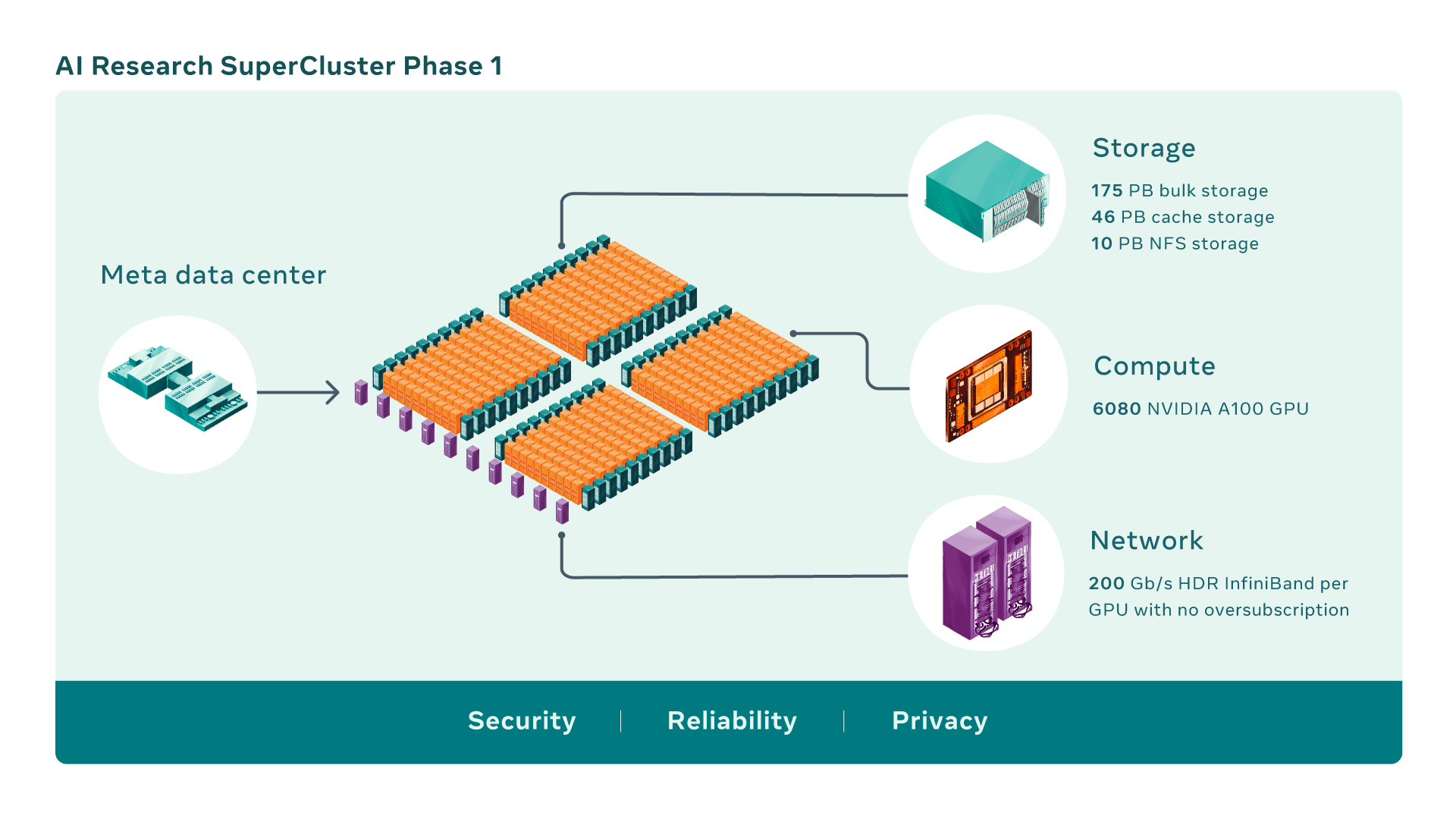

Detailing the hardware, Meta said RSC comprises 760 NVIDIA DGX A100 systems as its compute nodes, for a total of 6,080 GPUs. Each A100 GPU is more powerful than the V100 used in its previous system.
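The node and GPU figures can be cross-checked with a little arithmetic. The sketch below assumes eight GPUs per DGX A100 system, which matches Nvidia's published configuration but is not stated in Meta's announcement. The function names are illustrative only, and the 16,000-GPU figure is the planned full build-out that Meta has announced.

```python
# Assumption: each NVIDIA DGX A100 system houses eight A100 GPUs.
GPUS_PER_DGX_A100 = 8


def total_gpus(nodes: int, gpus_per_node: int = GPUS_PER_DGX_A100) -> int:
    """Return the GPU count for a cluster with the given number of nodes."""
    return nodes * gpus_per_node


def nodes_needed(target_gpus: int, gpus_per_node: int = GPUS_PER_DGX_A100) -> int:
    """Return the minimum node count that reaches target_gpus (ceiling division)."""
    return -(-target_gpus // gpus_per_node)


if __name__ == "__main__":
    print("GPUs in 760 nodes:", total_gpus(760))
    print("Nodes for 16,000 GPUs:", nodes_needed(16_000))
```

Under that assumption, 760 nodes give the 6,080 GPUs Meta quotes, and the planned 16,000 GPUs would need roughly 2,000 such systems.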

AI supercomputers are built by combining multiple GPUs into compute nodes, which are then connected by a high-performance network fabric to allow fast communication between those GPUs.

Meta said RSC runs computer vision workflows up to 20 times faster, runs the NVIDIA Collective Communications Library (NCCL) more than nine times faster, and trains large-scale NLP models three times faster than the company's older research setup.

"That means a model with tens of billions of parameters can finish training in three weeks, compared with nine weeks before," Meta said.

For its part, Nvidia said: "Once fully deployed, Meta’s RSC is expected to be the largest customer installation of NVIDIA DGX A100 systems."

RSC will dip into data from Meta's platforms

RSC’s storage tier has 175 petabytes of Pure Storage FlashArray, 46 petabytes of cache storage in Penguin Computing Altus systems, and 10 petabytes of Pure Storage FlashBlade.

At its current size, the new supercomputer is on par with the Perlmutter supercomputer at the National Energy Research Scientific Computing Center, which ranks fifth in the world. When completed later this year, however, Meta said it will have 16,000 GPUs and will be capable of nearly five exaflops of mixed-precision computing performance, or about 5 quintillion operations per second.
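The "nearly five exaflops" figure is roughly what you get by multiplying the GPU count by a per-GPU peak rate. The sketch below assumes 312 teraflops per A100, which is Nvidia's quoted peak for dense FP16/BF16 Tensor Core math. That number is not from Meta's announcement, and it is a theoretical peak at reduced precision rather than sustained performance on real workloads.

```python
# Assumption: NVIDIA's quoted dense FP16/BF16 Tensor Core peak per A100 GPU.
A100_PEAK_TFLOPS = 312.0

TERA = 1e12  # operations per second in one teraflop
EXA = 1e18   # operations per second in one exaflop (one quintillion)


def cluster_peak_exaflops(num_gpus: int, tflops_per_gpu: float = A100_PEAK_TFLOPS) -> float:
    """Return the theoretical aggregate peak of a GPU cluster, in exaflops."""
    return num_gpus * tflops_per_gpu * TERA / EXA


if __name__ == "__main__":
    # GPU counts taken from the article: current size and planned build-out.
    for label, gpus in [("current (6,080 GPUs)", 6_080), ("planned (16,000 GPUs)", 16_000)]:
        print(f"{label}: {cluster_peak_exaflops(gpus):.2f} exaflops peak")
```

With these assumptions the planned 16,000-GPU cluster comes out just under five exaflops, in line with Meta's claim, while the current 6,080-GPU configuration sits a little below two.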

For the record, Meta has been involved in AI research for more than a decade. It established the Facebook AI Research lab (FAIR) in 2013. Meta launched its first dedicated AI supercomputer in 2017, built with 22,000 Nvidia V100 GPUs.

Unlike its previous supercomputer, which used only open source and publicly available data sets, Meta’s new machine will tap into real-world training data obtained directly from the users of the company’s platforms.

Meta also said the supercomputer project was a completely remote one (due to the pandemic) that the team took from a simple shared document to a functioning cluster in about a year and a half.

Over three decades as a journalist covering current affairs, politics, sports and now technology. Former Editor of News Today, writer of humour columns across publications and a hardcore cricket and cinema enthusiast. He writes about technology trends and suggests movies and shows to watch on OTT platforms.