Meta’s weirdly limited Ray-Ban Smart Glasses are the worst kind of vision of the future

Why 'Hey Meta'?

We're back in the middle of a smart glasses arms race, but the new ammunition is AI and a proliferation of wake words. "Hey Meta" is the latest, and I'm not sure I can take it.

I like the look of the new Ray-Ban Meta Smart Glasses that Meta CEO Mark Zuckerberg unveiled on Wednesday during Meta Connect. Thanks to the Ray-Ban partnership, they're already the best-looking smart glasses on the market, and the new color options, including one that looks like tortoiseshell, are spiffy.

A better camera, more storage, and improved audio were all expected, as was the upgrade to Qualcomm's AR1 Gen 1 Platform.

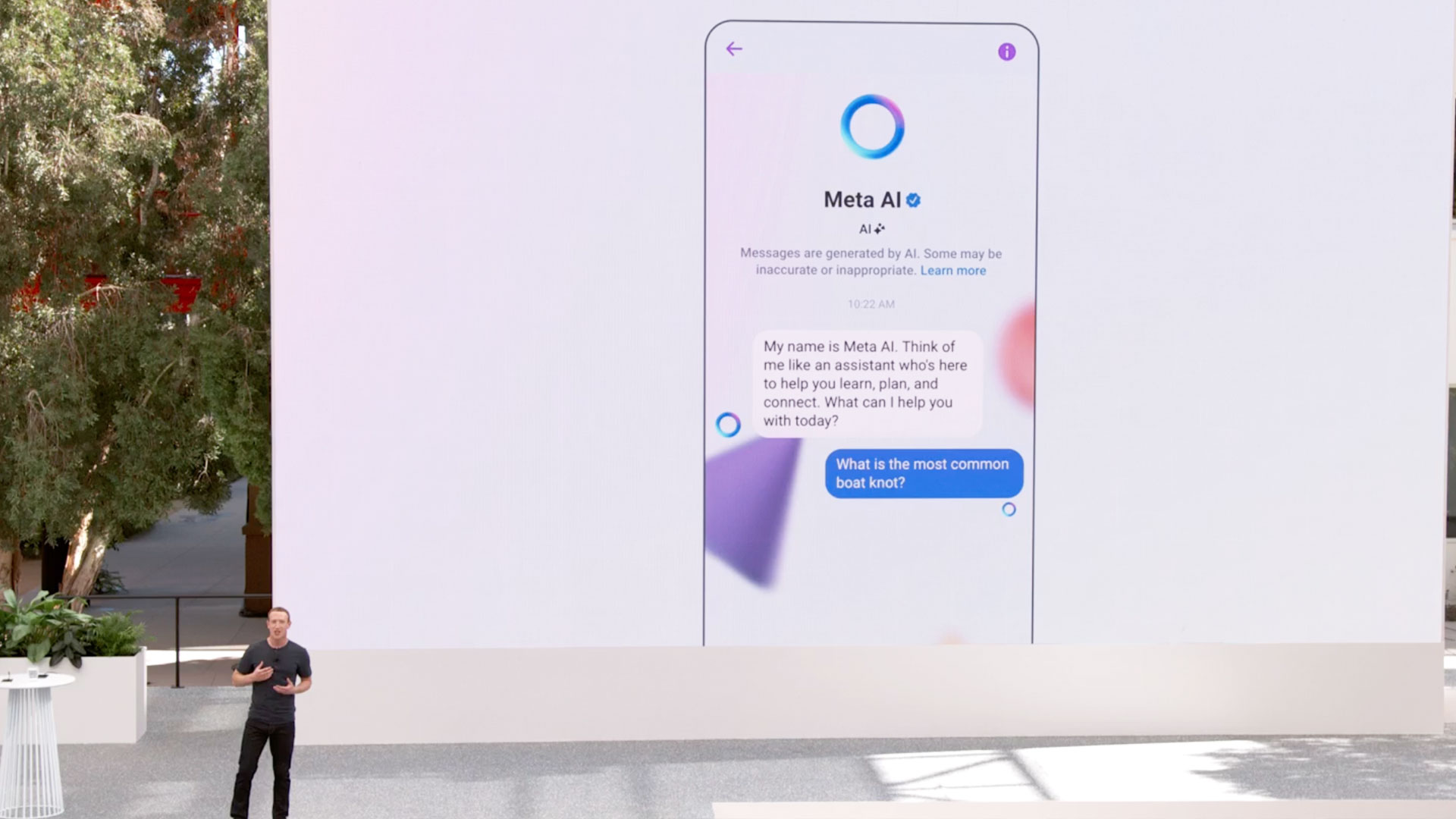

What I didn't expect was the unveiling of Meta AI, a generative AI chatbot (based on Meta's large language model Llama 2) that is already integrated into the new smart glasses.

So now, along with all the voice commands you can use to ask the Ray-Ban Meta Smart Glasses to take a picture or share a video, you'll be able to write a better social prompt and ask Meta AI questions about, I guess, whatever.

In Zuckerberg's demo, Meta AI fielded questions about how long to grill chicken breast (5 to 7 minutes per side), the longest river in New York (the Hudson), and an arcane pickleball rule that I won't get into here.

While the demo showed text bubbles, that's not how Meta's smart glasses and Meta AI will work. There is no integrated screen (thank God). Instead, this will be an aural experience.

You will say, "Hey Goo..."...er..."Hey Meta," and then ask your question. Meta AI will respond with its voice. Zuckerberg didn't offer details on how long you'd wait between the wake phrase and Meta AI waking up, or how long it might take to get answers, many of which are sourced from the internet thanks to Meta's partnership with Microsoft's Bing Chat.

Next year, a free update will make the Ray-Ban Meta Smart Glasses multimodal. Remember that new camera? It'll be used for more than just posting photos and videos: the update will let the camera "see" what you're seeing, and if you engage Meta AI, it can tell you all about it.

After the demos were over and Zuckerberg left the stage, I tried to imagine what this all might look like: you, wearing your smart Ray-Bans and talking to them. Turns out I didn't have to imagine it.

In an interview with The Verge, Zuckerberg described how businesses and creators might use Meta's new AI platform. Then he added:

"So there’s more of the utility, which is what Meta AI is, like answer any question. You’ll be able to use it to help navigate your Quest 3 and the new Ray-Ban glasses that we’re shipping. We should get to that in a second. That’ll be pretty wild, having Meta AI that you can just talk to all day long on your glasses."

Let me repeat that last bit: "...having Meta AI that you can just talk to all day long on your glasses."

Instantly my mind filled with images of hundreds of people wearing their stylish Ray-Ban Meta Smart Glasses while also muttering to themselves (actually to the glasses):

"Hey Meta, how do I make a mocha latte?"

"Hey Meta, what's the best way to get from Grand Central Station to SoHo?"

"Hey Meta, how should I talk to my parents about my inability to pay off my student loan?"

It will go on and on and on, and it's not necessarily a future I like.

When Google Glass first launched, we had a few ways of interacting with it: touch, head nods, and the voice command "OK Glass."

Of all of them, the least ridiculous was touch. A touch-sensitive panel on the side let you tap and swipe to change settings, open messages, and take pictures and videos.

The worst of them was surely the head nod. It just made you look unhinged.

Voice wasn't terrible when you were alone, but I never liked shouting "OK Glass" on a crowded NYC street. At least I was one of only a few idiots wearing Google Glass. I can't imagine a world where everyone is talking to their eyeglass-based AIs at the same time.

This, though, is the magical world Mark Zuckerberg envisions, and voice may be the only option for Ray-Ban Meta Smart Glasses. The original Ray-Ban Stories have a basic touch panel for volume and playback, but I don't know whether the new smart glasses extend it to Meta AI interaction, and I don't think there's any kind of motion sensitivity. "Hey Meta" will, I think, be the primary way you interact with the integrated Meta AI.

In recent weeks, Apple and Amazon have introduced ways to trim their AI wake words: Apple is dropping the "Hey" from "Hey Siri," and Amazon's experimental Alexa will soon carry on conversations without a wake word at all.

Is this better than what Meta is proposing? Slightly. At least people using Amazon's Echo Frames might eventually look like they're on a call with a trusted friend.

At least early on, Meta AI will require a wake phrase for each query. If the glasses become bestsellers, I see a nightmare in the making.

The good news is that it's all software, and Meta clearly plans to deliver regular updates. By the time Meta AI goes multimodal in 2024, Meta may have deprecated "Hey Meta," or at least cut it down to a single unlock utterance followed by normal conversation.

Maybe. If not, Zuckerberg's vision will come true in the worst way possible.

A 38-year industry veteran and award-winning journalist, Lance has covered technology since PCs were the size of suitcases and “on line” meant “waiting.” He’s a former Lifewire Editor-in-Chief, Mashable Editor-in-Chief, and, before that, Editor-in-Chief of PCMag.com and Senior Vice President of Content for Ziff Davis, Inc. He also wrote a popular, weekly tech column for Medium called The Upgrade.

Lance Ulanoff makes frequent appearances on national, international, and local news programs including Live with Kelly and Mark, the Today Show, Good Morning America, CNBC, CNN, and the BBC.