MIT creates 'psychopath' AI using the dark side of Reddit

To study how AI can become corrupted

Ever since the internet turned Microsoft's Tay racist and genocidal, forcing Microsoft to shut down the chatbot within 24 hours, we’ve known how susceptible artificial intelligence (AI) can be to turning evil.

To study how AI can become corrupted by biased data, the Massachusetts Institute of Technology (MIT) deliberately turned its AI into a psychopath named Norman, after Norman Bates, the villain of Alfred Hitchcock’s Psycho.

The MIT team pulled images and captions from an infamous subreddit dedicated to photos of death and violence, then used those captions as the data Norman learned from when describing what it sees in an image.

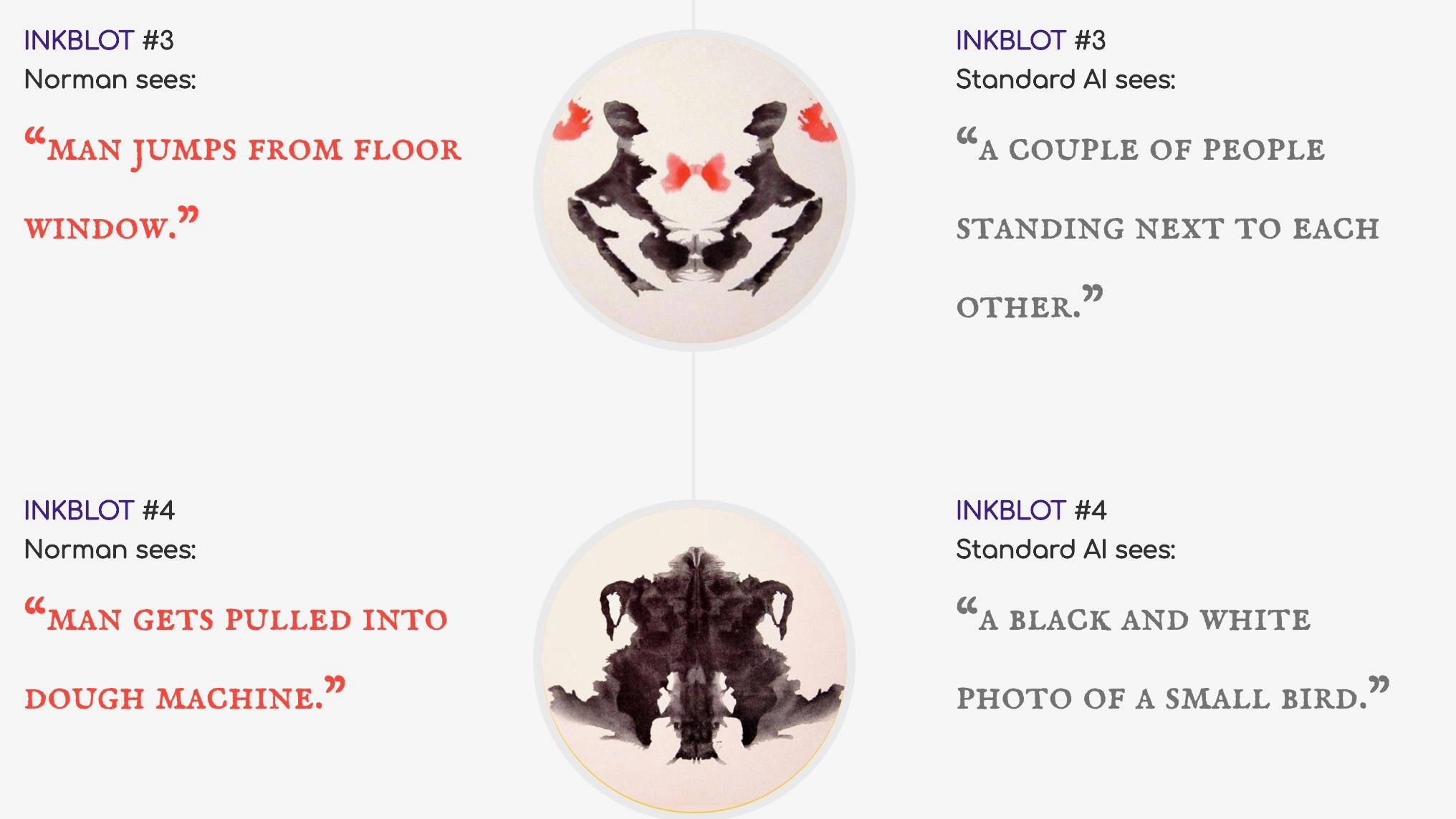

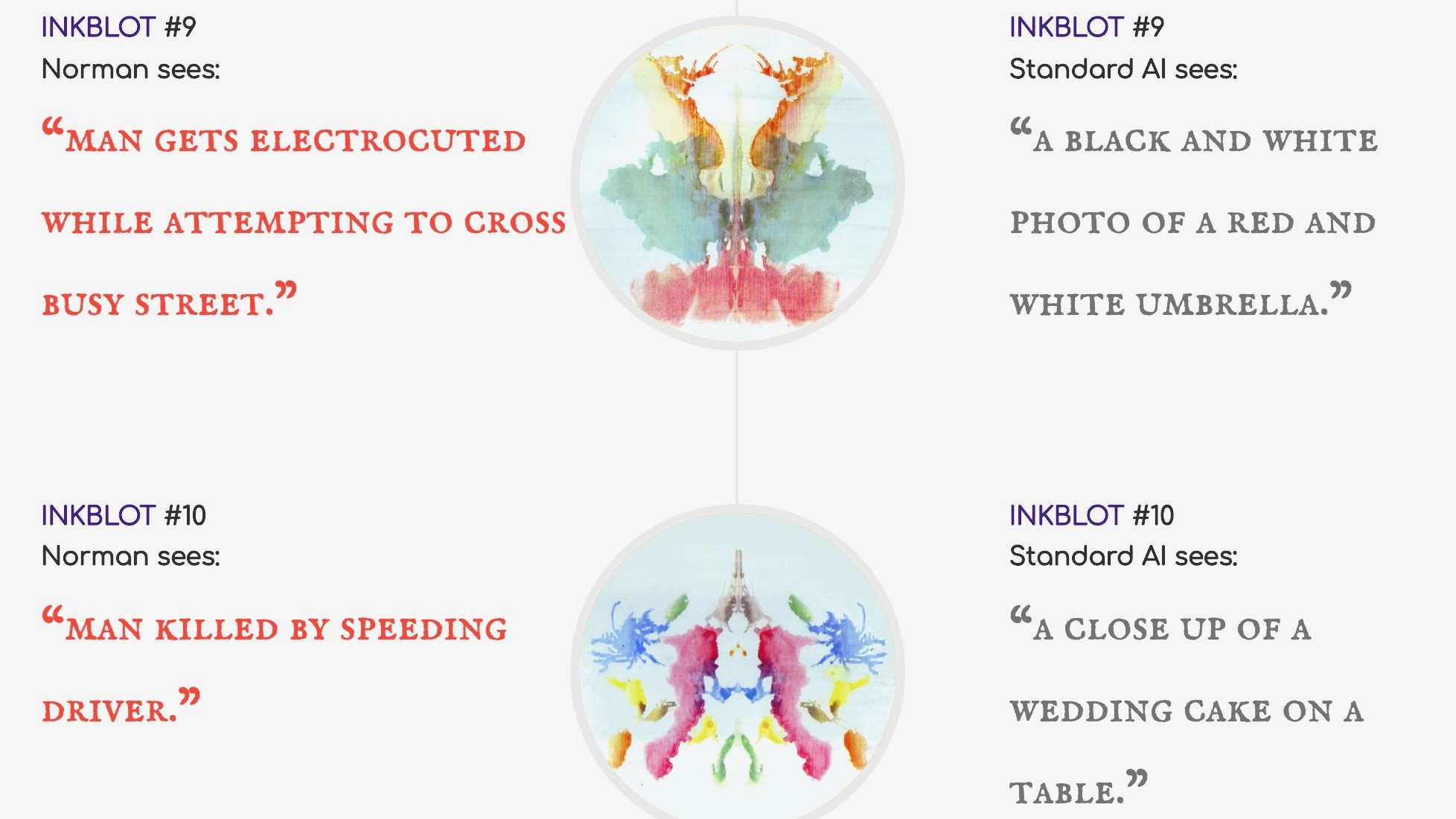

When the team then gave Norman a set of Rorschach inkblots to analyze, its responses were deeply alarming compared with those of a “standard image captioning neural network”.

Bright flowers became the splatter from a gunshot victim. Where the control AI described an open umbrella, Norman saw a wife screaming in grief as her husband died.

The morbid nature of the AI’s assumptions blinded it to any possibility other than murder and pain.

Protecting our AI children's young minds

Of course, with the darkest corner of Reddit as its only data set for interpreting the inkblots, Norman was destined to become monstrous, especially compared to an AI exposed to a controlled image set.

But MIT’s extreme example hints at how any AI can have its interpretive criteria corrupted, depending on where it pulls its data from.

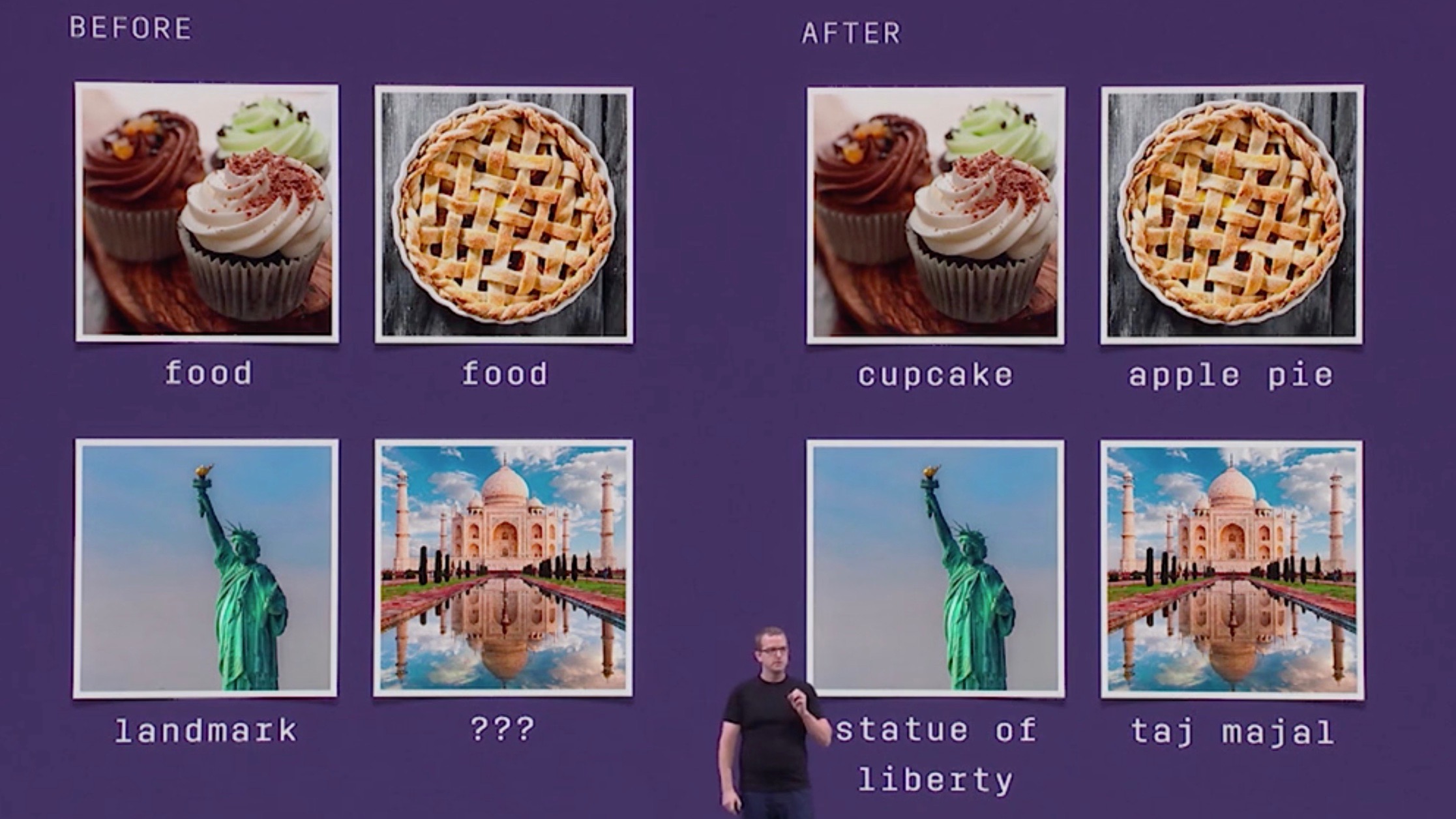

Tech companies and other organizations increasingly rely on AI for image and facial recognition. Facebook, for example, uses Instagram captions to teach its AI how to interpret images.

If a subset of Instagram users began using racial slurs or sexist language to describe their photos, then Facebook’s AI could internalize these biases.

Norman’s creators invited people to submit their own interpretations of the inkblots in a Google Doc to “help Norman fix itself”.

Their research could help future AI creators work out how to counterbalance potentially pernicious data sources and keep their AI creations as untainted and impartial as possible.

Michael Hicks began his freelance writing career with TechRadar in 2016, covering emerging tech like VR and self-driving cars. Nowadays, he works as a staff editor for Android Central, but still writes occasional TR reviews, how-tos and explainers on phones, tablets, smart home devices, and other tech.