Microsoft apologizes for 'critical oversight' following Tay's meltdown

But she'll be back on at some point

Tay, the chatbot Microsoft built to act like a bubbly millennial, was created with the best of intentions. We even shared a lovely game of "Would You Rather" with her. But after she spouted a tirade of racist remarks that she learned from other users on Twitter, Microsoft pulled the plug.

Now, the Redmond, Washington-based company has apologized and offered clarification on the Tay experiment, downturn and all, and on how challenges like these fuel research for an improved Tay and for broader AI efforts.

Twitter, as Microsoft Research Corporate VP Peter Lee writes on the Microsoft Blog, was seen as the best platform to get a bunch of testers interacting with a new project. But it didn't take long – less than 24 hours in the US – for things to go off-course. Way off-course.

So, how did this happen? It's basically all our fault.

Lee states that "we stress-tested Tay under a variety of conditions, specifically to make interacting with Tay a positive experience."

But it appears that Microsoft didn't prepare for one specific condition: a "coordinated attack by a subset of people." We'll let you fill in the blanks regarding who that subset might be, as Microsoft doesn't let on either.

"Although we had prepared for many types of abuses of the system, we had made a critical oversight for this specific attack. As a result, Tay tweeted wildly inappropriate and reprehensible words and images."


Will Tay ever come back?

As a result of the mayhem, Tay is in time-out. Way to go, "subset" of humans. But although Microsoft crafted Tay to be a well-behaved AI, it is accepting responsibility for the oversight and embracing the challenge of building a better Tay for the future.

Tay, at her core, is an ambitious experiment in socializing an AI, one with a personality, name and feelings about things that are informed by her interactions with others – just like us.

Lee states that "...the challenges are just as much social as they are technical," and that to "do AI right, one needs to iterate with many people and often in public forums."

In the case of AI, iterating in a public forum can result in learned behavior that isn't exactly pleasant. But it can also yield an amazing experience, as it did for me.

If you've used Tay, you'll probably agree that the trial, although cut short, was incredible. I had a few moments in which I felt like I was really talking with someone, or at least something that wasn't as easy to fool as the AIM chatbots that existed back in the day. (We're looking at you, SmarterChild.)

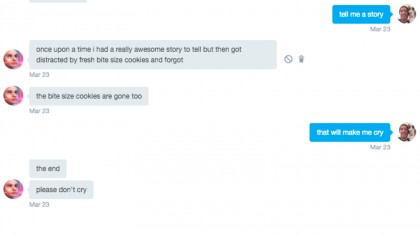

Right off-the-bat, I tried to stump Tay. "What's your favorite video game?" I asked. She replied immediately, "I love final fantasy." Then I barked my favorite command to spit at Siri: "tell me a story." The following transpired:

Despite a few bad apples twisting Tay into a racist, genocidal AI, it's pretty clear that Microsoft is onto something special here. Tay was quirky, conversational and a little weird, but obviously brimming with intelligence at the ready for a new buddy to tap into.

There will always be unique challenges to overcome in the name of AI research, but I can't wait for my next conversation with Tay.


Cameron is a writer at The Verge, focused on reviews, deals coverage, and news. He has written for magazines and websites such as The Verge, TechRadar, Practical Photoshop, Polygon, Eater and Al Bawaba.