'Society cannot function if no one is accountable for AI' — Jaron Lanier, the godfather of virtual reality, discusses how far our empathy should extend to AI in episode two of new podcast, The Ten Reckonings

It’s time for AI to take responsibility

Whether we like it or not, we can’t ignore AI. What started as a fun, gimmicky chatbot on our desktops, albeit one that could talk a bit like a human, is already taking jobs, accessing medical records, and reshaping workplaces. We are rapidly approaching the point where the practical realities of building and governing advanced AI systems must be confronted.

As the recent furor over indecent Grok-generated images on X and the use of Meta AI smart glasses to record women without their permission for social media clicks have shown, the guardrails meant to help society cope with the deluge of AI devices and new technologies seem seriously lacking.

Even before the latest controversies around AI-generated images, one of the biggest shocks to me was the way some AI companies decided it was perfectly acceptable to train their models on copyrighted material from authors and artists without permission – and the fact that, despite a few lingering lawsuits, they appear to have faced few consequences so far.

Zero accountability

Society cannot function if no one is accountable for AI

Technologist Jaron Lanier

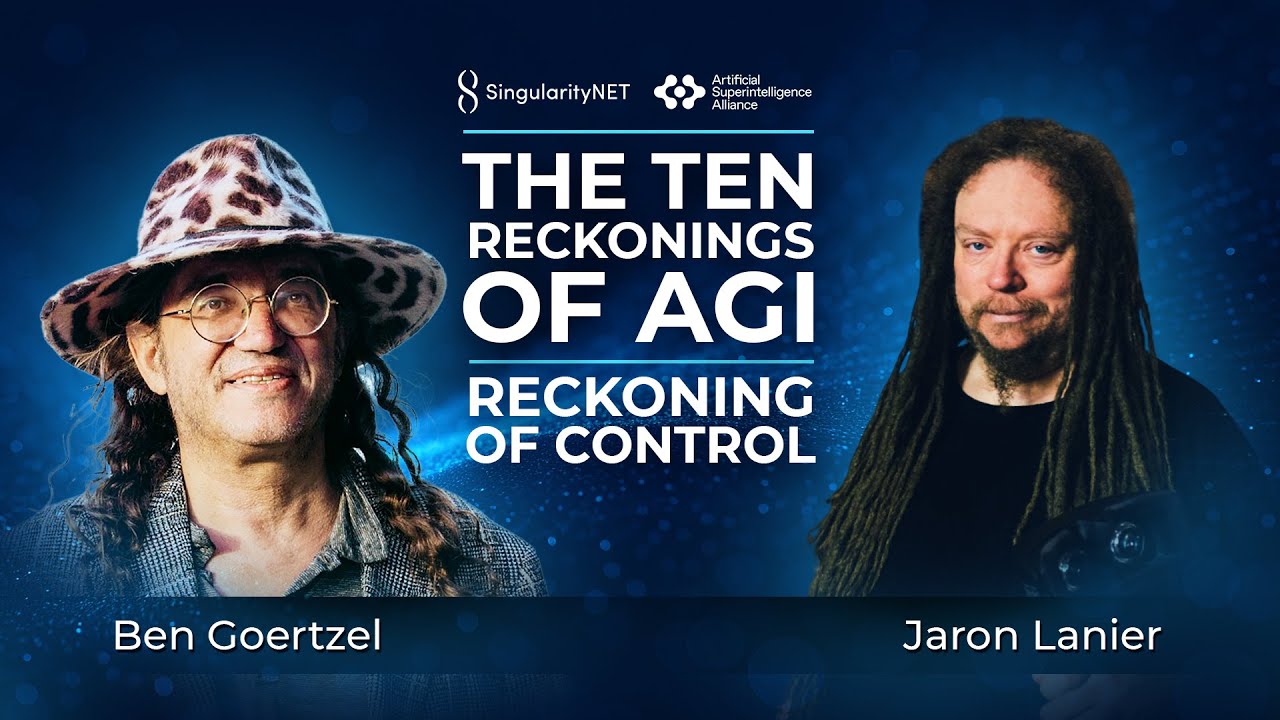

All of this makes me wonder whether we’re really ready for a world in which AI runs everything with zero accountability. Two people grappling with similar questions in the upcoming episode of The Ten Reckonings podcast are technologist Jaron Lanier and Dr Ben Goertzel, CEO of SingularityNET and founder of the ASI Alliance.

“Society cannot function if no one is accountable for AI”, says Lanier, who is often described as the 'godfather of virtual reality'.

This new episode forms part of a series where these issues are explored in depth. According to Goertzel, “The ASI Alliance’s purpose is not to present a unified position, but to create space for the world’s leading thinkers to openly debate and, in doing so, help society reckon with the profound choices ahead.”

Lanier discusses the idea of AI sentience and its implications. He argues: “I don’t care how autonomous your AI is – some human has to be responsible for what it does, or we cannot have a functioning society. All of human society, human experience, and law is based on people being real. If you assign this responsibility to technology, you undo civilization. That is immoral – you absolutely can’t do it.”

Shaping the future

I agree with him. While accelerating toward more autonomous, decentralized AGI could ultimately prove safer and more beneficial than today’s fragmented landscape of proprietary systems with weak guardrails, Lanier’s point about human accountability is exactly right. Right now, AI companies seem to be operating on the assumption that it’s better to beg forgiveness later than ask for permission now, and that approach cannot continue.

And while there appears to be little hope of meaningful AI regulation coming from the US at the moment, the rest of the world may be prepared to step in. The UK regulator Ofcom is launching an investigation into X over Grok, and Indonesia and Malaysia have banned Grok altogether.

At this point we all know that AI is going to shape our future, but the question of responsibility still lingers. Governments are going to have to be willing to step up, because if they hesitate, the current lack of accountability will edge us into even more dangerous territory, whether that’s through images, medical advice, or the protection of our rights. Progress without accountability isn’t innovation; it’s recklessness.

Graham is the Senior Editor for AI at TechRadar. With over 25 years of experience in both online and print journalism, Graham has worked for various market-leading tech brands including Computeractive, PC Pro, iMore, MacFormat, Mac|Life, Maximum PC, and more. He specializes in reporting on everything to do with AI and has appeared on BBC TV shows like BBC One Breakfast and on Radio 4 commenting on the latest trends in tech. Graham has an honors degree in Computer Science and spends his spare time podcasting and blogging.