This AI is learning how to watch football

He's blatantly offside

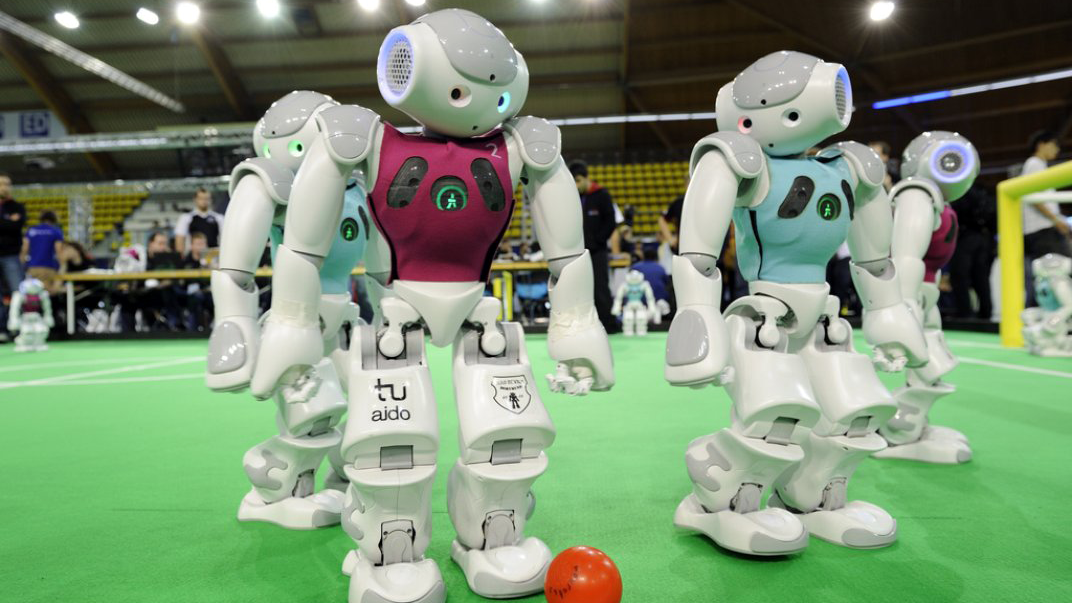

I have a confession to make, dear reader. I don't really follow football very closely. I'm only vaguely aware that there's some sort of big tournament on right now. But that's okay, because Disney Research and the California Institute of Technology are building an AI that does follow football -- and other sports, too.

Specifically, it's an automated camera system that's learning how best to film matches by watching how human camera operators behave at particular moments. Early testing shows that its shots are far smoother than those produced by other automated cameras.

Optical tracking isn't yet good enough to reliably follow a ball on a pitch, so automated camera systems try to predict the flow of a game by detecting player positions instead. That approach isn't perfect, resulting in jittery, jerky footage - especially when the system guesses wrong about how a situation will unfold.

Human cameramen, on the other hand, are a lot better at guessing what's going to happen - having seen many similar situations play out in the past. So researchers developed machine learning algorithms that compare the movements made by a robot camera to those made by humans, analysing where the two deviate and learning from those differences.
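To make the idea concrete, here is a minimal sketch of learning a camera pan from a human operator's example. It is not the researchers' method: the data is synthetic, the one-feature linear fit stands in for whatever model they actually trained, and the names (`centroid_x`, `fit_line`, `smooth`, `jitter`) and the exponential smoothing step are assumptions made for illustration only.

```python
import math
import random


def centroid_x(players):
    """Mean x-coordinate of the detected players in one frame."""
    return sum(players) / len(players)


def fit_line(xs, ys):
    """Ordinary least squares fit of ys ~ slope * xs + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x


def smooth(values, alpha=0.2):
    """Exponential moving average; a smaller alpha gives smoother output."""
    out = [values[0]]
    for v in values[1:]:
        out.append(alpha * v + (1 - alpha) * out[-1])
    return out


def jitter(values):
    """Mean absolute frame-to-frame change, a crude smoothness measure."""
    steps = [abs(b - a) for a, b in zip(values, values[1:])]
    return sum(steps) / len(steps)


# Synthetic match (assumption): play drifts up and down the pitch, player
# detections are noisy, and a hypothetical human operator pans smoothly
# to follow the true centre of play.
rng = random.Random(0)
features, human_pan = [], []
for t in range(200):
    centre = 50 + 30 * math.sin(t / 30)
    players = [centre + rng.gauss(0, 8) for _ in range(10)]
    features.append(centroid_x(players))
    human_pan.append(0.9 * centre - 45)  # pan angle in degrees

# Learn to imitate the operator from player positions alone.
slope, intercept = fit_line(features, human_pan)
raw_pan = [slope * x + intercept for x in features]
smooth_pan = smooth(raw_pan)

print(f"jitter of raw predictions:      {jitter(raw_pan):.2f} deg/frame")
print(f"jitter of smoothed predictions: {jitter(smooth_pan):.2f} deg/frame")
```

The raw predictions inherit the noise in the player detections, which is the jerky behaviour described above. The smoothing step trades a little lag for steadier motion.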

Smooth and purposeful

"Having smooth camera work is critical for creating an enjoyable sports broadcast," said Peter Carr, a senior research engineer on the project and a co-author on a paper describing it. "The framing doesn't have to be perfect, but the motion has to be smooth and purposeful."

There were times that the robots did a better job than the humans. In one fast break in a basketball game, the human camera operator moved his lens in anticipation of a dunk, while the computer looked at the positions of players and predicted a pass instead. The computer turned out to be right.

In fact, the system was generally a little better at basketball than at football. Carr said that this is because football players tend to hold their formation, and so their movements give less information about where the camera should look.


"This research demonstrates a significant advance in the use of imitation learning to improve camera planning and control during game conditions," said Jessica Hodgins, vice president at Disney Research. "This is the sort of progress we need to realise the huge potential for automated broadcasts of sports and other live events."


Duncan Geere is TechRadar's science writer. Every day he finds the most interesting science news and explains why you should care. You can find him on Twitter under the handle @duncangeere.