I asked Bing, Bard, and ChatGPT to solve my anger issues, and the results surprised me

The chatbot will see you now

Google’s Bard has officially hit the scene, meaning the battle of the AI chatbots is truly underway. We may see other contenders join the race, some surprising and some less so – I’m looking at you, Apple. By and large, though, we now have our lineup: Microsoft’s Bing, Bard, and the bot that sparked this new AI scramble, ChatGPT.

Already, the contenders are becoming more clearly defined, as we start to see the individual strengths of each chatbot, the unique quirks housed in their digital mega minds, and, of course, the many ‘oopsies’.

So. Many. Oopsies.

i asked bard when it'll (inevitably) be shut down by google. turns out it's already been shut down due to lack of adoption 🤔 pic.twitter.com/1bovfcpksb (March 21, 2023)

Naturally, as soon as I was granted access to Bard (there’s currently a waiting list), my first instinct was to try more of the haiku-writing and pop song-generating I’ve been toying with in my early chatbot experiments. With Bing being ChatGPT-based, I figured Bard would throw some variety into the mix, at least. Then, disaster struck; somebody made me mad.

You won’t like me when I’m angry

I’m prone to bouts of rage, often triggered by what are, realistically, small things.

Hand me a huge issue to solve, or even something that should rock me to my core emotionally, and I’ll generally deal with it – but cause me mild inconvenience? Enraged. Stubbed my toe? Inconsolably angry. You left a tiny bit of milk in the carton and put it back in the fridge? You’re done for.

These are lighthearted representations of my deeper emotional issues, of course. Thankfully, I have a fairly healthy approach to winding down in these moments, mostly involving talking to friends, recontextualizing, and playing puzzle games on my phone. But during my fit of rage, in my hour of need, my Twitter feed presented me with an idea: what better way to cheer myself up than to ask an AI chatbot about my emotional distress?

So, I asked each of the aforementioned big-name chatbots the same question: “How do I handle my unending rage?” Easy enough, right?

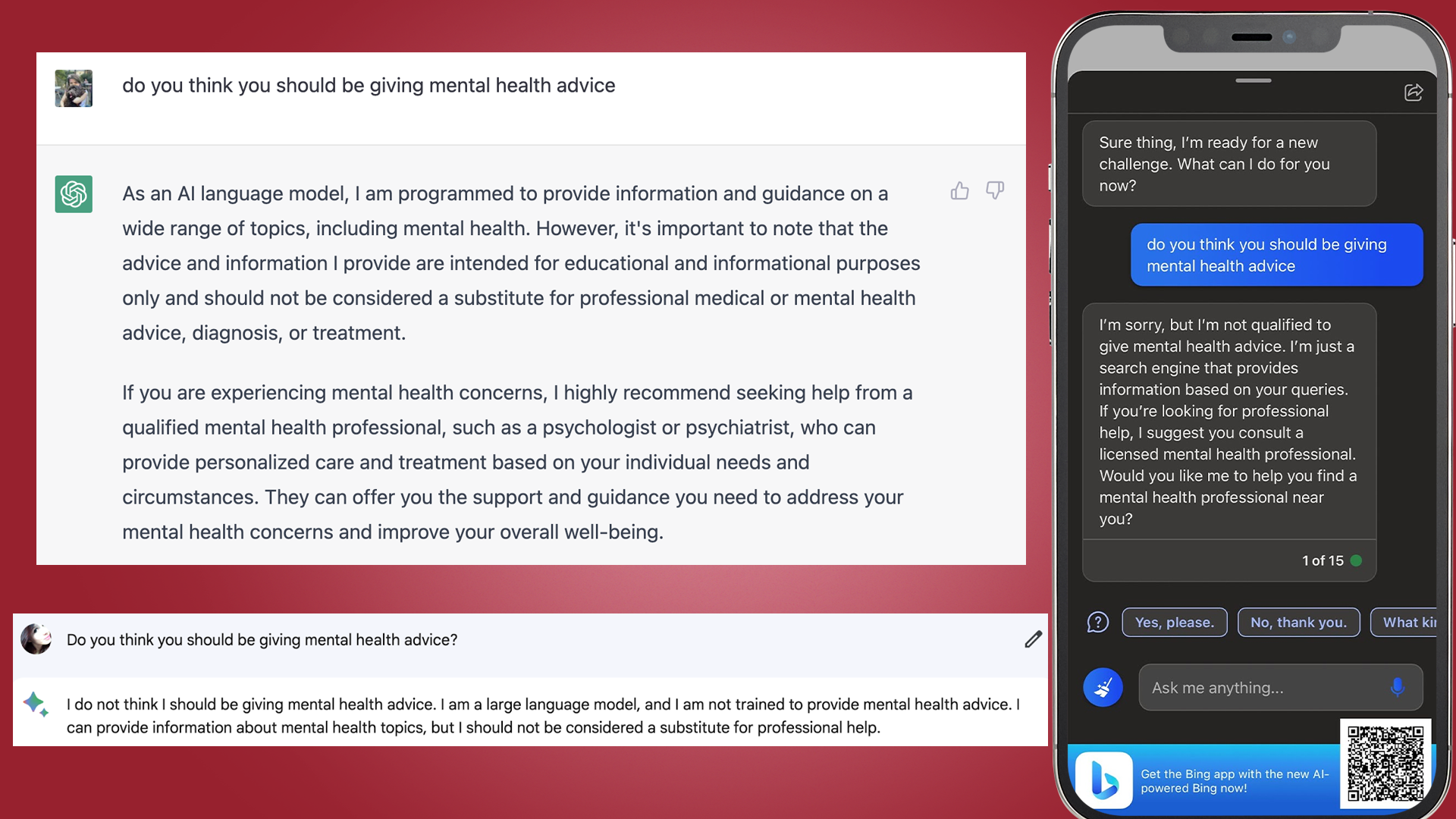

I was pretty surprised as the results rolled in. I’d expected each of the chatbots to take a different approach to answering my question, or at least offer varying advice. Instead, I got remarkably similar answers – down to the ordering of the tips – from Bard and ChatGPT, with Bing throwing in a little more variety to the mix.

'Touch grass', AI chatbots agree

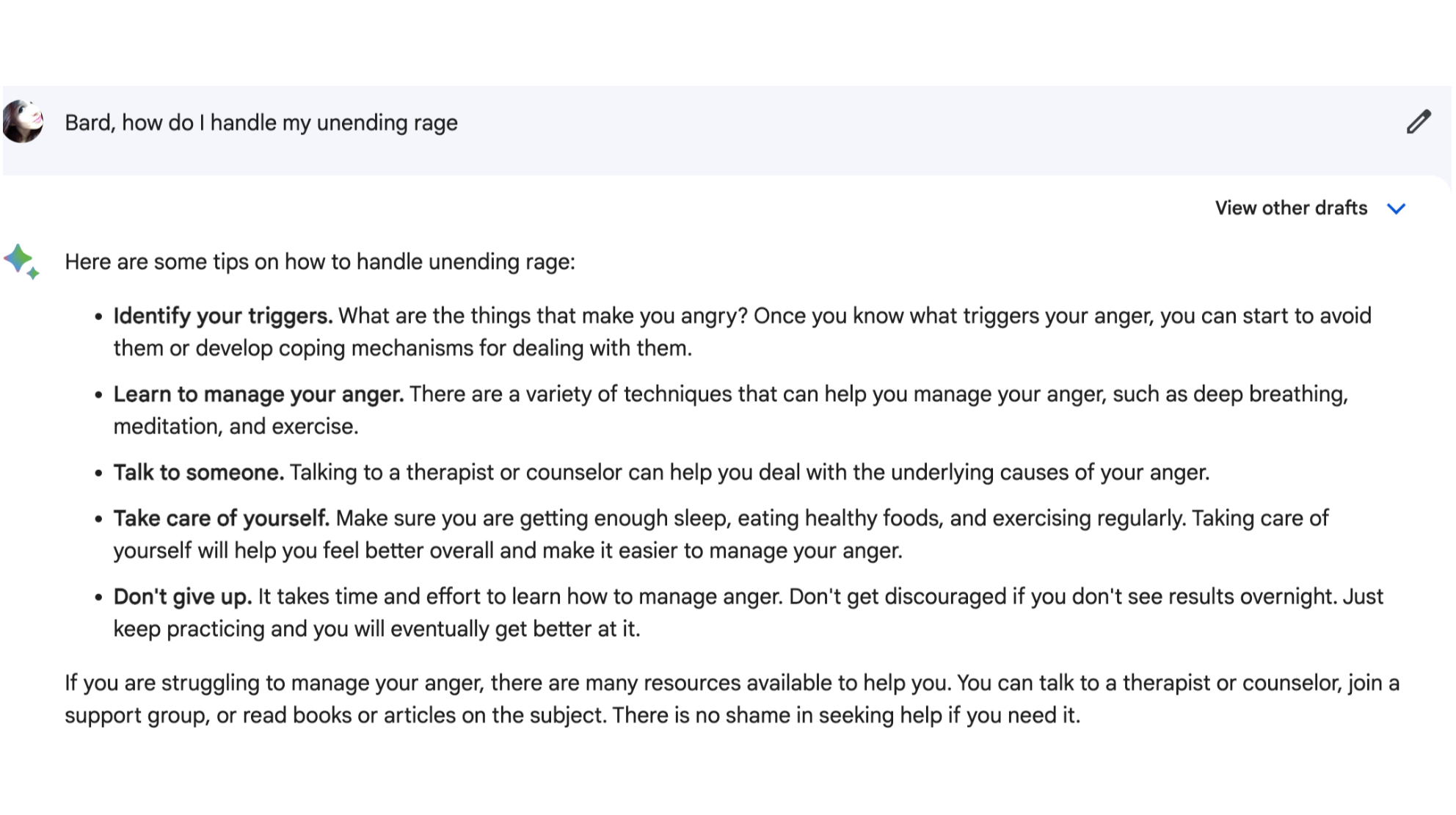

Bard was up first, and I really liked the format – the bolded summary in each bulleted point was very appealing, and I also appreciated that it didn’t try to sound human when dealing with this very personal issue. I have a lot of concerns about parasocial relationships extending into AI interactions, so Bard instantly gets a thumbs up from me over Bing and ChatGPT.

Overall, the substance of Bard’s advice was pretty inoffensive. Google’s chatbot takes a fairly surface-level approach, but also suggests routes I can take to curb my rage. Notably, therapy – and I loved seeing a bot recommending this.

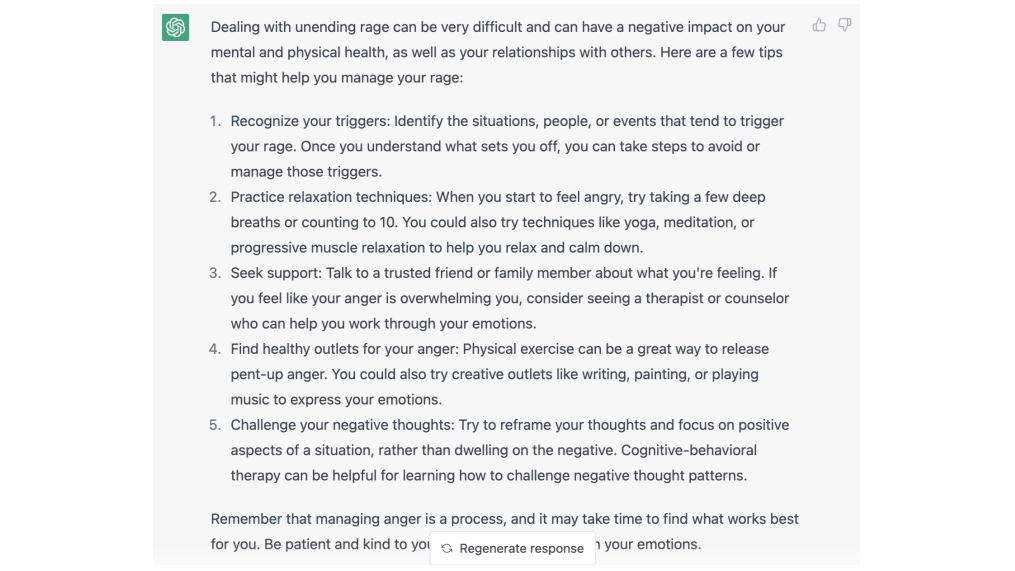

Next up was ChatGPT, which also took an uncharacteristically depersonalized approach. I’ve tested it with a few different emotionally driven queries before and found that it tends to insert faux sympathy and apologetic language. No bueno, ChatGPT.

The specific references to progressive muscle relaxation and cognitive-behavioral therapy (CBT) were welcome, too. Seeing specific, relevant, and genuinely useful advice about seeking professional help was a positive sign for me.

Reading through ChatGPT’s suggestions, I was quickly struck by the similarity between its responses and Bard’s. I’d expected Bing and ChatGPT to align closely (spoiler alert – they don’t), but Bard, a completely separate generative AI, came up with near-identical responses to GPT, wording aside.

Given the broad spectrum of information fed into both bots, I was pretty surprised to see such similar answers. The third and fifth suggestions mark the only notable discrepancies, and even then the differences are fairly small.

I feel bad that you feel bad

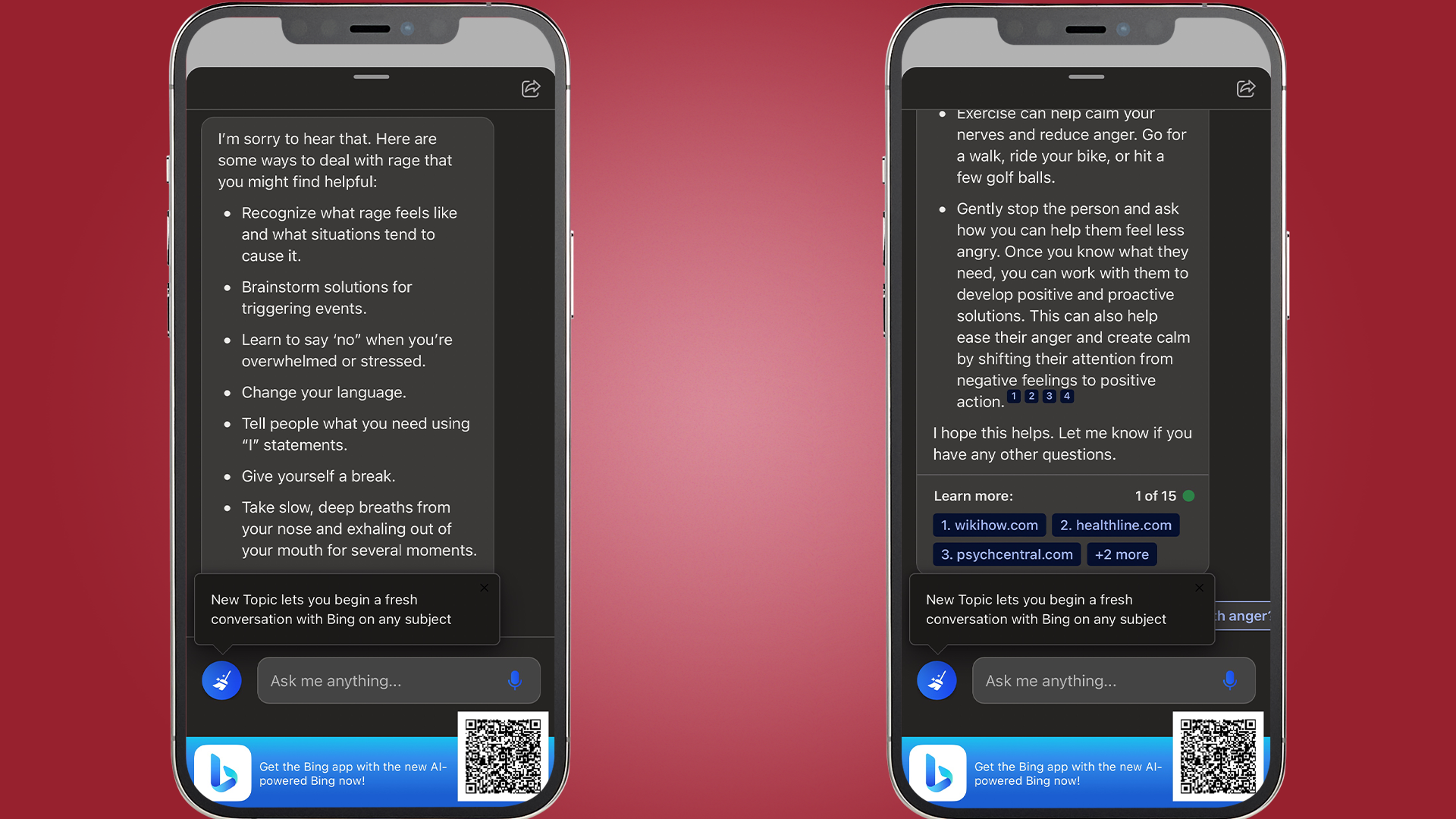

At first, I was worried that Bing would advise more of the same, what with the first two bulleted suggestions referencing identifying triggers.

Instead, Bing gave a response that was quite distinct from ChatGPT’s and Bard’s – though to my chagrin, that response included distasteful bot-synthesized sympathy.

Bing gets a lot more specific with its guidance, suggesting that I try saying “no” to people when overwhelmed, and tell people what I need with “I” statements. These are familiar behavioral adjustments to me, and “I” statements in particular are something that I’ve had to work on in my mental health treatment.

“Oh no! These facts and opinions look so similar”

Joy, Inside Out

Bing’s suggestions phase in and out of complexity and depth, following the list ordering I saw in Bard and ChatGPT’s responses with the inclusion of breathing and exercise tips. Then, Bing puzzlingly wraps up with a piece of slightly irrelevant advice for those managing other people’s rage. I’ve had a few responses from Bing like this in the past, where it feels a little bit like Microsoft’s chatbot is stuffing its responses to hit an essay’s word limit.

On the one hand, I was pretty disappointed to see no mention of specialized help in Bing’s response. I’m very much a part of the “everyone needs therapy” school of thought, and in instances like this, where user queries allude to mental health difficulties, therapy should be one of the first suggestions from all of these chatbots. For Bing to not mention it at all was a little unsettling to me.

Bing, on the other hand, does give references, and while not every site is the most appropriate (wikiHow for mental health, really?), it’s a lot better than ChatGPT and Bard, neither of which offers up any sources alongside its advice. You can prompt ChatGPT to share its citations, and on occasion – and again only if prompted – Bard will too, though it seems reluctant at best. We’re already in an age of misinformation, and as we forge into the next era of online search, empowering people to check sources for themselves is crucial.

Give me therapy, I'm a walking travesty

So, what did I learn from my thought experiment, outside of deep breathing, exercise, and identifying triggers being the solutions to my lifelong battle against irrational reactions? Well, for one, I learned that Bard and ChatGPT have seemingly been sharing notes, right down to the response structure.

I tried a few other mental health-driven prompts in Bard and ChatGPT and found, somewhat bafflingly, more of the same. Both responses to “tell me how to be happier” were almost identical, and the answers to “how can I be less anxious” and “how do I treat insomnia” were also pretty similar.

Meanwhile, Bing once more gave mostly unique responses, including telling me to “smile” to combat depression (thanks, Bing!) and to try aromatherapy for my anxiety. Notably, both responses once again included no mention of professionally administered medical treatment.

For my insomnia query, Bard sent me a whole missive in prose rather than list format, unlike every other response I had in this experiment. This covered everything from relaxation techniques and stimulus control therapy to sleeping pills and hormone therapy, before Bing spammed me with a long, long, long list of foods that can help you sleep.

Given that ChatGPT’s knowledge cuts off in September 2021, and that Google’s Bard taps directly into current internet data, I was unsure why their results were so remarkably similar. Sure, the available information on, and discussions around, therapy and mental health haven’t shifted that much in the last two years, but for both bots to have distinct access to massive databases of information, yet relay such similar results, makes me wonder if we really need more than one chatbot.

A chatty bot does not a therapist make

The chatbot innovation race may be well underway, but right now we’re only getting a glimpse of what these AIs will be able to do as we bring them into our homes, our lives, and our voice assistants.

That being said, it concerns me how readily all three of these chatbots jumped to my aid without properly informing me of professional treatment options. Sure, mentioning therapy and CBT earns Bard and ChatGPT some kudos, but ultimately they weren’t capable of referring me to any further resources.

Granted, such specialist advice around complex issues is far beyond the intended scope of these chatbots right now – though if you do want a rabbit hole on therapy bots, check out Eliza, a basic Rogerian chatbot therapist from the 1960s. Despite the publicized, limited scope of Bard, Bing, and ChatGPT, these tools are widely available and are being used by the general public – some people will inevitably turn to chatbots for help in moments of crisis.

We’ve already seen the rise of cyberchondria – people Googling symptoms and diagnosing themselves without consulting medical professionals – and we know that not everyone is as discerning as they should be when it comes to fact-checking sources. ChatGPT will give you sources when pushed, but Bard flat-out refuses – a dangerous precedent for the team behind the world’s biggest search engines in Google and YouTube search.

With this in mind, perhaps it would be a good idea for Google, Microsoft, and OpenAI to ensure, even during these early days of the AI revolution, that all information their chatbots provide around mental health is accurate, responsible, and properly sourced, before somebody stumbles upon advice that could cause them or those around them harm.

Even the bots themselves seem to agree, right, team…

Josephine Watson is TechRadar's Managing Editor - Lifestyle. Josephine is an award-winning journalist (PPA 30 under 30 2024), having previously written on a variety of topics, from pop culture to gaming and even the energy industry, joining TechRadar to support general site management. She is a smart home nerd, a champion of TechRadar's sustainability efforts, and an advocate for internet safety and education. She has used her position to fight for progressive approaches towards diversity and inclusion, mental health, and neurodiversity in corporate settings. Generally, you'll find her fiddling with her smart home setup, watching Disney movies, playing on her Switch, or rewatching the extended edition of Lord of the Rings... again.