Robots are learning how to be stylish

Laurence Llewelyn-Robot

Humans are pretty good at telling when two pieces of furniture "go" together, stylistically speaking, but the same task is very hard for robots. That's changing, thanks to a team of computer scientists at the University of Massachusetts Amherst.

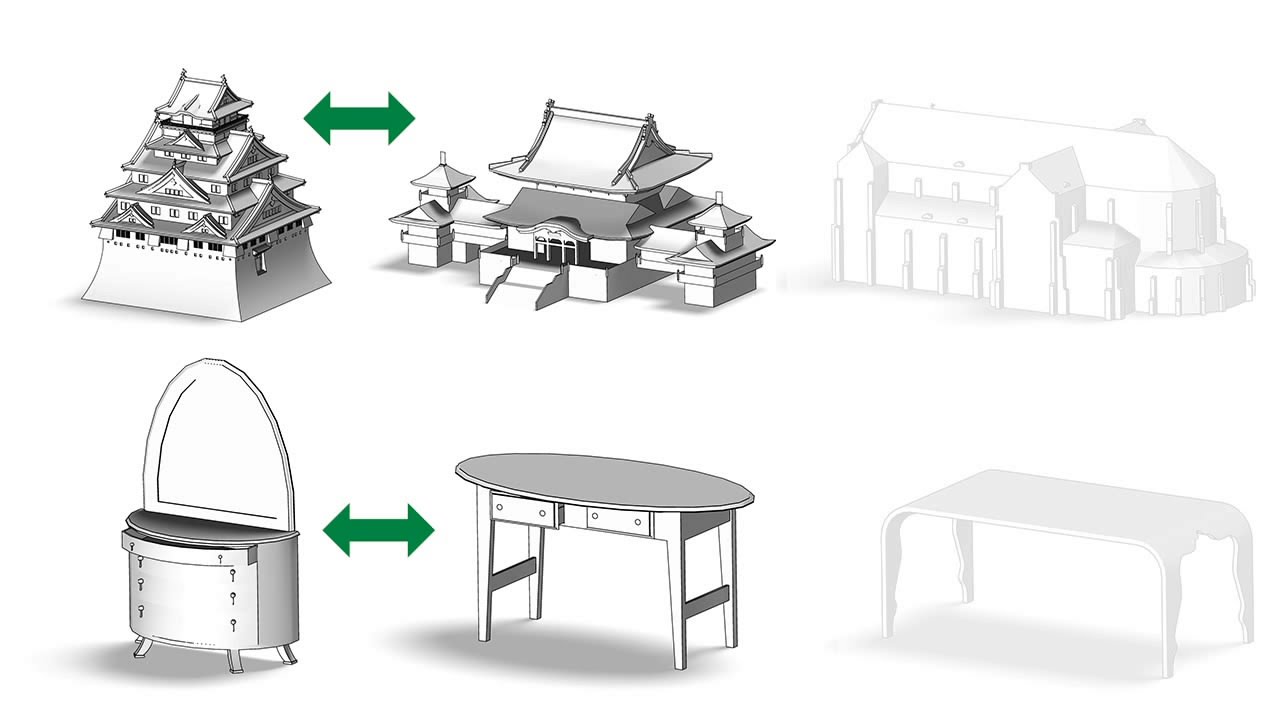

They've developed an algorithm that lets a computer recognise the style of an object, drawing on observations about style similarity in art history. It uses factors like shape, proportion, lines and distinct visual motifs to classify objects and see if they go together.
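To get a feel for the general idea, here's a minimal Python sketch that scores how close two objects are in style. It is not the team's actual algorithm: the feature names simply mirror the factors listed above, and the scores, weights and function names (`style_distance`, `more_similar`) are made up for illustration.

```python
from math import sqrt

# Hypothetical features, named after the factors mentioned in the article.
FEATURES = ("shape", "proportion", "lines", "motifs")


def style_distance(a, b, weights):
    """Weighted Euclidean distance between two feature dictionaries."""
    return sqrt(sum(weights[f] * (a[f] - b[f]) ** 2 for f in FEATURES))


def more_similar(reference, candidate_1, candidate_2, weights):
    """Return the name of the candidate that is closer in style to the reference."""
    d1 = style_distance(reference, candidate_1, weights)
    d2 = style_distance(reference, candidate_2, weights)
    return "candidate_1" if d1 <= d2 else "candidate_2"


# Invented scores in [0, 1]; a real system would compute these from 3D geometry.
weights = {"shape": 1.0, "proportion": 1.0, "lines": 1.0, "motifs": 1.0}
armchair = {"shape": 0.9, "proportion": 0.8, "lines": 0.7, "motifs": 0.9}
sofa = {"shape": 0.8, "proportion": 0.8, "lines": 0.7, "motifs": 0.8}
stool = {"shape": 0.2, "proportion": 0.3, "lines": 0.1, "motifs": 0.0}

closest = more_similar(armchair, sofa, stool, weights)
print(closest)
```

In this toy example the sofa's scores sit much nearer the armchair's than the stool's do, so the sofa is picked as the better stylistic match. The weights are all equal here; a learned system would presumably tune them against human judgements.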

To test it, they compared the algorithm's classifications in a range of categories, including buildings, furniture, lamps, coffee sets, pillars, cutlery and dishes, with classifications from human respondents recruited on the web. The algorithm's answers matched the human ones 90% of the time on average, which is better than the agreement level between different humans.
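An agreement figure like that can be computed in a few lines. The sketch below assumes each object gets one label from the algorithm and several votes from human respondents, and counts a match when the algorithm agrees with the most common human vote. The labels, the votes and the majority-vote rule are assumptions for illustration, not details taken from the study.

```python
from collections import Counter


def majority_label(votes):
    """Most common label among the human votes (ties go to the first seen)."""
    return Counter(votes).most_common(1)[0][0]


def agreement_rate(algorithm_labels, human_votes):
    """Fraction of objects where the algorithm matches the human majority."""
    matches = sum(
        1
        for algo, votes in zip(algorithm_labels, human_votes)
        if algo == majority_label(votes)
    )
    return matches / len(algorithm_labels)


# Invented example: four objects, three human votes each.
algorithm_labels = ["A", "B", "A", "A"]
human_votes = [
    ["A", "A", "B"],
    ["B", "B", "A"],
    ["B", "B", "A"],
    ["A", "A", "A"],
]

rate = agreement_rate(algorithm_labels, human_votes)
print(rate)
```

Here the algorithm matches the human majority on three of the four objects, so the rate comes out at 0.75.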

Aesthetically and Stylistically Plausible

Its creators, a team led by Evangelos Kalogerakis, hope that the algorithm could be used in image databases, in videogames, and in augmented reality applications. Similar algorithms are being created for fashion, too.

"We hope that future 3D modeling software tools will incorporate our approach to help designers create aesthetically and stylistically plausible 3D scenes, such as indoor environments," said Kalogerakis.

"Our approach could also be used by 3D search engines on the web to help users retrieve 3D models according to style tags. It will be exciting to see all the ways people will find to use it."

The team's algorithm was presented at the SIGGRAPH 2015 conference in Los Angeles. For those who like watching more than reading, the team has also put together a video summary of their work.
