Where next for speech recognition on the Mac?

Voice recognition needs to get smarter to become popular

Headsets with a push-to-talk or mute button are the most useful as they avoid accidental inputs if you happen to clear your throat or begin a conversation with a friend or co-worker.

The application is constantly working to learn your voice in order to provide a flawless experience, and you can return to the practice tests at any point to give it a clearer idea of the way you speak. You can also create profiles for different locations where there may be background noise, such as in an office or a coffee shop (although how many of us would want to be speaking out loud to a computer in a public place?).

Despite the comma issue (which Dictate seems to think should be "congress you") it's very easy to ramble on for hours and hours, while the application hastily notes down everything you say.

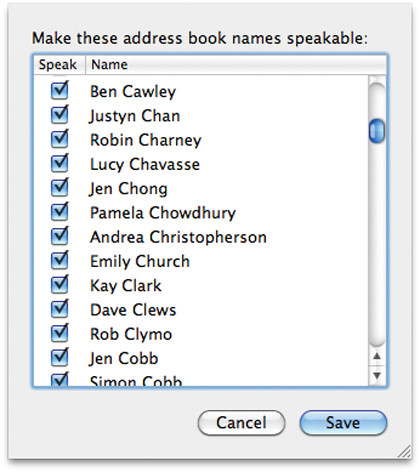

Nuance also provides a piece of software called Scribe, which does largely the same job as Dictate, except it works with audio files you have recorded previously using an iPhone or another recording device.

Again, this software has to learn your voice before it can accurately transcribe your audio file and can only do so when it has a profile created. Once complete, it's a simple process of importing your audio note, checking for errors and receiving the transcribed text.

The same applies to the Dragon Dictation app available for iPhone and iPod, which does a pretty good job of recognising your voice in real-time and saving it as text.

While Dragon is the best way we have found to control applications and accurately dictate, it doesn't provide the totally hands-free experience one might expect. While it's a great deal easier to walk around the room calmly speaking your thoughts while the computer does the work, there has to be a level of editing and adjustment before you save your final copy.

Once again, as if to illustrate the point, we just changed 'savior' to the correct 'save your' in the last line. We dictated more than 50% of this article, amounting to 1000 words or so, and found we only had to weed out a few common mistakes such as similar-sounding words, grammatical errors and missing capitalisation, but it was light work in comparison with many options we've tried before.

Get bossy

It seems that speech recognition isn't quite at the level one would expect at this stage in its development. The software understands what we are saying and can accurately transcribe those words, and it can also perform basic commands based on voice input. But it's perhaps the software performing the actions, rather than the engine transcribing the text, that needs further development.

Rather than simply telling a computer to check for mail, which you could do in the same amount of time with a mouse click, why can it not answer more complex questions such as "Do I have any important email?"

There would be more use in a method of using simple scripts along the lines of Google's priority inbox, which understands that when you say "important" you mean a specific set of contacts who may have emailed you.
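As a rough illustration of what such a script might look like, here is a minimal Python sketch. It assumes "important" simply means unread mail from a fixed set of contacts; the `Email` class, the `IMPORTANT_CONTACTS` set and the addresses in it are all hypothetical, and nothing here reflects how Google's priority inbox or any Mac mail client actually works.

```python
from dataclasses import dataclass

# Hypothetical set of contacts the user treats as important (illustration only).
IMPORTANT_CONTACTS = {"dave@example.com", "boss@example.com"}


@dataclass
class Email:
    """A stand-in for a message in the inbox (not a real mail API)."""
    sender: str
    subject: str
    unread: bool = True


def important_unread(inbox, contacts=IMPORTANT_CONTACTS):
    """Return unread messages whose sender is in the important-contacts set."""
    return [m for m in inbox if m.unread and m.sender.lower() in contacts]


def answer_important_mail(inbox):
    """Build a spoken-style answer to "Do I have any important email?"."""
    hits = important_unread(inbox)
    if not hits:
        return "No important email."
    senders = sorted({m.sender for m in hits})
    return f"Yes, {len(hits)} from {', '.join(senders)}."
```

The speech engine would only need to map the spoken question to `answer_important_mail`; the "understanding" lives in the contact list, which is the part that would have to be learned or configured.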

The same is true of apps such as iCal, where scheduling meetings or events currently isn't as simple as one might think. What if you were able to say to your computer "Set lunch with Dave tomorrow at two", and the computer understood your command, set the calendar date, emailed Dave and even went ahead and reserved a table at your favourite restaurant using an online booking form?
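To give a sense of the first step, turning the transcribed sentence into a calendar entry, here is a small Python sketch. It is only an assumption about how such a command could be handled: the `parse_command` function, the fixed sentence pattern and the rule that a bare hour below eight means the afternoon are all invented for illustration, and it does not touch iCal, email or any booking service.

```python
import re
from datetime import datetime, timedelta

# Spoken hour words the sketch understands (assumption: whole hours only).
NUMBER_WORDS = {
    "one": 1, "two": 2, "three": 3, "four": 4, "five": 5, "six": 6,
    "seven": 7, "eight": 8, "nine": 9, "ten": 10, "eleven": 11, "twelve": 12,
}

# Hypothetical fixed pattern: "set <event> with <person> today|tomorrow at <hour>".
PATTERN = re.compile(
    r"^set (?P<title>\w+) with (?P<person>\w+) "
    r"(?P<day>today|tomorrow) at (?P<hour>\w+)$",
    re.IGNORECASE,
)


def parse_command(text, now):
    """Turn a transcribed command into an event dict, or None if not understood."""
    match = PATTERN.match(text.strip())
    if match is None:
        return None
    hour = NUMBER_WORDS.get(match.group("hour").lower())
    if hour is None:
        return None
    # Assumption: a bare hour from one to seven means the afternoon.
    if hour < 8:
        hour += 12
    offset = 1 if match.group("day").lower() == "tomorrow" else 0
    day = now.date() + timedelta(days=offset)
    return {
        "title": match.group("title").capitalize(),
        "with": match.group("person"),
        "start": datetime(day.year, day.month, day.day, hour, 0),
    }
```

Passing the current time in as `now` keeps the function easy to check. Anything that doesn't fit the pattern returns `None`, which is roughly where today's software gives up.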

The technology exists; it's just a question of how it's applied. And this is where the crossover between desktop and mobile voice recognition is making the biggest difference.