Multisearch could make Google Lens your search sensei

Google’s making good on an old promise to enhance search results

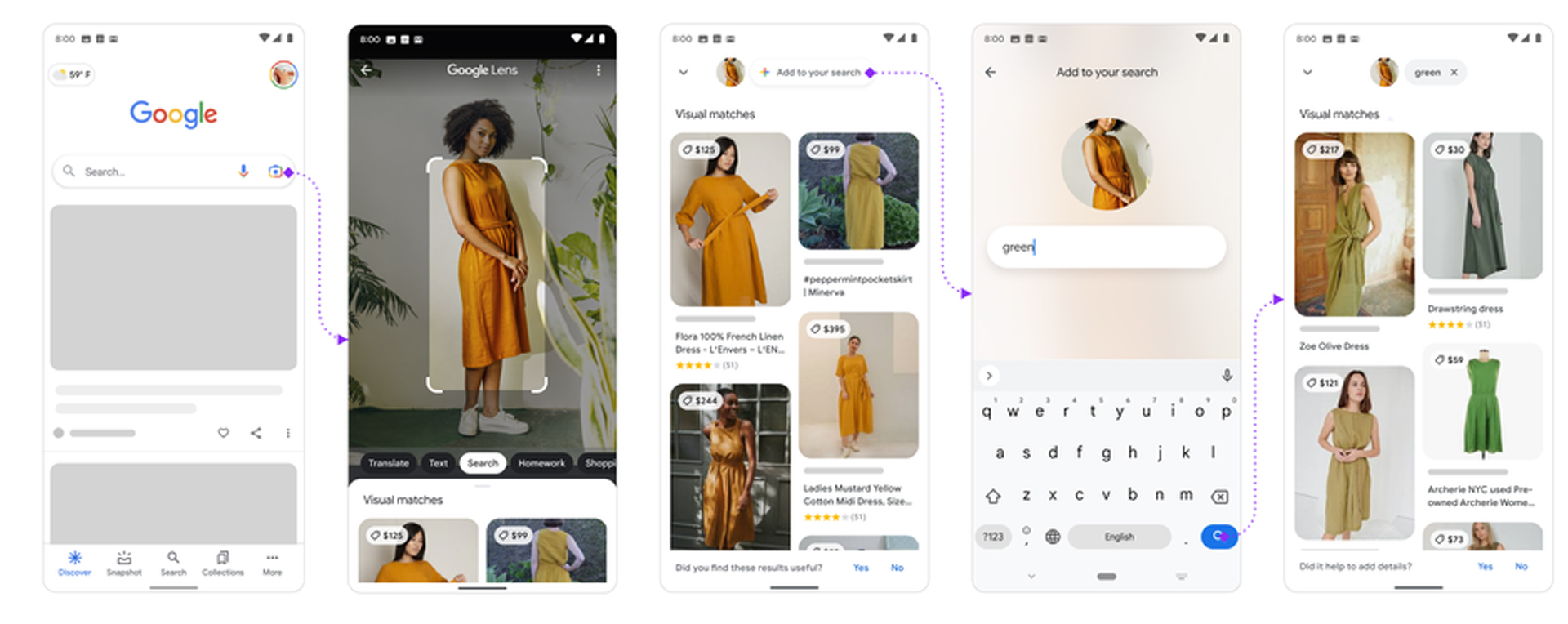

Google searches are about to get even more precise with the introduction of multisearch, a combination of text and image searching with Google Lens.

After making an image search via Lens, you’ll now be able to ask additional questions or add parameters to your search to narrow the results down. Google’s use cases for the feature include shopping for clothes with a particular pattern in different colors or pointing your camera at a bike wheel and then typing “how to fix” to see guides and videos on bike repairs. According to Google, the best use case for multisearch, for now, is shopping results.

The company is rolling out the beta of this feature on Thursday to US users of the Google app on both Android and iOS. Just tap the camera icon next to the microphone icon or open a photo from your gallery, select what you want to search, and swipe up on your results to reveal an “add to search” button where you can type additional text.

This beta is a public trial of a feature the search giant has been teasing for almost a year: Google discussed it when introducing MUM at Google I/O 2021, then provided more information in September 2021. MUM, or Multitask Unified Model, is Google’s new AI model for search.

MUM replaced the old AI model, BERT (Bidirectional Encoder Representations from Transformers). According to Google, MUM is around a thousand times more powerful than BERT.

Analysis: will it be any good?

It’s in beta for now, but Google made a big hoopla about MUM during its announcement. From what we’ve seen, Lens is usually pretty good at identifying objects and translating text. The AI enhancements add another dimension and could make it a more useful tool for finding information about the specific thing you're looking at, as opposed to general information about something like it.

It does, though, raise questions about how good it’ll be at pinpointing exactly what you want. For example, if you see a couch with a striking pattern on it but would rather have it as a chair, will you be able to reasonably find what you want? Will it be at a physical store or at an online storefront like Wayfair? Google searches often return inaccurate physical inventories for nearby stores; are those getting better as well?

We have plenty of questions, but they’ll likely only be answered once more people start using multisearch. AI models like this tend to get better with use, after all.

Luke is a nerd through and through. His two biggest passions are video games and tech, with a tertiary interest in cooking and the gadgets involved in that process. He spends most of his time between those three things, chugging through a long backlog of games he was too young to experience when they first came out. He'll talk your ear off about game preservation, negative or positive influences on certain tech throughout its history, or even his favorite cookware if you let him.