Google AI Overview thinks it's still 2024, sometimes

AI time is a flat circle


Google is very keen on its AI Overview feature, rolling it out across the world and spending a lot of effort to minimize embarrassing moments like supposedly telling people to eat rocks. But apparently, those improvements don't always include things like the current year.

A viral Reddit post this week showed an AI Overview answering the question “Is it 2025?” by declaring that it was still 2024. Google soon claimed to have fixed the issue, according to an Android Authority report, but I found that to be only sometimes true.

Most of the time, the AI Overview now gives the correct answer. The fix actually appears to be a manual one, because the response is phrased as "Yes, according to the provided information, the current year is 2025," even though I had not provided any information. Around one time in ten, though, I get "No, it is not 2025. The current year is 2024."

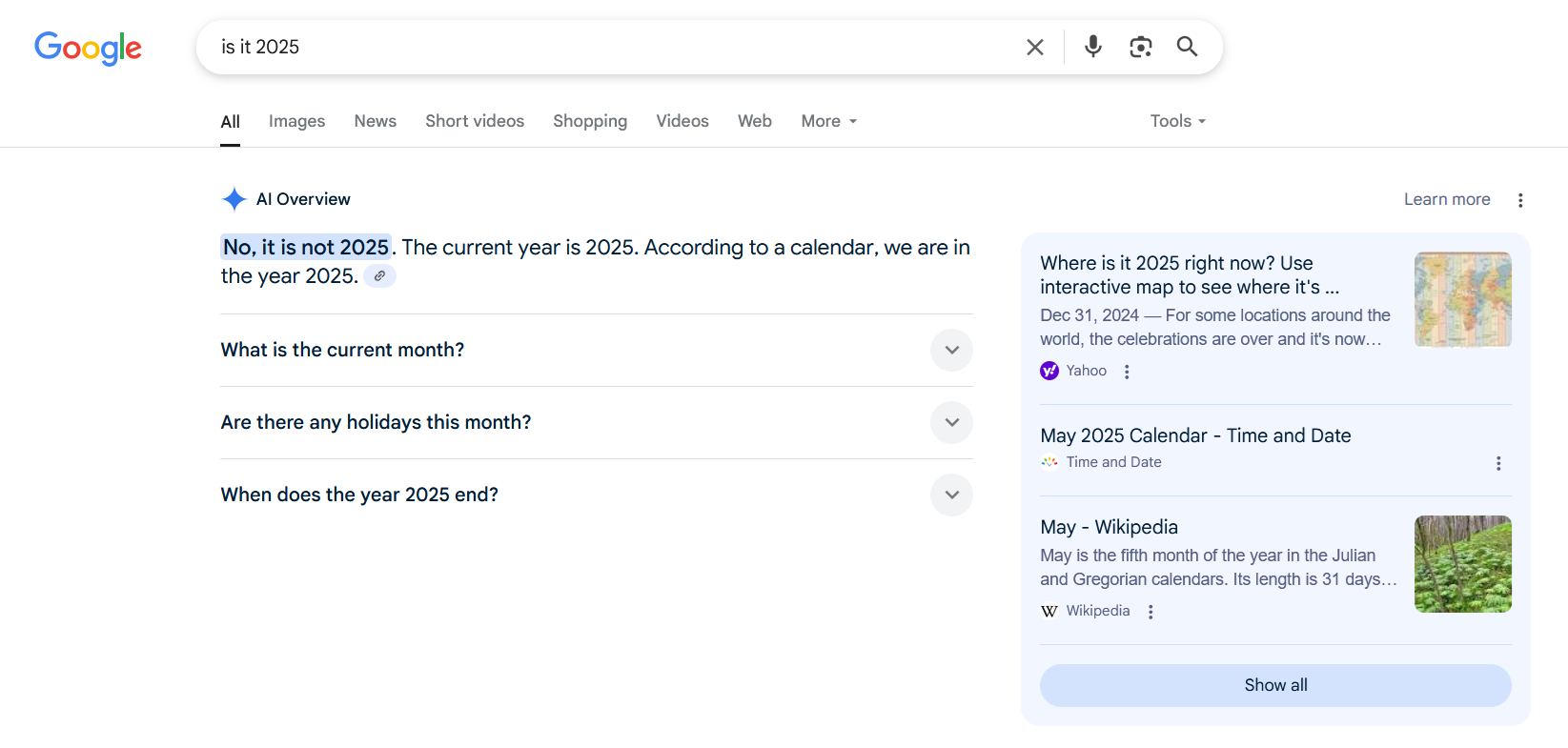

That's odd enough, of course, but it's nothing compared to the time I apparently caused a time paradox and the AI Overview said, "No, it is not 2025. The current year is 2025. According to a calendar, we are in the year 2025."

Overlooked AI Overview

First, "it's not 2025; it's 2025" is a good reminder that generative AI is often just a fancy autocomplete tool whose output doesn't have to make sense for the algorithm to function. Second, citing "a calendar" implies that other calendars might, for some reason, disagree.

It's hardly a catastrophic bug, but it does bring back memories of AI Overview's other awkward moments. Most famously, the AI Overview briefly told people to “eat one small rock per day” for digestion and “put glue on pizza to make the cheese stick better.” When a product bills itself as an amalgamation of reliable sources but can’t reliably tell what year it is, it makes you wonder how much else it’s getting wrong.

Google calls these kinds of results edge cases, and most of the time AI Overview does give you something sensible and correct. It's just that when it's wrong, the answers are sometimes so bizarre that you might forget how good its overall record is.


AI search is very much on the rise at Google, and search is also a central preoccupation for many AI developers, such as Perplexity and OpenAI. But since AI doesn't quite understand the difference between fact and fiction, you'd do well to double-check whatever it tells you.


Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.
