We've all got a very sophisticated processing unit – the brain – that can perform some remarkable tasks.

Despite their speed and memory capacity, silicon-based computers struggle to emulate it. The branch of computer science called Artificial Intelligence tries to narrow the gap, and one of the basic tools of AI is the neural network. So let's take a look at what the neural network can do.

Over the years, Artificial Intelligence has had its ups and downs. Generally there would be a period of 'up' when, after a short run of successful papers, researchers would start making prognostications about their discipline that would grow ever more fanciful. This would naturally lead to a period of 'down' when these predictions did not come to pass.

However, just as spin-offs from the space program have made their way into retail products, spin-offs from AI are becoming part of our lives through intelligent software, even though we may not recognise it as such.

Keeping it real

One fairly recent example that comes to mind is the ability of some point-and-shoot cameras to detect when a face is in shot and hence focus on that face. The face detection software is remarkably fast and rarely wrong, so when taking portraits with these cameras it's easy to trust that the faces of the subjects will be in focus and exposed correctly.

Apple's new version of its iPhoto app goes one step further: it includes face recognition software. Import your photos into iPhoto, and it will detect faces. It's then able to recognise the same faces in different photos. Once you've 'named' the face, iPhoto will annotate the picture with the faces it recognises.

Another application of AI algorithms, this one more business-oriented, is voice recognition in programs like Dragon Naturally Speaking and OSes like Windows 7.

Some cars that include optional 'Technology' packages also have voice recognition for controlling the car's interior functions like the radio or the heating. (I've given up talking to my car: since I'm British but living in the States, the car's voice recognition software doesn't 'get' my voice, perhaps because it's optimised for a US accent.)

Yet another example is OCR (Optical Character Recognition). Here the state of play is quite remarkable, with the top-end packages declaring over 99 per cent accuracy for typewritten or typeset text. Even the old Palm Pilot PDAs had very constrained – yet very successful – handwriting recognition software; once you'd trained yourself to write the modified characters, the PDA recognised them as swiftly as you could write them.

Although these AI applications use many different techniques to do their magic, there is a very fundamental building block called the neural network, of which many of these techniques are but refinements.

How it works

Before we can get an appreciation of what a neural network does, we should look at the biological background from which it is derived.

If you looked at a brain under a microscope you'd see that it consists of specialised cells called neurons. Neurons are peculiar cells indeed.

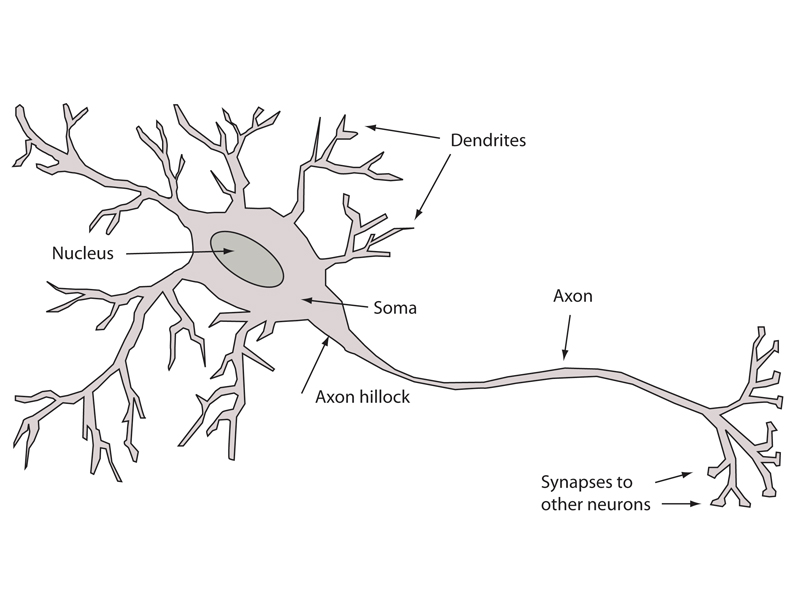

The main body of the neuron is called the soma, and it has a veritable forest of dendrites through which input signals arrive. If enough input signals arrive, the resulting change in voltage will cause the axon hillock to fire its own signal down the axon, a comparatively long extension of the cell.

The axon branches out towards the end, and at the end of each branch is a synapse that connects to a dendrite of another neuron. The signal travels through the synapse (we talk of the synapse firing) into the dendrite, where it helps determine whether the next neuron fires or not.

So, boiling this down to the absolute fundamentals (without worrying about the chemical processes that help the signal travel across the synaptic gap, or about the myriad other processes in the cell) we have:

- a set of input signals coming into the cell from other cells;

- if the sum of the signals reaches a threshold, the cell fires its own signal;

- the output signal from a cell will become the input signal to several other cells.

So, in short: inputs, summation and, if above threshold, output. Sounds computer-like.
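To make that concrete, here is a minimal Python sketch of the three steps. It is an illustration only, not a model of a real neuron: the function names (`fires`, `output_signal`), the 0-or-1 signals and the threshold values are all made up for the example, and every input is assumed to count equally.

```python
from typing import Sequence


def fires(inputs: Sequence[float], threshold: float) -> bool:
    """Sum the incoming signals and report whether the sum reaches the threshold."""
    return sum(inputs) >= threshold


def output_signal(inputs: Sequence[float], threshold: float) -> int:
    """Return 1 if the cell fires and 0 if it does not.

    This value is what any downstream cell would receive as one of its inputs.
    """
    return 1 if fires(inputs, threshold) else 0


# Two hypothetical upstream cells, each with three incoming signals.
a = output_signal([1, 1, 0], threshold=2)  # sum is 2, reaches 2: fires
b = output_signal([1, 0, 0], threshold=2)  # sum is 1, below 2: stays quiet

# A downstream cell takes the outputs of a and b as its own inputs.
c = output_signal([a, b], threshold=1)     # sum is 1, reaches 1: fires

print(a, b, c)  # prints: 1 0 1
```

The point of the third cell is the last item in the list above: one cell's output is simply another cell's input, so the same tiny function can be chained into a network.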

In the human brain there are roughly 86 billion neurons, something like 16 to 20 billion of them in the cerebral cortex (the numbers depend on various factors, including age and gender). Each neuron will be connected through synapses to roughly 10,000 other neurons.

The brain is a giant, complicated network of dendritic connections. Unlike computers, it's massively parallel: computations are going on all over the brain. It boggles the mind how complex it is – indeed, how it works at all.

So let's draw back from the brink and look at how we might mimic this in computing.