Teaching an AI using a dog garners some unexpected results

Turns out you can teach an old dog new tricks

Artificial intelligence is one of the biggest buzzwords of 2018, and for good reason. AI is making our voice assistants smarter, our cars self-driving, and our photo apps better able to identify our pets.

But what if our pets could be the AI? And no, we’re not talking about the newly back-on-the-market Sony Aibo; we’re talking about a genuine neural network trained to behave like a dog.

That was the aim of a team from the University of Washington and the Allen Institute for AI in their (brilliantly titled) paper ‘Who let the dogs out? Modeling dog behavior from visual data’.

Okay, now sit. I said SIT

How do you train an AI using a dog? Good question. And the answer is just as adorable as you’re probably imagining.

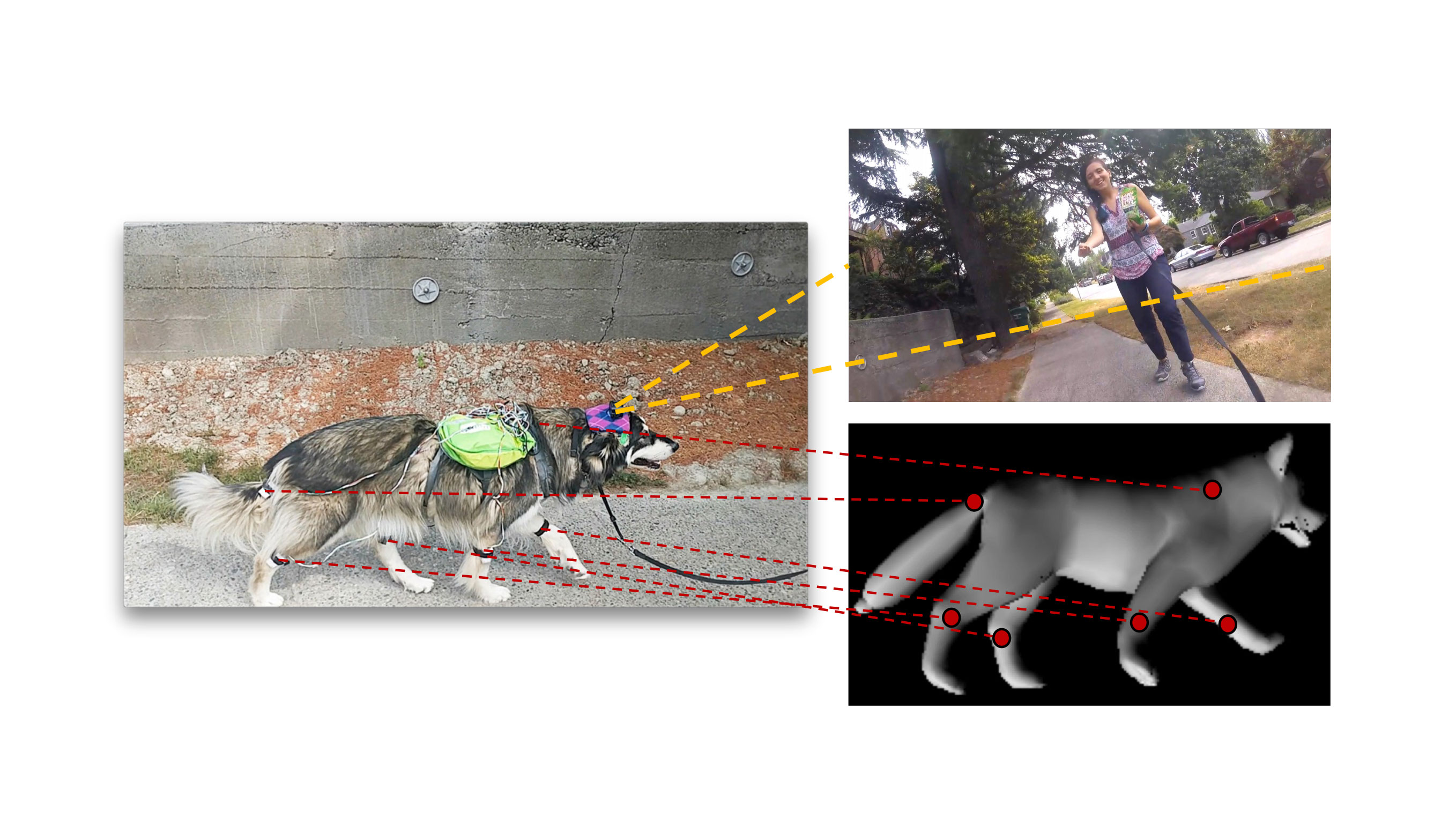

The team used motion tracking sensors (much like the ones used in Hollywood movies and games) and a GoPro camera, all strapped to a – presumably very good-natured – Malamute called Kelp.

In a setup reminiscent of the time Nikon strapped a camera and a heart rate monitor to a dog so that photos were taken every time the pup got excited (check it out, you won’t regret it), Kelp was taken through a series of scenarios, including going to the park, playing fetch, and going on walks.

After all the adorableness was over, the data was analysed using deep learning, an AI technique that searches vast quantities of information for meaningful patterns.

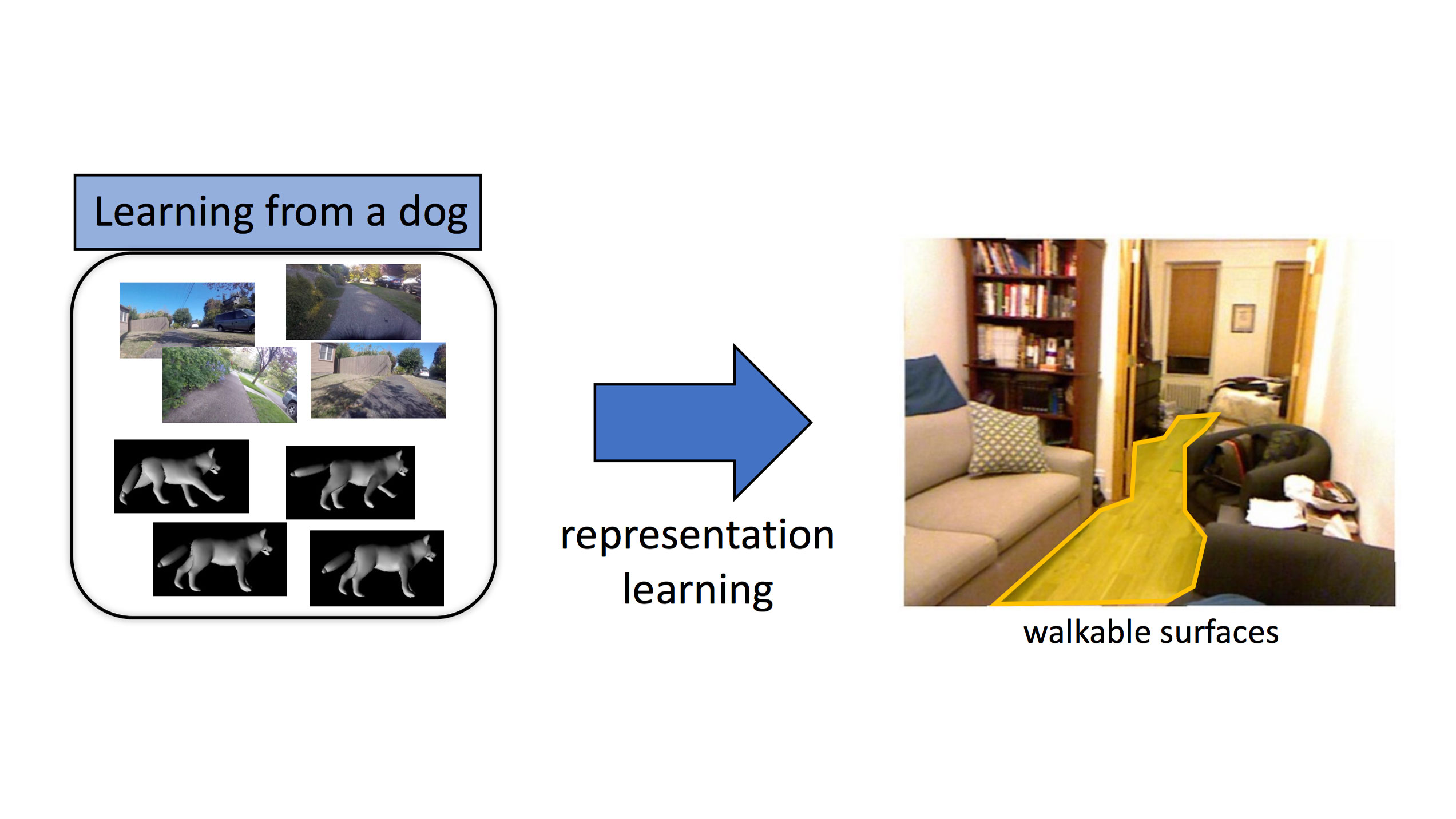

And this is where things get really interesting: one of the obvious patterns that the neural network was able to pick up on was what counts as a ‘walkable surface’. Robots usually struggle to decide which surfaces are appropriate to walk on, which leads to walking into walls, falling over, or slipping.

But dogs are very good at deciding where they can walk. So the AI trained on Kelp’s behaviour was able to correctly identify walkable surfaces in images, even though it hadn’t been specifically trained to do so.

The AI was also able to identify and even predict the correct responses to certain stimuli:

“For instance, if the dog sees her owner with a bag of treats, there is a high probability that the dog will sit and wait for a treat, or if the dog sees her owner throwing a ball, the dog will likely track the ball and run toward it.”

What’s significant about this is that there are numerous complex things happening in these interactions that the AI doesn’t have to be independently trained for. Whereas an AI usually struggles with verb pairing, one modelled on a living creature knows what ‘people’ are, what the ‘owner’ subset of people is, what ‘treats’ are, that treats are for eating, and that sitting gets you a treat.

Come here boAI!

Now, as much as it’s able to predict dog behaviour, that doesn’t mean that the AI has the consciousness of a dog. Speaking to The Verge, lead author of the paper Kiana Ehsani said, “Whether or not the dog will see a toy or an object it wants to chase, who knows.”

What about the practical application for this research? Beyond the intention stated in the paper to have a “better understanding of visual intelligence and of the other intelligent beings that inhabit our world,” Ehsani thinks that “this would definitely help us building a more efficient and better robot dog.”

Would we be less afraid of intelligent robots if they took the form of man’s best friend? Maybe. We certainly would be.

Andrew London is a writer at Velocity Partners. Prior to Velocity Partners, he was a staff writer at Future plc.