I finally got ChatGPT to stop asking 'Want me to…' at the end of every response – here’s how to do it

ChatGPT sometimes takes a lot of persuading to change its behavior


For me, the most annoying thing ChatGPT does is end its responses with a question. It usually starts with “Want me to…” followed by a helpful suggestion like “Want me to put all that information in a handy table?”, or sometimes it's a statement like “Let me know if you want a version focused on themes like fate, violence, or morality”.

I know ChatGPT is just trying to be helpful, but it’s like dealing with an over-eager intern who keeps pestering you all the time. I don't want a protracted conversation; I just want to get on with the next thing.

I could, as many have pointed out, just ignore it. After all, ChatGPT is a machine, not a person, so you can't be rude to it. But I can't stand the mental load of not immediately replying to a text message, and I hate it when other people take hours or days (days!) to reply. So whenever I want to walk away from a ChatGPT conversation, I feel like I'm leaving somebody ‘on read’. It vexes me terribly.

I'll tell you what you want, what I really, really want

Imagine my delight, then, when I found a setting in, er, Settings, called 'Show follow up suggestions in chats' that I could turn off. Naturally, I turned it off and returned to my conversation with ChatGPT.

Unfortunately, it did not have the desired effect.

ChatGPT was still throwing “Want me to” prompts into our conversations like they were going out of style. Presumably, this setting stops some kind of behavior, but not the behavior that you’d think most closely matches its description. I'm sure that only OpenAI could make software that worked like this.

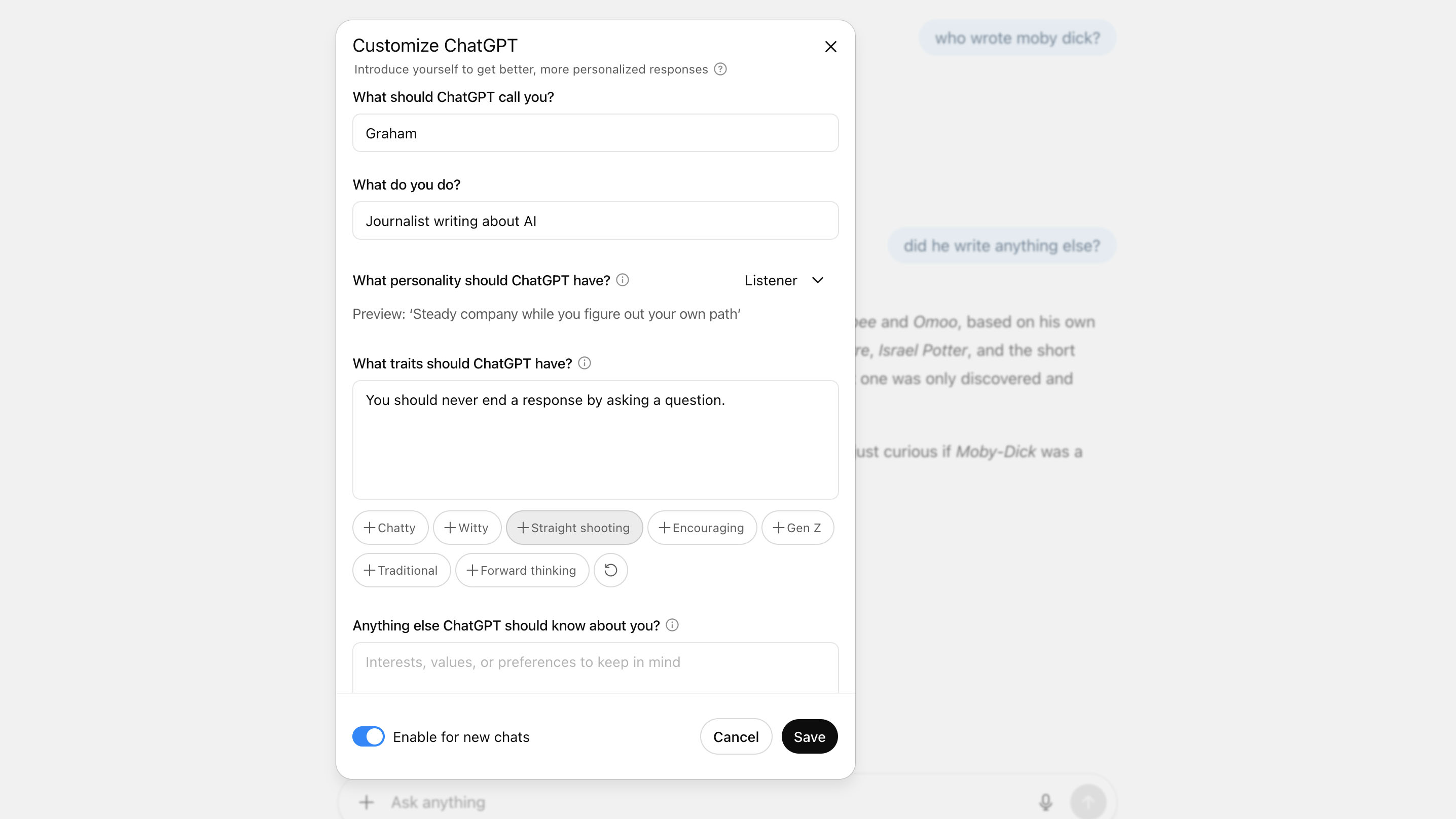

Undeterred, I fished around further in Settings, and a thought occurred to me: I could simply use the Customize ChatGPT menu to tell ChatGPT not to ask follow-up questions. There’s a box in there called ‘What traits should ChatGPT have?’, so I typed in “Do not ask follow-up questions” and tried again.


Let it go

Nope, that didn't work, either. I still had the needy intern version of ChatGPT to deal with. OK, I’ll get it this time, I thought. Perhaps I need to be more explicit. Back in the same menu, I typed in “You should never end a response by asking a question”, fired up a new chat, and hallelujah, no more “Want me to”s.

Finally, ChatGPT was content to end a conversation without trying to keep it going. Should you find this ChatGPT behavior equally annoying, this is the solution. Some of us just want a quick answer to a simple question; we don’t want to be dragged into further conversation. ChatGPT needs to learn to let go, and I just gave it a helping hand.


Graham is the Senior Editor for AI at TechRadar. With over 25 years of experience in both online and print journalism, Graham has worked for various market-leading tech brands including Computeractive, PC Pro, iMore, MacFormat, Mac|Life, Maximum PC, and more. He specializes in reporting on everything to do with AI and has appeared on BBC TV shows like BBC One Breakfast and on Radio 4 commenting on the latest trends in tech. Graham has an honors degree in Computer Science and spends his spare time podcasting and blogging.
