
Before we get into any of that, though, let's take a look at what exactly is sitting inside that lovely magnesium alloy chassis. The big thing, and I mean that entirely literally, is the GK110 GPU.

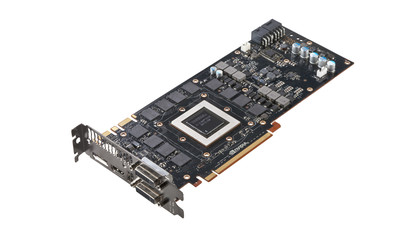

Sitting in the middle of the PCB is a vast chunk of silicon with over 7 billion transistors humming away inside. And despite being manufactured on the smallest GPU lithography available to Nvidia, those 28nm transistors still end up making the GK110 chip around 520mm squared in size.

Inside that silicon is the most complex and powerful graphics architecture around, with 2,688 single precision and 896 double precision CUDA cores.

Those are arrayed over 14 of the familiar Nvidia streaming multiprocessors (SMX) set in 5 graphics processing clusters (GPCs). The fully specced GK110 chip has 15 SMX units, but because yields produce more GPUs with 14 functional SMX units than with all 15, it was a product decision to ship with one shut down.

That doesn't mean you're getting less than the Tesla crowd though: the full-fat Tesla K20X is similarly running with 14 SMX units and 2,688 single precision cores.
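For anyone who wants to check where those core counts come from, here's a quick sketch of the arithmetic. The per-SMX figures (192 single precision cores and 64 double precision units) aren't stated above; they are assumed from Nvidia's published Kepler GK110 details, and the function name is purely illustrative.

```python
# Per-SMX figures are an assumption taken from Nvidia's published
# Kepler GK110 architecture details, not from this review.
SP_CORES_PER_SMX = 192   # single precision CUDA cores per SMX
DP_UNITS_PER_SMX = 64    # double precision units per SMX


def gk110_core_counts(active_smx):
    """Return total single and double precision cores for a given SMX count."""
    return {
        "single_precision": active_smx * SP_CORES_PER_SMX,
        "double_precision": active_smx * DP_UNITS_PER_SMX,
    }


titan = gk110_core_counts(14)      # GTX Titan and Tesla K20X: 14 SMX enabled
full_chip = gk110_core_counts(15)  # fully specced GK110: all 15 SMX enabled

print(titan)
print(full_chip)
```

Under those assumptions, 14 SMX units line up with the 2,688 and 896 figures quoted for the Titan, and the one disabled SMX accounts for the gap to the full chip.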

Coupled with the vast number of both single and double precision CUDA cores are 224 texture units and 48 ROPs. Those double precision cores aren't enabled by default though; you have to turn them on in the Nvidia control panel, and doing so limits the base clock.

It's recommended to keep them switched off when you're gaming and to throw the switch only when you're going to be doing some serious compute-based grunt work. And with the Titan the amateur 3D world is going to be rather excited.


In terms of clocks, the GTX Titan is set relatively conservatively compared to the GHz GPUs we've become used to since the GTX 680 launched. Still, with a boost clock of 876MHz it's no slouch and with the advances made in the new iteration of GPU Boost there is overclocking aplenty available to this card as a matter of course.

Those are all relatively impressive numbers and then you come to the frame buffer. With 6GB of GDDR5, running at just over 6GHz, this thing is going to be capable of some incredibly high-resolution gaming. And that's arguably where this kind of 'ultra-enthusiast' card is targeted.

With the new HD standard of 4K now very much on people's radar it makes sense for the very top graphics cards of today to be ready for this new image resolution revolution.

So, you've got a massive GPU, running at relatively high clock speeds with a huge amount of video memory attached to it. You're going to need to plumb it directly into Sizewell B, aren't you?

To be honest, this is probably the biggest surprise of this GTX Titan package: it's actually got a TDP of just 250W. That's comparable with the TDP of the slower AMD Radeon HD 7970. This is all thanks to the impressively power-conscious Kepler architecture we've already seen in the GTX 680, and it means we were able to get a three-way SLI setup running comfortably on a 1,200W PSU without a care in the world.

Soft sells

As with the GTX 680, the Titan isn't just about the hardware sat on the PCB; the software is almost as important as the silicon itself. And just as GPU Boost was vital to the success of the GTX 680, so the new GPU Boost 2.0 is vital to the GTX Titan.

It has to be said, though, that the performance improvements don't sound that earth-shattering: Nvidia's Tom Petersen estimates it to offer between 3 and 7% higher frame rates compared with the original GPU Boost.

But it's the way it's getting there that's impressive. Where before the focus was on the power the GPU was using, it has now been switched around so the GPU clock is automatically boosted in line with the temperature the chip is running at.

This has meant that Nvidia can be a lot more cavalier about the voltage it pushes through the chip as it doesn't have to worry anymore about the perfect-storm combination of high voltage and high temperature.

In the original GPU Boost there was no over-voltaging. Nvidia imposed a hard limit on the amount of voltage you could push through to ensure this combination never occurred, much to the consternation of the overclocking community.

"The reason there is a reliability limit on voltage is because of one thing," says Tom. "When you have high temperatures and high voltage you blow up transistors.

"We are regulating based on temperature so now we can have both high voltage and high temperatures being a possibility, but they never happen at the same time. Now we can raise the reliability voltage without changing anything else. That single change is a lot of what helps us get that performance boost."

So now there is a higher standard voltage, as well as the option to opt in and allow over-voltaging within whichever overclocking software you choose to use on your expensive ol' card. You can specify a temperature you want to hit as a limit for your card, and the software will automatically adjust clock speed and voltage in an effort to boost the GPU as much as it can within the boundaries of that temperature limit.
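To make the idea of temperature-targeted boosting a bit more concrete, here's a toy sketch of that kind of control loop. It is emphatically not Nvidia's algorithm: the function names, the 13MHz step, the 837MHz floor, the 1,200MHz ceiling, the 80°C default target and the linear "temperature rises with clock" model are all assumptions made up for illustration, and voltage is left out entirely.

```python
def next_clock_mhz(current_mhz, temp_c, target_c,
                   floor_mhz=837, ceiling_mhz=1200, step_mhz=13):
    """One control step: clock up while under the temperature target,
    back off when over it. All default values are illustrative assumptions."""
    if temp_c < target_c:
        return min(current_mhz + step_mhz, ceiling_mhz)
    if temp_c > target_c:
        return max(current_mhz - step_mhz, floor_mhz)
    return current_mhz


def settle(ambient_c, degrees_per_mhz, target_c=80, steps=200):
    """Run the loop against a toy thermal model in which the chip's
    temperature is ambient plus a fixed number of degrees per MHz.
    A lower degrees_per_mhz stands in for a better cooler."""
    clock = 837
    for _ in range(steps):
        temp = ambient_c + degrees_per_mhz * clock
        clock = next_clock_mhz(clock, temp, target_c)
    return clock


stock_cooler = settle(ambient_c=30, degrees_per_mhz=0.05)
better_cooler = settle(ambient_c=30, degrees_per_mhz=0.04)
print(stock_cooler, better_cooler)
```

In this toy model the only thing that changes between the two runs is how effectively heat is removed, and the better-cooled run settles at a higher clock for the same temperature target.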

With the reference cooling design that enabled me to get up to around 1,175MHz, but with a quality water-cooling block that could be raised significantly.

"The other thing that's really cool about doing temperature-based control," says Tom, "is that chassis, water-blocks and everything else that makes a GPU run colder is actually now going to make it run faster."

Now if that isn't a reason to upgrade your dusty, creaking chassis I don't know what is. Sadly, though, GPU Boost 2.0 is limited to this GPU and those that are to come after; there are no plans to retroactively fit it to older cards.

Aspirations

But what does that all mean for performance? Well, it means base performance is actually quite tough to accurately measure. Each GTX Titan will perform slightly differently and that can be based on not just different GPUs but also on varying environmental conditions.

That said, my test card's clock was happily topping the 1GHz mark without my even touching the overclocking controls. Sadly that also meant that when I did start getting happy with the EVGA Precision OC tools, and stuck a 120MHz offset onto the base clock, I wasn't really seeing much in the way of actual frame rate improvements.

I can though comfortably confirm that the GTX Titan is the fastest single-GPU graphics card on the market. But it's kind of not actually fast enough. For the most part it's around a third faster than the preceding GTX 680, and in general around 15-20% faster than the top-end HD 7970 we've tested.

There is a lot of variation compared with the Radeon silicon though, with some game engines running 45% faster on the Titan and one in particular, DiRT Showdown, actually running a couple of FPS slower than on the HD 7970.