I tried iOS 16's Personalized Spatial Audio on my AirPods, and I don't get the fuss

But maybe my ears are too boring

One of the quieter announcements of iOS 16 back in June was that Apple is introducing a "Personalized Spatial Audio" feature as part of the software update. This feature literally scans your ears using the iPhone's TrueDepth camera, the 3D sensor behind Face ID, so that it can tailor the audio to the shape of your ears.

Personalized audio is a big thing among the best wireless headphones right now, with products such as the NuraTrue putting it front and center, while products like the Sony WH-1000XM5 offer an optional adjustment for your ears if you dig into their settings.

The idea with personalized audio is that if headphones or earbuds tune their sound to the shape of your ears, they can remove some of the imperfections in detail caused by reflections and other interference. In theory, that means more precise, realistic, and dynamic performance.

Now that the public beta of iOS 16 is available to download and anyone can try it, I installed it on an iPhone 13, grabbed my AirPods Pro and AirPods Max, and got ready to have my ears scanned.

(Please note: don't download and install the iOS 16 beta on the iPhone you use every day. Because I'm a professional tech nerd, I have a second phone that I don't rely on, so I installed it there. Beta software can cause data loss or even stop a device from working entirely. Wait for the final version if you can! I do this so you don't have to.)

You can already find lots of stories online from people who tried the feature and found that it hugely improved Spatial Audio sound, and in some cases even turned them from a Spatial non-believer into a fan.

But as the headline may have given away, I didn't have that experience. As I sat there switching my headphones between my iOS 15 device and my iOS 16 device trying to hear the difference, I had to conclude that this wasn't going to be a game-changer for me.

So here's what it's like to go through the process, and why I think it didn't wow me.

The ear scanning process

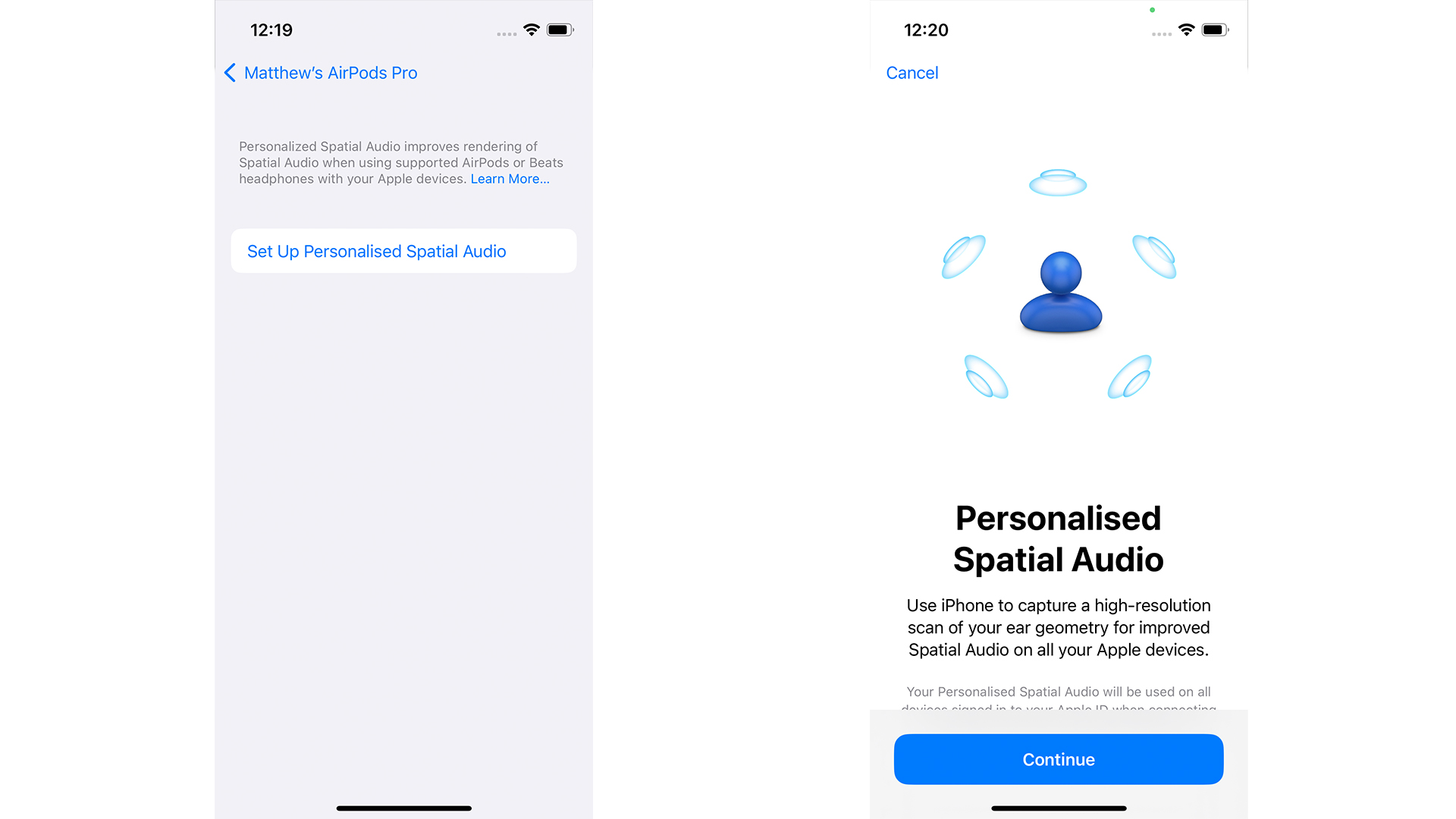

Apple's method is, in typical Apple fashion, technically advanced while trying not to feel like it. Some companies handle personalized sound by playing you tones during a focused test and asking which frequencies you can hear; Apple does it by making a 3D map of your ears, which takes only a few seconds, as long as you do it right.

You'll find the option to run Personalized Spatial Audio by going to Settings > Bluetooth, selecting your AirPods (while they're connected), and then choosing "Personalize Spatial Audio". It looks like Apple will also pop up an invitation to try it when you connect your AirPods, but I was already heading straight there, so I skipped that.

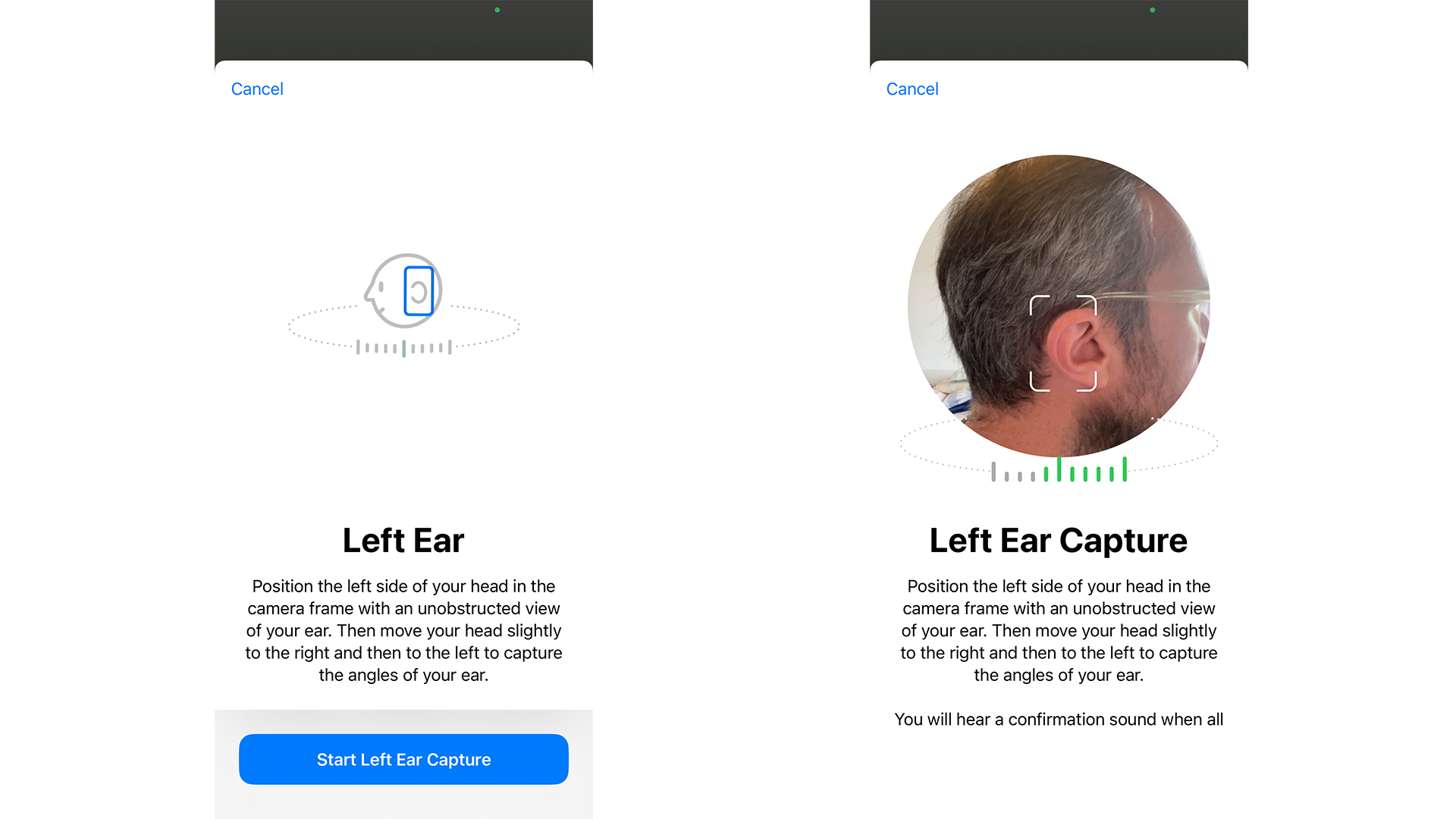

First, you'll be asked to scan your face, in the same way as you do when you set up Face ID. Then, you'll be asked to hold the phone up with the front camera facing one ear and then the other.

Unsurprisingly, this is the hard part, but Apple's process is fairly slick. The idea is to hold the phone facing your ear, then turn your head slightly left and right… but obviously, you can't see the screen. So when you hold the phone up, it makes a sound once your ear is in a good spot. Turn slightly one way and it'll make another sound when it's happy with the rotation; turn the other way and it'll make one more. Then you're done with that ear.

The problem is successfully getting it to make all those noises. You can't see where you're going wrong. If you're not getting it, it's hard to tell why. I went through the process quite a few times, just to see if I could crack a reliable technique… and I did.

The trick was to hold the phone about six inches from my ear; that really seemed to be the sweet spot. It's tempting to hold it further away, but at six inches each scan took barely a few seconds, because I could hardly put my ear in the wrong spot unless I was deliberately trying to.

And then you're done! That personalized ear profile is on your phone (and will be shared with other iOS 16 devices, so it's the same everywhere), and you can get listening.

What effect did it have?

I tried both AirPods Pro and AirPods Max with my new personal profile, and the result was the same in both: I heard very little difference.

I jumped straight into some of my favorite Dolby Atmos test movies and compared playing on an iOS 15 iPhone with playing from the iOS 16 device, and for the most part, the difference felt either non-existent or so minimal that I couldn't really tell you if one was 'better' than the other.

The most prominent difference was in The Matrix, in the bullet-time sequence. In Atmos, that's a real playground of sounds swooping around the listener, just like the camera swoops around Neo. I felt like the whoosh of the bullets was stronger when they were to the left and right of me with the Personalized profile active, but I was really trying to listen for differences. I wouldn't say it was a meaningful improvement.

Switching to Dolby Atmos music, I really couldn't hear anything noteworthy comparing playback from iOS 15 to iOS 16. I tried with and without head tracking, I tried all kinds of genres. It didn't happen.

However, this experience clearly isn't universal. Plenty of people online feel differently about it. Here are a few testimonials I found after the quickest of searches:

"Guys I didn’t know this feature will be this game-changing. I don’t know what apple did but APM sounds miles better than it did before and it was already first class. Spatial audio with head tracking improved a lot too, it separates the instruments and vocals and they are moving differently. Really recommend giving it a try," says a user in one Reddit thread.

"It honestly improved spatial stereo a lot. I’ve listened to a few different genres to test it out and it’s nice," agrees a different user in a totally different thread.

"Still is a little quieter than standard stereo, but definitely sounds way less distant, also a bit more dynamic," adds another user who started one of the many threads praising it.

Analysis: are my ears too boring?

Why would there be such a difference of opinion between myself and others online about the effectiveness of the Personalized Spatial Audio adjustments?

Well, it's possible that I just have a very average set of ears. At least, average in how Apple calculates things.

Think of it this way: until Apple added personalization of the Spatial Audio algorithms, they still had to be designed for some kind of ears, right? What most companies do is create a model of the "average" ear (with lots of wriggle room around it), and design headphones that will sound good for that. The idea is it'll be as pleasing as possible for the largest number of people.

It's possible that I happen to have ears that are actually right in the sweet spot of how Apple had Spatial Audio work by default, meaning that I'm hearing minimal improvement from the personalized audio because it hasn't actually had to change very much.

The problem, of course, is that I can't know this for certain. In some ways, it'd be better if Apple pulled back the curtain here a little more than it does normally – perhaps after the Personalized Spatial Audio tests, it could give you a little stylized graph to suggest how it's going to change the sound.

It's also possible that this whole system will change more before iOS 16 is finalized and released alongside the iPhone 14 (and probably AirPods Pro 2) later in the year. Maybe I'll pick up more differences in the final version, or maybe my ears are just too vanilla.

Matt is TechRadar's Managing Editor for Entertainment, meaning he's in charge of persuading our team of writers and reviewers to watch the latest TV shows and movies on gorgeous TVs and listen to fantastic speakers and headphones. It's a tough task, as you can imagine. Matt has over a decade of experience in tech publishing, and previously ran the TV & audio coverage for our colleagues at T3.com, and before that he edited T3 magazine. During his career, he's also contributed to places as varied as Creative Bloq, PC Gamer, PetsRadar, MacLife, and Edge. TV and movie nerdism is his speciality, and he goes to the cinema three times a week. He's always happy to explain the virtues of Dolby Vision over a drink, but he might need to use props, like he's explaining the offside rule.