Intel predicts death of conventional PC graphics

IDF Spring 2008: Larrabee chip will enable new age of "visual computing"

PC graphics technology as we know it is dying, according to Intel. And its replacement will be a new age of visual computing enabled by Intel's Larrabee chip.

At least, that's what Intel bigwig Pat Gelsinger told attendees during his opening keynote at the Shanghai instalment of the Intel Developer Forum today.

It's a bold claim. But what does it actually mean? Gelsinger's specific beef is with the raster-based 3D graphics hardware that has dominated the PC industry for over a decade. That includes video chips from the two heavyweights of PC graphics, Nvidia and ATI, as well as Intel's own integrated graphics solutions.

Rethinking rasterisation

Raster chips focus on converting vector data into bitmaps that can be displayed on computer screens. It's been an extremely successful strategy to date, delivering ever more sophisticated graphics as the hardware has matured.
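For readers who want to see what "converting vector data into bitmaps" means in practice, here is a minimal sketch in Python. It is purely illustrative and not how any Nvidia, ATI or Intel chip is actually implemented: it assumes a single 2D triangle, tests each pixel centre against the triangle's three edges, and marks covered pixels with a 1. The function names `edge` and `rasterise_triangle` are our own inventions for this example.

```python
def edge(ax, ay, bx, by, px, py):
    """Signed area term: its sign says which side of the edge A->B the point P is on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterise_triangle(v0, v1, v2, width, height):
    """Return a bitmap (list of rows) with 1 wherever a pixel centre lies in the triangle."""
    bitmap = [[0] * width for _ in range(height)]

    # Twice the signed area of the triangle; zero means the vertices are collinear.
    area = edge(*v0, *v1, *v2)
    if area == 0:
        return bitmap

    for y in range(height):
        for x in range(width):
            # Sample at the pixel centre rather than its corner.
            px, py = x + 0.5, y + 0.5
            w0 = edge(*v1, *v2, px, py)
            w1 = edge(*v2, *v0, px, py)
            w2 = edge(*v0, *v1, px, py)

            # The point is inside when all three edge tests agree with the
            # triangle's winding direction (points exactly on an edge count as inside).
            if area > 0:
                inside = w0 >= 0 and w1 >= 0 and w2 >= 0
            else:
                inside = w0 <= 0 and w1 <= 0 and w2 <= 0

            if inside:
                bitmap[y][x] = 1
    return bitmap


if __name__ == "__main__":
    # Vector data in: three vertices. Bitmap out: an 8x8 grid of 0s and 1s.
    for row in rasterise_triangle((0, 0), (8, 0), (0, 8), 8, 8):
        print("".join("#" if cell else "." for cell in row))
```

Real graphics hardware runs this kind of coverage test across huge numbers of pixels in parallel using dedicated circuitry, which is part of why the approach has been so fast, and arguably why it is less flexible than general-purpose code.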

But Intel reckons raster chips are too rigid and lack scalability. The solution is a new "programmable" approach to graphics that majors on ray-tracing and model-based computing. And that solution will take the form of the near-mythical Larrabee co-processor.

Intel has been slowly trickling out info on Larrabee for over a year. But this is the first time it has unambiguously claimed it will usurp the prevailing graphics technology in PCs.

Visual computing

We already know that Larrabee is based on an array of approximately 16 small x86 execution cores, and is largely instruction-set compatible with Intel's existing PC processors.

To that, Intel says, Larrabee adds a new high-performance vector engine. The result, it claims, is teraflops of processing power on a single chip, photorealistic graphics and a more immersive user interface. All of which adds up to what Intel is calling "visual computing".

Bigging up the Larrabee chip, Gelsinger said that in his 30-year career he had never been involved in a chip development programme that had generated so much enthusiasm from software developers.

What about software support?

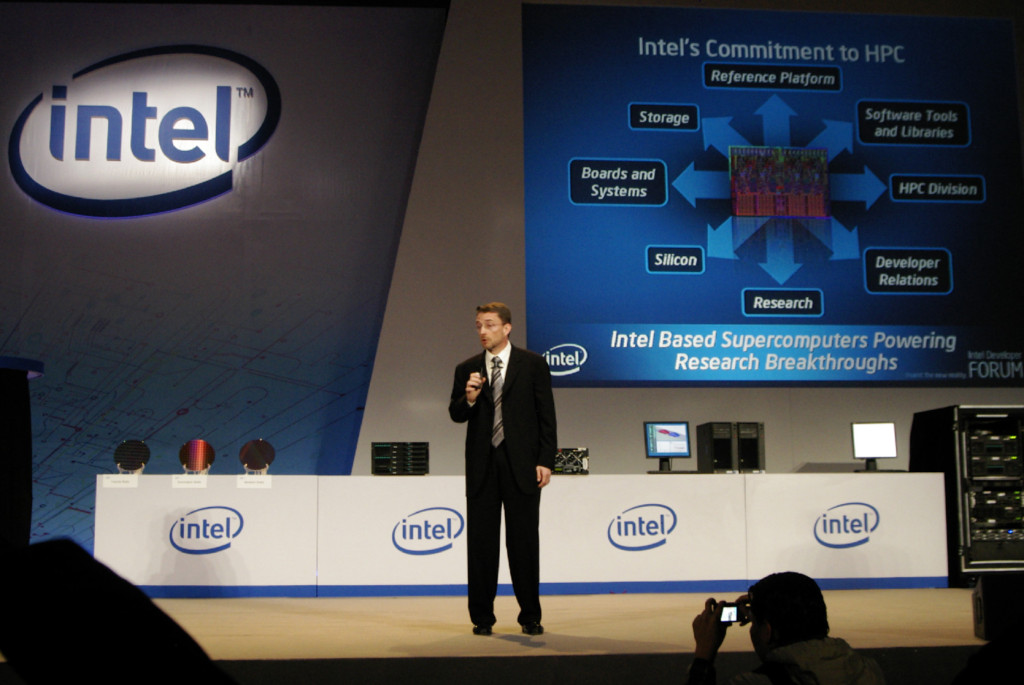

He also emphasised that Intel is working hard to support developers by creating various tools and tuning libraries. Nevertheless, one of the big questions surrounding Larrabee remains software support.

For starters, Intel hardly has a track record for great video drivers. Moreover, raster chips from the likes of ATI and Nvidia have a decade of development and fine tuning behind them. That's a major challenge for Larrabee, whatever its theoretical advantages.

What's more, in a recent interview with TechRadar, Nvidia's chief scientist David Kirk was dismissive of the threat posed by the Larrabee chip. Ray tracing, Kirk says, will merely add to the box of tricks Nvidia has at its disposal.

Technology and cars. Increasingly the twain shall meet. Which is handy, because Jeremy is addicted to both. Long-time tech journalist, former editor of iCar magazine and incumbent car guru for T3 magazine, Jeremy reckons in-car technology is about to go thermonuclear. No, not exploding cars. That would be silly. And dangerous. But rather an explosive period of unprecedented innovation. Enjoy the ride.