Need for speed: a history of overclocking

We might have multi-core, but overclocking is bigger than ever

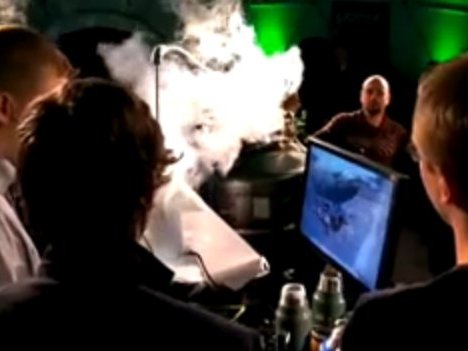

As every pukka PC enthusiast knows, AMD recently set a clockspeed record for a quad-core PC processor. Courtesy of some crazy Scandinavian kids and a bucket load of liquid nitrogen, AMD's newly minted 45nm Phenom II processor hit a heady 6.5GHz.

Of course, such records are thoroughly irrelevant to real-world PC performance. Constantly pouring liquid nitrogen into your PC by hand is hardly the stuff of practical computing. And yet we feel sure beastly old Intel won't be happy allowing AMD to maintain even this symbolic superiority.

You won't, therefore, be surprised to hear that rumours of a new frequency-friendly stepping of the mighty Core i7 processor are currently circulating. If true, it could see AMD's 6.5GHz quad-core record blown away sooner rather than later.

Potentially even more worrying for AMD, Intel has also announced that plans to crush the transistors in its PC processors down to a ridiculously tiny 32nm in breadth have been brought forward. You'll be able to buy 32nm processors from Intel before the end of the year. Expect the clocks to keep on climbing.

With all that in mind, now seems like an opportune moment to reflect upon the history of CPU overclocking and work out how we arrived at today's multi-core, multi-GHz monsters and what the future holds.

The original IBM PC

In fact, overclocking is nearly as old as the PC itself. Intriguingly, it was actually PC manufacturers rather than enthusiasts that got the ball rolling. Back in 1981, ever-conservative IBM capped early versions of its eponymous PC at a mere 4.77MHz in the interests of stability.

Soon enough, however, clones of the IBM PC shipped with 8088-compatible processors running at a racy 10MHz. Thus the battle for the highest clockspeed was started.

Of course, at this early stage, end-user overclocking wasn't an awfully practical option. Running higher clocks required a change of the quartz control crystal used to set clock rates, otherwise known as the oscillator module.

Even then, the rest of the platform was hard-locked to the CPU frequency. In other words, any change to the CPU frequency was directly reflected in the operating frequency of the system bus, memory and peripherals. What's more, many applications - most notably games - lacked built-in timers and ran out of control or simply crashed on an overclocked platform.
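To see why software without timers misbehaved, consider a rough sketch in Python. It is a toy model, not code from any real game: the function names, the cycles-per-frame cost and the movement speeds are all assumptions chosen for illustration. The untimed loop moves a sprite a fixed distance every frame, so a faster clock means more frames and a faster game. The timed loop scales movement by elapsed time instead.

```python
# Toy model: how far a game sprite travels in a given amount of real time.
# All constants below are illustrative assumptions, not measured values.

CYCLES_PER_FRAME = 1_000_000  # hypothetical CPU cost of one game-loop pass


def distance_untimed(clock_mhz: float, seconds: float,
                     pixels_per_frame: float = 1.0) -> float:
    """No timer: the sprite moves a fixed step on every loop pass,
    so distance grows in direct proportion to the CPU clock."""
    frames = clock_mhz * 1_000_000 * seconds / CYCLES_PER_FRAME
    return frames * pixels_per_frame


def distance_timed(clock_mhz: float, seconds: float,
                   pixels_per_second: float = 5.0) -> float:
    """With a timer: movement is scaled by elapsed real time,
    so the CPU clock no longer affects game speed."""
    return pixels_per_second * seconds


if __name__ == "__main__":
    for clock in (4.77, 10.0):  # the two clock speeds mentioned in the text
        print(f"{clock} MHz: untimed={distance_untimed(clock, 1.0):.2f} px, "
              f"timed={distance_timed(clock, 1.0):.2f} px")
```

In this model the untimed game runs a little over twice as fast on the 10MHz clone as on the 4.77MHz original, while the timed version behaves identically on both.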

The era of easy overclocking begins

The next big step was the arrival of the Intel 486 processor and the introduction of much more user-friendly overclocking methods. The 486 itself launched in 1989, but it was the later DX2 version, which arrived in 1992, that debuted the CPU multiplier. This allowed CPUs to run at multiples of the bus frequency, enabling overclocking without adjusting the bus frequency.
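The relationship is simple enough to sketch in a few lines of Python. The function name and the example figures here are illustrative assumptions rather than the settings of any particular motherboard. The sketch shows the two routes to a higher core clock: raising the bus speed, which drags the rest of the platform along with it, or raising the multiplier, which leaves the bus alone.

```python
def core_clock_mhz(bus_mhz: float, multiplier: float) -> float:
    """Core frequency is the bus frequency times the CPU multiplier."""
    return bus_mhz * multiplier


if __name__ == "__main__":
    # Illustrative figures: a clock-doubled chip on a 33MHz bus.
    stock = core_clock_mhz(33.0, 2)

    # Route 1: raise the bus speed (memory and peripherals speed up too).
    via_bus = core_clock_mhz(40.0, 2)

    # Route 2: raise the multiplier (bus frequency stays at 33MHz).
    via_multiplier = core_clock_mhz(33.0, 3)

    print(f"stock:          {stock:.0f} MHz")
    print(f"faster bus:     {via_bus:.0f} MHz")
    print(f"higher multi:   {via_multiplier:.0f} MHz")
```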

While the adjustment of bus speeds usually entailed little more than flicking a jumper or DIP switch, changing the multiplier setting often required a little chip modding with a lead pencil or at worst perhaps some soldering work. One way or another, impressive overclocks of certain clones of Intel's 486 chip from the likes of Cyrix and AMD were possible. For example, AMD's 5x86 of 1995, a chip based on 350nm silicon, could be clocked up from 133MHz to 150MHz. Sexy stuff at the time.

What's in a wafer?

It was during this early golden age of enthusiast overclocking in the mid 1990s that the influence of silicon production technology on individual chip frequencies came to the fore. CPUs are essentially etched out of round wafers of silicon substrate. Despite the finely honed processes used in manufacturing, properties vary from wafer to wafer.

Put simply, chips cut from some wafers can attain higher stable clockspeeds than others. In fact, the same goes for the position of an individual CPU die within a wafer. The nearer the middle, the more likely it is to hit high frequencies.

At the same time, the progression to smaller and smaller individual transistor sizes not only allows more features to be crammed into a single processor die, it also tends to make each transistor switch faster and therefore enable higher clockspeeds.

Then there's the small matter of steppings, minor revisions made to CPU architectures to fix faults and resolve speedpath issues. The latter is just a fancy way of describing fine tuning aimed at allowing higher clockspeeds. There's a lot to be aware of when choosing a chip with overclocking in mind.

Intel gets in on the overclocking game

Intel, of course, has long been the master of making smaller transistors. In late 1995 it introduced the Pentium Pro. This was a much more sophisticated CPU than any before it thanks to out-of-order instruction execution. But it also boasted tiny (for the era) 350nm transistors. 200MHz versions of the Pentium Pro were known to hit 300MHz, an extremely healthy 50 per cent overclock.

However, the Pentium Pro was a painfully expensive chip. In 1998, Intel released the original Celeron, a budget-orientated processor with a cut-down feature set including no L2 cache. Stock clocked at 266MHz, retail examples of the chip were sometimes capable of as much as 400MHz. Big clocks on a small budget were possible for the first time.
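For anyone who wants to compare the overclocks quoted in this article on a like-for-like basis, the arithmetic can be sketched in Python as follows. The helper name is our own invention, and the stock and overclocked figures are simply the ones given in the text above.

```python
def overclock_percent(stock_mhz: float, overclocked_mhz: float) -> float:
    """Percentage gain of an overclocked frequency over the stock frequency."""
    return (overclocked_mhz - stock_mhz) / stock_mhz * 100


if __name__ == "__main__":
    # (stock MHz, overclocked MHz) pairs as quoted in the article.
    examples = {
        "AMD 5x86": (133, 150),
        "Pentium Pro": (200, 300),
        "Celeron": (266, 400),
    }
    for chip, (stock, overclocked) in examples.items():
        gain = overclock_percent(stock, overclocked)
        print(f"{chip}: {stock}MHz -> {overclocked}MHz ({gain:.1f} per cent)")
```

On these figures the budget Celeron matches the 50 per cent gain of the far pricier Pentium Pro, which is precisely why it caused such a stir.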

Technology and cars. Increasingly the twain shall meet. Which is handy, because Jeremy is addicted to both. Long-time tech journalist, former editor of iCar magazine and incumbent car guru for T3 magazine, Jeremy reckons in-car technology is about to go thermonuclear. No, not exploding cars. That would be silly. And dangerous. But rather an explosive period of unprecedented innovation. Enjoy the ride.