Where it all went wrong for Intel's Larrabee

And why this isn't the end of Intel's GPGPU ambition

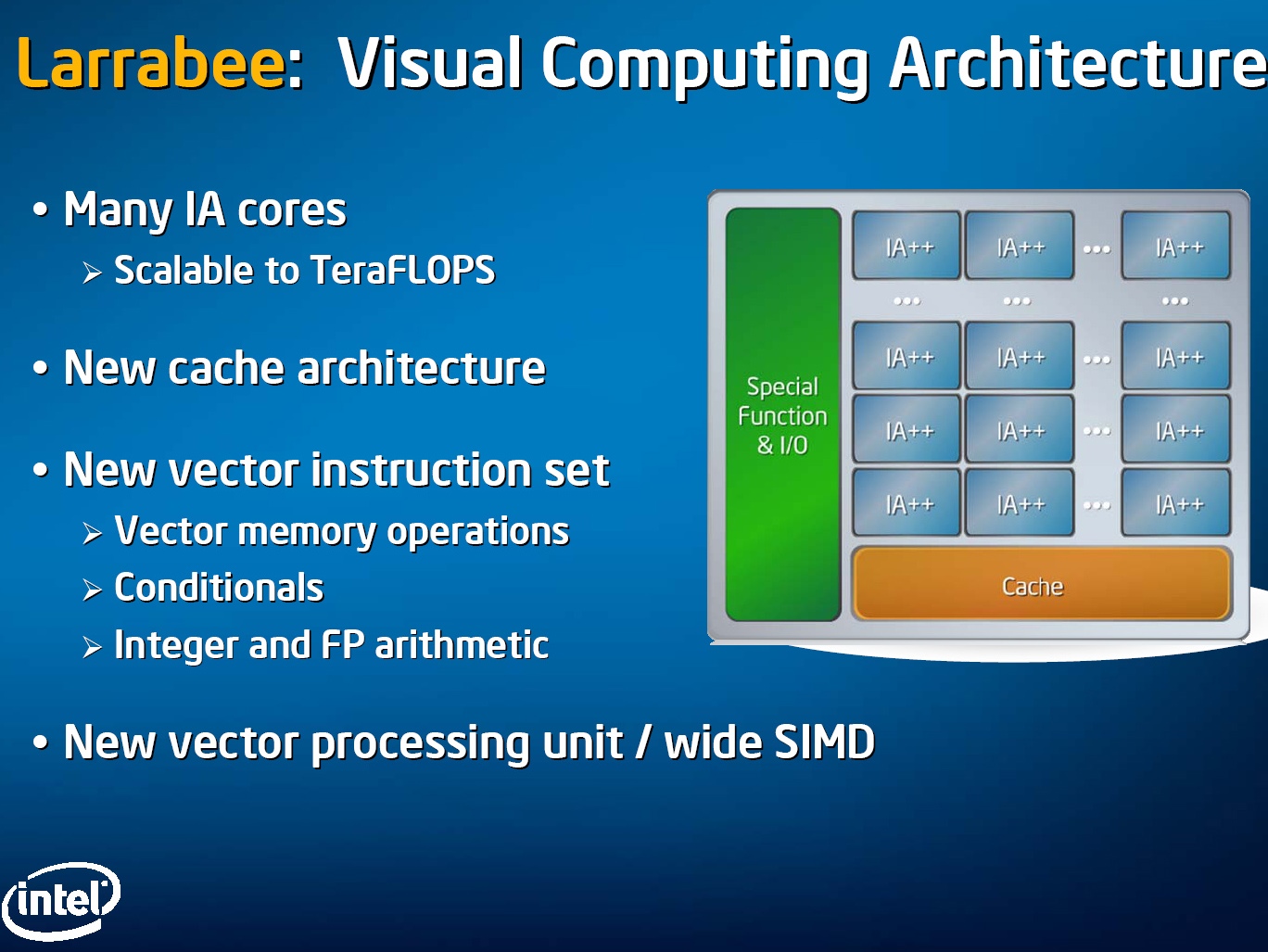

So it's goodbye to Intel's Larrabee, we hardly knew ye. Larrabee, of course, is the much-heralded many-core graphics-cum-coprocessor architecture with which Intel had been planning to spank Nvidia and AMD. And it's been cancelled.

Strictly speaking, the Larrabee project in its entirety hasn't been canned. Rather, the first Larrabee chip has fallen so far behind schedule that plans to market it as a retail product have been humanely destroyed.

As for the whys and wherefores, there are several. The main problem is the sheer competitiveness of the graphics market. Any kind of delay is deadly in PC graphics, and all the more so when you are trying to launch a new architecture powered by a revolutionary programmable graphics pipeline.

The damage is done

Indeed, that very programmability made the delay in readying the chip itself so very damaging. For Larrabee, you see, the hardware is really just one half of the equation. The other is the software layer that effectively translates the DirectX graphics pipeline into something that Larrabee's mini CPU cores can cope with.

Final development of that layer cannot take place until the hardware has been perfected. So unlike AMD and Nvidia, which need only polish their graphics drivers prior to a hardware launch, Intel needs perhaps six months after the hardware is finished to finalise the Larrabee software layer.

The net result is that Larrabee Mark I has missed its launch window. To release late would mean pitting Larrabee against even more powerful competition than was originally anticipated.

Lame duck Larrabee

Frankly, that's a fight Intel has no hope of winning. In fact, it's likely the first Larrabee chip would have been something of a laggard even if Intel had got it out on time. A late Larrabee would certainly be a lame duck.

Of course, the writing has been on the wall ever since the feeble Larrabee demo Intel sheepishly gave at IDF (Intel Developer Forum) this September. But credit is due to Intel for coming clean at what was probably the earliest possible opportunity.

All of which just leaves the question of the broader implications of the Larrabee Mark I cancellation. Officially, Intel remains committed to delivering a "many cores" graphics architecture. Indeed, we are told that an announcement along those lines will be made later in 2010.

Moreover, the first Larrabee chip will not be entirely defenestrated. Instead it will be made available to trusted partners as a development platform. The better for coders to get to grips with its tricky programmable architecture, in other words.

That suggests Intel will eventually release a discrete graphics product based on the Larrabee philosophy of many mini x86 CPU cores. However, TechRadar understands that a fairly fundamental architectural overhaul is required. There's a good chance Larrabee Mark II won't appear until 2012.

Reboot to the rescue

But a 2012 reboot isn't the simple solution it seems. Inherent to the Larrabee project is the assumption that CPU and GPU architectures are converging. CPUs are adding cores, becoming more parallelised and GPU-like. GPUs, already highly parallelised, are becoming more programmable. Ten years from now, there will be little substantive difference.

Instead, the two architectures will sire a single progeny, known as the Accelerated Processing Unit (APU). Look deep inside an APU and you'll find elements that are recognisably derived from today's CPUs and GPUs. The whole, however, will be neither. Or both. Or whatever. You get the idea.

Anyway, while Intel has the CPU half of the APU conundrum covered, the Larrabee project is supposed to get the graphics sorted. For that reason, it cannot - it must not - fail in the long run. But the cancellation of the first chip may yet put paid to Larrabee's prospects as a standalone product. After all, by the time competitive Larrabee boards are on sale, time will be running out on the very concept of a graphics card.

-------------------------------------------------------------------------------------------------------

Technology and cars. Increasingly the twain shall meet. Which is handy, because Jeremy is addicted to both. Long-time tech journalist, former editor of iCar magazine and incumbent car guru for T3 magazine, Jeremy reckons in-car technology is about to go thermonuclear. No, not exploding cars. That would be silly. And dangerous. But rather an explosive period of unprecedented innovation. Enjoy the ride.