The 2024 Paris Olympics are gone, but AI surveillance may be here to stay

The experiment will be in place at least until March 2025

With the 2024 Paris Olympics coming to an end, sports fans worldwide will look away from France until the next big competition. For privacy advocates, however, the match is anything but over.

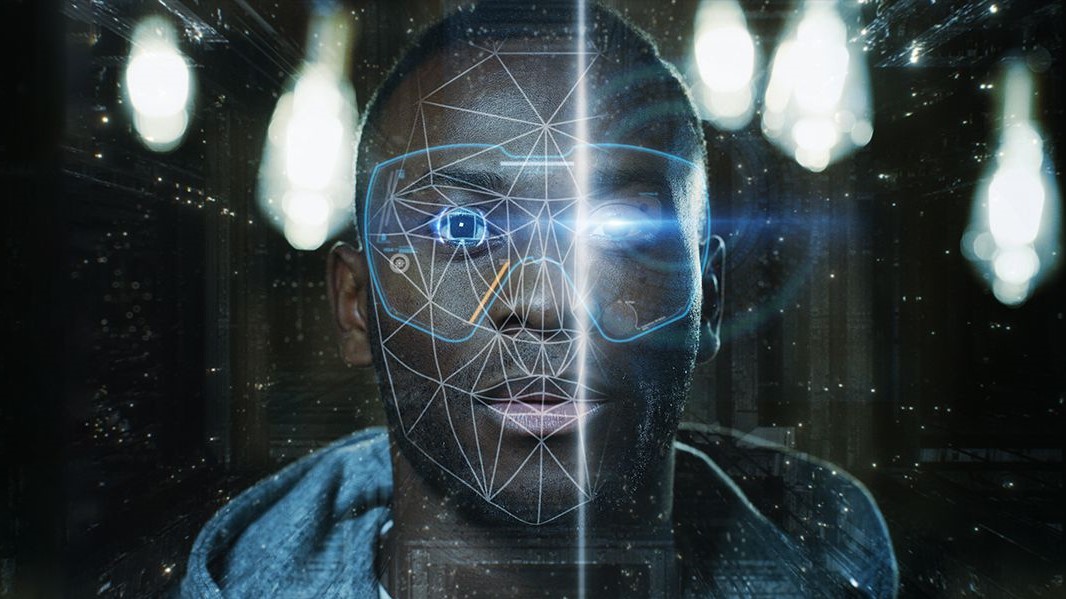

France beefed up its surveillance system on the occasion of the Games, rolling out AI-powered CCTV cameras across the capital. The experiment is set to continue until March 2025. Needless to say, this algorithmic video surveillance (VSA) has attracted strong criticism - and now experts fear such a system is here to stay.

"The ongoing experiments are the first step towards the legalization of these technologies," Félix Tréguer, a member of the digital rights advocacy group La Quadrature du Net, told me. "Each time, those large events are used as a way to legitimize controversial surveillance practices that then stay in the long run. In that respect, France is no exception."

Legalize AI surveillance

A legal framework for carrying out these tests already exists. In May 2023, France enacted a new ad-hoc law to regulate the use of AI-powered cameras on an "experimental basis" during some large events until March 31, 2025. This made France the first country in the European Union to do so, effectively introducing VSA into the law.

As Le Monde reported, 200 cameras in the Paris and Ile-de-France area have been equipped with AI for the Olympics. The new AI system was also deployed across 46 subway stations, on a maximum of 300 cameras.

It's worth noting that VSA is different from the even more controversial live facial recognition technology. Here, the algorithmic scan of the videos is used to flag potentially dangerous situations. These include changes in crowd size and movement, abandoned objects, the presence or use of weapons, a body on the ground, smoke, or flames.

"The operational alert remains in the sole hands of the operators who decide whether or not to report the detected situation," reads the Ministry of the Interior's website.

A privacy nightmare?

French VSA might not be as invasive as live facial recognition on paper. Yet, privacy experts believe there are still high risks in introducing AI into the national surveillance system.

Back in March 2023, 38 civil society organizations (including La Quadrature du Net and Amnesty International France) signed an open letter warning that the proposed surveillance measures violate the principles of necessity and proportionality under international human rights law while posing "unacceptable risks to fundamental rights," such as the right to privacy, the freedom of assembly and association, and the right to non-discrimination.

As Katia Roux, a specialist in technology and human rights at Amnesty International France, told Euractiv, "Algorithmic video surveillance can analyze biometric data (body data, behavioral data, gait), which is protected personal data."

On August 1, 2024, the European Union's landmark AI Act entered into force, providing a legal framework for categorizing and scrutinizing AI. Among other measures, the new law bans the untargeted scraping of facial images from the internet or CCTV footage to create facial recognition databases.

Tréguer from La Quadrature du Net also sees the move as "a constant and indiscriminate monitoring of the population," which could make room for abuses. Homeless people, for instance, might be wrongly targeted by smart cameras, potentially becoming collateral damage of the new tech.

Crucially, Tréguer also pointed to systemic illegality in how VSA has been implemented so far, the most significant example being that the national police started the experiment years before the legal framework was introduced.

He said: "This is why we say that the 'limited experiments' provided by the 2023 law are a scam, a diversion. They are meant to divert attention from rampant illegality and stage a process whereby a so-called delimited sandbox approach to deploying them is conducive to a long-term, permanent legal framework."

David Libeau, a French software developer and privacy advocate, is also worried about the privacy implications of the law, which allows the training of the AI algorithm with collected CCTV footage.

"I believe that it is not really legal from the GDPR perspective, but also from the new AI act," he told me, adding that he had already filed a complaint with his local data protection authority (DPA) and was still waiting for a response at the time of writing.

What's next?

Both Tréguer and Libeau strongly believe that, even if the ongoing VSA experiments fail, that failure will be used to make the case for an extension of the experimental framework.

"In the end, we believe that VSA will be dominant both ways," Libeau told me.

Online, you can use tools like the best VPN apps or the Tor browser to boost your anonymity when surfing the web. On the streets, however, speaking out against the risks of AI-powered CCTV cameras seems the best bet to try and secure your privacy.

It's exactly with this in mind that La Quadrature du Net launched a campaign to raise awareness about the risks posed by this technology and help citizens mobilize against it.

"France might be leading the way, but across Europe, other countries are following suit," said Tréguer. "They are waiting for the right political and legal conditions for full-fledged deployments. And France creating a permanent legal framework would surely help them speed up that process.

"The only way to turn the table is to make grassroots opposition to these surveillance technologies visible and heard, loud and clear."

Chiara is a multimedia journalist committed to covering stories to help promote the rights and denounce the abuses of the digital side of life – wherever cybersecurity, markets, and politics tangle up. She believes an open, uncensored, and private internet is a basic human need and wants to use her knowledge of VPNs to help readers take back control. She writes news, interviews, and analysis on data privacy, online censorship, digital rights, tech policies, and security software, with a special focus on VPNs, for TechRadar and TechRadar Pro. Got a story, tip-off, or something tech-interesting to say? Reach out to chiara.castro@futurenet.com