A brief history of film

It wasn't always superheroes and sci-fi, you know

1. The history of film

Back in the late 1800s, entertainment on a Friday night was noticeably lower tech than today. But that wasn't so much an obstacle as it was an opportunity, which saw the birth of the cinematic art form.

Over the 120 years or so since those first attempts at creating moving pictures using consecutive still images, films have come a long way, both in terms of storytelling and in terms of technical achievement.

So as TechRadar kicks off its inaugural Movie Week, celebrating the majesty of films, it's appropriate to dive into the history books to see just how we got to the point where we can travel to galaxies far, far away or ride motorbikes with velociraptors.

Image: Flickr

2. Technology vs narrative

The truth is, finding the exact birth of what we consider to be cinema is a rather challenging task. Back in the late 19th century, inventors across the world were all racing to be the first to create not only the hardware to record and display a film, but also the films themselves.

While there is evidence that much of the technology to create moving pictures had been invented as far back as 1888, for many people it's a pair of French brothers, Auguste and Louis Lumiere, who get the credit for the birth of cinema in 1895.

The Lumieres, like a pair of 19th-century Steve Jobses, picked up an expired patent for a device called the Cinématographe and improved it into a single machine that acted as a camera, film processing unit and projector all in one.

In the same year, the brothers created their first film, La Sortie de l'usine Lumière à Lyon (or Workers leaving the Lumiere factory in Lyon, if your French isn't up to snuff), a 46-second documentary about – you guessed it – workers leaving the Lumiere factory in Lyon.

Image: Wikimedia Commons

3. Edit this

But really, film is about so much more than just a static camera pointed at something happening. Arguably, what makes modern filmmaking possible is the editing of multiple shots into a single film in order to create a narrative.

And the first examples of that started cropping up back in 1900. In the short film Grandma's Reading Glass by George Albert Smith, a series of close-ups of items is intercut with footage of a young boy looking through his grandmother's reading glass.

This is widely cited as the first real example of a film using different shots to help tell a story, something that we now take for granted in modern cinema.

4. The first feature film

For the first decade or so of movie making, creators generally focussed on short films that ran on a single reel.

The first example of the standard feature film that we've come to know and love today can be traced back to 1906, when a young Australian man named Charles Tait created The Story of the Kelly Gang, a film about notorious Australian bushranger Ned Kelly.

The film lasted well over an hour, and was both a critical and commercial success for the time. It's also one of the movies about Ned Kelly that don't star Mick Jagger or Yahoo Serious, so that's got to give it some bonus points, doesn't it?

5. The talkies

While early cinema was all about black and white moving images on the screen, the technology to pair those images with synchronised audio came much later. Early films were generally accompanied by a live musical performance, with occasional commentary from a showman.

But that all changed with 1927's The Jazz Singer. While earlier films had experimented with recorded soundtracks, The Jazz Singer is widely regarded as the first feature-length film with synchronised dialogue sequences, despite being mostly silent.

But what the film did do is change cinema forever. By 1929, almost every Hollywood film released was considered a "talkie", replacing the live musical backing with a synchronised audio track of dialogue, sound effects and music.

6. Colored in

It's a little surprising to learn that color came to films in the first few years of the 20th century. These earliest color films were colored by hand, which meant that the majority of prints were still in black and white. In 1903, the French film La Vie et Passion du Jesus Christ used a process to add some color to its frames, though the result still had a largely monochrome appearance.

In 1912, a UK documentary titled With Our King and Queen Through India became one of the earliest examples of a film that captured natural colour on camera, rather than relying on colorization techniques.

But it wasn't until the 1930s that color films really took off, when Technicolor released what it called Process 4, which combined a negative for each primary colour and a matrix for better contrast.

The first film to use this colorful process was a Walt Disney animated short called Flowers and Trees, in 1932.

In 1934, The Cat and the Fiddle featured the first live-action sequence using the Technicolor Process 4 technique.

The next big milestone was Becky Sharp in 1935, the first movie to use Process 4 for the entire feature, and color steadily gained ground in Hollywood from there.

And since then, color has been all the rage, minus some artistic black-and-white films (like Kevin Smith's Clerks), of course.

7. Fantasound

The man behind Mickey Mouse did so much more than just simple animation. Walt Disney is also credited as one of the pioneers of modern-day surround sound.

Around 1940, when he was working on Fantasia, Walt wanted to somehow get the sound of a bumblebee flying around the audience for a planned "Flight of the Bumblebee" segment, which ultimately didn't make the final cut.

Disney's engineers teamed up with RCA, who took to the challenge like bees to honey, and together they created what became known as "Fantasound".

But while Fantasound was one of the first examples of surround sound, it was also prohibitively expensive, costing $US85,000 to install. As such, only two theatres in the US had it installed, which is probably why you've never really heard of Fantasound before.

Of course, surround sound has come a long way since then, which we'll get to shortly...

8. Cinemascope

By the time the 1950s came around, movie studios were starting to turn to technology to try to shore up falling ticket sales caused by the arrival of television.

One of the best known of these technologies was Cinemascope, developed by 20th Century Fox, which made its debut with 1953's release of The Robe.

Essentially a refinement of a 1926 idea, Cinemascope used anamorphic lenses to create a much wider (and consequently larger) image. Cinemascope films had an aspect ratio of up to 2.66:1, compared with the 1.37:1 standard of the time.
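The arithmetic behind the anamorphic trick is simple enough to sketch. Assuming a 2x horizontal squeeze (the figure usually quoted for Cinemascope lenses) applied to a roughly 1.33:1 image area on the film, the projector lens stretches the picture back out to about twice its width relative to its height. The function name below is purely illustrative:

```python
def unsqueezed_ratio(frame_ratio: float, squeeze: float) -> float:
    """Aspect ratio on screen once the projector lens stretches the image.

    frame_ratio: width/height of the image area on the film itself.
    squeeze: horizontal compression applied by the camera's anamorphic lens.
    """
    return frame_ratio * squeeze

# Assumed figures: a ~1.33:1 image on the film and a 2x anamorphic squeeze.
print(round(unsqueezed_ratio(1.33, 2.0), 2))  # 2.66
# The standard 1.37:1 frame of the time used no squeeze at all.
print(round(unsqueezed_ratio(1.37, 1.0), 2))  # 1.37
```

In other words, for the same height of picture the on-screen image ends up roughly twice as wide, without needing wider film.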

While Cinemascope was largely made redundant by newer technologies, the widescreen aspect ratios it popularised are still roughly the standard we see in cinemas today.

9. Cinerama

At the same time as Cinemascope was starting to take hold in Hollywood, another technology was offering the widescreen format in a different way.

Instead of relying on anamorphic lenses, Cinerama required cinemas to feature three synchronized 35 mm projectors, projected onto a deeply curved screen.

The end result was a picture with an aspect ratio of about 2.65:1, but one that had some obvious challenges, especially where the three projected images overlapped.

By the 1960s, the rising cost of filming with three cameras simultaneously led to the technology being tweaked to record through a single widescreen Panavision camera lens, with the footage still projected onto Cinerama's deeply curved screen.

Cinerama also brought with it one of the first instances of magnetic multitrack surround sound. Seven tracks of audio (five front, two surround) were synced with the footage, with a sound engineer directing the surround channels of audio as necessary during playback.

Today, there are still a limited number of Cinerama theatres scattered around the world, offering the full experience, if you're wondering what all the rage was back in the 1950s.

Image: Wikimedia Commons

10. VistaVision

Hey! If everyone else was going to experiment with widescreen cinema technologies, then there was no way Paramount Pictures was going to be left behind.

VistaVision was Paramount's answer to Cinemascope and Cinerama. Instead of using multiple cameras or anamorphic lenses, VistaVision ran 35mm film horizontally through the camera gate to shoot on a larger area.

The obvious benefit of this approach was that it didn't require cinemas to buy all-new equipment. And to hedge against the competing technologies, VistaVision films were shot so that they could be displayed at a variety of aspect ratios.

VistaVision launched with White Christmas in 1954 and was used in a number of Alfred Hitchcock films over the 1950s, but it was ultimately made obsolete by the arrival of improved film stock and the rise of cheaper anamorphic systems.

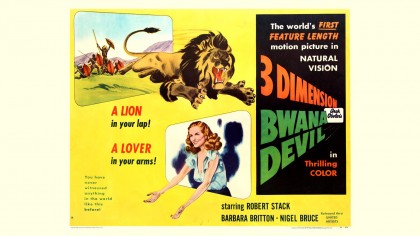

11. 3D

If you think the rise (and fall) of 3D cinema started with Avatar, then you're mistaken. 3D actually goes back to the very beginnings of cinema history: a patent filed in the late 1890s described screening two films side by side and viewing them in 3D through a stereoscope. It wasn't very practical, and so it failed to catch on.

But that didn't stop people trying. All through the early decades of cinema, 3D was attempted using many of the technologies we still see today. In 1922, a film called The Power of Love was shown using anaglyph glasses (the red and blue ones).

But it was in the 1950s that 3D had its first real wave of success. Led by the release of Bwana Devil in 1952, the first color stereoscopic 3D film, and with releases across most of the major film studios, 3D took cinema by storm.

For a couple of years, anyway. While 3D films continued to be produced throughout the 50s and 60s, competing technologies like Cinemascope, coupled with the rise of television and the expense of running two projectors simultaneously for 3D, meant the format never really took hold.

Of course, more recently the technology has seen a resurgence, largely thanks to James Cameron's Avatar. Opinions on it remain pretty divided, but 3D has become a regular drawcard for recent blockbuster releases.

12. IMAX

In an attempt to show that bigger is better, back in 1970 a Canadian company showcased the very first IMAX film, Tiger Child, at Expo 70 in Osaka. Because IMAX films are shot with special cameras that use a much larger film format, they offer significantly higher resolution than their standard counterparts.

Dedicated IMAX cinemas began launching in 1971, and the increased resolution means viewers can typically sit closer to the screen. Typical IMAX theatres have screens 22 metres wide and 16 metres high, although they can be larger: in Sydney, Australia, the world's largest IMAX screen measures 35.7 metres wide and 29.7 metres high. It's pretty awesome.

While many of the films shown on an IMAX screen are either documentaries or upscaled versions of 35mm films, there has been a growing tendency for filmmakers to shoot parts of their Hollywood blockbusters using IMAX cameras.

Image: Wikimedia Commons

13. Dolby sound

While it's natural to associate the history of film with the visual spectacle, it's important not to forget the role of sound.

And while we've already seen that surround sound made its way into cinemas as far back as the 1940s, it was during the 1970s that a company called Dolby Labs began having a very significant impact on cinema sound.

From the release of A Clockwork Orange – which used Dolby noise reduction on all pre-mixes and masters – Dolby has fundamentally changed the way we hear our movies.

In 1975, Dolby introduced Dolby Stereo. It was later followed by Dolby Surround (which itself evolved into Dolby Pro Logic), which took the technology into the home.

With the release of 1992's Batman Returns, Dolby Digital introduced cinemas to compressed digital surround sound. Built on the AC-3 codec, the same format later made its way into home setups.

While there are other film audio technologies out there, Dolby has arguably led the way and become an international standard for surround sound, both in the cinema and at home.

14. DTS

Four years after Dolby started work on Dolby Digital, another company came along to try and revolutionise cinema sound.

Initially supported by blockbuster director Steven Spielberg, DTS made its cinema debut in 1993 with the release of Jurassic Park, roughly 12 months after Dolby Digital's launch.

Jurassic Park also marked the format's debut in a home cinema environment, with the film's laserdisc release offering DTS audio.

Nowadays, there's an abundance of DTS codecs available, for both cinema and home theatre releases.

15. THX

Oh, George Lucas, we can't stay mad at you. Sure, you absolutely ruined our childhood memories with your Star Wars prequels and your Crystal Skulls, but we can't forget that your legacy extends beyond mere Star Wars and Indiana Jones credits.

You were also instrumental in the creation of the THX certification for audio. While THX is often mistaken for an alternative audio codec to the likes of Dolby Digital, it's really a quality assurance certification. With it, viewers could rest assured that the sound they were experiencing was what the film's sound engineers intended them to hear.

So while the fundamental credit for THX actually goes to Tomlinson Holman, the fact Lucas introduced the standard to accompany the release of Return of the Jedi means that we can be a little less angry at him for Jar Jar Binks.

16. CGI

While people were playing around with computer graphics on screens as far back as the 1960s and 70s, with examples like Westworld showing a graphical representation of the real world, things really started taking off with Star Trek II: The Wrath of Khan.

In the film, the Genesis Effect sequence is entirely computer generated, widely regarded as a first for cinema.

Again, we can partially thank George Lucas for this trend, as the effects were created by his company, Industrial Light and Magic. From there, CGI spread rapidly through cinema, with hundreds of developments across hundreds of films.

Notable examples include Toy Story as the first fully computer-animated feature, Terminator 2 for the T-1000's morphing effects and The Matrix with its bullet time sequences.

Oh, and the Star Wars prequels for their extensive use of CG supporting characters and backgrounds, Avatar for its motion-captured virtual characters and The Lord of the Rings trilogy for its use of AI software to drive digital characters.

CGI has completely changed filmmaking, and it continues to get better.

17. Digital cinematography

We take it for granted with our iPhones and digital cameras these days, but the truth is that recording digital video is a relatively recent phenomenon. In fact, while Sony tested the waters in the 1980s and 90s, it again fell to perpetual pioneer George Lucas to take the technology mainstream.

With (groan) The Phantom Menace, Lucas may have ruined Star Wars, but he also managed to revolutionise filmmaking by including footage shot on digital cameras. The film also saw the arrival of digital projectors in theatres around the world.

By late 2013, Paramount had moved entirely to digital distribution of its films, dropping 35mm prints from its lineup.

That said, film isn't going away: even Star Wars Episode VII director JJ Abrams professes his love for shooting on film, while Quentin Tarantino has confirmed that he is shooting his latest film, The Hateful Eight, in 70mm specifically to avoid digital projection. But despite these holdouts, the move to digital continues to gather pace.

18. High Frame rate

While film has matured a lot over the past 100 years or so, it's interesting that the frame rate has stayed at a fairly constant 24 frames per second for most of that time.

Early cinema experimented with a range of frame rates, but ever since 24 frames per second was adopted as the standard around the arrival of sound in the late 1920s, it has largely been left alone.

That is, right up until an excitable filmmaker named Peter Jackson decided to film his return to Middle Earth – 2012's The Hobbit: An Unexpected Journey – at the high frame rate of 48 frames per second.
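Doubling the frame rate is easy to put into numbers: each frame spends half as long on screen, and twice as many frames are needed for the same running time. A quick sketch, using a hypothetical two-hour running time purely for comparison:

```python
def frame_duration_ms(fps: int) -> float:
    """How long each frame is on screen, in milliseconds."""
    return 1000 / fps

def frames_needed(fps: int, minutes: int) -> int:
    """Total frames needed for a film of the given running time."""
    return fps * minutes * 60

for fps in (24, 48):
    # Hypothetical two-hour film, just to compare the two rates.
    print(f"{fps} fps: {frame_duration_ms(fps):.2f} ms per frame, "
          f"{frames_needed(fps, 120):,} frames for two hours")
# 24 fps: 41.67 ms per frame, 172,800 frames for two hours
# 48 fps: 20.83 ms per frame, 345,600 frames for two hours
```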

The technology wasn't universally loved: criticisms included the loss of the "cinematic" feel, and a sharper image that, to some viewers, felt more like a video game than a film.

But with James Cameron planning to shoot his Avatar sequels at 48 frames per second, high frame rates don't look to be going away.

19. Atmos

As we've already discovered, Dolby has a long history of revolutionising cinema audio. In 2012, the company did it again with the launch of Dolby Atmos.

Atmos supports up to 128 synchronised audio tracks, along with metadata describing where each sound should sit in the room, to create the most lifelike surround sound solution to date.

What makes Atmos truly magnificent is that it renders the sound based on the metadata in real time using whatever speaker system is in place, rather than having a sound engineer dictate which sounds are playing through which speaker.
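To get a feel for what "rendering from metadata" means, here's a toy sketch in Python. It is not Dolby's renderer, whose internals aren't covered here; it swaps in a simple inverse-distance panning rule, where each sound carries only a position and the playback system works out how loud it should be in each speaker it actually has. The `render_object` function, speaker names and coordinates are all hypothetical:

```python
import math

def render_object(position, speakers, rolloff=1.0):
    """Spread one sound object across whatever speakers a room has.

    position: hypothetical (x, y) location of the sound in the room.
    speakers: dict mapping a speaker name to its (x, y) location.
    Nearer speakers get more signal (higher rolloff = more concentrated),
    and gains are scaled so the total power (sum of squared gains) is 1.
    """
    weights = {}
    for name, (sx, sy) in speakers.items():
        # A tiny offset avoids dividing by zero if the sound sits on a speaker.
        distance = math.hypot(position[0] - sx, position[1] - sy) + 1e-6
        weights[name] = 1.0 / distance ** rolloff
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {name: w / norm for name, w in weights.items()}

# Two hypothetical rooms: the same position metadata works for both.
small_room = {
    "front_left": (-1.0, 1.0), "front_right": (1.0, 1.0),
    "rear_left": (-1.0, -1.0), "rear_right": (1.0, -1.0),
}
big_room = dict(small_room, centre=(0.0, 1.0), side_left=(-1.0, 0.0), side_right=(1.0, 0.0))

sound_position = (0.5, 0.8)  # somewhere front right of centre
for room in (small_room, big_room):
    gains = render_object(sound_position, room)
    print({name: round(g, 2) for name, g in gains.items()})
```

The point is that the mix itself never changes: the same position data produces a sensible spread whether the room has four speakers or seven.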

The technology, while originally designed for cinema use, has also made its way into home theatres, for those with compatible AV receivers, that is.

20. The future

With technology developing at an exponential rate, the future of film is sure to be an exciting one.

Already, with the arrival of VR devices like the Oculus Rift, filmmakers are beginning to dabble in 360-degree filmmaking.

Others are experimenting with interactive cinema, turning film into a choose-your-own-adventure type experience.

One thing's for certain: Movie making has come a long way in a relatively short period of time, and it's undoubtedly going to advance even faster as we roll deeper into the 21st Century.

- TechRadar's Movie Week is our celebration of the art of cinema, and the technology that makes it all possible.
