AI coaches from Fitbit and Apple could be great for health – and bad for privacy

We're about to give away more health data than ever before

Fitbit made headlines last week for debuting its AI health coach, designed to help you make better use of the mountain of data it collects. Dubbed Fitbit Labs, the service arrives in 2024 and is said to use AI to generate deeper insights in a more accessible way.

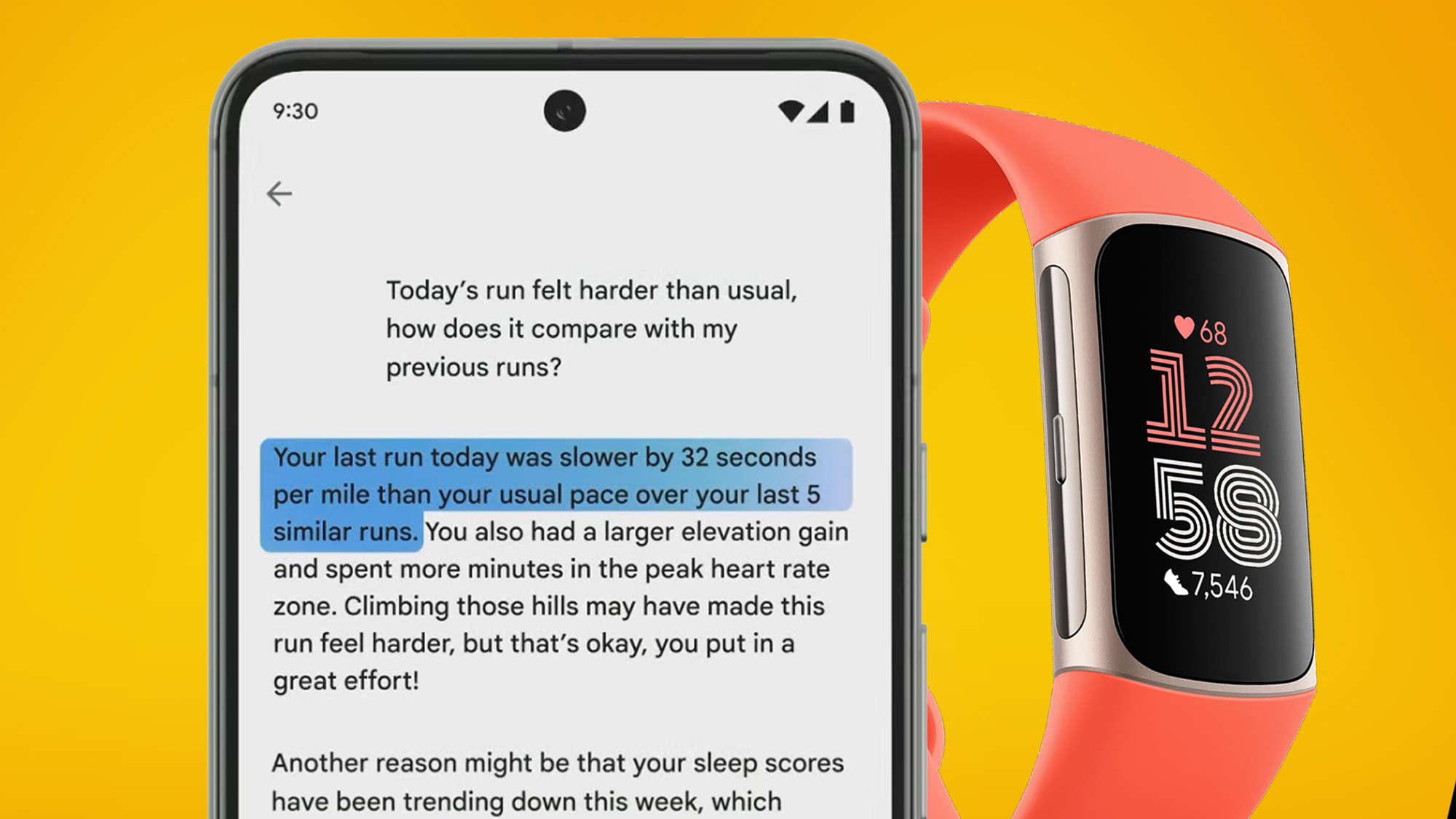

Part of this new feature will be a chatbot in the Fitbit app that you can ask questions like “What can you tell me about my last run?” The Fitbit Labs chatbot will then pull up information such as your pace, distance, and time. It can even offer more advanced analysis, such as reasons why that run wasn’t as good as a previous run on a similar route.

This is based on the glut of information that the best Fitbits, and many of the best smartwatches, already collect. Garmins, Pixel Watches, WHOOP, the best Apple Watches, Amazfit models, and more are able to collect information about stress levels, heart rate, body mass, sleep quality, exercise habits, blood oxygen levels… the list goes on. Your personal trainer, or your doctor, probably doesn’t know you half as well as your smartwatch does.

To access all this data, you have to dive into your watch’s companion app and find detailed graphs and breakdowns of your activities, usually summed up or aggregated into numerical scores. A chatbot that can bring all this data to the fore on demand, without you having to go looking for it, is a logical next step.

It’s a step Apple has also taken with the Apple Watch Series 9 and Apple Watch Ultra 2: later this year, you will be able to ask Siri about your health data. It’s not a full coaching service, but Apple says Siri will be able to help with “fitness-related queries”, thanks to on-device processing.

On-device processing means Siri doesn’t have to anonymize your health data and send it to Apple’s servers before coming up with a response. Instead, it can answer your question on the Apple Watch itself, without sending your data anywhere. Apple Quartz, a full AI fitness coaching program, is reportedly in development.

While Apple’s Siri improvement isn’t explicitly a coaching service, WHOOP Coach, the new service available on WHOOP 4.0 bands, is very much designed to be a personal trainer in your pocket. WHOOP Coach uses OpenAI’s GPT-4 (yes, as in ChatGPT) combined with WHOOP’s algorithms to generate answers to questions such as “How does my sleep compare with other people my age?” and “Can you build me a training program?”

Like Apple, WHOOP has tried to address privacy concerns, stating: “Your conversations with WHOOP Coach will not be accessed without your consent and your data is not stored with 3rd parties. When you use WHOOP Coach, your metrics are anonymized and go through our third-party Large Language Learning Model (“LLM”) partner.” Unlike with Apple’s approach, however, your metrics apparently do not stay on-device.

Other AI chatbots devoted to health and fitness are coming thick and fast. Amazfit is also getting in on the act, and another smart device manufacturer, which I can’t name, has privately asked me what customers will think of its own AI plans. On the surface, it all seems like a very useful tool: a way to use AI to navigate complex statistics people might find impenetrable, sifting through all of a user’s health metrics and serving up useful information related to their query.

However, the problems lie in how the chatbot generates answers to your queries, and in how large language models (LLMs) operate: by being trained on lots of data. At some point down the line, your health data is shared, fed into a central system, and becomes part of a statistical model. When Gary from Indiana asks how his heart rate compares to other over-30s, his data will be compared against data from other users. One of those data points could be mine.

You might not care that your heart rate information is being used in this way. After all, an anonymous heart rate is pretty inconsequential. But what about fertility information for women, or GPS data detailing your favorite running route that starts and ends at your house? Would you be just as happy to feed that sort of information into a central hub, for other users to access via a ChatGPT-style bot?

Services like WHOOP are keen to stress that any sharing of sensitive health data is anonymized, and all of this will be set out in amended user agreements whenever Google, Apple, Amazfit and others launch these services. However, the temptation will always be there for companies to tap this wealth of data for less altruistic advertising purposes.

All this data can be turned into money for big tech companies. It’s why the European Union, as a condition of approving Google’s acquisition of Fitbit, barred Google from using Fitbit’s cache of health data for advertising purposes for 10 years.

If sensitive data isn’t properly encrypted or anonymized, governments and police forces could potentially access it too. This is why tech companies were flooded with questions about the privacy of cycle tracking data after Roe v. Wade was overturned in the US. People were concerned that data from period tracking apps could be used by authorities in their home state to determine whether a user had had an illegal abortion.

Before agreeing to use these services, I think it’s important to know exactly how our data is being treated. What does ‘anonymized’ actually mean? Is our data stored, and if so, for how long? Is it sent to any third parties for processing? Is there a guarantee in place that it won’t be used for advertising purposes?

I’m sure this wave of AI health coaches will prove incredibly useful, making health data more accessible and convenient for fitness newcomers to browse. Learning more about your body is an important step on a fitness journey. However, before fully embracing this new trend, we should be careful not to sacrifice any more privacy for convenience.

Matt is TechRadar's expert on all things fitness, wellness and wearable tech.

A former staffer at Men's Health, he holds a Master's Degree in journalism from Cardiff and has written for brands like Runner's World, Women's Health, Men's Fitness, LiveScience and Fit&Well on everything fitness tech, exercise, nutrition and mental wellbeing.

Matt's a keen runner, ex-kickboxer, not averse to the odd yoga flow, and insists everyone should stretch every morning. When he’s not training or writing about health and fitness, he can be found reading doorstop-thick fantasy books with lots of fictional maps in them.