Silicon chips are reaching their limit. Here's the future

A tech step-change will be needed if our gadgets are to keep getting smaller and faster

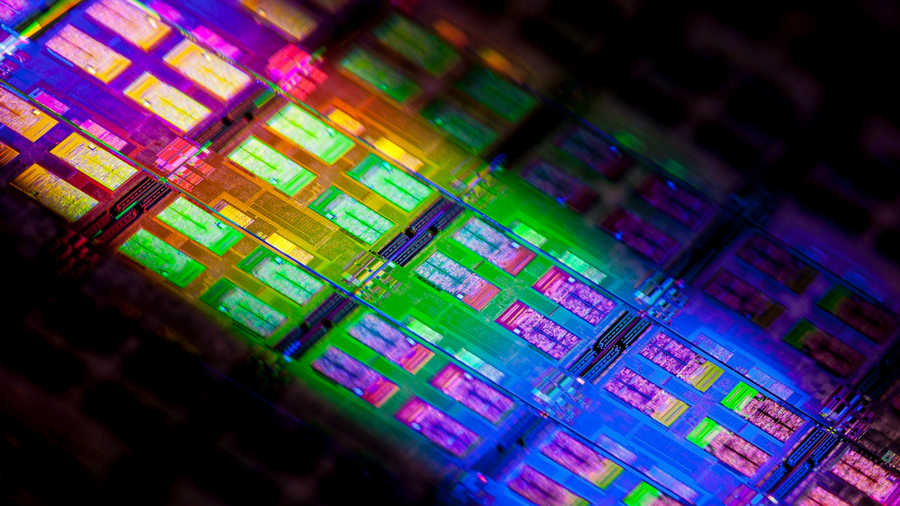

Main image credit: Intel

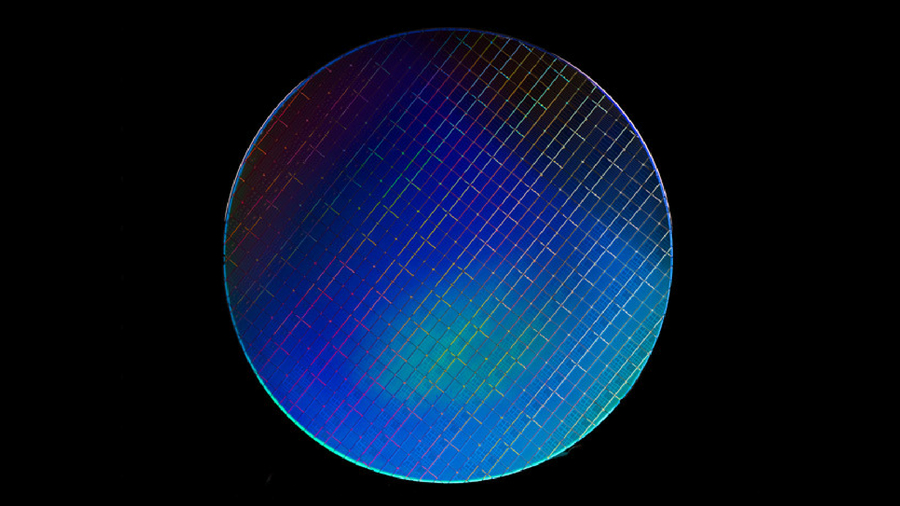

We live in a world powered by computer circuits. Modern life depends on semiconductor chips: integrated circuits, mostly silicon-based, carrying transistors that switch electronic signals on and off. Most use silicon because it's abundant and cheap, and because, as a semiconductor, it can be made either to block or to allow the flow of electricity.

Until recently, the microscopic transistors squeezed onto silicon chips had been shrinking so quickly that the number fitting on a chip doubled every couple of years. That's what produced the modern digital age, but that era is coming to a close. With the Internet of Things (IoT), AI, robotics, self-driving cars, and 5G and 6G phones all demanding ever more computing power, the future of tech is at stake. So what comes next?

What is Moore's Law?

That's the observation that computing power grows exponentially. Back in 1965, Gordon Moore, who went on to co-found Intel, noticed that the number of transistors on a computer chip was doubling every year, while the cost of each one was falling. The doubling period later stretched to between 18 months and two years, and it's now getting longer still. In truth, Moore's Law isn't a law, merely an observation by someone who worked for a chip-maker, but the lengthening timescales mean the computing-intensive applications of the future could be under threat.
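To see why a lengthening doubling period matters, here is a minimal Python sketch of the exponential growth Moore described. It assumes the transistor count grows as N(t) = N0 · 2^(t/T) for a fixed doubling period T; the function name `growth_factor` and the periods tried are illustrative choices, not figures from Moore or Intel.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplicative growth after `years` if counts double every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


# Compare a decade of growth under progressively slower doubling periods
for period in (1.0, 1.5, 2.0, 3.0):
    print(f"doubling every {period} years -> x{growth_factor(10, period):,.0f} in a decade")
```

Stretching the doubling period from one year to two cuts a decade's growth from roughly a thousandfold (2^10) to 32-fold (2^5), which is why a slowing cadence worries anyone building compute-hungry applications.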

Is Moore's Law dead?

No, but it's slowing so much that silicon needs help. “Silicon is reaching the limit of its performance in a growing number of applications that require increased speed, reduced latency and light detection,” says Stephen Doran, CEO of the UK’s Compound Semiconductor Applications Catapult.

However, he thinks it's premature to be talking about a successor to silicon. “That suggests silicon will be completely replaced, which is unlikely to happen any time soon, and may well never happen,” he adds.

David Harold, VP of Marketing Communications, Imagination Technologies, says: “There is still potential in a Moore’s Law-style performance escalation until at least 2025. Silicon will dominate the chip market until the 2040s.”

Computing's second era is coming

It's important to get the silicon transistor issue in perspective; it’s not ‘dead’ as a concept, but it is past its peak. “Moore’s Law specifically refers to the performance of integrated circuits made from semiconductors, and only captures the last 50-plus years of computation,” says Craig Hampel, Chief Scientist, Memory and Interface Division at Rambus.

“The longer trend of humanity’s need for computation reaches back to the abacus, mechanical calculators and vacuum tubes, and will likely extend well beyond semiconductors [like silicon] to include superconductors and quantum mechanics.”

The topping-out of silicon is a problem because computing devices of the future will need to be both more powerful and more agile. “Increasingly the problem of computing is that future systems will need to learn and adapt to new information,” says Harold, who adds that they will have to be 'brain-like'. “That, in combination with chip manufacturing technology transition, is going to create a revolutionary second era for computing.”

What is cold computing?

Some researchers are looking into new ways of getting higher-performance computers that use less power. “Cold operation of data centers or supercomputers can have significant performance, power and cost advantage,” says Hampel.

An example is Microsoft's Project Natick, in which a data center was sunk off the coast of Scotland's Orkney Islands to be cooled by the surrounding seawater, but it's only a small step. Taking the temperature down further reduces current leakage, which in turn allows the threshold voltage at which transistors switch to be lowered.

“It reduces some of the challenges to extending Moore’s Law,” says Hampel, who adds that a natural operating temperature for these types of systems is that of liquid nitrogen at 77K (-196C). “Nitrogen is abundant in the atmosphere, relatively inexpensive to capture in liquid form and an efficient cooling medium,” he adds. “We hope to get perhaps four to 10 additional years of scaling in memory performance and power.”
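The link between temperature, leakage and threshold voltage can be illustrated with textbook transistor physics. This Python sketch assumes an ideal MOSFET, whose subthreshold swing (the gate voltage needed to change the off-state current tenfold) is S = ln(10)·kT/q, so it scales directly with absolute temperature. Real devices fall short of this ideal, especially at cryogenic temperatures, and the 0.3 V threshold voltage is a hypothetical example value, not a figure from Rambus.

```python
import math

K_OVER_Q = 8.617333e-5  # Boltzmann constant / electron charge, in volts per kelvin


def kelvin_to_celsius(t_k: float) -> float:
    return t_k - 273.15


def ideal_swing_v(t_k: float) -> float:
    """Ideal subthreshold swing S = ln(10) * kT/q, in volts per decade of current."""
    return math.log(10) * K_OVER_Q * t_k


def off_current_ratio(v_th: float, t_k: float) -> float:
    """Idealised off-state current relative to the current at threshold: 10^(-V_th / S)."""
    return 10 ** (-v_th / ideal_swing_v(t_k))


def threshold_for_same_leakage(v_th_ref: float, t_ref_k: float, t_new_k: float) -> float:
    """Threshold voltage giving the same idealised off-current ratio at a new temperature."""
    return v_th_ref * t_new_k / t_ref_k


for t in (300.0, 77.0):
    print(f"{t:.0f} K ({kelvin_to_celsius(t):.0f} C): "
          f"S = {ideal_swing_v(t) * 1000:.1f} mV/decade, "
          f"off/threshold current at V_th = 0.3 V: {off_current_ratio(0.3, t):.1e}")

print(f"V_th at 77 K for room-temperature leakage: "
      f"{threshold_for_same_leakage(0.3, 300.0, 77.0):.3f} V")
```

Because the ideal swing is proportional to temperature, a transistor at 77K could in principle use roughly a quarter of its room-temperature threshold voltage for the same leakage, the kind of headroom that helps extend scaling.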

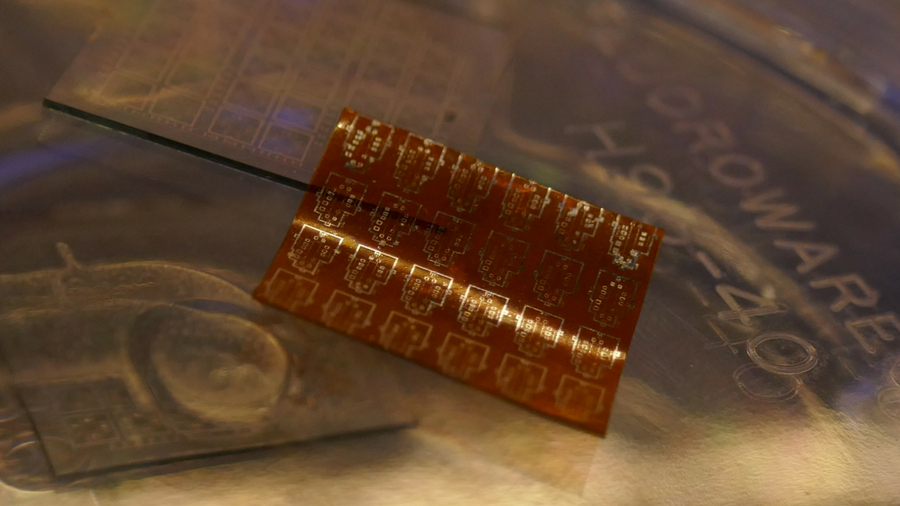

What are compound semiconductors?

They're next-generation semiconductors made from two or more elements, with properties that make them faster and more efficient than silicon. This is 'the big one': they’re already being used, and they will help make 5G and 6G phones possible.

“Compound semiconductors combine two or more elements from the periodic table, for example gallium and nitrogen, to form gallium nitride,” says Doran. He explains that these materials outperform silicon in the areas of speed, latency, light detection and emission, which will help make possible applications like 5G and autonomous vehicles.
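One reason compound semiconductors do better at light detection and emission is their bandgap, the energy an electron must gain before it can conduct. A photon whose energy matches the gap has wavelength λ = hc/E_g. This Python sketch uses approximate room-temperature textbook bandgaps (silicon about 1.12 eV, gallium arsenide about 1.42 eV, gallium nitride about 3.4 eV); the dictionary and function names are illustrative.

```python
HC_EV_NM = 1239.84  # Planck constant x speed of light, in electronvolt-nanometres

# Approximate room-temperature bandgaps in electronvolts (textbook values)
BANDGAPS_EV = {
    "Si (silicon)": 1.12,
    "GaAs (gallium arsenide)": 1.42,
    "GaN (gallium nitride)": 3.4,
}


def bandgap_wavelength_nm(bandgap_ev: float) -> float:
    """Wavelength of a photon whose energy equals the bandgap: lambda = hc / E_g.

    Longer wavelengths cannot be absorbed (the detection cutoff); band-edge
    emission, where the material emits efficiently at all, sits near this value.
    """
    return HC_EV_NM / bandgap_ev


for material, gap in BANDGAPS_EV.items():
    print(f"{material}: E_g = {gap} eV -> {bandgap_wavelength_nm(gap):.0f} nm")
```

Silicon's gap sits in the infrared, and because it is an indirect bandgap, silicon emits light very inefficiently. Gallium nitride's much wider, direct gap lands in the ultraviolet, which is why GaN-based materials underpin blue and UV LEDs.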

Likely used alongside conventional silicon chips rather than replacing them, compound semiconductors will find their way into 5G and 6G phones, helping to make them fast enough and small enough while still giving them decent battery life.

“The advent of compound semiconductors is a game-changer that has the potential to be as transformational as the internet has been for communications,” says Doran. That’s because compound semiconductors could be as much as 100 times faster than silicon, so they could power the explosion of devices expected as the IoT grows.

What is quantum computing?

Who needs the on-off states of a classical computer system when you can have the quantum world's superposition and entanglement phenomena? IBM, Google, Intel and others are in a race to create quantum computers with enormous processing power, way more than silicon transistors, using quantum bits, aka 'qubits'.
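Superposition and entanglement can be illustrated with a tiny classical simulation of two qubits. This Python sketch is a hypothetical toy, not how IBM, Google or Intel program their hardware: it stores the four amplitudes of a two-qubit state, applies a Hadamard gate to put the first qubit into superposition, then a CNOT gate to entangle it with the second.

```python
import math

# Amplitudes for basis states |00>, |01>, |10>, |11> (left digit = qubit 0)


def hadamard_q0(state):
    """Hadamard on qubit 0: |0x> -> (|0x> + |1x>)/sqrt2, |1x> -> (|0x> - |1x>)/sqrt2."""
    a00, a01, a10, a11 = state
    s = 1 / math.sqrt(2)
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot_q0_q1(state):
    """CNOT with qubit 0 as control: flips qubit 1 when qubit 0 is 1 (swaps |10> and |11>)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return [abs(a) ** 2 for a in state]


state = [1, 0, 0, 0]          # start in |00>
state = hadamard_q0(state)    # qubit 0 now in superposition
state = cnot_q0_q1(state)     # qubits now entangled
print([round(p, 3) for p in probabilities(state)])
```

The result is a Bell state: measuring gives 00 or 11 with equal probability and never 01 or 10, so the two qubits' outcomes are perfectly correlated even though each one on its own is random.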

The problem is that quantum physicists and computer architects have many breakthroughs to make before the potential of quantum computing can be realized. There's also a simple test that some in the quantum computing community think must be passed before a quantum computer can be said to exist: 'quantum supremacy'.

“It means simply showing that a quantum machine is better at a specific task than a conventional semiconductor processor on the path of Moore’s Law,” says Hampel. At the time of writing, achieving this had remained just out of reach.

What is Intel doing?

Since Intel was a pioneer of silicon chip manufacturing, it should come as no surprise that it is heavily invested in research into silicon-based quantum computing.

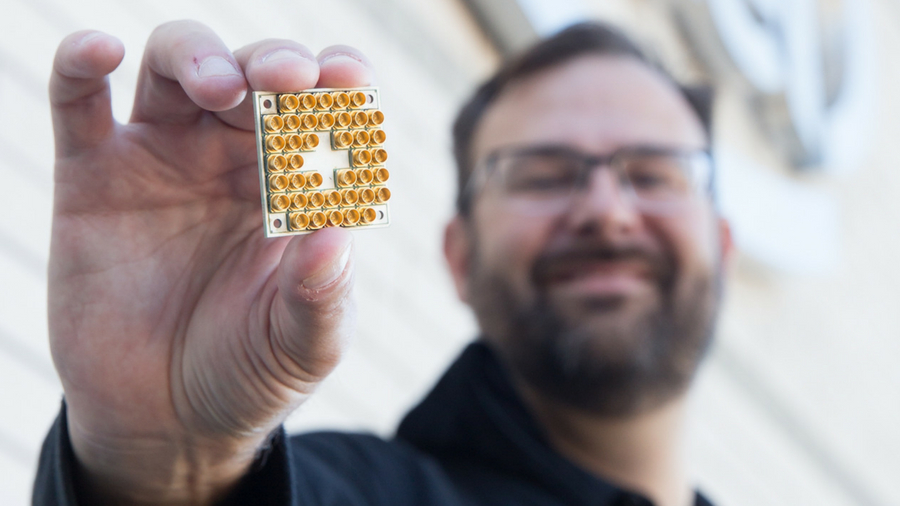

“As well as investing in scaling-up superconducting qubits that need to be stored at extremely low temperatures, Intel is also investigating an alternative method,” says Adrian Criddle, Vice President Sales and Marketing Group and UK General Manager at Intel. “The alternative architecture is based on ‘spin qubits’, which operate in silicon.”

A spin qubit uses microwave pulses to control the spin of a single electron on a silicon-based device, and Intel used them in what it called the 'world's smallest quantum chip'. Crucially, that chip uses silicon and existing commercial manufacturing methods.
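How a microwave pulse "controls" a spin can be sketched with the standard Rabi formula for a two-level system driven exactly on resonance: the probability that the spin has flipped after a pulse of length t is sin²(Ω_R·t/2), where Ω_R is the Rabi angular frequency. This idealised Python sketch ignores detuning and decoherence, and its 1 MHz Rabi frequency is a hypothetical value, not a parameter of Intel's chip.

```python
import math

RABI_FREQUENCY_HZ = 1e6  # hypothetical Rabi frequency, chosen only for illustration


def flip_probability(pulse_seconds: float, rabi_hz: float = RABI_FREQUENCY_HZ) -> float:
    """Probability that an on-resonance pulse flips the spin: sin^2(Omega_R * t / 2)."""
    omega = 2 * math.pi * rabi_hz  # Rabi angular frequency, in radians per second
    return math.sin(omega * pulse_seconds / 2) ** 2


pi_pulse = 1 / (2 * RABI_FREQUENCY_HZ)       # rotates the spin fully from 0 to 1
half_pi_pulse = 1 / (4 * RABI_FREQUENCY_HZ)  # leaves it in an equal superposition

print(f"pi pulse {pi_pulse * 1e9:.0f} ns -> P(flip) = {flip_probability(pi_pulse):.2f}")
print(f"pi/2 pulse {half_pi_pulse * 1e9:.0f} ns -> P(flip) = {flip_probability(half_pi_pulse):.2f}")
```

The timing is the control knob: a burst of the right length flips the spin outright, while one half as long leaves it in superposition, which is how a single timed microwave pulse can act as a quantum logic gate.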

“Spin qubits could overcome some of the challenges presented by the superconducting method as they are smaller in physical size, making them easier to scale, and they can operate at higher temperatures,” explains Criddle. “What’s more, the design of spin qubit processors resembles traditional silicon transistor technologies.”

However, Intel's spin qubit system still only works close to absolute zero; cold computing will go hand in hand with the development of quantum computers. Meanwhile, IBM has its Q, a 50-qubit processor, and the Google Quantum AI Lab has its 72-qubit Bristlecone processor.
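Qubit counts like these matter because simulating a quantum processor on conventional hardware gets exponentially harder as it grows. A brute-force state-vector simulation of n qubits stores 2^n complex amplitudes; this Python sketch assumes 16 bytes per amplitude (two 64-bit floats) and ignores the compression tricks real simulators use. The function names are illustrative.

```python
UNITS = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB", "ZiB", "YiB"]


def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a brute-force simulation: 2**n complex amplitudes."""
    return (2 ** n_qubits) * bytes_per_amplitude


def human_readable(n_bytes: int) -> str:
    """Format a byte count using binary (power-of-1024) prefixes."""
    value = float(n_bytes)
    unit = 0
    while value >= 1024 and unit < len(UNITS) - 1:
        value /= 1024
        unit += 1
    return f"{value:g} {UNITS[unit]}"


for n in (20, 50, 72):
    print(f"{n} qubits -> {human_readable(state_vector_bytes(n))}")
```

Each extra qubit doubles the memory, so a 50-qubit machine already needs about 16 PiB to simulate this way and a 72-qubit one about 64 ZiB, which is why processors of around 50 qubits were widely seen as candidates for demonstrating 'quantum supremacy'.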

What about graphene and carbon nanotubes?

These so-called miracle materials could one day replace silicon. “They have exciting electrical, mechanical and thermal properties that go much beyond what can be done with silicon-based devices,” says Doran. However, he warns that it may take many years before they're ready for prime time.

“Silicon-based devices have been through many decades of refinement and have developed along with associated manufacturing technology,” he says. “Graphene and carbon nanotubes are still at the beginning of this journey, and if they are to replace silicon in the future the manufacturing tools required to achieve this still need to be developed.”

The atomic era

Whatever the prospects for other materials, we're now in an atomic era. “Everyone is thinking about atoms,” says Harold. “Our progress has now reached the point where individual atoms count, (and) even storage is finding ways to work at the atomic level – IBM has demonstrated a possible route for storing data on a single atom.” Today, recording each 1 or 0, the binary digits that make up stored data, takes 100,000 atoms.

However, there is a problem. “Atoms are inherently less stable as a means of storing or transmitting information, which means more logic for things like error correction is needed,” adds Harold. So computer systems of the future will probably be layers of various technologies, each one there to counteract the disadvantage of another.
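Harold doesn't say which error-correction schemes atomic-scale systems would use, but a classic example shows what spending extra logic on reliability looks like. This Python sketch implements the Hamming(7,4) code, chosen purely for illustration: it adds three parity bits to every four data bits so that any single flipped bit can be located and corrected.

```python
def hamming74_encode(d):
    """Encode 4 data bits as [p1, p2, d1, p4, d2, d3, d4] (codeword positions 1..7)."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(c):
    """Correct up to one flipped bit, then return the 4 data bits."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_position = s1 + 2 * s2 + 4 * s4  # 0 means no error detected
    if error_position:
        c[error_position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


word = [1, 0, 1, 1]
sent = hamming74_encode(word)
received = list(sent)
received[4] ^= 1  # simulate a single bit flipped in storage
print(sent, received, hamming74_decode(received))
```

The cost is plain to see: seven stored bits for every four of data, the kind of overhead Harold has in mind when he says less stable storage needs more logic.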

So there's no one answer to extending the life of silicon into the next computing era. Compound semiconductors, quantum computing and cold computing are all likely to play a major role in research and development. It's likely that the future of computing will see a hierarchy of machines, but as of now, nobody knows what tomorrow's computers will look like.

“While Moore’s Law will end,” says Hampel, “the secular and lasting trend of exponential computing capacity will likely not.”

TechRadar's Next Up series is brought to you in association with Honor

Jamie is a freelance tech, travel and space journalist based in the UK. He’s been writing regularly for Techradar since it was launched in 2008 and also writes regularly for Forbes, The Telegraph, the South China Morning Post, Sky & Telescope and the Sky At Night magazine as well as other Future titles T3, Digital Camera World, All About Space and Space.com. He also edits two of his own websites, TravGear.com and WhenIsTheNextEclipse.com that reflect his obsession with travel gear and solar eclipse travel. He is the author of A Stargazing Program For Beginners (Springer, 2015),