Google blows our minds at I/O with live-translation glasses

Forget Google Glass. Google has instant translation specs


Yes, you heard that right. During Google I/O 2022, Google finally blew our minds with eyeglasses that literally translate what someone is saying to you, right before your eyes (provided you're wearing the glasses, of course).

The glasses look perfectly normal, appear to work without any assistance from a phone, and almost have us forgetting all about Google Glass.

In truth, not much is known about the Google AR translation glasses beyond the short video demonstration, which Google ran at the tail end of a nearly two-hour event: a Steve Jobs-style "one more thing" moment.

The glasses use augmented reality and artificial intelligence (and possibly embedded cameras and microphones) to see someone speaking to you, hear what they're saying, translate it, and display the translation live on translucent screens built into the eyeglass frames.

"Language is so fundamental to communicating with each other," explained Google CEO Sundar Pichai, but he noted that trying to follow someone who is speaking another language "can be a real challenge."

The prototype glasses (thankfully, Google did not call them "Google Glass 3") use Google's advances in translation and transcription to deliver translated words "in your line of sight," Pichai explained.

In the video, a young woman explains how her mother speaks Mandarin and she speaks English. Her mother understands her but can't respond to her in English.


The young woman dons the black, horn-rimmed glasses and immediately sees what the researcher is saying, transcribed in yellow English letters on a screen. Granted, we're seeing an overlay in the video, not what the woman actually sees.

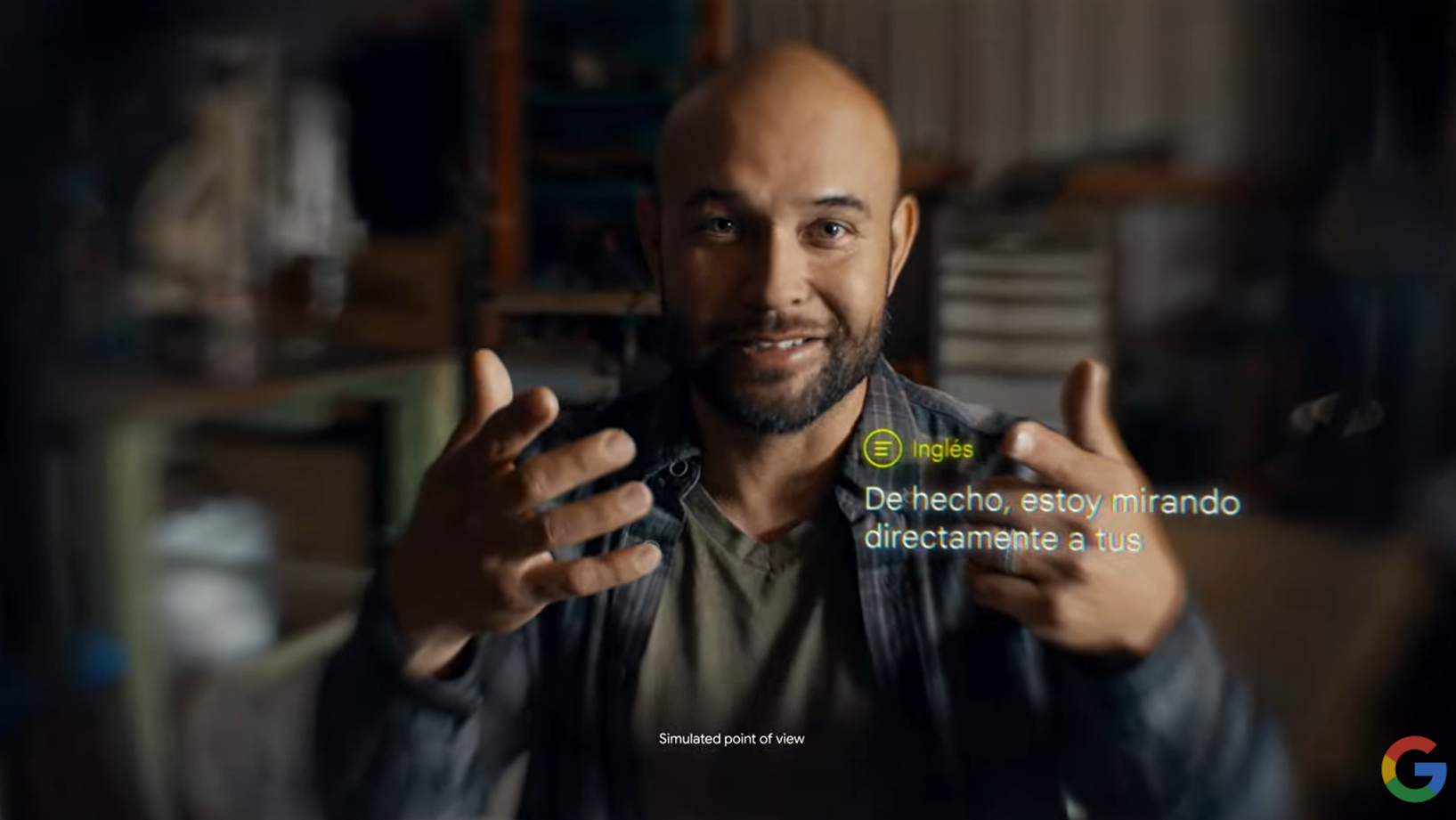

What is clear, though, is that no one has to glance upward at an oddly placed, tiny screen, as Google Glass wearers did. The prototype lets wearers look directly at the speaker, with the words overlaid on top of them. In one segment, we see a point-of-view image of the translation at work. Again, this is Google's illustration of the prototype's view; we won't know how the glasses really work until the prototype leaves the lab.

Google offered no time frame for completing and shipping this project. We don't even have a name. Still, seeing normal-looking augmented reality glasses that could solve a very real-world problem is exciting. They could, for instance, translate sign language for someone who doesn't know it, or caption speech for people who are deaf or hard of hearing.

As one researcher put it in the video, it's "kind of like subtitles for the world."

A 38-year industry veteran and award-winning journalist, Lance has covered technology since PCs were the size of suitcases and “on line” meant “waiting.” He’s a former Lifewire Editor-in-Chief, Mashable Editor-in-Chief, and, before that, Editor in Chief of PCMag.com and Senior Vice President of Content for Ziff Davis, Inc. He also wrote a popular, weekly tech column for Medium called The Upgrade.

Lance Ulanoff makes frequent appearances on national, international, and local news programs including Live with Kelly and Mark, the Today Show, Good Morning America, CNBC, CNN, and the BBC.