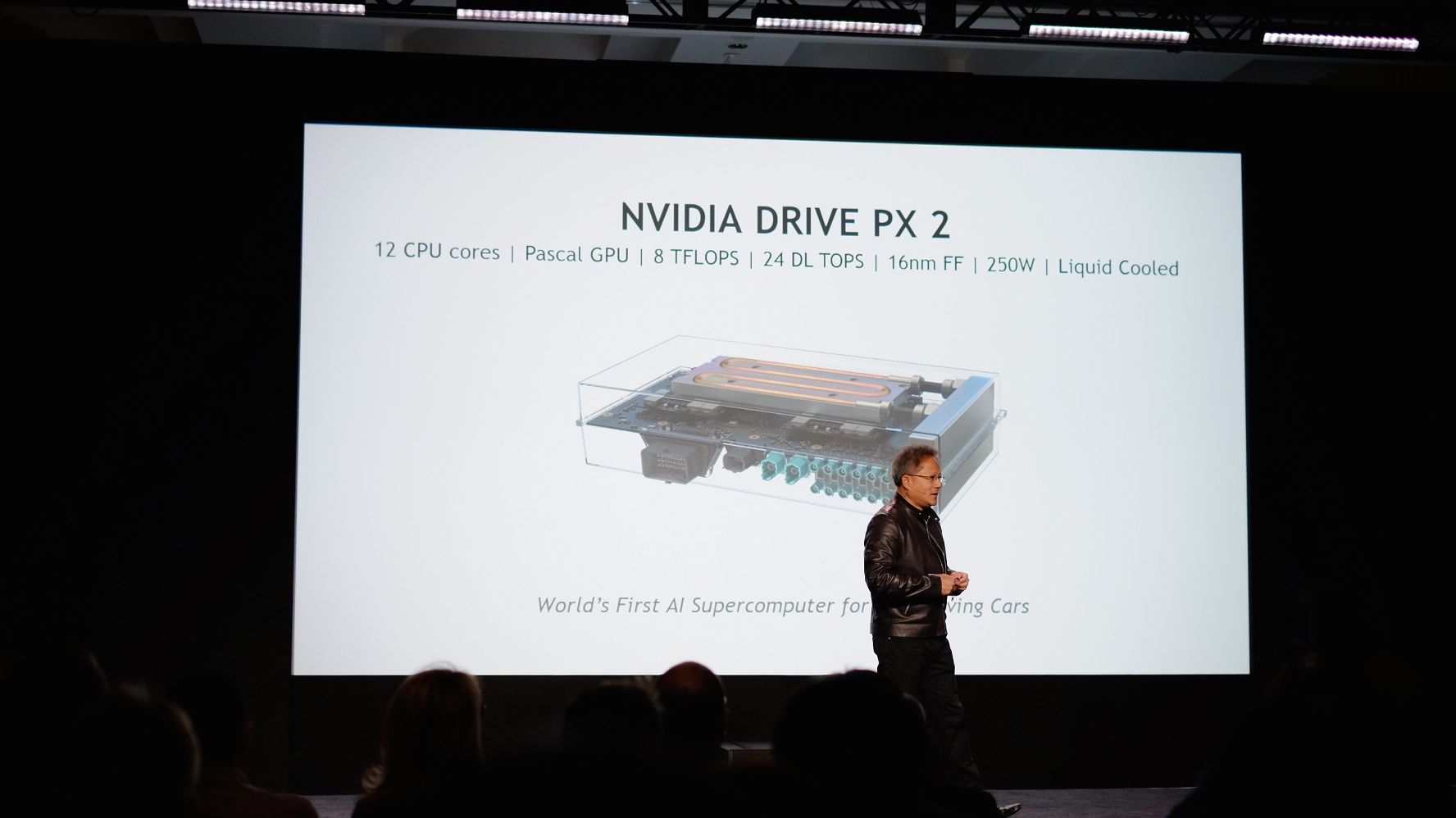

Nvidia's Drive PX 2 is a super-computer for self-driving cars

Driving into the future

In order to build self-driving cars, manufacturers need to incorporate three components: software, supercomputers, and deep learning.

Building a self-driving car is hard, said Nvidia CEO Jen-Hsun Huang during a CES 2016 keynote address. To help manufacturers build a self-driving car, Huang unveiled a new platform that delivers the computational ability of roughly 150 MacBook Pros.

Called the Nvidia Drive PX 2, the platform consists of 12 CPU cores built on a 16nm FinFET process, four chips with Pascal GPUs, and eight teraflops' worth of processing power. How powerful is that? Powerful enough, Huang said, that the Drive PX 2 requires water cooling to keep it from overheating, so that it can perform in any condition and any environment.

The first company to deliver cars with Drive PX 2 will be Volvo, and Nvidia says that more partners will follow.

A societal contribution

Nvidia claims that there are two visions for self-driving cars right now. The first is an auto-pilot that still requires human participation. The second, similar to Google's effort, is a fully autonomous vehicle that requires no human intervention.

Solving the self-driving car problem, Huang says, is a contribution to society. Autonomous cars will ease traffic because fewer cars will be on the road, and those that remain will be used more frequently. Fewer cars also means that parking lots can be eliminated and replaced with parks. Additionally, accidents can be prevented, and Huang says that Nvidia's goal is to create a platform that makes this vision possible.

The key to this is control. Nvidia wants the car to perceive complex, unpredictable real-world conditions, anticipate what will happen and make decisions. The goal is to create an end-to-end platform for deep learning, built around neural networks. Nvidia's deep learning platform is called Nvidia Digits.

The artificial intelligence will require constant learning and training. As the cars learn, information will be sent to the cloud, where the new information will be processed, refined and pushed to other cars.

The reference platform that Nvidia created is called Nvidia Drivenet. It has nine inception layers, which are nine deeply nested neural networks, comprising 37 million neurons. Nvidia is training the network to perceive objects in the world. The network has to recognize them in real time, and Huang pointed to existing recognition software as examples, such as Shazam, which can identify songs, and Google's and Microsoft's image recognition software.

Training

In a demo, Nvidia showed that its algorithm can identify cars, signs, streetlights and the road. With GPU acceleration, it takes a day to train the network, but without the graphics power, it would take a month of training.

Every time the car senses another car or person on the road, a yellow box is created to identify these objects.

The training data amounts to about 1.2 million images, manipulated to look like 120 million objects. GPU processing power allows for faster training, which translates to faster iterations and updates.
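Nvidia did not say how the images were manipulated. As a rough illustration only, the sketch below assumes two common tricks, a random horizontal flip and a random brightness shift, and shows that turning 1.2 million source images into 120 million samples works out to about 100 variants per image. The function names and the choice of transformations are hypothetical, not Nvidia's method.

```python
import random

SOURCE_IMAGES = 1_200_000      # figure quoted in the keynote
TARGET_SAMPLES = 120_000_000   # figure quoted in the keynote
VARIANTS_NEEDED = TARGET_SAMPLES // SOURCE_IMAGES  # 100 variants per image


def augment(image, rng):
    """Return one randomly altered copy of a grayscale image (list of pixel rows).

    Assumed transformations: horizontal flip and brightness shift.
    """
    out = [row[:] for row in image]
    if rng.random() < 0.5:
        out = [row[::-1] for row in out]       # mirror left to right
    shift = rng.randint(-20, 20)               # brighten or darken slightly
    return [[min(255, max(0, p + shift)) for p in row] for row in out]


def expand(images, variants_per_image, seed=0):
    """Yield `variants_per_image` altered copies of every source image."""
    rng = random.Random(seed)
    for image in images:
        for _ in range(variants_per_image):
            yield augment(image, rng)


# A tiny 2x3 stand-in image instead of real camera frames.
tiny_dataset = [[[0, 128, 255], [10, 20, 30]]]
samples = list(expand(tiny_dataset, 5))
print(len(samples), "samples from", len(tiny_dataset), "image")
print(VARIANTS_NEEDED, "variants per image would be needed at keynote scale")
```

Each variant keeps the same label as its source image, which is why this kind of expansion is cheap compared with collecting and labeling new footage.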

"Not one feature detection was coded by hand," Nvidia said in its demo. "This is a work in progress that's done very quickly."

Nvidia mentioned that it was working with several auto partners to build its data sets, including Daimler, Ford and Audi.

Nvidia says that the network can reach 50 frames per second on a high-performance desktop-class GPU.
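To put 50 frames per second in perspective, the short calculation below works out how much time that leaves for each frame and how far a car moves between frames. The 100 km/h speed is an assumed example, not a figure from the keynote.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process a single frame, in milliseconds."""
    return 1000.0 / fps


def distance_per_frame_m(speed_kmh: float, fps: float) -> float:
    """Distance the car covers between two consecutive frames, in meters."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s / fps


budget = frame_budget_ms(50)
gap = distance_per_frame_m(100, 50)  # 100 km/h is a hypothetical highway speed
print(f"{budget:.1f} ms per frame, {gap:.2f} m travelled per frame")
```

At that rate the system has 20 milliseconds per frame, and a car at the assumed speed travels a little over half a meter between one frame and the next.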

The deep neural network recognizes street signs more accurately than a human can, Huang said.

BMW is using the network not just for self-driving cars, but to inspect its manufacturing work on the production line. This means that the platform can be used for other applications beyond smarter autonomous cars.

Beyond objects

Beyond object recognition, the network can also be used to identify circumstances, such as roadways that don't have lines, or school buses that you'd need to stop behind. These are trainable things.

Recognizing circumstances will be the next step.

For its current setup, Nvidia has four LIDAR cameras, each capable of capturing 40,000 frames per second. Other configurations are available, and car-makers will be able to choose among them with different sensor options. The network will be trained by fusing the information captured by these different cameras and sensors.
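Nvidia did not describe how the fusion itself works. One standard textbook approach to combining several estimates of the same quantity is an inverse-variance weighted average, in which more precise sensors count for more. The sketch below applies it to three made-up distance readings; the sensor names, values and the `Reading` and `fuse` helpers are all hypothetical and are not drawn from the Drive PX 2 software.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor: str        # hypothetical sensor label
    distance_m: float  # estimated distance to the same object, in meters
    variance: float    # uncertainty of the estimate (smaller means more trusted)


def fuse(readings):
    """Combine readings with an inverse-variance weighted average.

    Returns the fused distance and the variance of the fused estimate.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    distance = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    return distance, 1.0 / total


# Made-up readings for a single object ahead of the car.
readings = [
    Reading("lidar", 20.0, 0.04),
    Reading("camera", 21.0, 1.0),
    Reading("radar", 20.4, 0.25),
]
distance, variance = fuse(readings)
print(f"fused distance: {distance:.2f} m (variance {variance:.3f})")
```

The fused estimate lands close to the most precise reading, and its variance is lower than that of any single sensor, which is the basic argument for combining sensors rather than relying on one.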

Infotainment to tie it together

All the information that's gathered by Drive PX 2 will be transferred to the infotainment system. Nvidia's infotainment system is called the Drive CX, which will help drivers see what the car is seeing, including other cars on the road, lanes on the road and streets drawn by HERE Maps.

Essentially, you see your drive on a GPS-style map in real time, overlaid with live information from the road.

"We don't need rear view mirrors anymore," Nvidia proudly proclaimed, because the information is in front of us.

Drive PX does the sensor fusion and computation, then sends the results to Drive CX, which displays them to the driver in real time.
