Is the outrage over AI energy use overblown? Here’s how it compares to your Netflix binges and PS5 sessions

You've seen the headlines: AI's insatiable thirst for energy is on par with that of a small country and threatens to overwhelm power grids while sucking up all the water.

But what's the real cost when you ask AI how to cook instant noodles instead of just reading the packet?

Until recently, estimating AI resource use per prompt required a healthy dose of speculation and insight from (potentially unreliable) sources like Sam Altman’s blog.

But now, in a first for the industry, Google has published official figures for the median Gemini text prompt. It's a solid starting point, but it does have a few limitations (like only measuring text-based outputs, with no figures for image or video generation) that you can explore further in our deeper dive on the subject.

The key number is 0.24 Wh per prompt, which makes it easy to compare the impact to other common uses of power.
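Watt-hours are not the unit most people think in, so a quick conversion helps. The sketch below turns the 0.24 Wh figure into joules and into the kilowatt-hours your power bill is priced in. The constant and function names are our own, chosen for illustration.

```python
# One median Gemini text prompt, per Google's published figure.
PROMPT_WH = 0.24

JOULES_PER_WH = 3600  # 1 W sustained for one hour = 3,600 J
WH_PER_KWH = 1000     # utility bills are priced in kilowatt-hours


def wh_to_joules(wh: float) -> float:
    """Convert watt-hours to joules."""
    return wh * JOULES_PER_WH


def wh_to_kwh(wh: float) -> float:
    """Convert watt-hours to kilowatt-hours, the unit on a power bill."""
    return wh / WH_PER_KWH


print(f"{wh_to_joules(PROMPT_WH):.0f} J")  # roughly 864 J
print(f"{wh_to_kwh(PROMPT_WH):.5f} kWh")   # a tiny sliver of one kWh
```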

So, is AI's power use killing the planet, or is your nightly Netflix binge actually the bigger threat?

The power of a prompt

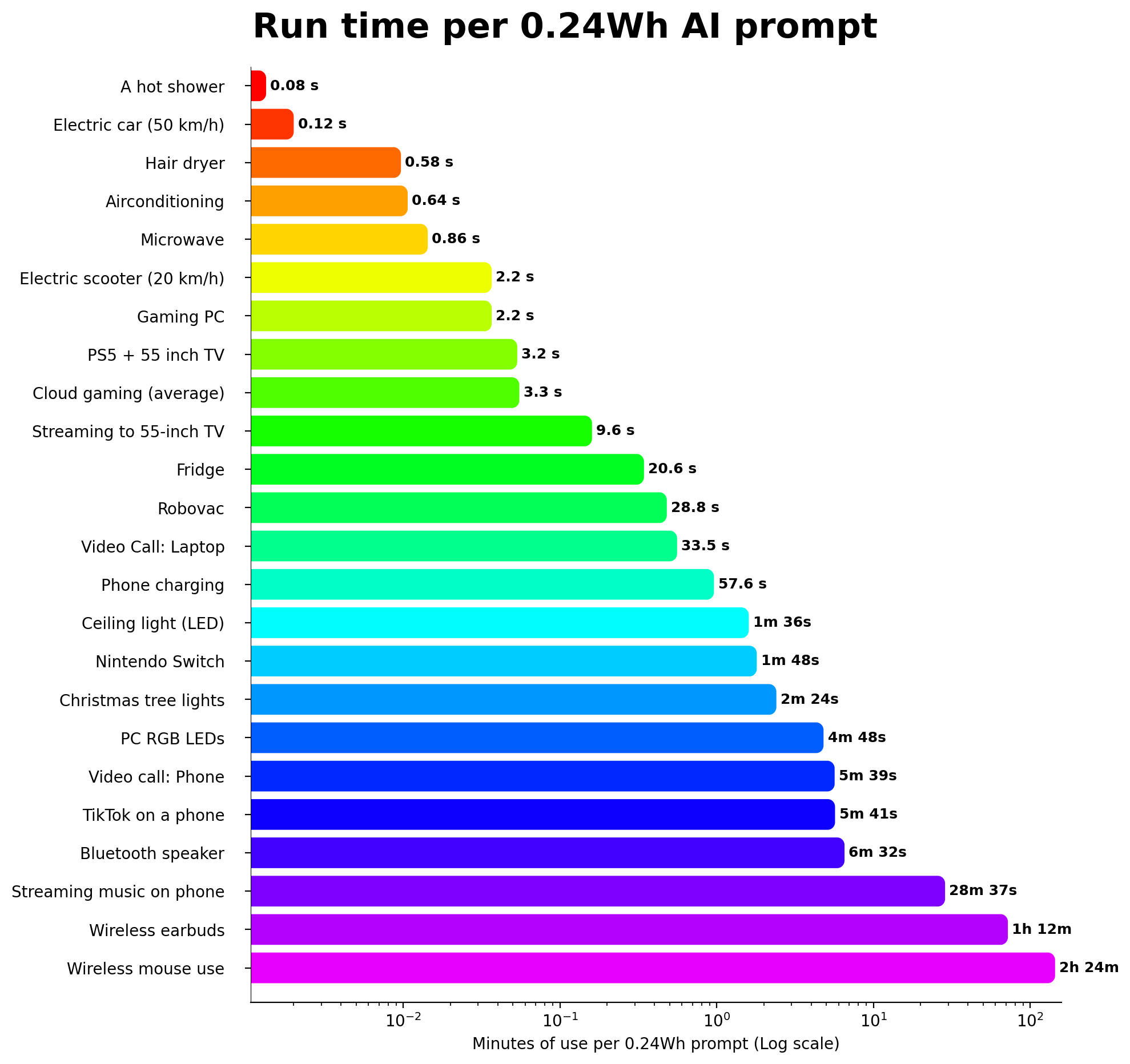

Let's see how a prompt stacks up against other contributors to your power bill. The chart shows how long the energy used by a single prompt would run other tasks. Then we'll compare that with the power used inside the data center alone.

Good news then – your AI chats are not single-handedly destroying the planet, and one prompt equates to just 1.5% of a charge for a shiny new iPhone 17 or under 10 seconds of streaming video to a 55-inch TV.
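The comparisons above boil down to two bits of arithmetic: runtime is energy divided by a device's power draw, and a battery share is energy divided by the energy of a full charge. Here is a minimal sketch. The 100 W draw for a 55-inch TV is a hypothetical figure we picked for illustration, and the iPhone number is simply what the 1.5% figure implies, not a published battery spec.

```python
PROMPT_WH = 0.24  # Google's median Gemini text prompt


def runtime_seconds(energy_wh: float, device_watts: float) -> float:
    """How long a device drawing `device_watts` runs on `energy_wh`."""
    return energy_wh * 3600 / device_watts


def implied_charge_wh(energy_wh: float, share_of_charge: float) -> float:
    """Energy per full charge implied if `energy_wh` is `share_of_charge` of it."""
    return energy_wh / share_of_charge


# Assumption: a 55-inch TV drawing 100 W while streaming (hypothetical).
tv_seconds = runtime_seconds(PROMPT_WH, 100)

# The 1.5% figure implies roughly 16 Wh drawn per full iPhone 17 charge.
iphone_wh = implied_charge_wh(PROMPT_WH, 0.015)

print(f"TV: {tv_seconds:.1f} s of streaming")
print(f"Implied energy per iPhone charge: {iphone_wh:.0f} Wh")
```

Any TV drawing more than about 86 W would land under the 10-second mark, so the exact wattage matters less than its rough size.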

In fact, when streaming video at home, 99.97% of the electricity is used by your TV, with the data center accounting for just 0.03%. Don't have a TV? The device's share is about 99.6% if you're watching on a laptop and 98.4% if you're watching on a phone.
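Another way to read those percentages is as a ratio: how many times more energy the screen in front of you uses than your share of the data center. The sketch below derives that ratio from the article's shares only; no extra measurements are involved, and the dictionary and function names are illustrative.

```python
# Share of end-to-end streaming electricity used by the viewing device (%),
# as quoted in the article; the remainder is the data center's share.
DEVICE_SHARE_PCT = {"TV": 99.97, "laptop": 99.6, "phone": 98.4}


def device_to_datacenter_ratio(device_pct: float) -> float:
    """How many times more energy the device uses than its data center share."""
    return device_pct / (100 - device_pct)


for device, pct in DEVICE_SHARE_PCT.items():
    print(f"{device}: {device_to_datacenter_ratio(pct):,.0f}x the data center share")
```

Even the phone, the most frugal screen here, uses around 60 times more energy than its slice of the data center.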

While Google's AI power figure covers only the data center, our broader 'end-to-end' comparisons above show that it's the end device, like a TV or phone, that uses most of the electricity. For tasks like streaming video, your personal share of the electricity used by the data center is tiny.

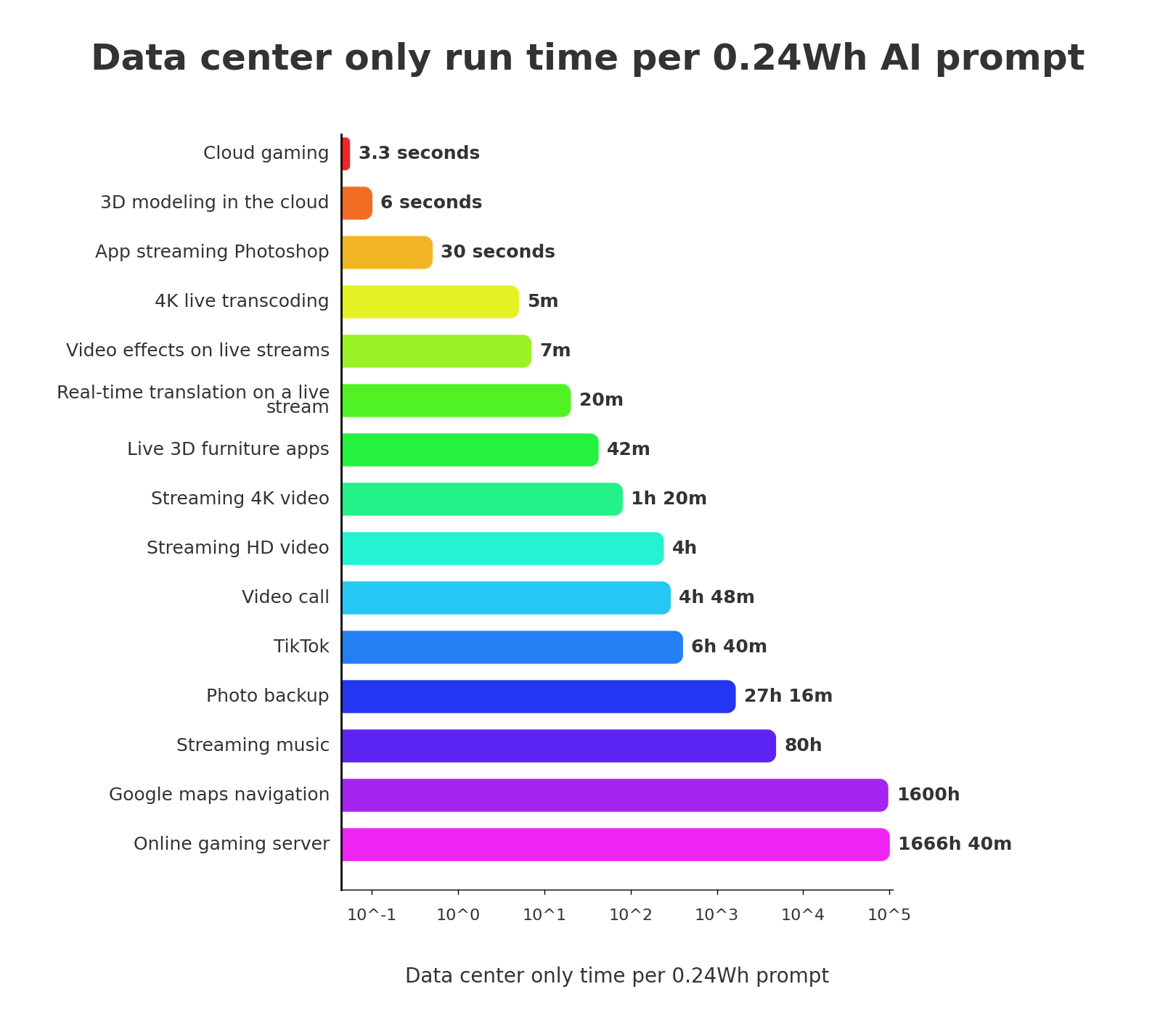

So, for an apples-to-apples comparison, it's fairer to ask how one 0.24 Wh AI prompt compares with the data center power use of those other activities.

Looking at the data center alone, this chart shows that AI prompts are far more power-hungry than tasks such as streaming.

Data centers don't generally use a lot of power per user unless they're performing compute-heavy tasks such as cloud gaming, where one 0.24 Wh prompt uses the same energy as just 3.3 seconds of play time.
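Working backwards from that equivalence gives the data center power draw it implies for one cloud gaming session. This is a back-of-the-envelope figure derived purely from the two numbers above, not a measured value, and the function name is ours.

```python
PROMPT_WH = 0.24             # Google's median Gemini text prompt
CLOUD_GAMING_SECONDS = 3.3   # seconds of play that match one prompt, per the chart


def implied_watts(energy_wh: float, seconds: float) -> float:
    """Average power (W) that would use `energy_wh` over `seconds`."""
    return energy_wh * 3600 / seconds


print(f"{implied_watts(PROMPT_WH, CLOUD_GAMING_SECONDS):.0f} W per session")
```

That works out to roughly 260 W of data center power per player, which is why cloud gaming sits at the top of the data center comparison.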

That's just one AI prompt, though – how do the numbers compare with total daily usage?

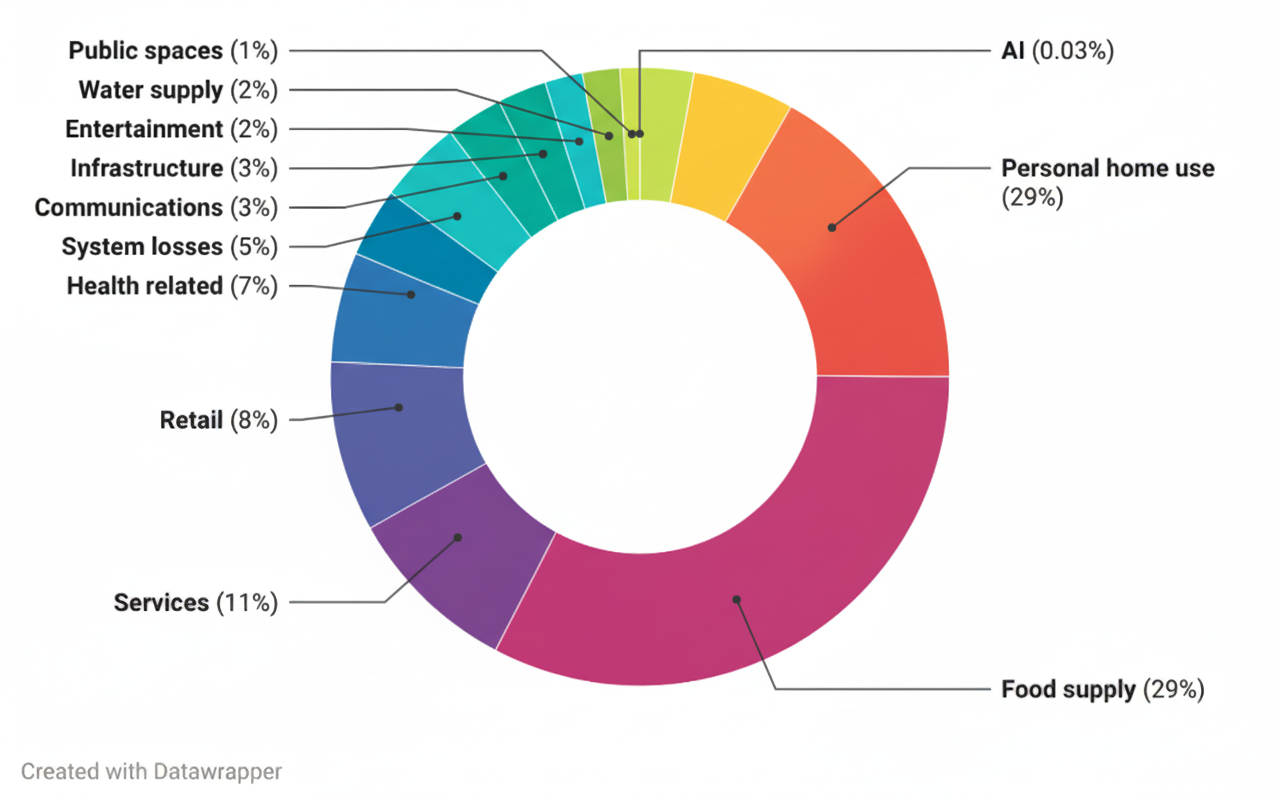

If we divide the number of daily prompts by the number of active ChatGPT users, we get between 10 and 20 prompts a day (an average of 3.6 Wh). That's about 0.03% of a user's overall daily electricity use, and less than what's wasted by the little glowing power indicator LEDs in your home.
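Here is that division as a minimal sketch. The 2.5 billion daily prompts figure comes from OpenAI, as cited below; the two user counts are hypothetical values we chose to bracket "hundreds of millions" of users, which is what produces the 10 to 20 range.

```python
PROMPT_WH = 0.24       # Google's median Gemini text prompt
DAILY_PROMPTS = 2.5e9  # OpenAI's daily prompt volume


def prompts_per_user(daily_prompts: float, users: float) -> float:
    """Average prompts per user per day."""
    return daily_prompts / users


def daily_wh(prompts: float) -> float:
    """Daily prompt energy in Wh for a given number of prompts."""
    return prompts * PROMPT_WH


# Hypothetical user counts (assumption), bracketing "hundreds of millions".
for users in (250e6, 125e6):
    n = prompts_per_user(DAILY_PROMPTS, users)
    print(f"{users / 1e6:.0f}M users: {n:.0f} prompts, {daily_wh(n):.1f} Wh a day")

print(f"Midpoint of 15 prompts: {daily_wh(15):.1f} Wh a day")
```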

In contrast, a heavy user might average 50 prompts a day, making up 0.15% of their total electricity use – about the same as your TV uses while it sits ‘switched off’ in standby mode.
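The standby comparison is easy to sanity-check. The sketch below assumes a TV standby draw of 0.5 W, which is our own assumption for a typical modern set rather than a figure from the article, and compares a full day of standby with 50 prompts.

```python
PROMPT_WH = 0.24
HEAVY_USER_PROMPTS = 50
TV_STANDBY_WATTS = 0.5  # assumption: typical standby draw of a modern TV


def daily_prompt_wh(prompts: int) -> float:
    """Energy (Wh) used by a day's worth of prompts."""
    return prompts * PROMPT_WH


def standby_wh(watts: float, hours: float = 24) -> float:
    """Energy (Wh) used by a device idling at `watts` for `hours`."""
    return watts * hours


print(f"Heavy user: {daily_prompt_wh(HEAVY_USER_PROMPTS):.1f} Wh")
print(f"TV on standby for 24 h: {standby_wh(TV_STANDBY_WATTS):.1f} Wh")
```

Under that assumption both come to about 12 Wh a day.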

As for the total electricity needed to keep society from falling apart, most of each person's share is consumed outside the home, on everything from food production to running street lights at night.

While the numbers show that a single prompt is a drop in the bucket, prompts can start to become problematic at scale. More and more people are using AI: OpenAI alone has hundreds of millions of active users and handles over 2.5 billion prompts a day, so those individual drops have the potential to turn into a flood.
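To get a feel for the size of that flood, here is a rough scaling sketch. It makes one big assumption, flagged in the code: that Google's median Gemini figure of 0.24 Wh also applies to OpenAI's prompts, which Google's data does not tell us. Treat the result as an order-of-magnitude illustration only.

```python
PROMPT_WH = 0.24       # Google's median Gemini text prompt
DAILY_PROMPTS = 2.5e9  # OpenAI's daily prompt volume

# Assumption: OpenAI prompts use about as much energy as the Gemini median.


def fleet_mwh_per_day(prompts: float, wh_per_prompt: float) -> float:
    """Total daily energy in MWh for a given prompt volume."""
    return prompts * wh_per_prompt / 1e6  # Wh -> MWh


daily = fleet_mwh_per_day(DAILY_PROMPTS, PROMPT_WH)
print(f"{daily:,.0f} MWh a day, about {daily * 365 / 1000:,.0f} GWh a year")
```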

Of course, the story of AI resource use is about more than just electrons, and Google estimates that each prompt uses 0.26 milliliters of water for cooling and produces 0.03 grams of carbon dioxide equivalent. More on this later, but that's about five tears shed for the loss of GPT-4o, and about as much CO2 as the bubbles in a single sip of soda.
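Because Google's three per-prompt figures scale linearly, a small record type is enough to total them for any number of prompts. The `Footprint` class below is our own illustrative structure, not something Google publishes; the example uses the midpoint of 15 prompts a day from the earlier estimate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Footprint:
    """Resource use for some number of prompts (illustrative structure)."""
    energy_wh: float
    water_ml: float
    co2e_g: float

    def scaled(self, prompts: float) -> "Footprint":
        """Footprint for `prompts` prompts, assuming linear scaling."""
        return Footprint(
            self.energy_wh * prompts,
            self.water_ml * prompts,
            self.co2e_g * prompts,
        )


# Google's per-prompt estimates for the median Gemini text prompt.
PER_PROMPT = Footprint(energy_wh=0.24, water_ml=0.26, co2e_g=0.03)

typical_day = PER_PROMPT.scaled(15)  # midpoint of 10 to 20 prompts a day
print(f"{typical_day.energy_wh:.1f} Wh, {typical_day.water_ml:.1f} mL water, "
      f"{typical_day.co2e_g:.2f} g CO2e")
```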

So what should you know about that bigger picture?

We'll cover how billions of AI prompts add up on a global scale next week, when we return for the second installment of our three-part Keep calm and count the kilowatts series.

Do you object to my numbers or calculations? School me in the comments!


How we use AI

Here at TechRadar, our coverage is author-driven. AI helps with searching sources, research, fact-checking, plus spelling and grammar suggestions. A human still checks every figure, source and word before anything goes live. Occasionally we use it for important work like adding dinosaurs to colleagues’ photos. For the full rundown, see our Future and AI page.

Lindsay is an Australian tech journalist who loves nothing more than rigorous product testing and benchmarking. He is especially passionate about portable computing, doing deep dives into the USB-C specification or getting hands-on with energy storage, from power banks to off-grid systems. In his spare time Lindsay is usually found tinkering with an endless array of projects or exploring the many waterways around Sydney.
