Sponsor Content Created With Xpeng

Your own private aircraft carrier? Chinese EV-maker Xpeng's annual 2025 showcase just gave us a tantalizing glimpse of the future

If the brand name Xpeng is unfamiliar, that may soon change. The major Chinese EV manufacturer has its sights set on becoming a leader in all kinds of future tech, from robots to electric aircraft and beyond.

In terms of cars, it sells the G6 and G9 SUVs, the P7 sedan and the X9 MPV, plus the MONA M03 in China. In the UK and Australia, Xpeng is well known for the recent launch of the G6, with the G9 coming soon. In the US, you can’t actually buy the company's EVs yet, so it's perhaps best known for flying cars and CES demos.

Xpeng AI Day 2025 – looking to the future

AI Day is Xpeng’s annual showcase, and the 2025 edition recently took place in Guangzhou. Themed around the concept of Emergence, it focused heavily on new capabilities for the company’s ‘physical AI’ systems – ones designed to interact with the physical world – across cars, robots and aircraft. The main headliner was VLA 2.0, Xpeng’s vision-to-action model that introduces new capabilities for efficiently turning camera vision into control output.

During the event, the brand announced the new VLA 2.0 model, teased a mass-production robotaxi, demoed a next-generation humanoid robot and showed off the upcoming tiltrotor hybrid flying car.

We also got loads of updates on previously announced products, plus new insight into how Xpeng plans to build an AI ecosystem with partners, and even make its latest AI model open source.

VLA 2.0: vision-to-action AI model

Xpeng’s VLA 2.0 model takes a vision-to-action approach to processing visual data, so instead of translating what its cameras see into text and then turning that into control output, the model goes straight from pixels to driving or manipulation.

That contrasts with the ‘vision language action’ path that is used, for example, in Google’s recent robotics work and autonomous driving research. Vision language action has an AI model that first describes a scene in natural language, then decides what to do from that description.

This approach is flexible and can fold in extra information, such as written instructions or web context, but it adds processing overhead, power draw and latency that current hardware and batteries struggle to accommodate in mobile uses like vehicles and robots.
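To make the contrast concrete, here is a toy Python sketch of the two pipeline shapes. Everything in it is hypothetical: the function names, the `Action` type and the brightness-based rules are stand-ins invented for illustration, and they say nothing about how Xpeng's or Google's models are actually built. The only point is the structure, two stages with text in between versus a single stage.

```python
from dataclasses import dataclass
from typing import List

Frame = List[List[float]]  # toy "image": rows of pixel brightness values in [0, 1]


@dataclass
class Action:
    steer: float  # hypothetical units: -1.0 (full left) to 1.0 (full right)
    brake: float  # hypothetical units: 0.0 (none) to 1.0 (full)


def mean_brightness(frame: Frame) -> float:
    """Average brightness over every pixel in the toy frame."""
    pixels = [p for row in frame for p in row]
    return sum(pixels) / len(pixels)


# Vision-language-action shape: pixels -> text -> action
def describe_scene(frame: Frame) -> str:
    """Stand-in for a model that captions the scene in natural language."""
    return "obstacle ahead" if mean_brightness(frame) < 0.5 else "road clear"


def plan_from_text(description: str) -> Action:
    """Stand-in for a model that picks an action from the description."""
    return Action(steer=0.0, brake=1.0 if "obstacle" in description else 0.0)


def vision_language_action(frame: Frame) -> Action:
    # Two stages, with an intermediate text description between them.
    return plan_from_text(describe_scene(frame))


# Vision-to-action shape: pixels -> action
def vision_to_action(frame: Frame) -> Action:
    """Stand-in for a single model mapping pixels straight to control."""
    return Action(steer=0.0, brake=1.0 if mean_brightness(frame) < 0.5 else 0.0)


dark_frame = [[0.1, 0.2], [0.3, 0.2]]
bright_frame = [[0.9, 0.8], [0.7, 0.9]]
print(vision_language_action(dark_frame), vision_to_action(dark_frame))
print(vision_language_action(bright_frame), vision_to_action(bright_frame))
```

In this toy both paths reach the same decision. The difference is that the first one has to produce and then re-read a text description on every frame, which is where the extra compute and delay come from.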

Vision to action is more akin to fast automatic reflexes, and Xpeng uses variants of the VLA 2.0 model across cars, aircraft and robots – each with its own specific training. For cars, Xpeng says it trained the AI on about 100 million videos, and runs a distilled model with a parameter count in the billions. In comparison, Xpeng has said rivals are using models with parameter counts in the tens of millions.

Making this system work reliably on the road still needs serious computing power. The effective on-device compute is quoted at 2,250 TOPS (tera operations per second, a common way to measure the performance of AI hardware) in consumer vehicles and up to 3,000 TOPS in the Robotaxi and the Iron robot.

In comparison, Tesla’s Hardware 3 platform is rated at 144 TOPS, and unofficial estimates for its newer Hardware 4 range from roughly 430 to 1,150 TOPS.
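For a rough sense of scale, the quoted figures can be turned into simple ratios. The short Python sketch below uses only the numbers mentioned above. The variable and function names are made up for illustration, and because the Hardware 4 values are unofficial estimates, the results are ballpark figures at best.

```python
# Figures quoted in the article, in TOPS. The Tesla Hardware 4 values are
# unofficial estimates, so the resulting ratios are rough at best.
XPENG_CONSUMER_TOPS = 2250
XPENG_ROBOTAXI_TOPS = 3000
TESLA_HW3_TOPS = 144
TESLA_HW4_ESTIMATE_TOPS = (430, 1150)  # (low estimate, high estimate)


def ratio(a: float, b: float) -> float:
    """How many times larger a is than b, rounded to one decimal place."""
    return round(a / b, 1)


consumer_vs_hw3 = ratio(XPENG_CONSUMER_TOPS, TESLA_HW3_TOPS)
robotaxi_vs_hw3 = ratio(XPENG_ROBOTAXI_TOPS, TESLA_HW3_TOPS)

# Against the HW4 estimates: the high estimate gives the smallest ratio,
# the low estimate gives the largest.
low_est, high_est = TESLA_HW4_ESTIMATE_TOPS
consumer_vs_hw4_min = ratio(XPENG_CONSUMER_TOPS, high_est)
consumer_vs_hw4_max = ratio(XPENG_CONSUMER_TOPS, low_est)

print(f"Consumer vehicles vs Hardware 3: about {consumer_vs_hw3}x")
print(f"Robotaxi/Iron vs Hardware 3: about {robotaxi_vs_hw3}x")
print(f"Consumer vehicles vs Hardware 4 estimates: "
      f"about {consumer_vs_hw4_min}x to {consumer_vs_hw4_max}x")
```

On these numbers, Xpeng's consumer-vehicle figure is roughly 15.6 times the Hardware 3 rating and somewhere between about 2 and 5 times the Hardware 4 estimates. TOPS figures from different vendors are not always measured the same way, so treat this as an order-of-magnitude comparison rather than a benchmark.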

Given the limitations of current energy and thermal budgets inside cars and mobile robots, vision-to-action is a pragmatic choice. It sacrifices some flexibility compared with language-in-the-loop systems, but can deliver big payoffs in efficiency, responsiveness and deployability at scale. Plus, Xpeng’s approach of throwing plenty of computing power at the problem shows it’s serious about making it work now, rather than trying to optimize for more efficient models or hardware in the future.

While we await the consumer rollout of VLA 2.0 and real-world feedback, Xpeng is confident enough in the design to position it as the foundation of a wider physical AI ecosystem that other manufacturers can build on, and it plans to open-source VLA 2.0 to business partners.

VLA 2.0 in cars

VLA 2.0 will run on Ultra vehicles – Xpeng’s 2025 top-spec trims with the full sensor suite and compute platform already built in. Using VLA 2.0, Ultra vehicles get what Xpeng calls XNGP – short for Navigation Guided Pilot. For now, in passenger cars, this is equivalent to SAE (Society of Automotive Engineers) Level 2 driver assistance, where the driver needs to stay attentive and supervise the AI control.

The system is designed to handle most driving situations, including tight streets, parked-car pinch points, complex merges, roundabouts and construction zones. Lane choice and gap selection are part of the training, so Xpeng says it can ease into traffic, negotiate with other drivers and recover if something unexpected happens. It also supports parking and low-speed maneuvers, using curb detection and path planning to handle tight spots and avoid bumps or scrapes.

VLA 2.0 will reach new models and existing Ultra cars through over-the-air updates, beginning with a small early-access test in China, then a wider release once safety goals are met. Xpeng highlights that its approach is all about low latency, predictable behavior and road skills rather than piling on more features. For example, VLA 2.0 is built to work with basic mapping (or none at all), to better handle road changes between map updates.

In the driver-assist landscape, Xpeng and Tesla are the main companies pushing to use camera vision by itself (rather than adding sensors like lidar) for consumer cars. Older Xpeng vehicles included additional sensors, so this is a bold approach with a lot of advantages but also risk, and we’ve seen Tesla struggle to take camera-only vision to higher levels of autonomy. Based on demo videos, though, Xpeng’s high-compute strategy looks very promising, and having the same system underpin multiple platforms should help it scale faster.

Xpeng also announced a partnership with Volkswagen, which has confirmed it plans to use Xpeng’s technology in its China-market vehicles. There’s also an existing Xpeng and VW partnership for two jointly developed vehicles that will be built and sold in China.

At AI Day 2025, Xpeng also announced three upcoming Robotaxi models that use the same VLA 2.0 model but operate in fully driverless mode within defined zones. This is equivalent to SAE Level 4, similar to what companies such as Waymo have achieved with their sensor-packed vehicles.

Xpeng says it’ll use the passenger-car learnings to fast-track validation of the Robotaxis, with a planned pilot program followed by a mass-production goal of 2026. Select passenger-car models are also expected to get a fully autonomous trim that uses the same Robotaxi hardware.

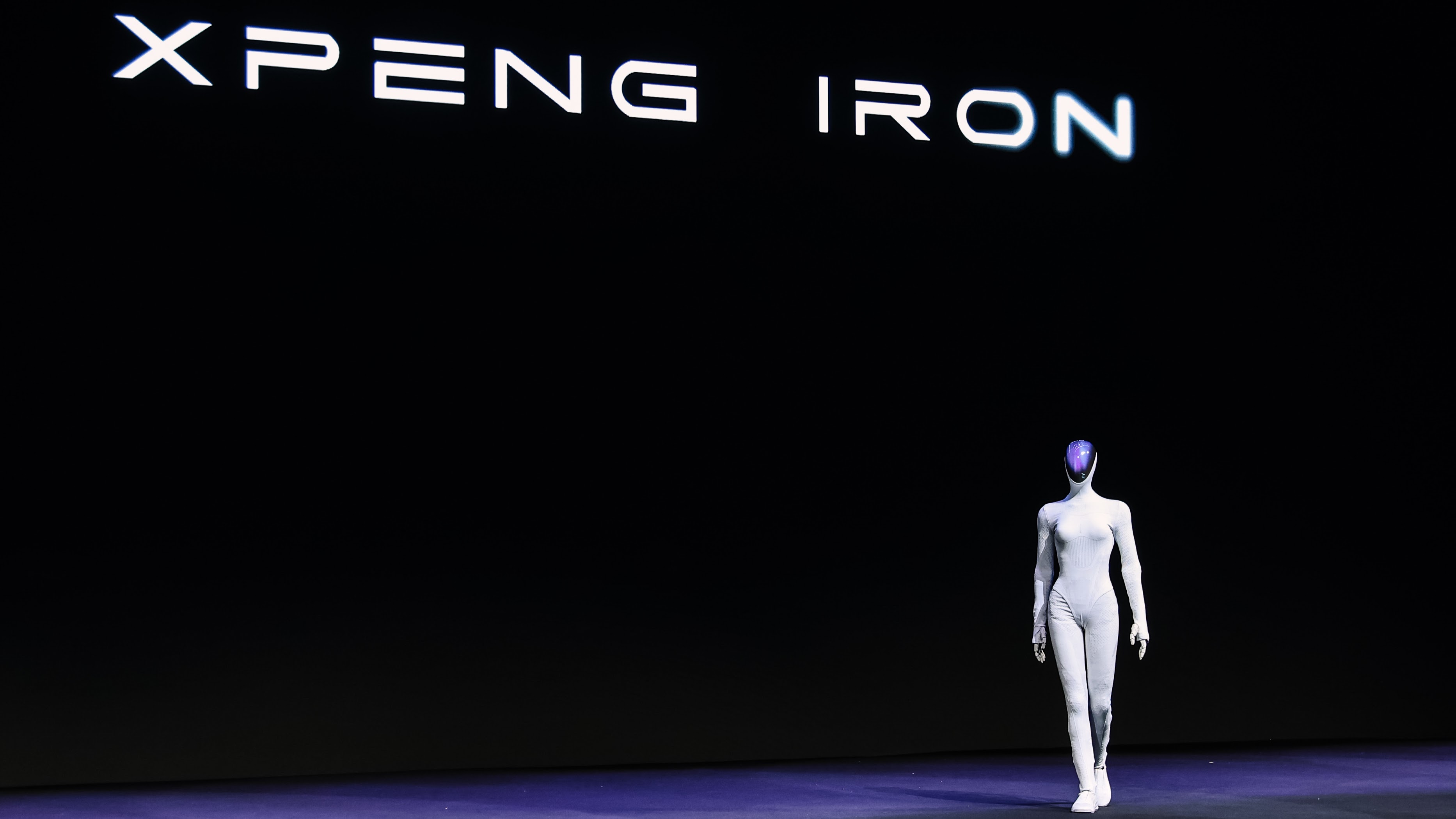

Iron humanoid robot

Iron, Xpeng’s next-gen humanoid robot, strongly leans into anthropomorphism and has features like a bionic spine, flexible skin and 82 degrees of freedom (82 independently controllable movement axes).

There’s also a big emphasis on hands: Xpeng highlights that Iron’s hands have more degrees of freedom than many rivals’, aiming for fine manipulation that makes the robot more useful in everyday tasks and a better fit for work currently done by humans, without retooling.

Iron uses three AI models in concert: VLA (vision-language-action), VLT (vision-language-task) and VLM (vision-language model) for conversation, walking and interaction. All computation runs on the robot itself thanks to powerful hardware delivering 3,000 TOPS of processing. This is the same core hardware setup as Xpeng’s vehicles, and again shows the company is willing to use a lot of computing resources to make it work.

Compared to competitor robots like Tesla Optimus and the Figure 01, Iron’s bet is on dexterity and on-device processing. This matters because object manipulation is a key bottleneck for robots doing real work today, and having everything run on the robot should both reduce latency and improve privacy.

Detailed specs of Iron haven’t been released yet, but Xpeng is targeting large-scale mass production by the end of 2026. By using the same underlying systems across robotics and vehicles, Xpeng has a big advantage in scaling mass production of core hardware and software. The company suggests initial use cases could include jobs like giving tours, performing retail tasks and back-office logistics.

Xpeng takes to the air

Xpeng announced a new tiltrotor aircraft called the A868 and gave us loads of new information on the Land Aircraft Carrier, a hybrid vehicle with a launchable copter, including the news that it's nearing mass production.

Watch the video below to see how this innovative system works. To read more, check out my Xpeng coverage on our sister publication, Tom's Guide.

Xpeng plans for the next 12 months

- Dec 2025: Pioneer pilot of supervised co-driving on Ultra cars.

- Q1 2026: Full VLA 2.0 rollout on Ultra models.

- 2026: Robotaxi city pilot program using only camera vision.

- 2026: Iron units on sale, mass production target late 2026.

- 2026: Land Aircraft Carrier mass production.

Want to know more? You can watch Xpeng's full AI Day 2025 presentation below: