The iPhone 15 doesn't need 200MP to beat the Galaxy S23, it just needs focus

I need less blur, not more pixels


I'm always looking for the best camera phone, but what is there left to improve? Our Galaxy S23 Ultra review has piqued my interest, though I’m skeptical about that 200MP sensor. Even if it works as Samsung claims, it won’t fix my biggest photo problem. When Apple’s next iPhone 15 Pro launches later this year, instead of megapixels, I want to see better focus. Reducing blur in my photos would be a bigger help than anything.

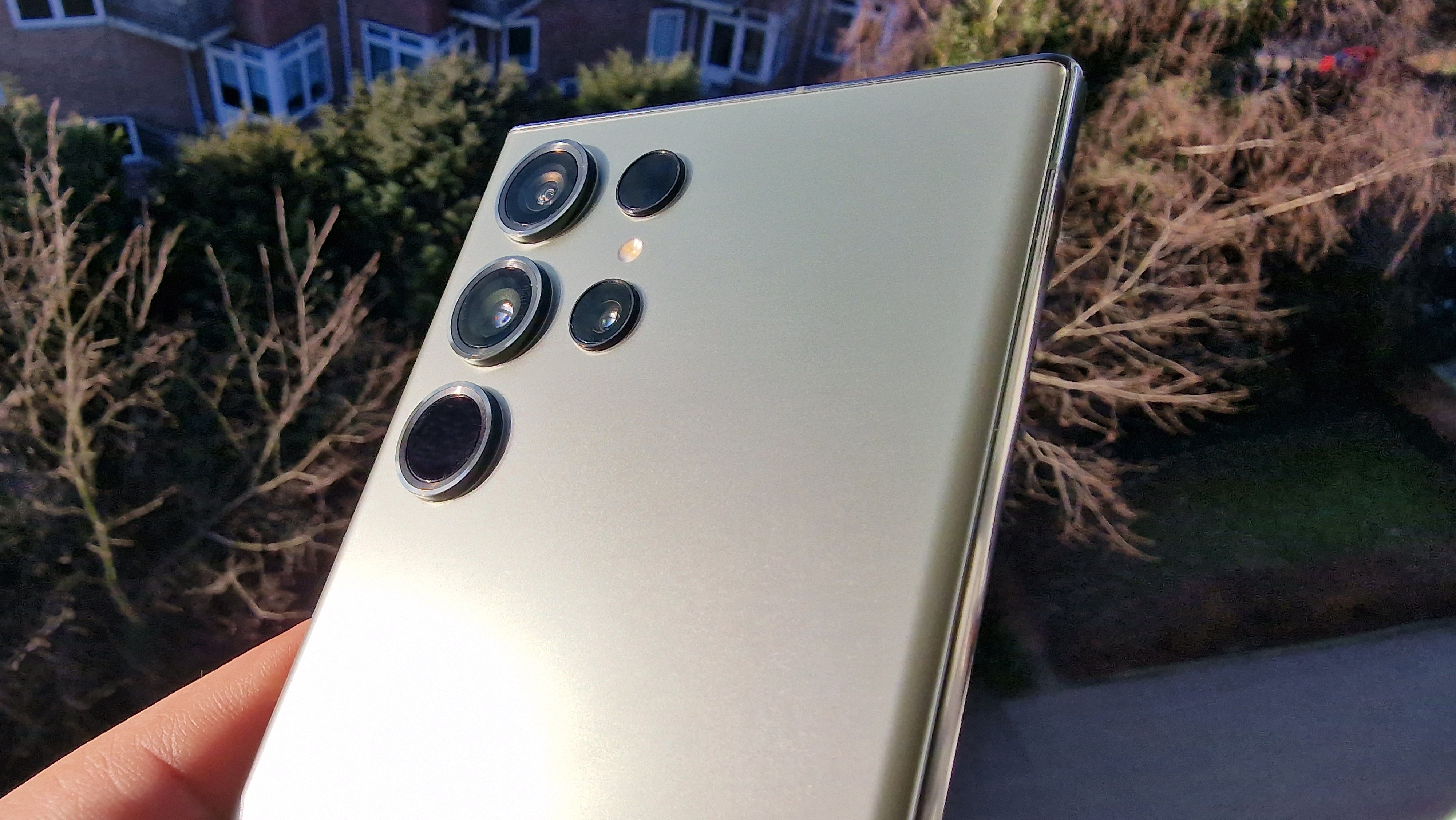

It may seem like Samsung has moved dramatically ahead of Apple in terms of sensor size. With 200 million pixels at its disposal, the Samsung sensor surely takes much better (bigger?) photographs than Apple’s best, the iPhone 14 Pro, which uses only a 48MP main camera.

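In fact, if you look at the specs on a site like GSMArena, you’ll see the camera sensors are almost the same size. Manufacturers use an archaic sizing system inherited from old video camera tubes, so you’ll find sensors labeled 1/1.3” or 1/1.28”. Don’t expect the math on that fraction to give you the real size, though: it describes the diameter of a camera tube with a comparable imaging area, and the sensor’s actual diagonal is only about two-thirds of that figure.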

Collecting photons of light like rainwater


Smartphones use a technique called pixel binning that combines the readings from neighboring sensor pixels into a single, averaged pixel. A 48MP sensor uses information from four sensor pixels to draw one pixel on your final 12MP image. Samsung’s big 200MP sensor uses up to 16 pixels for each final pixel.
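To make that averaging concrete, here is a toy Python sketch of pixel binning. It assumes a plain grid of brightness values and simple averaging; real sensors also have to respect their color filter layout, which this sketch ignores, and the patch values are made up for illustration.

```python
def bin_pixels(grid: list[list[float]], factor: int) -> list[list[float]]:
    """Average non-overlapping factor x factor blocks of a 2D grid into one value each."""
    rows, cols = len(grid), len(grid[0])
    if rows % factor or cols % factor:
        raise ValueError("grid dimensions must be divisible by factor")
    binned = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            block = [grid[r + i][c + j] for i in range(factor) for j in range(factor)]
            out_row.append(sum(block) / len(block))
        binned.append(out_row)
    return binned


def binned_megapixels(sensor_mp: float, pixels_per_output: int) -> float:
    """Output resolution when each output pixel draws on `pixels_per_output` sensor pixels."""
    return sensor_mp / pixels_per_output


# A hypothetical 4x4 patch of noisy brightness readings.
patch = [
    [10, 12, 50, 52],
    [11, 13, 51, 49],
    [90, 88, 20, 22],
    [91, 89, 21, 19],
]

print(bin_pixels(patch, 2))        # 4 sensor pixels per output: [[11.5, 50.5], [89.5, 20.5]]
print(bin_pixels(patch, 4))        # 16 sensor pixels per output: [[43.0]]
print(binned_megapixels(48, 4))    # 48MP sensor -> 12.0MP photo
print(binned_megapixels(200, 16))  # 200MP sensor -> 12.5MP photo
```

Note that 200 divided by 16 lands at 12.5MP rather than exactly 12MP; either way, both phones end up producing photos of about the same size.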

The sensor size is still the same. If you imagine the sensor as your lawn and its pixels as buckets collecting rainwater, Samsung is using 200 million tiny buckets, while Apple is using 48 million larger buckets. Both cover the whole lawn, and the lawn is smaller than a postage stamp.

The problem is that a camera sensor isn’t really like a bucket on a lawn collecting rain, unless it’s raining during an earthquake. Phones don’t sit still like buckets. Our subjects don’t line up with our phones, like rain falling to earth. Everything is moving and shaking and the camera is trying its best to keep up.

A phone camera often captures an image in about a hundredth of a second. You only need to remain perfectly still for that hundredth of a second for an image to be perfectly sharp. Somehow, I still fail at this all the time. My biggest problem with my photos is that they are simply blurry, probably because my hand was shaking.
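How much blur can a hundredth of a second of shaking actually cause? The rough Python estimate below treats hand shake as a steady rotation of the phone during the exposure. The focal length, binned pixel size, and shake speeds are hypothetical values chosen for illustration, not measurements from any phone.

```python
import math


def blur_in_pixels(shake_deg_per_s: float, exposure_s: float,
                   focal_length_mm: float, pixel_pitch_um: float) -> float:
    """Estimate how many pixels a point smears across when the phone
    rotates at a steady rate for the whole exposure."""
    angle_rad = math.radians(shake_deg_per_s * exposure_s)
    # The image shifts on the sensor by roughly focal length x tan(rotation angle).
    shift_um = focal_length_mm * 1000 * math.tan(angle_rad)
    return shift_um / pixel_pitch_um


# Hypothetical inputs: a ~7mm main-camera focal length and a 2.44µm binned
# pixel (two 1.22µm pixels wide). Neither figure comes from the article.
FOCAL_MM = 7.0
PITCH_UM = 2.44

for shake in (1.0, 3.0, 5.0):           # assumed hand-shake speeds, degrees per second
    for exposure in (1 / 100, 1 / 30):  # exposure times, seconds
        px = blur_in_pixels(shake, exposure, FOCAL_MM, PITCH_UM)
        print(f"{shake:.0f} deg/s, 1/{round(1 / exposure)} s: {px:.1f} px of blur")
```

Under these assumptions, blur grows in step with both the shake speed and the exposure time, which is why faster shooting and better stabilization both attack the same problem.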


More MPs doesn't mean bigger

Whether you buy an iPhone 14 Pro, a Galaxy S23 Ultra, or even a Google Pixel 7 Pro, your phone collects light on a sensor that measures around 12mm (just under half an inch) diagonally. That 200MP sensor on the new Galaxy S23 Ultra has more megapixels, so it must be bigger, right? Nope. In fact, the Apple sensor is the 1/1.28” size mentioned above, which is fractionally larger than Samsung’s 1/1.3” sensor.

The difference is that Apple divides its sensor into 48 million pixels that are each 1.22 micrometers (µm) wide, while each of Samsung’s 200 million sensor pixels is just 0.6µm wide. The overall sensor is still roughly the same size.
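You can check the "same size" claim by multiplying each sensor's pixel grid by its pixel size. This Python sketch assumes 4:3 full-resolution grids of 8064 x 6048 pixels for the iPhone and 16320 x 12240 pixels for the Galaxy; those grid dimensions are assumptions, while the pixel sizes are the figures above.

```python
import math


def sensor_size_mm(width_px: int, height_px: int, pitch_um: float) -> tuple[float, float, float]:
    """Return (width, height, diagonal) in millimeters for a grid of square pixels."""
    width = width_px * pitch_um / 1000
    height = height_px * pitch_um / 1000
    return width, height, math.hypot(width, height)


# Assumed full-resolution pixel grids; pixel pitches (µm) are from the article.
sensors = {
    "iPhone 14 Pro, 48MP": (8064, 6048, 1.22),
    "Galaxy S23 Ultra, 200MP": (16320, 12240, 0.6),
}

for name, (w_px, h_px, pitch) in sensors.items():
    w, h, d = sensor_size_mm(w_px, h_px, pitch)
    print(f"{name}: {w:.2f} x {h:.2f} mm, diagonal {d:.2f} mm, "
          f"pixel area {pitch ** 2:.2f} µm²")
```

Under those assumptions, both sensors come out at roughly 12.3mm diagonally (12.30mm for the iPhone versus 12.24mm for the Galaxy), while each Apple pixel has about four times the area of a Samsung pixel (about 1.49µm² versus 0.36µm²).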

What’s more, you don’t even get a 200MP image from the Samsung camera, nor do you get a 48MP image from the iPhone. The final image will likely be around 12MP by default. You can increase the resolution by tweaking the settings, but if you don’t mess with anything, 12MP is what you’ll get.

Phone makers settled on 12MP as a good size for average photos. At 12MP, your photo is less than 10MB, which is small enough to send as a text message attachment or quickly upload to your favorite social site. A 12MP photo prints nicely up to 11 x 14 inches.
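The print-size figure is simple arithmetic: divide the pixel dimensions by the print density. This sketch assumes a 4032 x 3024 pixel photo (a common 12MP shape), and the pixels-per-inch (ppi) values are chosen for illustration.

```python
def print_size_inches(width_px: int, height_px: int, ppi: int) -> tuple[float, float]:
    """Largest print, in inches, at a given pixels-per-inch density."""
    return width_px / ppi, height_px / ppi


# Assumption: a 12MP photo of 4032 x 3024 pixels.
for ppi in (300, 240):
    w, h = print_size_inches(4032, 3024, ppi)
    print(f"At {ppi} ppi: {w:.1f} x {h:.1f} inches")
```

At 300ppi, a density often used as a benchmark for photo prints, that is about 13.4 x 10.1 inches, so an 11 x 14 print works out to roughly 275ppi on its short side.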

What I really want is a wider lens

I don’t need more detail or more accurate color. This is the problem I need fixed: I need my photos to be clear. I need the camera to lock onto the subject I intended, and I need that subject to be in focus.

There are many ways a camera can make photos clearer, but only a few options for smartphones. A larger sensor could capture more light, but phone makers won’t use a much larger sensor.

A wider aperture lens could also gather more light. Huawei uses a very wide f/1.4 lens in its Mate 50 Pro device, and camera testing firm DxO says that phone is the #1 camera phone you can buy. Its limited availability means we haven’t reviewed one here at TechRadar.
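The light-gathering advantage of a wider aperture follows from the f-number: the light reaching each unit of sensor area scales with 1/N², where N is the f-number. The comparison apertures below, f/1.78 for the iPhone 14 Pro main camera and f/1.7 for the Galaxy S23 Ultra, are published specs assumed here rather than figures from this article.

```python
def relative_light(f_number: float, reference_f_number: float) -> float:
    """How much more light per unit of sensor area `f_number` admits than
    `reference_f_number`; exposure scales with 1 / f_number**2."""
    return (reference_f_number / f_number) ** 2


# Assumed main-camera apertures for comparison with an f/1.4 lens.
for name, n in (("iPhone 14 Pro", 1.78), ("Galaxy S23 Ultra", 1.7)):
    print(f"f/1.4 vs {name} f/{n}: {relative_light(1.4, n):.2f}x the light")
```

On sensors of similar size, an f/1.4 lens would collect roughly 1.6x the light of an f/1.78 lens and about 1.5x the light of an f/1.7 lens.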

Make the iPhone 15 camera faster, especially autofocus

If I can’t have a much larger sensor or a much wider lens on my camera phone, how can I take sharp photos? How can Apple give me an iPhone 15 Pro that takes sharper photos than the many-megapixel Galaxy S23 Ultra? There are a couple of other improvements that could make photos sharper.

One of those is faster shooting all around: faster camera launch, faster focus, and a faster shutter. This used to be an important spec for Samsung, which aimed to take a photo within a second of pressing the camera shortcut. Today, most Android phones offer a similar shortcut, double-pressing the Power button to launch the camera, but Apple doesn’t.

Instead of improving upon other specs, I’d love to see Apple improve its camera speed. Give us the fastest camera to open, with faster autofocus than anyone else. A big reason my photos are so blurry is that I barely got the shot at all.

The next time I’m at a stop light and a family of wild turkeys crosses my path, I want a phone that can grab the picture before the light turns green.

My hand shakes and blurs all the birds in the yard

After autofocus, I want to see Apple pull ahead in optical image stabilization (OIS). Apple already uses an OIS approach that differs from what most smartphone makers use, but I’d still love to see this technology improved before the iPhone gets more boastful camera specs.

The OIS feature in your camera uses a tiny motor paired with motion sensors. Using a gyroscope to detect how your phone is moving and magnetic sensors to track the position of the moving parts, it adjusts the camera with incredibly fast counter-movements. The difference between phone makers is where the motor is placed.
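The gyroscope-driven correction can be sketched as a simple loop: add up the phone's rotation, work out how far that tilt shifts the image on the sensor, and move the lens or sensor the opposite way. This is a simplified, single-axis Python illustration under idealized optics; the sample rate, focal length, wobble, and travel limit are hypothetical, and real OIS systems add closed-loop position control from the magnetic sensors plus filtering that this sketch leaves out.

```python
import math


def ois_corrections(gyro_deg_per_s: list[float], sample_rate_hz: float,
                    focal_length_mm: float, max_travel_um: float) -> list[float]:
    """Turn gyroscope rate samples into counter-shifts (in micrometers) for the
    moving lens or sensor, clamped to the actuator's travel range."""
    dt = 1.0 / sample_rate_hz
    angle_deg = 0.0
    shifts = []
    for rate in gyro_deg_per_s:
        angle_deg += rate * dt  # integrate angular rate into total tilt
        image_shift_um = focal_length_mm * 1000 * math.tan(math.radians(angle_deg))
        # Move the opposite way to cancel the shift, within the travel limit.
        correction = max(-max_travel_um, min(max_travel_um, -image_shift_um))
        shifts.append(correction)
    return shifts


# Hypothetical numbers: an 8 Hz, ±5 deg/s hand wobble sampled 1,000 times per
# second, a ~7mm focal length, and ±100µm of actuator travel.
wobble = [5 * math.sin(2 * math.pi * 8 * i / 1000) for i in range(10)]
for i, s in enumerate(ois_corrections(wobble, 1000, 7.0, 100.0)):
    print(f"sample {i}: move {s:+.2f} µm")
```

The clamp stands in for the physical limit on how far a tiny motor can move; once a shake exceeds that range, stabilization can no longer fully cancel it.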

Samsung and other phone makers put OIS in the camera lens, which can reportedly make adjustments up to 1,000 times per second. Professional DSLR lenses also pack stabilization right into the lens, so this is a fine solution.

Apple, on the other hand, uses sensor-shift OIS, moving the sensor instead of the lens itself. Apple’s solution can purportedly make adjustments up to 5,000 times per second. In theory, it could be the better solution, as it moves the motor closer to the center of movement: the sensor travels faster but needs to cover a shorter distance.
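The reported update rates are easier to compare against a single exposure. This Python sketch simply multiplies each reported rate by a 1/100-second exposure; the rates are the reported figures above, and the exposure time is the hundredth of a second mentioned earlier.

```python
def corrections_per_exposure(updates_per_s: int, exposure_s: float) -> float:
    """How many stabilization adjustments fit inside one exposure."""
    return updates_per_s * exposure_s


EXPOSURE_S = 1 / 100  # a hundredth-of-a-second shot
for name, rate in (("Lens-shift OIS (reported)", 1_000),
                   ("Apple sensor-shift OIS (purported)", 5_000)):
    gap_ms = 1000 / rate
    print(f"{name}: one correction every {gap_ms:.1f} ms, "
          f"about {corrections_per_exposure(rate, EXPOSURE_S):.0f} per exposure")
```

That works out to about 10 corrections per shot at 1,000 updates per second versus about 50 at 5,000, one every 1.0ms versus one every 0.2ms.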

In practice, Apple’s solution may be better than the competition, but not enough to make it a standout feature for the iPhone 14 Pro. I’d like to see that change for the iPhone 15 Pro and every iPhone 15 model. I’d love to see image stabilization become a key selling point for Apple’s camera phones.

Cameras are great, so fix me instead

We all know that our phones are capable of taking amazing photos. Every couple of years some phone maker trots out a National Geographic or Italian Vogue photographer to demonstrate how amazing the photos can look … if you’re a professional photographer.

I don’t need a better image sensor. If the image sensor is good enough to shoot advertising billboards and magazine covers, it’s good enough for my homemade pizza and my puppy play date. I need that sensor to work for me on every shot the way it works for the professionals.

I'm not good enough for the camera. I'm not steady enough for the stabilizer. I'm not focused enough for autofocus. I'm not as good as a professional. Instead of giving me a camera that will do even more for the pros, give me a camera that helps me fix what keeps me from being that great.

The first step is fixing the blur, and I think the best way to do that will be to focus (pun intended) on better autofocus and better image stabilization. Help me shoot faster, steadier photos, and I’m sure they’ll turn out great.

Starting more than 20 years ago at eTown.com, Philip Berne has written for Engadget, The Verge, PC Mag, Digital Trends, Slashgear, TechRadar, AndroidCentral, and was Editor-in-Chief of the sadly-defunct infoSync. Phil holds an entirely useful M.A. in Cultural Theory from Carnegie Mellon University. He sang in numerous college a cappella groups.

Phil did a stint at Samsung Mobile, leading reviews for the PR team and writing crisis communications until he left in 2017. He worked at an Apple Store near Boston, MA, at the height of iPod popularity. Phil is certified in Google AI Essentials. His passion is the democratizing power of mobile technology. Before AI came along he was totally sure the next big thing would be something we wear on our faces.